The automotive industry’s race to integrate artificial intelligence into vehicles advanced significantly with Ford Motor Company’s announcement of a new AI-powered digital assistant scheduled to debut in the company’s mobile application before expanding to vehicle integration in 2027. Developed in partnership with Microsoft and leveraging Google Cloud infrastructure, the assistant represents Ford’s bet that conversational AI can enhance the ownership experience and differentiate its vehicles in an increasingly competitive market.

Unlike previous generations of voice-activated systems that relied on pre-programmed commands and limited natural language understanding, Ford’s new assistant utilizes large language models capable of processing complex requests and engaging in contextual conversations. The system will tap into vehicle data, ownership information, service records, and Ford’s broader knowledge bases to provide personalized assistance beyond simple command execution.

Ford has been notably cautious in revealing specific capabilities and use cases for the assistant, focusing public announcements on the technical infrastructure and partnership arrangements rather than detailed feature descriptions. This measured approach likely reflects both competitive sensitivities and the reality that AI capabilities continue to evolve rapidly, so committing to specific functions too early could prove limiting.

The decision to launch the assistant first in Ford’s mobile application serves multiple strategic purposes. Mobile deployment allows the company to gather real-world usage data and refine the AI’s responses before integrating the technology into vehicle systems where user expectations for reliability and safety are higher. It also provides a testing ground for determining which types of interactions customers find most valuable, informing the eventual in-vehicle implementation.

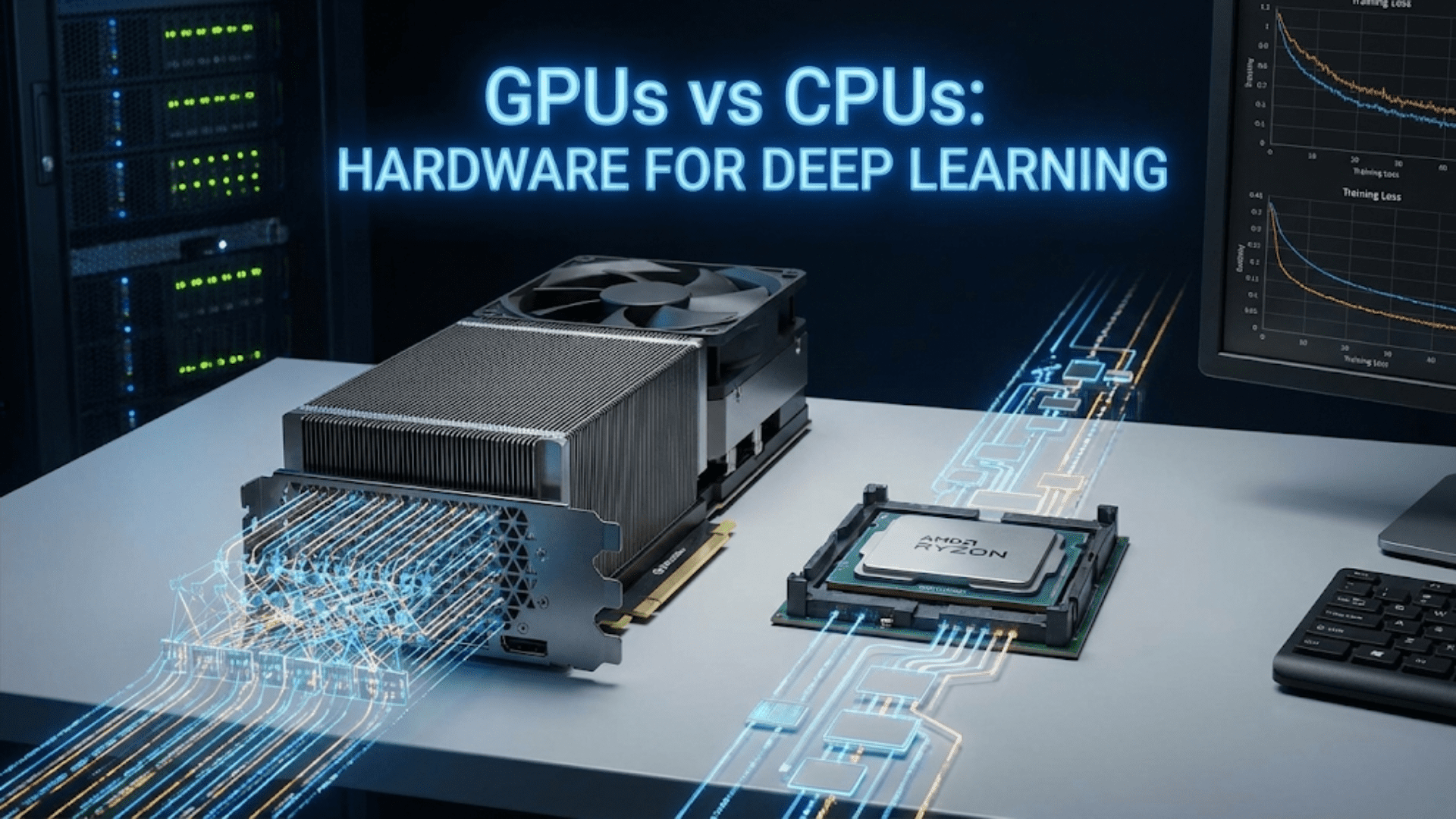

Google Cloud’s role in hosting the assistant addresses the substantial computational requirements of running large language models at scale. Processing natural language queries, generating contextually appropriate responses, and accessing distributed data sources require significant infrastructure that would be impractical to replicate within individual vehicles. Cloud-based processing also enables continuous model updates and improvements without requiring vehicle software upgrades.

However, cloud dependency introduces latency and connectivity requirements that could impact user experience. Processing requests through remote servers means delays between user queries and system responses, particularly in areas with limited cellular coverage. Ford will need to carefully manage expectations around response times and potentially implement hybrid architectures that handle some interactions locally while offloading more complex processing to the cloud.
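To make the hybrid idea concrete, here is a minimal sketch of how such routing might look. It is purely illustrative and not based on anything Ford has disclosed: the intent names, the `route` function, and the 1,500 ms latency budget are all assumptions chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical intents simple enough to answer on-device (illustrative only).
LOCAL_INTENTS = {"lock_doors", "fuel_level", "tire_pressure"}


@dataclass
class Request:
    intent: str  # coarse category assigned by an on-device classifier (assumed)
    text: str    # the user's original utterance


def route(request: Request, connected: bool, cloud_latency_ms: float,
          budget_ms: float = 1500.0) -> str:
    """Decide where a request is handled: 'local', 'cloud', or 'deferred'."""
    # Simple, latency-sensitive requests never leave the device.
    if request.intent in LOCAL_INTENTS:
        return "local"
    # Complex requests go to the cloud only if the link is up and fast enough.
    if connected and cloud_latency_ms <= budget_ms:
        return "cloud"
    # Otherwise queue the request or tell the user it will be answered later.
    return "deferred"
```

Under these assumptions, a fuel-level query would be answered locally even with no signal, while a service-planning question would go to the cloud on a good connection and be deferred on a poor one.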

Microsoft’s involvement likely encompasses both technical contributions and commercial arrangements. The company has invested heavily in AI through its partnership with OpenAI and integration of AI capabilities across its product portfolio. Bringing this expertise to automotive applications represents a natural extension of Microsoft’s enterprise AI strategy, though specific technologies and models being used have not been publicly disclosed.

The timing of the announcement, coming during CES when multiple automotive companies showcased AI integration plans, reflects industry-wide recognition that in-vehicle AI assistants represent the next frontier of automotive user experience. However, the crowded field also highlights the challenge Ford faces in differentiating its implementation from competitors ranging from established automakers to newer entrants such as Tesla and Chinese manufacturers that have made AI integration a central selling point.

Privacy and data security concerns loom large over any automotive AI system that processes personal information and vehicle data through cloud infrastructure. Ford must navigate consumer concerns about what data is collected, how it’s used, where it’s stored, and who has access to it. The company has not detailed its privacy framework for the assistant, though regulatory requirements in markets like California and Europe will impose baseline protections.

The broader automotive industry has struggled to create voice assistants that customers genuinely prefer over their smartphones’ native AI systems. Many drivers default to using Siri, Google Assistant, or Alexa through their phones rather than learning manufacturer-specific interfaces. Ford’s challenge will be demonstrating that its assistant provides unique value tied to vehicle ownership that cannot be replicated by general-purpose alternatives.

Potential use cases that could justify a dedicated automotive assistant include integrated vehicle service scheduling based on predicted maintenance needs, personalized driving tips that account for individual vehicle behavior, seamless integration with Ford’s charging infrastructure for electric vehicles, and contextual assistance that understands both vehicle state and ownership history when resolving issues.

The phased rollout stretching from mobile launch in 2026 to vehicle integration in 2027 provides time for refinement, though it also means customers will wait until at least 2027 to experience the full vision. By then, competitor capabilities will likely have advanced significantly, raising the bar for what constitutes a compelling automotive AI assistant.

Ford’s approach contrasts with companies like Tesla that have integrated AI-powered features more aggressively but have also faced criticism when capabilities fall short of promotional messaging. Ford’s more measured rollout may avoid early missteps but risks appearing conservative in a technology domain where rapid iteration has become the norm.