Introduction

The journey to becoming a self-taught data scientist is both challenging and incredibly rewarding. Unlike many technical fields where formal education remains the primary gateway, data science has become remarkably accessible to self-directed learners. The combination of high-quality free resources, active online communities, and the practical, project-based nature of the field means that motivated individuals can genuinely teach themselves the skills needed to launch a data science career.

However, accessible does not mean easy. The very abundance of resources that makes self-teaching possible also creates overwhelming choice paralysis. When you search for data science tutorials, you encounter thousands of courses, books, YouTube channels, and blog posts, each claiming to be the best starting point. Without structure, many aspiring data scientists spend months bouncing between resources, never developing deep competence in any area. Others dive too quickly into advanced topics like deep learning before mastering fundamentals, creating a shaky knowledge foundation that limits their progress.

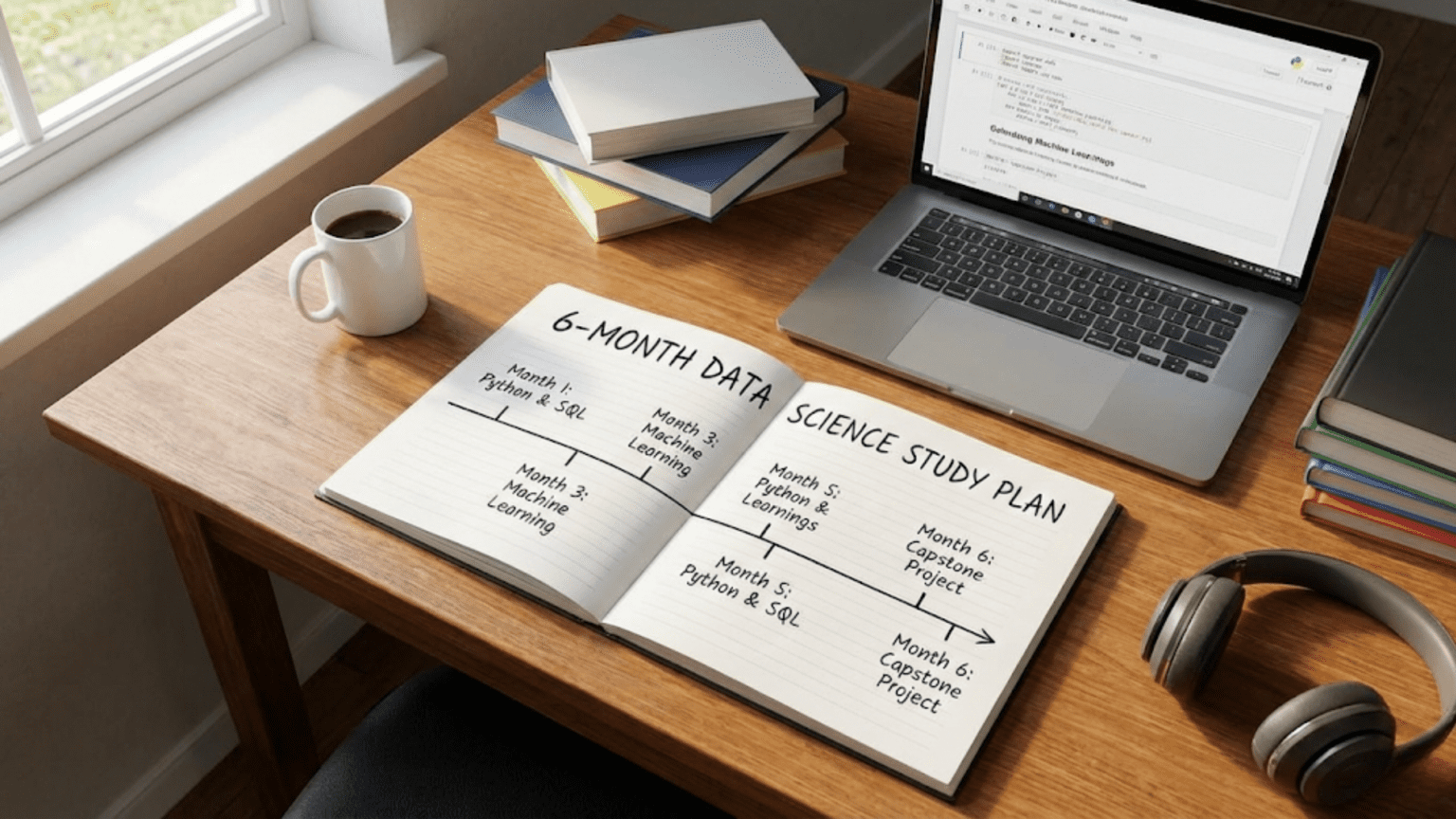

This comprehensive six-month study plan provides the structure and progression that self-taught data scientists need. It’s designed for someone who can dedicate fifteen to twenty hours per week to focused study, which translates to roughly two to three hours on weekdays and additional time on weekends. If you have more or less time available, you can adjust the pace accordingly, but the sequence of topics remains important regardless of speed.

The plan emphasizes building genuine competence over superficial familiarity. Rather than rushing through dozens of courses to claim you’ve “learned” various tools, you’ll spend time practicing fundamentals until they become intuitive. You’ll write code daily, work through problems that challenge you, and build projects that demonstrate real capability. By the end of six months, you won’t know everything about data science, but you’ll have solid foundations across programming, statistics, and machine learning, along with a portfolio of projects that prove your skills to potential employers or clients.

Before diving into the month-by-month plan, let’s establish some principles that will guide your self-teaching journey and maximize your chances of success.

Principles for Successful Self-Teaching

Self-teaching data science differs fundamentally from passive learning. You cannot simply watch videos or read tutorials and expect skills to develop. Active engagement with material, through writing code, solving problems, and building projects, drives real learning. Understanding these core principles helps you avoid common pitfalls that derail many self-taught aspirants.

The principle of active learning over passive consumption cannot be overstated. Watching a tutorial on Python data structures might feel productive, but the knowledge remains superficial until you’ve written hundreds of lines of code yourself, encountered errors, debugged them, and gradually internalized the patterns. Research consistently shows that active recall, where you attempt to solve problems from memory rather than following along with solutions, creates much stronger learning. This means that after completing a tutorial section, you should close it and try to solve similar problems on your own before moving forward.

Consistency matters far more than intensity. Studying two to three hours every day for six months produces far better results than occasional eight-hour marathon sessions. Daily engagement keeps concepts fresh in your mind and gives your brain time to consolidate what you learned while you sleep. The compounding effect of consistent practice over months creates expertise that intensive but sporadic effort cannot match. Build a realistic daily schedule that you can maintain, even on busy days, rather than an ambitious plan you’ll abandon after three weeks.

The concept of progressive overload, borrowed from physical training, applies perfectly to learning data science. You need to constantly work at the edge of your current ability, tackling problems that challenge you without being impossibly difficult. If exercises feel too easy, you’re not learning efficiently. If they’re so hard you can’t make progress, you’ll become discouraged. The sweet spot lies in problems that you can solve with effort, thinking, and perhaps some strategic Googling. This gradual increase in difficulty builds competence systematically.

Project-based learning provides context and motivation that abstract exercises cannot. While you need to learn syntax and concepts through tutorials and exercises, applying those concepts to projects that interest you makes learning meaningful. Projects also create artifacts you can showcase to employers, turning your learning into tangible proof of capability. Throughout this study plan, you’ll balance foundational learning with project work that integrates and applies what you’re studying.

Community engagement, even as a self-learner, provides accountability, help when you’re stuck, and exposure to different approaches. Join forums like Reddit’s data science communities, participate in Kaggle discussions, attend meetups in person or online, and don’t hesitate to ask questions on Stack Overflow when genuinely stuck. Helping others once you’ve learned something also reinforces your own understanding, because teaching forces you to explain it clearly.

Finally, embrace the discomfort of not knowing. The gap between where you are and where you want to be can feel overwhelming, especially in the early months when everything seems foreign. Every expert data scientist experienced this same discomfort during their learning journey. The difference between those who succeed and those who quit often comes down to tolerance for confusion and persistence through frustration. Expect to feel lost sometimes, accept it as part of learning, and trust that continued effort gradually transforms confusion into clarity.

Months One and Two: Python Programming and Data Manipulation Fundamentals

The first two months establish your programming foundation. Python serves as the primary language for data science because of its readability, extensive libraries, and active community. Even if you’ve programmed in other languages, spending focused time on Python’s specific features and data science ecosystem pays dividends throughout your career.

During weeks one through four, you’ll immerse yourself in Python basics. Start with variables, data types, and basic operations. Understand integers, floats, strings, and booleans deeply before moving forward. Practice writing simple programs that take user input, perform calculations, and print results. This seems elementary, but building comfort with syntax and the pattern of writing code, running it, encountering errors, and fixing them establishes crucial habits.

Progress to data structures including lists, tuples, dictionaries, and sets. Really understand when to use each structure and how to manipulate them. Write programs that store data in lists, iterate through them with for loops, filter them with conditionals, and organize them in dictionaries. The goal is making these structures feel natural, so you can think about problems logically rather than struggling with syntax.
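As a small illustration of the structures described above, the sketch below stores made-up book records in a list of tuples, filters them with a loop and a conditional, and organizes them in a dictionary and a set. The data and variable names are invented for the example.

```python
# Made-up reading log: each record is a (title, genre, pages) tuple.
books = [
    ("Dune", "sci-fi", 412),
    ("Emma", "classic", 474),
    ("Neuromancer", "sci-fi", 271),
    ("Persuasion", "classic", 249),
]

# Filter with a conditional inside a for loop.
long_books = []
for title, genre, pages in books:
    if pages > 300:
        long_books.append(title)

# Organize page counts by genre in a dictionary of lists.
pages_by_genre = {}
for title, genre, pages in books:
    pages_by_genre.setdefault(genre, []).append(pages)

# A set keeps only the distinct genres.
genres = {genre for _, genre, _ in books}

print(long_books)       # ['Dune', 'Emma']
print(pages_by_genre)   # {'sci-fi': [412, 271], 'classic': [474, 249]}
print(sorted(genres))   # ['classic', 'sci-fi']
```

The tuple suits a fixed record, the list suits an ordered collection you iterate over, the dictionary suits lookup by key, and the set suits membership and uniqueness.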

Functions represent a critical threshold in programming competence. Spend significant time understanding how to define functions, pass arguments, return values, and reason about variable scope. Write many small functions that perform specific tasks, then combine them to solve larger problems. This modular thinking underlies all good programming and becomes essential when working on data science projects.
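One way to practice this modular style is to build a slightly larger calculation out of small helpers. The functions below are illustrative names chosen for this sketch, not part of any library.

```python
def mean(values):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    return sum(values) / len(values)


def deviations(values):
    """Return each value's distance from the mean."""
    center = mean(values)  # `center` exists only inside this function
    return [v - center for v in values]


def variance(values):
    """Population variance, composed from the two helpers above."""
    return mean([d ** 2 for d in deviations(values)])


scores = [2, 4, 4, 4, 5, 5, 7, 9]
print(mean(scores))      # 5.0
print(variance(scores))  # 4.0
```

Each function takes arguments, returns a value, and keeps its working variables local, so the pieces can be tested and reused independently.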

By week five, transition to NumPy, the foundational library for numerical computing in Python. NumPy arrays differ significantly from Python lists, and understanding these differences matters for efficient data processing. Learn array creation, indexing, slicing, and broadcasting. Practice manipulating arrays with different shapes and dimensions. Work through exercises that require reshaping, stacking, and splitting arrays. The multidimensional array operations that feel awkward initially become intuitive with practice.
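The following sketch touches the operations named above (creation, reshaping, slicing, broadcasting, stacking, splitting) on a tiny array. It assumes only that NumPy is installed; the variable names are arbitrary.

```python
import numpy as np

a = np.arange(6)              # array([0, 1, 2, 3, 4, 5])
m = a.reshape(2, 3)           # 2 rows, 3 columns

# Slicing: every row, last two columns.
right = m[:, 1:]              # [[1, 2], [4, 5]]

# Broadcasting: the column means (shape (3,)) are stretched across
# both rows of m (shape (2, 3)) during the subtraction.
col_means = m.mean(axis=0)    # [1.5, 2.5, 3.5]
centered = m - col_means      # [[-1.5, -1.5, -1.5], [1.5, 1.5, 1.5]]

# Stacking and splitting along the first axis.
stacked = np.vstack([m, m * 10])     # shape (4, 3)
top, bottom = np.split(stacked, 2)   # two arrays of shape (2, 3)

# Unlike a list, where `* 2` repeats the contents, an array multiplies
# each element.
doubled = a * 2               # [0, 2, 4, 6, 8, 10]
```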

Weeks six through eight focus intensively on pandas, the library that handles most data manipulation in data science work. Start with Series and DataFrames, understanding how these structures organize data. Practice reading CSV files, which you’ll do constantly in real work. Learn to inspect DataFrames with methods like head, info, and describe. Understand how to select columns, filter rows, and sort data.
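A first pandas session might look roughly like the sketch below. The CSV text is made up and read from memory so the example is self-contained; with a real file you would pass its path to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Made-up CSV contents standing in for a real file on disk.
csv_text = """store,month,sales
A,1,120
B,1,95
A,2,140
B,2,80
"""
df = pd.read_csv(io.StringIO(csv_text))

print(df.head())       # first rows
df.info()              # column dtypes and non-null counts
print(df.describe())   # summary statistics for numeric columns

stores = df["store"]                                    # select a column (a Series)
month_two = df[df["month"] == 2]                        # filter rows with a boolean mask
best_first = df.sort_values("sales", ascending=False)   # sort rows
```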

Dive deeply into data cleaning, which occupies substantial time in professional data science. Practice handling missing values through various strategies, from dropping to imputing. Learn to identify and correct data types, handle duplicates, and transform strings for analysis. Work with datetime data, which appears in virtually every real dataset. Understand how to parse dates, extract components like year or month, and perform date arithmetic.
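The cleaning steps above can be sketched on a deliberately messy, made-up table. The column names and the choice of median imputation are assumptions for illustration; the right strategy depends on the dataset.

```python
import pandas as pd

# Made-up records with stray whitespace, a duplicate row, numbers stored
# as text, and a missing value.
raw = pd.DataFrame({
    "name": [" Ana ", "ben", "ben", "Cleo"],
    "signup": ["2024-01-15", "2024-02-03", "2024-02-03", "2024-03-20"],
    "age": ["34", "29", "29", None],
})

clean = raw.drop_duplicates().copy()                         # drop the repeated row
clean["name"] = clean["name"].str.strip().str.title()        # tidy strings
clean["age"] = pd.to_numeric(clean["age"])                   # text -> numbers, missing -> NaN
clean["age"] = clean["age"].fillna(clean["age"].median())    # impute with the median

clean["signup"] = pd.to_datetime(clean["signup"])            # parse dates
clean["signup_month"] = clean["signup"].dt.month             # extract a component
clean["days_since_first"] = (clean["signup"] - clean["signup"].min()).dt.days  # date arithmetic

print(clean)
```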

Grouping and aggregating data represents another crucial skill set. Practice splitting data into groups based on categorical variables, applying functions to each group, and combining results. Understand the split-apply-combine pattern deeply, as it underlies much exploratory analysis. Learn to merge and join DataFrames, which models how you’ll combine data from multiple sources in real projects.
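Here is a minimal sketch of a merge followed by split-apply-combine, using two invented tables (`orders` and `customers`) that share a `customer_id` key.

```python
import pandas as pd

orders = pd.DataFrame({
    "customer_id": [1, 2, 1, 3, 2],
    "amount": [20.0, 35.0, 15.0, 50.0, 5.0],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "region": ["north", "south", "north"],
})

# Merge: attach each order's region; a left join keeps every order.
merged = orders.merge(customers, on="customer_id", how="left")

# Split by region, apply aggregations to each group, combine into one table.
summary = merged.groupby("region")["amount"].agg(["sum", "mean", "count"])
print(summary)
```

Here the north region totals 85.0 across three orders and the south region totals 40.0 across two.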

Throughout these two months, write code every single day. Work through structured courses like the official Python tutorial or DataCamp’s Python track, but supplement with your own exploration. When you learn a new pandas method, immediately try applying it to different datasets. Download CSV files from sources like Kaggle or government data portals and practice the skills you’re learning on real data.

Your first mini-project should emerge during month two. Choose a dataset that genuinely interests you, perhaps related to a hobby or field you care about. Load it into pandas, clean it, explore it with grouping and aggregation, and write a script that produces summary statistics and simple insights. This project doesn’t need to be sophisticated, but it should demonstrate that you can work through the full pipeline of loading, cleaning, and analyzing data. Document your code with comments and write a brief explanation of your findings. This becomes the first piece of your portfolio.

Month Three: Statistics and Probability

Month three shifts focus from programming to the mathematical foundations that underlie data analysis and machine learning. Many self-taught data scientists want to skip statistics and jump straight to machine learning algorithms, but this approach creates fragile knowledge. Without statistical foundations, you can’t truly understand when models are appropriate, how to evaluate them properly, or how to interpret their outputs responsibly.

Begin with descriptive statistics. Understand measures of central tendency including mean, median, and mode, and crucially, understand when each measure appropriately represents data. Study measures of spread like variance, standard deviation, and interquartile range. Practice calculating these statistics manually on small datasets before using library functions, which builds intuition about what these numbers actually represent.
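To see why the choice of measure matters, the sketch below uses a made-up list of incomes with one extreme value and compares a hand-computed variance with the standard library's result. Only Python's built-in `statistics` module is used.

```python
import statistics

# Made-up incomes in thousands; the last value is an outlier.
incomes = [32, 35, 38, 41, 41, 45, 250]

mean = statistics.mean(incomes)       # pulled upward by the outlier
median = statistics.median(incomes)   # 41, unaffected by the outlier
mode = statistics.mode(incomes)       # 41, the most frequent value

# Sample variance by hand: squared deviations divided by n - 1.
manual_var = sum((x - mean) ** 2 for x in incomes) / (len(incomes) - 1)
library_var = statistics.variance(incomes)

# Interquartile range from the three quartile cut points.
q1, q2, q3 = statistics.quantiles(incomes, n=4)
iqr = q3 - q1

print(round(mean, 2), median, mode)   # 68.86 41 41
print(iqr)                            # 10.0
```

The mean lands far above every typical value, while the median and interquartile range describe the bulk of the data.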

Progress to understanding distributions. Start with the normal distribution, learning its properties and why it appears so frequently in nature and measurement. Understand how the standard deviation relates to the proportion of data within certain ranges. Study other important distributions including uniform, binomial, and Poisson distributions. For each distribution, understand the contexts where it naturally appears and practice generating and visualizing random samples.
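Generating samples is a quick way to check the properties you read about. This sketch assumes NumPy is available and uses arbitrary parameters; the exact numbers vary slightly with the seed.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n = 100_000

normal = rng.normal(loc=0.0, scale=1.0, size=n)
uniform = rng.uniform(low=0.0, high=1.0, size=n)
binomial = rng.binomial(n=10, p=0.5, size=n)   # e.g. heads in 10 coin flips
poisson = rng.poisson(lam=3.0, size=n)         # e.g. events per time interval

# Share of normal draws within one and two standard deviations of the mean.
within_1sd = np.mean(np.abs(normal) < 1)   # close to 0.68
within_2sd = np.mean(np.abs(normal) < 2)   # close to 0.95

print(within_1sd, within_2sd)
print(binomial.mean())                # close to n * p = 5
print(poisson.mean(), poisson.var())  # both close to lam = 3
```

Passing any of these arrays to a histogram function is a natural next step for visualizing the shapes.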

Probability concepts build throughout the month. Start with basic probability rules including union, intersection, and complement. Understand conditional probability and independence deeply, as these concepts underlie much of statistical reasoning. Work through Bayes’ theorem not just as a formula but as a way of thinking about updating beliefs with evidence. This probabilistic thinking becomes essential for understanding machine learning algorithms later.
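A classic way to practice Bayes’ theorem as belief updating is a screening test. The prevalence, sensitivity, and false positive rate below are invented numbers, and `posterior` is a helper written for this sketch.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem."""
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / p_positive


# Invented numbers: 1% prevalence, 95% sensitivity, 5% false positive rate.
after_one = posterior(prior=0.01, sensitivity=0.95, false_positive_rate=0.05)
print(round(after_one, 3))   # 0.161

# Updating again: yesterday's posterior becomes today's prior.
after_two = posterior(prior=after_one, sensitivity=0.95, false_positive_rate=0.05)
print(round(after_two, 3))   # 0.785
```

Even with an accurate test, one positive result leaves the probability near 16% because the condition is rare. A second independent positive raises it to roughly 78%.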

The concept of sampling distributions bridges descriptive statistics and inference. Understand that statistics calculated from samples vary, and this variation follows predictable patterns. Learn about the Central Limit Theorem, which explains why the distribution of sample means approaches a normal distribution as the sample size grows, for almost any underlying data distribution with finite variance. This theorem justifies much of statistical inference, so understanding it deeply pays dividends.
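A simulation makes the sampling distribution concrete. The sketch below draws many samples from a strongly skewed exponential distribution and looks at how their means behave. The sample size, number of samples, and seed are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
sample_size = 50
n_samples = 10_000

# Exponential data are right-skewed; with scale=1 the population mean
# and standard deviation are both 1.
draws = rng.exponential(scale=1.0, size=(n_samples, sample_size))
sample_means = draws.mean(axis=1)   # one mean per sample

mean_of_means = sample_means.mean()
sd_of_means = sample_means.std(ddof=1)
predicted_sd = 1.0 / np.sqrt(sample_size)   # sigma / sqrt(n), about 0.141

print(mean_of_means, sd_of_means, predicted_sd)
```

The sample means cluster around the population mean with a spread close to sigma divided by the square root of n, and a histogram of `sample_means` looks far more symmetric than the raw draws.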

Hypothesis testing represents a major conceptual leap. Learn the logic behind null and alternative hypotheses, p-values, and significance levels. Understand that hypothesis tests ask whether observed data would be surprising if the null hypothesis were true. Practice conducting t-tests for comparing means and chi-square tests for categorical data. More importantly, develop intuition about what these tests actually tell you and what they don’t.
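As a hedged example of comparing two means, the sketch below runs Welch’s t-test (the unequal-variance form of the two-sample t-test) with SciPy on two small made-up groups. It assumes SciPy is installed.

```python
from scipy import stats

# Made-up measurements for two groups.
group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.3, 5.5, 5.7]
group_b = [4.6, 4.8, 5.0, 4.4, 5.2, 4.9, 4.7, 5.1]

# Null hypothesis: both groups share the same population mean.
# equal_var=False requests Welch's t-test, which does not assume
# equal variances.
result = stats.ttest_ind(group_a, group_b, equal_var=False)
t_stat, p_value = result.statistic, result.pvalue

print(t_stat, p_value)
```

A small p-value says that a difference this large would be surprising if the null hypothesis were true. It does not give the probability that the null hypothesis is true, and it says nothing about whether the difference is practically important.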

Study confidence intervals alongside hypothesis tests. Understand how confidence intervals quantify uncertainty about parameters and how they relate to hypothesis tests. Practice calculating and interpreting confidence intervals for means and proportions. Recognize that confidence intervals often communicate more useful information than binary hypothesis test results.
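A confidence interval for a proportion can be computed with the standard library alone. The sketch below uses the normal-approximation interval, one of several available methods, on invented survey counts.

```python
import math
from statistics import NormalDist

# Invented survey result: 120 of 400 respondents said yes.
successes, n = 120, 400
p_hat = successes / n

z = NormalDist().inv_cdf(0.975)                      # about 1.96 for 95% confidence
standard_error = math.sqrt(p_hat * (1 - p_hat) / n)

low = p_hat - z * standard_error
high = p_hat + z * standard_error
print(round(low, 3), round(high, 3))   # 0.255 0.345
```

Reporting "30%, with a 95% interval of roughly 25.5% to 34.5%" tells a reader both the estimate and how precise it is.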

Spend time understanding the assumptions behind statistical tests. Real data rarely perfectly satisfy assumptions of normality, independence, or equal variance that many tests require. Learn when violations of assumptions matter substantially and when they’re relatively harmless. This critical thinking about assumptions and limitations separates sophisticated analysis from mechanical application of tests.

Correlation and simple linear regression close out the month. Understand Pearson correlation and what it measures, along with its limitations. Recognize that correlation doesn’t imply causation, but understand this phrase deeply rather than treating it as a cliché. Learn how simple linear regression models the relationship between two variables, how to fit the model, interpret coefficients, and assess fit quality.
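The sketch below computes a Pearson correlation and fits a least-squares line with NumPy on five made-up points. `np.polyfit` with degree 1 is used here as a convenient way to get the slope and intercept.

```python
import numpy as np

# Made-up paired observations.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

r = np.corrcoef(x, y)[0, 1]              # Pearson correlation
slope, intercept = np.polyfit(x, y, 1)   # least-squares line y = slope * x + intercept

predicted = slope * x + intercept
residuals = y - predicted
r_squared = 1 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)

print(round(slope, 2), round(intercept, 2))   # 1.99 0.05
print(round(r, 3), round(r_squared, 3))       # 0.999 0.997
```

In simple linear regression, R-squared equals the square of the Pearson correlation, which you can confirm by comparing `r ** 2` with `r_squared`.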

For statistics, the combination of theoretical understanding and practical computation matters. Use Python’s scipy and statsmodels libraries to perform calculations, but always think about what you’re computing and why. Work through the statistics in the “Think Stats” book by Allen Downey, which provides excellent exercises that build genuine understanding. Download datasets and practice calculating statistics, testing hypotheses, and computing confidence intervals. Write detailed explanations of what your analyses reveal, practicing the communication skills essential for data science work.

Month Four: Data Visualization and Exploratory Data Analysis

Month four develops your ability to understand data through visualization and systematic exploration. Effective visualization is both a tool for your own understanding and a way to communicate findings to others. Mastering the principles and tools of visualization dramatically enhances your analytical capabilities.

Start with matplotlib, Python’s foundational visualization library. Learn to create basic plots including line plots, scatter plots, bar charts, and histograms. Understand the anatomy of matplotlib figures and axes, which provides the foundation for all customization. Practice creating figures with multiple subplots, which allows comparing different aspects of data side by side.
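A minimal figure with two subplots might look like this. The data are made up, and the non-interactive `Agg` backend is chosen only so the script runs without a display. In a notebook you would normally omit that line.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend; not needed in a notebook
import matplotlib.pyplot as plt

x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 6]   # made-up values

# One figure holding two axes side by side.
fig, (ax_line, ax_hist) = plt.subplots(1, 2, figsize=(8, 3))

ax_line.plot(x, y, marker="o", color="tab:blue", label="measurements")
ax_line.set_title("Line plot")
ax_line.set_xlabel("x")
ax_line.set_ylabel("y")
ax_line.legend()

ax_hist.hist(y, bins=3, color="tab:orange")
ax_hist.set_title("Histogram")

fig.tight_layout()

# Save to an in-memory buffer; pass a filename such as "plot.png" to write to disk.
buffer = io.BytesIO()
fig.savefig(buffer, format="png", dpi=150)
```

Working with the `fig` and `ax` objects directly, rather than only the `plt` shortcuts, is what makes later customization straightforward.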

Focus extensively on customization, learning to modify colors, line styles, markers, labels, titles, and legends. Good visualization requires attention to details that make plots clear and professional. Learn to adjust figure sizes and resolutions for different output formats. Practice saving figures as both PNG for presentations and vector formats like PDF for publications.

Progress to Seaborn, which builds on matplotlib to create statistical visualizations with less code. Learn to create distribution plots, box plots, violin plots, and categorical plots. Understand when each plot type effectively communicates particular aspects of data. Practice using Seaborn’s color palettes and themes to create aesthetically pleasing visualizations quickly.

Study the principles of effective visualization design. Learn about choosing appropriate plot types for different data types and relationships. Understand how to use visual encodings like position, length, color, and size effectively. Study common visualization mistakes like misleading axes, inappropriate chart types, and chartjunk that obscures rather than illuminates data.

Practice creating visualizations that tell stories. A good plot should communicate a clear message without requiring extensive explanation. Learn to use titles, annotations, and careful design choices to guide viewers’ attention to important patterns. Practice creating visualization series that build understanding progressively, taking viewers from overview to detail.

Exploratory data analysis represents the systematic process of understanding new datasets. Learn to approach unfamiliar data methodically, starting with basic questions about size, structure, and data types before progressing to distributions, relationships, and patterns. Develop a checklist of exploratory steps that you apply to every new dataset.

Practice identifying data quality issues through visualization. Learn to spot outliers, missing data patterns, unexpected distributions, and data entry errors visually. Understand that data is rarely clean in practice, and exploratory visualization helps you understand necessary cleaning steps before analysis.

Study multivariate visualization techniques for understanding relationships between multiple variables. Practice creating pair plots that show all pairwise relationships in a dataset. Learn to create correlation heatmaps that reveal which variables relate strongly. Understand how to use color or size to add additional dimensions to scatter plots, creating rich visualizations that reveal complex patterns.

Throughout the month, apply visualization to diverse datasets. Download data on topics that interest you and practice exploring them thoroughly. Create visualization portfolios where you take a dataset from initial exploration through polished visualizations that communicate clear insights. Write explanations of what your visualizations reveal, practicing the integration of visual and verbal communication.

Your major project for this month should be a comprehensive exploratory data analysis. Choose a moderately complex dataset with multiple variables and interesting relationships. Conduct systematic exploration, create a diverse set of visualizations revealing different aspects of the data, identify interesting patterns and relationships, and compile everything into a well-documented Jupyter notebook or report. This project demonstrates your ability to understand data independently and communicate findings effectively, skills that employers value highly.

Month Five: Machine Learning Foundations

Month five introduces machine learning, the aspect of data science that often draws people to the field. However, machine learning builds directly on everything you’ve learned in the previous months. Your programming skills enable you to implement algorithms, your statistical knowledge helps you understand model behavior, and your visualization abilities allow you to evaluate performance.

Begin with the fundamental concept of supervised learning, where you train models using labeled examples to make predictions on new data. Understand the distinction between regression problems, where you predict continuous values, and classification problems, where you predict categories. Recognize that much of machine learning involves these two problem types, making them essential to understand deeply.

Start with linear regression in the machine learning context. You encountered linear regression in month three as a statistical model; now you’ll treat it as a machine learning algorithm. Learn to use scikit-learn, Python’s primary machine learning library, to fit models, make predictions, and evaluate performance. Understand the difference between training data used to fit models and test data used to evaluate them. This train-test split does not prevent overfitting, where models memorize training examples rather than learning generalizable patterns, but it lets you detect it: an overfit model scores well on the training data and noticeably worse on the held-out test data.
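The fit, predict, and evaluate workflow can be sketched with scikit-learn on synthetic data. The data-generating line (slope 3, intercept 2, unit noise) is an assumption made up for this example.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data: y is roughly 3 * x + 2 plus random noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 1))
y = 3.0 * X[:, 0] + 2.0 + rng.normal(0, 1.0, size=200)

# Hold out 25% of the rows; the model never sees them during fitting.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

model = LinearRegression().fit(X_train, y_train)

train_r2 = model.score(X_train, y_train)   # R-squared on data the model saw
test_r2 = model.score(X_test, y_test)      # R-squared on unseen data

print(model.coef_[0], model.intercept_)
print(train_r2, test_r2)
```

Similar training and test scores suggest the model generalizes. A large gap between them is the usual sign of overfitting.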

Study evaluation metrics for regression including mean squared error, root mean squared error, and R-squared. Understand what each metric measures and when to prefer each one. Practice calculating metrics manually before using library functions, building intuition about what numbers mean in context.
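Computing the metrics by hand once is a good way to see what they mean. The true and predicted values below are made up.

```python
import numpy as np

y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.0, 5.0, 8.0, 11.0])   # made-up predictions

errors = y_true - y_pred

mse = np.mean(errors ** 2)   # average squared error, in squared units
rmse = np.sqrt(mse)          # same units as the target

ss_residual = np.sum(errors ** 2)
ss_total = np.sum((y_true - y_true.mean()) ** 2)
r_squared = 1 - ss_residual / ss_total   # share of variance explained

print(mse)                   # 1.5
print(round(rmse, 3))        # 1.225
print(round(r_squared, 3))   # 0.7
```

RMSE is usually the easiest to explain because it is in the target’s own units, while R-squared is unitless and useful for comparing fits on the same data.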

Progress to classification with logistic regression. Understand how logistic regression adapts the linear model for binary classification problems by passing predictions through the sigmoid function. Learn to interpret each logistic regression coefficient as the change in the log-odds of the outcome for a one-unit increase in that feature, so that exponentiating a coefficient gives an odds ratio. Practice training classifiers and making predictions on new examples.
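The sigmoid step and the odds interpretation can be checked with a few lines of plain Python. The intercept and coefficient below are invented rather than fitted, and `pass_probability` is a hypothetical model of passing an exam given hours studied.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


# Invented parameters for illustration, not fitted to data.
intercept = -4.0
coef_hours = 0.8


def pass_probability(hours):
    """Linear score passed through the sigmoid."""
    return sigmoid(intercept + coef_hours * hours)


def odds(p):
    return p / (1 - p)


p_five = pass_probability(5)   # linear score is 0, so the probability is 0.5
p_six = pass_probability(6)

# One extra hour multiplies the odds by exp(coefficient).
odds_ratio = odds(p_six) / odds(p_five)
print(p_five)                                           # 0.5
print(round(odds_ratio, 3), round(math.exp(coef_hours), 3))
```

The two printed odds values match: a coefficient of 0.8 means each additional hour multiplies the odds of passing by about 2.2, whatever the starting point.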

Classification metrics require careful study. Accuracy seems intuitive but can be misleading with imbalanced classes. Learn about precision, recall, and F1-score, understanding the tradeoffs between false positives and false negatives. Study confusion matrices, which reveal exactly how your classifier performs across categories. Understand ROC curves and the area under the curve metric for evaluating classifiers across different decision thresholds.
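The sketch below shows why accuracy alone can mislead on imbalanced data. Two sets of made-up predictions reach the same accuracy, yet one of them never finds a positive case. `summarize` is a helper written for this example.

```python
def summarize(y_true, y_pred):
    """Confusion-matrix counts and the metrics derived from them."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    accuracy = (tp + tn) / len(pairs)
    # By convention here, a metric with a zero denominator is reported as 0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up labels: only 2 of 10 cases are positive.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_negative = [0] * 10
model_pred = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]

baseline = summarize(y_true, always_negative)
model = summarize(y_true, model_pred)

print(baseline["accuracy"], baseline["recall"])   # 0.8 0.0
print(model["accuracy"], model["recall"])         # 0.8 0.5
```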

Move to tree-based methods including decision trees and random forests. Understand how decision trees make predictions by learning rules that split data. Study the concept of tree depth and how it relates to model complexity. Learn about random forests, which average the predictions of many trees, each trained on a different random sample of the data and features, to create more robust predictions. Understand the bias-variance tradeoff and how this averaging reduces variance compared with a single deep tree.

Feature engineering represents a crucial practical skill. Learn to create new features from existing ones through transformations, combinations, and domain knowledge. Practice encoding categorical variables using one-hot encoding or other techniques. Understand feature scaling and when it matters for different algorithms. Study feature selection methods for identifying the most informative variables.
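Three of the techniques above (a derived feature, one-hot encoding, and standardization) are sketched below on a tiny made-up table. The column names are invented for the example.

```python
import pandas as pd

df = pd.DataFrame({
    "color": ["red", "blue", "red"],
    "height_cm": [150.0, 160.0, 170.0],
    "weight_kg": [50.0, 64.0, 72.25],
})

# Derived feature that combines two columns using domain knowledge.
df["bmi"] = df["weight_kg"] / (df["height_cm"] / 100) ** 2

# One-hot encoding: one indicator column per category.
encoded = pd.get_dummies(df, columns=["color"])

# Standardization: subtract the mean and divide by the standard deviation.
height = encoded["height_cm"]
encoded["height_scaled"] = (height - height.mean()) / height.std(ddof=0)

print(encoded)
```

Scaling matters for distance-based and gradient-based methods. Tree-based models are largely insensitive to it.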

Cross-validation elevates your understanding of model evaluation. Rather than a single train-test split, cross-validation uses multiple splits to assess performance more reliably. Learn k-fold cross-validation and understand how it provides more robust estimates of model performance. Practice using cross-validation to compare different models and tune hyperparameters.
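A rough sketch of comparing two models with 5-fold cross-validation in scikit-learn follows. The dataset is synthetic, generated with `make_classification`, and the model settings are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic classification data for illustration.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

tree = DecisionTreeClassifier(random_state=0)
forest = RandomForestClassifier(n_estimators=50, random_state=0)

# Each model is trained and scored five times, once per held-out fold.
tree_scores = cross_val_score(tree, X, y, cv=5)
forest_scores = cross_val_score(forest, X, y, cv=5)

print(tree_scores.mean(), tree_scores.std())
print(forest_scores.mean(), forest_scores.std())
```

Looking at the spread across folds as well as the mean helps you judge whether a difference between models is larger than fold-to-fold noise.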

Model tuning and hyperparameter optimization represent important practical skills. Learn about hyperparameters, the settings you choose before training, such as tree depth in decision trees or regularization strength in regression. Understand grid search and random search for systematically exploring hyperparameter spaces. Practice tuning models with cross-validation on the training data, then check whether the tuning actually improves performance on test data that played no part in the tuning.
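As one possible setup, the sketch below tunes a decision tree’s depth with scikit-learn’s `GridSearchCV` on synthetic data, keeping a test set aside for a single final check. The grid values are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data for illustration.
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Candidate values for one hyperparameter; None lets the tree grow fully.
param_grid = {"max_depth": [2, 4, 8, None]}

search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
search.fit(X_train, y_train)   # cross-validated tuning on the training data only

best_depth = search.best_params_["max_depth"]
test_accuracy = search.score(X_test, y_test)   # final check on untouched data

print(best_depth, search.best_score_, test_accuracy)
```

Keeping the test set out of the search means the final score is an honest estimate. Choosing hyperparameters by looking at test performance would quietly leak information into the model.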

Throughout the month, work on multiple small prediction projects. Find datasets on Kaggle or UCI Machine Learning Repository and practice the full pipeline from data loading through model evaluation. Start with simple problems and gradually tackle more complex datasets. Document your process thoroughly, explaining your choices and evaluating results honestly.

Your major project should be an end-to-end supervised learning project. Choose a problem that interests you, potentially continuing with a dataset from earlier months or finding something new. Perform thorough exploratory analysis, engineer relevant features, train multiple models, evaluate their performance carefully, and select the best approach. Create visualizations of model performance and write a detailed explanation of your process and results. This project demonstrates your ability to approach machine learning problems systematically and make thoughtful decisions throughout the pipeline.

Month Six: Advanced Topics and Portfolio Development

The final month integrates everything you’ve learned while exploring more advanced topics and, crucially, developing your portfolio into a compelling demonstration of your capabilities. The portfolio you create this month will be what you show to potential employers or clients, so investing effort in quality and presentation pays substantial dividends.

Begin by exploring additional machine learning algorithms to broaden your toolkit. Study support vector machines, understanding how they find optimal separating hyperplanes for classification. Learn about naive Bayes classifiers and when their simplifying assumptions work well. Explore gradient boosting methods like XGBoost or LightGBM, which often achieve excellent performance in competitions and production systems.

Spend time on unsupervised learning, particularly clustering. Understand k-means clustering and how it groups similar observations. Study hierarchical clustering and how dendrograms visualize cluster relationships. Learn about dimensionality reduction with principal component analysis, understanding how it finds lower-dimensional representations that preserve variance. Practice using these techniques for exploration and preprocessing.
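The sketch below runs k-means and PCA from scikit-learn on two well-separated synthetic groups, a deliberately easy case where you already know what the answer should look like. Real data are rarely this clean.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

# Two synthetic groups of 50 points in three dimensions, far apart.
rng = np.random.default_rng(0)
group_a = rng.normal(loc=0.0, scale=1.0, size=(50, 3))
group_b = rng.normal(loc=10.0, scale=1.0, size=(50, 3))
X = np.vstack([group_a, group_b])

# k-means: assign each point to the nearest of two learned centers.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
labels = kmeans.labels_

# PCA: project three features onto the two directions of greatest variance.
pca = PCA(n_components=2).fit(X)
X_2d = pca.transform(X)
variance_kept = pca.explained_variance_ratio_.sum()

print(labels[:5], labels[-5:])
print(variance_kept)
```

Note that k-means needs the number of clusters up front and that cluster label numbers are arbitrary, so compare groupings rather than label values.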

Introduction to natural language processing expands your capabilities to text data. Learn basic text preprocessing including tokenization, stopword removal, and stemming. Understand bag-of-words and TF-IDF representations. Practice building simple text classifiers, perhaps for sentiment analysis. This exposure to NLP opens an entire subdomain of data science for future exploration.
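To see what bag-of-words and TF-IDF compute, the sketch below builds both by hand on three made-up sentences. It uses a tiny hand-picked stopword list, skips stemming, and uses one common TF-IDF variant (raw count times the log of inverse document frequency). Libraries such as scikit-learn apply slightly different formulas.

```python
import math
from collections import Counter

STOPWORDS = {"the", "was", "a"}   # tiny illustrative list


def tokenize(text):
    """Lowercase, split on whitespace, and drop stopwords."""
    return [word for word in text.lower().split() if word not in STOPWORDS]


docs = [
    "The movie was great",
    "The movie was terrible",
    "A great great cast",
]

# Bag-of-words: word counts per document, ignoring word order.
bags = [Counter(tokenize(doc)) for doc in docs]

# Document frequency: in how many documents each word appears.
n_docs = len(docs)
doc_freq = Counter(word for bag in bags for word in bag)


def tfidf(bag):
    """Weight each count by log(N / document frequency)."""
    return {word: count * math.log(n_docs / doc_freq[word])
            for word, count in bag.items()}


weights = [tfidf(bag) for bag in bags]
print(bags[2])      # Counter({'great': 2, 'cast': 1})
print(weights[2])
```

In the third document "cast" ends up weighted above "great" even though "great" occurs twice, because "cast" appears in only one document and is therefore more distinctive.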

Time series analysis represents another important subdomain. Learn about time series decomposition into trend, seasonal, and residual components. Understand autocorrelation and how it differs from regular correlation. Practice forecasting with simple methods like moving averages and exponential smoothing. Build a basic time series prediction project to demonstrate these skills.
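Moving averages, exponential smoothing, and autocorrelation are all available in pandas. The sketch below applies them to a five-point made-up series. The window length and smoothing factor are arbitrary choices.

```python
import pandas as pd

# Made-up series with a steady upward trend.
series = pd.Series([10.0, 12.0, 14.0, 16.0, 18.0])

# Moving average over the last three observations.
moving_avg = series.rolling(window=3).mean()       # NaN, NaN, 12, 14, 16

# Simple exponential smoothing: each value blends the new observation
# with the previous smoothed value (alpha = 0.5).
smoothed = series.ewm(alpha=0.5, adjust=False).mean()

# Autocorrelation: correlation of the series with itself shifted by one step.
lag1 = series.autocorr(lag=1)

# A naive forecast: use the latest moving average as the next value.
naive_forecast = moving_avg.iloc[-1]

print(moving_avg.tolist())
print(smoothed.tolist())     # [10.0, 11.0, 12.5, 14.25, 16.125]
print(naive_forecast)        # 16.0
```

Both smoothers lag behind a trending series, as the forecast of 16.0 after an observed 18.0 shows. That lag is one reason trend-aware methods exist.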

Throughout this month, focus substantially on portfolio development. Review the projects you’ve created in previous months and identify which are strongest. Polish these projects by improving code quality, enhancing visualizations, and writing better documentation. Create a personal website or GitHub Pages site to showcase your work professionally. Write clear README files for each project explaining the problem, your approach, and key findings.

Develop a signature project that demonstrates your full skill set. This capstone project should be more substantial than earlier work, addressing a real problem with practical implications. Choose a domain you’re genuinely interested in, whether sports analytics, financial prediction, health data analysis, or anything else. Conduct thorough exploratory analysis, build and compare multiple models, create compelling visualizations, and write a detailed report or blog post explaining your work.

The presentation of this capstone matters as much as the technical content. Practice explaining your project clearly, walking through your thought process and key decisions. Create visualizations that communicate findings to non-technical audiences. Write code that’s clean, well-commented, and easy to follow. This project should be something you’re proud to show potential employers and discuss in interviews.

Invest time in learning Git and GitHub properly. Version control is essential for professional data science work, and a well-maintained GitHub profile demonstrates professional practices. Learn to write good commit messages, organize repositories clearly, and use branching for experimentation. Make your GitHub profile look professional with a good README, pinned repositories, and regular activity.

Consider writing blog posts about your learning journey or technical topics you’ve mastered. Writing about data science concepts reinforces your understanding and demonstrates communication skills. Start simple, perhaps explaining a concept you found difficult or walking through a project. Share posts on platforms like Medium or your personal website. Even a few well-written posts can differentiate you from other entry-level candidates.

Engage with the data science community actively. Participate in Kaggle competitions, not necessarily to win but to learn from others’ approaches. Comment on others’ notebooks and share your own. Answer questions on Stack Overflow once you’re comfortable enough. Attend local data science meetups or virtual events. These activities build your network and often lead to job opportunities.

Practice for technical interviews by solving coding problems relevant to data science. Work through SQL problems on sites like HackerRank or LeetCode. Practice explaining your projects clearly and handling technical questions about your code and decisions. Prepare to discuss your learning journey, why you’re interested in data science, and what types of problems you want to work on.

Update your resume to reflect your new skills and projects. Focus on concrete accomplishments rather than just listing technologies. Describe projects with emphasis on problems solved, approaches taken, and results achieved. Quantify impacts where possible. Tailor your resume to emphasize skills relevant to the specific roles you’re targeting.

Maintaining Momentum and Continuing Growth

Completing this six-month plan represents a significant accomplishment, but it marks the beginning of your data science journey rather than the end. The field evolves constantly, and continuous learning remains essential throughout your career. Establishing habits of ongoing education during your initial self-teaching period makes lifelong learning sustainable.

After completing the core plan, continue building projects that interest you. Real learning happens through application, and each new project challenges you to integrate existing knowledge while learning new techniques. Choose projects that slightly exceed your current abilities, requiring you to stretch and research new approaches.

Contribute to open source data science projects. Many Python data science libraries welcome contributions, whether fixing bugs, improving documentation, or adding features. Contributing to projects used by thousands of other data scientists provides deep learning opportunities and builds your professional reputation.

Pursue areas of specialization that align with your interests and career goals. Data science encompasses many subdomains including deep learning, natural language processing, computer vision, time series forecasting, recommendation systems, and causal inference. Once you’ve established broad foundations, developing deeper expertise in one or two areas makes you more attractive for certain roles.

Stay current with industry developments by reading blogs, research papers, and following thought leaders on social media. Subscribe to newsletters like Data Science Weekly or follow companies whose work interests you. Understand that you don’t need to learn every new technique immediately, but awareness of trends helps you identify valuable learning opportunities.

Consider formal credentials once you have substantial self-taught experience. While you can absolutely get hired as a self-taught data scientist without credentials, some employers value certifications or master’s degrees. With solid foundations from self-study, you can evaluate credential programs more critically, choosing ones that offer genuine additional value rather than just restating basics you’ve already mastered.

Build and maintain professional relationships with other data scientists. Join online communities, attend conferences when possible, and don’t hesitate to reach out to people whose work interests you. Many data scientists are happy to offer advice to motivated learners. These relationships provide support, learning opportunities, and career assistance.

Common Challenges and How to Overcome Them

Self-teaching data science presents predictable challenges that most learners encounter. Anticipating these difficulties and having strategies to address them increases your likelihood of success.

Motivation often wanes after the initial excitement fades. When learning stops feeling like an adventure and starts feeling like work, maintaining consistency becomes harder. Combat this by connecting learning to your larger goals, celebrating small wins, and varying your activities. If you’re tired of tutorials, work on projects. If projects feel overwhelming, return to structured learning for a while. The variety maintains engagement while continuing progress.

Imposter syndrome affects most self-taught data scientists. You may feel behind people with formal degrees, incompetent compared to experts you follow online, and uncertain whether you’re learning the right things in the right way. These feelings are normal and rarely reflect reality. Everyone started knowing nothing, and degree holders often experience imposter syndrome too. Focus on your own progress rather than comparing yourself to others at different stages.

Getting stuck on concepts or bugs can be intensely frustrating. When you can’t understand something or can’t fix an error after hours of trying, the temptation to quit grows strong. Develop systematic debugging approaches and know when to ask for help. If you’ve struggled with something for more than a few hours, post a clear question on Stack Overflow or a relevant forum. Describe what you’re trying to do, what you’ve tried, and what’s happening. Most communities are helpful to learners asking good questions.
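A clear question usually includes a small, self-contained snippet that reproduces the problem. The sketch below is a hypothetical illustration of that idea, not a required template: the inline data, the `parse_prices` function, and the bug itself are invented for the example. It uses only the standard library so that anyone can paste it and run it.

```python
import csv
import io

# What I'm trying to do: read the "price" column of a CSV as floats.
# The data is invented and embedded inline so others can run this as-is.
RAW = (
    "item,price\n"
    "apple,1.50\n"
    "pear,\n"        # blank price: the smallest input that triggers the error
    "plum,2.50\n"
)

def parse_prices(text):
    """What I tried: convert every price cell directly to float."""
    rows = csv.DictReader(io.StringIO(text))
    return [float(row["price"]) for row in rows]

def parse_prices_skip_blank(text):
    """One possible fix: skip cells that are empty after stripping whitespace."""
    rows = csv.DictReader(io.StringIO(text))
    return [float(row["price"]) for row in rows if row["price"].strip()]

# What's happening: capture the exact error so it can be quoted in the question.
try:
    parse_prices(RAW)
    error_message = None
except ValueError as exc:
    error_message = str(exc)

print("Error from first attempt:", error_message)
print("After skipping blanks:", parse_prices_skip_blank(RAW))
```

Shrinking the input to a few rows often reveals the cause before you post anything, which is part of why the habit is worth building.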

Isolation can make self-teaching lonely compared to classroom learning. Without classmates or a teacher, you miss social motivation and support. Combat isolation by engaging online communities, finding a study partner who’s also learning data science, or attending local meetups. Even if these interactions are brief, knowing others are on similar journeys provides motivation.

Unclear progress can be discouraging when you don’t know how much you’ve learned or how far you have to go. This plan provides structure, but you’ll still wonder whether you’re learning fast enough or well enough. Track your progress explicitly by keeping a learning journal, noting concepts mastered and projects completed. Review your early code occasionally to see how much your skills have improved. These concrete signs of progress combat feelings of stagnation.
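A learning journal can be as simple as a list of dated entries that you summarize from time to time. The following is a minimal sketch under assumed names: the `Entry` fields, the helper functions, and the sample entries are all hypothetical and should be adapted to whatever you actually want to track.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date

@dataclass
class Entry:
    """One study session in the journal (fields are illustrative)."""
    day: date
    topic: str
    hours: float
    note: str = ""

def hours_per_week(entries):
    """Sum study hours by ISO (year, week number)."""
    totals = defaultdict(float)
    for entry in entries:
        iso = entry.day.isocalendar()
        totals[(iso[0], iso[1])] += entry.hours
    return dict(totals)

def topics_covered(entries):
    """Return the distinct topics studied so far, alphabetically."""
    return sorted({entry.topic for entry in entries})

# Invented sample entries for illustration only.
journal = [
    Entry(date(2024, 1, 1), "python basics", 2.0, "loops and functions"),
    Entry(date(2024, 1, 3), "python basics", 2.5),
    Entry(date(2024, 1, 9), "pandas", 3.0, "groupby finally makes sense"),
]

for week, hours in sorted(hours_per_week(journal).items()):
    print(week, hours)
print(topics_covered(journal))
```

Comparing weekly totals against your target hours gives a concrete answer to the question of whether you are keeping pace.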

Resource overwhelm happens when you encounter too many courses, books, and tutorials all claiming to be essential. Resist the temptation to hoard resources or constantly search for the “best” material. The plan you’re following provides sufficient resources. Stick with it rather than constantly second-guessing and switching approaches. Consistency with good-enough resources beats perfect resources used inconsistently.

Financial pressure can create stress if you’re learning without income or spending money on courses while working jobs that don’t fulfill you. Make realistic financial plans that account for your learning period. Take advantage of free resources when possible. Remember that data science careers can be lucrative, so current sacrifices can be viewed as investments in future earnings. However, be honest about how long you can sustain your learning without income.

Resources and Tools for Your Journey

Throughout this plan, you’ll need quality resources for learning each topic. While specific recommendations could become outdated, understanding the types of resources available and how to evaluate them helps you find what you need.

For Python programming fundamentals, the official Python tutorial provides excellent free material. Books like “Python Crash Course” by Eric Matthes or “Automate the Boring Stuff with Python” by Al Sweigart offer engaging introductions with many practice exercises. Online platforms like DataCamp, Codecademy, and Coursera provide interactive courses with immediate feedback that help beginners build confidence.

For pandas and data manipulation, the official pandas documentation includes excellent tutorials and examples. The book “Python for Data Analysis” by Wes McKinney, who created pandas, remains the authoritative reference. Practice datasets from Kaggle provide unlimited opportunities to apply skills to real data.

For statistics, “Think Stats” by Allen Downey offers an exceptional introduction that emphasizes computation and Python implementation. Khan Academy provides free video lessons covering probability and statistics thoroughly. The book “Statistics” by Freedman, Pisani, and Purves builds deeper conceptual understanding through careful verbal reasoning rather than heavy formalism.

For machine learning, “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron provides comprehensive practical coverage. The scikit-learn documentation includes excellent user guides that explain concepts alongside code. Andrew Ng’s Machine Learning course on Coursera offers theoretical foundations with accessible explanations. The fast.ai courses provide practical deep learning education once you’ve mastered fundamentals.

For visualization, the matplotlib and seaborn documentation provide comprehensive references. “Fundamentals of Data Visualization” by Claus Wilke offers excellent design principles. Studying visualizations in publications like The Economist or FiveThirtyEight helps you understand professional standards.

Jupyter notebooks serve as your primary development environment. Google Colab offers free, usage-limited GPU access for projects requiring more computational power. GitHub serves as both your portfolio platform and a learning resource, since you can read other people’s code there.

Communities like r/datascience and r/learnmachinelearning on Reddit provide support, resource recommendations, and discussion. Stack Overflow answers specific technical questions. Kaggle forums focus on competitions but offer learning opportunities through discussion and shared notebooks.

Conclusion

Self-teaching data science over six months requires dedication, consistency, and strategic focus, but it’s genuinely achievable for motivated learners. This plan provides structure while remaining flexible enough to adapt to your circumstances and interests. The key to success lies not in following every detail perfectly but in maintaining forward progress week after week, gradually building the foundations that support a data science career.

Remember that six months represents the beginning rather than the end of your learning journey. By the end of this plan, you won’t know everything about data science, but you’ll have solid foundations across programming, statistics, and machine learning. You’ll have created projects that demonstrate your capabilities. You’ll understand how to continue learning independently. These outcomes position you to pursue entry-level opportunities or continue developing more specialized expertise.

Your success depends substantially on daily consistency. Studying two to three hours nearly every day for six months produces dramatically better results than sporadic intensive sessions. Build sustainable habits that fit your life. Make data science learning part of your daily routine, like exercise or meals. Some days you’ll feel motivated and progress quickly. Other days you’ll struggle to focus. Progress comes from showing up consistently regardless of motivation.

Don’t expect linear progress. Some weeks everything clicks and you feel invincible. Other weeks concepts seem impossibly difficult and nothing works. Both experiences are normal. Plateaus in learning are common and often precede breakthroughs. Persistence through difficult periods separates those who succeed from those who quit.

Engage with the community throughout your journey. Other learners provide support, motivation, and different perspectives. Experienced data scientists often generously share advice with motivated beginners. Don’t learn in complete isolation, but also don’t let comparison to others undermine your confidence.

The most important factor in your success is simply getting started and maintaining consistency. This plan provides the roadmap, but you must walk the path. Begin today with whatever time you have available. Work through the first section of Python basics. Write your first lines of code. Make the first step toward your goal of becoming a data scientist. Six months from now, looking back at today, you’ll be amazed by how far consistent effort has taken you.

The field of data science offers intellectually stimulating work, opportunities to solve meaningful problems, and strong career prospects. The barrier to entry continues to fall as resources improve and the community grows. You don’t need expensive degrees or insider connections. You need curiosity, persistence, and a systematic approach to learning. This six-month plan provides that systematic approach. Now begin your journey.