Introduction

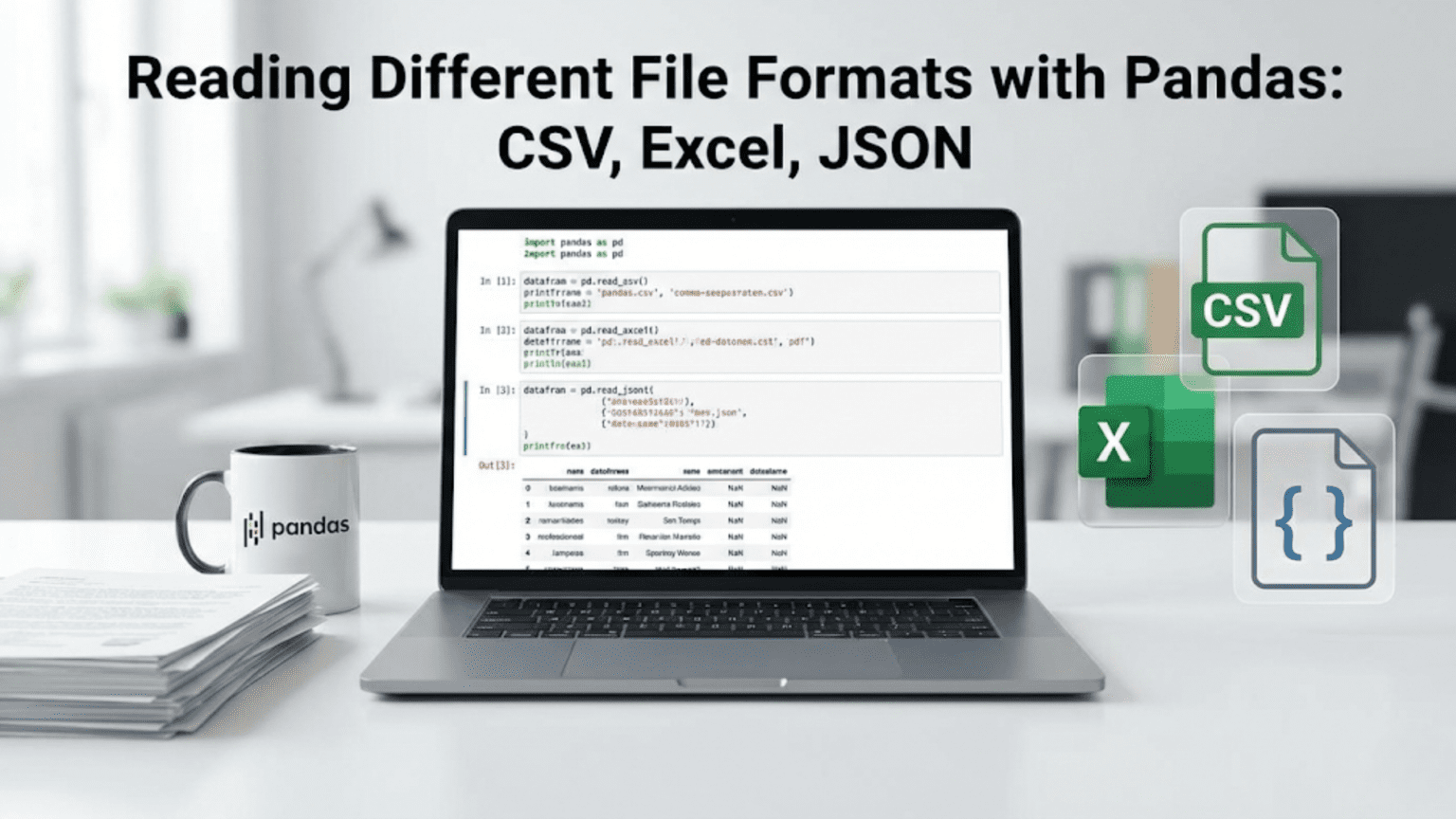

After learning pandas fundamentals including DataFrames and basic operations, you face a practical question: how do you get real-world data into pandas for analysis? While creating DataFrames manually from Python dictionaries or lists works for learning and small datasets, real data science projects work with data stored in files: CSV files exported from spreadsheets or databases, Excel workbooks shared by colleagues, JSON files from web APIs, and dozens of other formats. Pandas provides robust functions for reading these formats, intelligently handling data types, missing values, encoding issues, and structural variations that make manual parsing tedious and error-prone.

The ability to load data from various sources represents a fundamental data science skill. You might receive customer data as CSV, sales figures in Excel, API responses as JSON, and sensor readings as Parquet. Each format has conventions, quirks, and edge cases that pandas handles through flexible reading functions. Understanding these functions thoroughly prevents hours of frustration fighting with data loading issues and enables you to start analyzing data immediately rather than spending time on custom parsers. Moreover, pandas’ reading functions provide parameters for handling virtually any variation you encounter: different delimiters, custom missing value indicators, date parsing, column selection, and more.

What makes pandas’ I/O capabilities particularly valuable is their consistency across formats. While CSV, Excel, and JSON differ substantially in structure and complexity, pandas provides similar interfaces for reading them, always returning DataFrames ready for analysis. This consistency means that once you learn the patterns for one format, you can adapt quickly to others. The functions also handle common data quality issues automatically: converting numeric strings to numbers, parsing dates intelligently, and detecting missing values. This intelligent default behavior saves enormous time compared to lower-level file reading approaches.

This comprehensive guide explores reading the most common data formats you will encounter in data science. You will learn how to read CSV files with various delimiters and options, how to load Excel workbooks including specific sheets and ranges, how to parse JSON data from files and strings, how to handle common issues like encoding problems and messy headers, and best practices for efficient data loading. You will also discover how to specify data types, parse dates, handle missing values, and select subsets during loading to work efficiently with large files. By the end, you will confidently load data from any common format and troubleshoot issues that arise.

Reading CSV Files: The Most Common Format

CSV (Comma-Separated Values) represents the most universal data exchange format. Pandas provides read_csv() for loading CSV files, with extensive options for handling variations.

Read a basic CSV file:

import pandas as pd

# Read CSV file

df = pd.read_csv('data.csv')

print(df.head())This simplest form works when files have:

- Comma delimiters

- Headers in the first row

- Standard encoding (UTF-8)

- No problematic missing values

Specify delimiter for non-comma files:

# Tab-separated values

df = pd.read_csv('data.tsv', delimiter='\t')

# Pipe-delimited

df = pd.read_csv('data.txt', delimiter='|')

# Arbitrary delimiter

df = pd.read_csv('data.txt', sep=';')The sep parameter (alias for delimiter) accepts any string or regex pattern.

Handle files without headers:

# File has no header row

df = pd.read_csv('data.csv', header=None)

print(df.head())This creates default column names: 0, 1, 2, etc.

Specify custom column names:

# Provide column names

df = pd.read_csv('data.csv',

header=None,

names=['Name', 'Age', 'City', 'Salary'])Skip rows at the beginning:

# Skip first 3 rows (common for files with metadata at top)

df = pd.read_csv('data.csv', skiprows=3)

# Skip specific rows by number

df = pd.read_csv('data.csv', skiprows=[0, 2, 5])

# Skip rows based on condition

df = pd.read_csv('data.csv', skiprows=lambda x: x < 3 or x % 2 == 0)Read only specific columns:

# Select columns by name

df = pd.read_csv('data.csv', usecols=['Name', 'Age', 'Salary'])

# Select columns by position

df = pd.read_csv('data.csv', usecols=[0, 1, 3])

# Select using function

df = pd.read_csv('data.csv', usecols=lambda col: col.startswith('sales'))This saves memory when files have many columns you don’t need.

Specify data types explicitly:

# Define data types for columns

dtypes = {

'Name': str,

'Age': int,

'Salary': float,

'City': 'category' # Memory-efficient for repeated values

}

df = pd.read_csv('data.csv', dtype=dtypes)Explicit types prevent pandas from inferring incorrectly and can save memory.

Parse dates automatically:

# Parse single date column

df = pd.read_csv('data.csv', parse_dates=['Date'])

# Parse multiple date columns

df = pd.read_csv('data.csv', parse_dates=['Start_Date', 'End_Date'])

# Combine columns into date

df = pd.read_csv('data.csv',

parse_dates={'Date': ['Year', 'Month', 'Day']})Handle missing values:

# Recognize additional missing value indicators

df = pd.read_csv('data.csv',

na_values=['NA', 'N/A', 'missing', '?', '-'])

# Different missing values per column

na_dict = {

'Age': ['unknown', '?'],

'Salary': ['-', 'N/A', 0]

}

df = pd.read_csv('data.csv', na_values=na_dict)Handle encoding issues:

# Specify encoding explicitly

df = pd.read_csv('data.csv', encoding='utf-8')

# Common encodings

df = pd.read_csv('data.csv', encoding='latin-1') # Western European

df = pd.read_csv('data.csv', encoding='cp1252') # Windows

df = pd.read_csv('data.csv', encoding='iso-8859-1')

# Handle encoding errors

df = pd.read_csv('data.csv', encoding='utf-8', encoding_errors='replace')Read compressed files directly:

# Pandas automatically detects and decompresses

df = pd.read_csv('data.csv.gz')

df = pd.read_csv('data.csv.zip')

df = pd.read_csv('data.csv.bz2')Read from URL:

url = 'https://example.com/data.csv'

df = pd.read_csv(url)Read only first N rows:

# Load just 1000 rows (useful for previewing large files)

df = pd.read_csv('data.csv', nrows=1000)Set index while reading:

# Use first column as index

df = pd.read_csv('data.csv', index_col=0)

# Use specific column as index

df = pd.read_csv('data.csv', index_col='ID')

# Multi-level index

df = pd.read_csv('data.csv', index_col=['Country', 'Year'])Reading Excel Files: Working with Workbooks

Excel files (.xlsx, .xls) require the openpyxl or xlrd library for reading. Install with pip install openpyxl.

Read Excel file:

# Read first sheet

df = pd.read_excel('data.xlsx')

print(df.head())Specify which sheet to read:

# By sheet name

df = pd.read_excel('data.xlsx', sheet_name='Sales')

# By position (0-indexed)

df = pd.read_excel('data.xlsx', sheet_name=0)

# Read multiple sheets (returns dictionary)

dfs = pd.read_excel('data.xlsx', sheet_name=['Sales', 'Inventory'])

print(dfs['Sales'].head())

# Read all sheets

dfs = pd.read_excel('data.xlsx', sheet_name=None)

for sheet_name, df in dfs.items():

print(f"Sheet: {sheet_name}")

print(df.head())Specify range to read:

# Read specific rows

df = pd.read_excel('data.xlsx', skiprows=2, nrows=100)

# Read specific columns by name

df = pd.read_excel('data.xlsx', usecols='A:D')

# Read specific columns by name

df = pd.read_excel('data.xlsx', usecols=['Name', 'Age', 'City'])Handle Excel-specific options:

# Specify header row

df = pd.read_excel('data.xlsx', header=2) # Use row 2 as header

# No header

df = pd.read_excel('data.xlsx', header=None)

# Multi-level header

df = pd.read_excel('data.xlsx', header=[0, 1])Excel files often have formatting:

# Parse dates from Excel serial dates

df = pd.read_excel('data.xlsx', parse_dates=['Date'])

# Specify data types

df = pd.read_excel('data.xlsx',

dtype={'ID': str, 'Amount': float})Read from multiple files:

import glob

# Read all Excel files in directory

files = glob.glob('data/*.xlsx')

dfs = [pd.read_excel(f) for f in files]

combined = pd.concat(dfs, ignore_index=True)Reading JSON Files: Structured Data

JSON (JavaScript Object Notation) stores structured, hierarchical data. Pandas can read JSON files and strings.

Read JSON file:

# Read JSON file

df = pd.read_json('data.json')

print(df.head())JSON structure affects how pandas interprets it. Common formats:

Records format (list of objects):

[

{"Name": "Alice", "Age": 25, "City": "Boston"},

{"Name": "Bob", "Age": 30, "City": "Seattle"}

]Read records format:

df = pd.read_json('data.json', orient='records')Split format (columns as keys):

{

"Name": {"0": "Alice", "1": "Bob"},

"Age": {"0": 25, "1": 30},

"City": {"0": "Boston", "1": "Seattle"}

}Read split format:

df = pd.read_json('data.json', orient='split')Index format (rows as keys):

{

"0": {"Name": "Alice", "Age": 25},

"1": {"Name": "Bob", "Age": 30}

}Read index format:

df = pd.read_json('data.json', orient='index')Read JSON from string:

json_string = '''

[

{"Name": "Alice", "Age": 25},

{"Name": "Bob", "Age": 30}

]

'''

df = pd.read_json(json_string)Read nested JSON:

# Flatten nested structures

from pandas import json_normalize

data = [

{

"name": "Alice",

"address": {"city": "Boston", "state": "MA"}

},

{

"name": "Bob",

"address": {"city": "Seattle", "state": "WA"}

}

]

df = json_normalize(data)

print(df)

# Output:

# name address.city address.state

# 0 Alice Boston MA

# 1 Bob Seattle WARead JSON Lines format (one JSON object per line):

df = pd.read_json('data.jsonl', lines=True)Common with streaming data and large datasets.

Reading Other Common Formats

Pandas supports many additional formats:

HTML Tables

Extract tables from HTML:

# Read all tables from HTML file or URL

dfs = pd.read_html('tables.html')

# Returns list of DataFrames, one per table

df = dfs[0] # First table

# Read from URL

url = 'https://example.com/data-table.html'

dfs = pd.read_html(url)SQL Databases

Read from SQL database:

import sqlite3

# Connect to database

conn = sqlite3.connect('database.db')

# Read entire table

df = pd.read_sql('SELECT * FROM sales', conn)

# Read with query

df = pd.read_sql('SELECT * FROM sales WHERE year = 2024', conn)

# Read table directly

df = pd.read_sql_table('sales', conn)Parquet Files

Parquet is a columnar storage format, efficient for large datasets:

# Requires pyarrow or fastparquet

# pip install pyarrow

df = pd.read_parquet('data.parquet')

# Read specific columns

df = pd.read_parquet('data.parquet', columns=['Name', 'Age'])Clipboard

Read data from clipboard:

# Copy data from Excel or browser, then:

df = pd.read_clipboard()Useful for quick data imports during interactive analysis.

Handling Common Issues

Large Files

For files too large for memory:

# Read in chunks

chunk_size = 10000

chunks = []

for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):

# Process chunk

processed = chunk[chunk['value'] > 0]

chunks.append(processed)

df = pd.concat(chunks, ignore_index=True)Or read with iterator:

# Create iterator

reader = pd.read_csv('large_file.csv', iterator=True)

# Read first chunk

chunk = reader.get_chunk(1000)Malformed Files

Handle files with inconsistent columns:

# Skip bad lines

df = pd.read_csv('messy_data.csv', on_bad_lines='skip')

# Or warn

df = pd.read_csv('messy_data.csv', on_bad_lines='warn')Handle extra whitespace:

# Strip whitespace from strings

df = pd.read_csv('data.csv', skipinitialspace=True)Quote Characters

Handle quoted values:

# Values are quoted

df = pd.read_csv('data.csv', quotechar='"')

# Different quote character

df = pd.read_csv('data.csv', quotechar="'")

# No quoting

df = pd.read_csv('data.csv', quoting=3) # QUOTE_NONEDecimal Separators

Handle European number format (comma as decimal):

# Comma as decimal separator

df = pd.read_csv('data.csv', decimal=',')Writing Data: Saving DataFrames

After processing, save results:

Write CSV

# Write to CSV

df.to_csv('output.csv', index=False)

# With specific options

df.to_csv('output.csv',

sep=';',

encoding='utf-8',

index=False,

columns=['Name', 'Age'])Write Excel

# Write to Excel

df.to_excel('output.xlsx', index=False)

# Multiple sheets

with pd.ExcelWriter('output.xlsx') as writer:

df1.to_excel(writer, sheet_name='Sales', index=False)

df2.to_excel(writer, sheet_name='Inventory', index=False)Write JSON

# Write to JSON

df.to_json('output.json', orient='records', indent=2)

# Different orientations

df.to_json('output.json', orient='split')

df.to_json('output.json', orient='index')Write Parquet

# Write to Parquet

df.to_parquet('output.parquet', index=False)

# With compression

df.to_parquet('output.parquet', compression='gzip')Best Practices for Data Loading

Follow these patterns for efficient data loading:

Test with small samples first:

# Preview file structure

df_sample = pd.read_csv('large_file.csv', nrows=5)

print(df_sample.head())

print(df_sample.dtypes)

# Then read full file with correct settings

df = pd.read_csv('large_file.csv',

dtype={'ID': str, 'Amount': float},

parse_dates=['Date'])Specify data types when possible:

# Saves memory and prevents misinterpretation

df = pd.read_csv('data.csv',

dtype={'ID': str,

'Category': 'category',

'Value': float})Handle missing values explicitly:

# Be explicit about what represents missing

df = pd.read_csv('data.csv',

na_values=['NA', 'N/A', 'null', '-', '?'])Use compression for storage:

# Save compressed

df.to_csv('data.csv.gz', compression='gzip', index=False)

# Read compressed (automatic)

df = pd.read_csv('data.csv.gz')Document data loading:

def load_sales_data(filepath):

"""

Load sales data from CSV file.

Parameters

----------

filepath : str

Path to CSV file

Returns

-------

DataFrame

Cleaned sales data

"""

df = pd.read_csv(

filepath,

parse_dates=['Date'],

dtype={'ProductID': str, 'Quantity': int},

na_values=['NA', '-']

)

return dfTroubleshooting Common Errors

UnicodeDecodeError:

# Try different encodings

for encoding in ['utf-8', 'latin-1', 'cp1252', 'iso-8859-1']:

try:

df = pd.read_csv('data.csv', encoding=encoding)

print(f"Success with {encoding}")

break

except UnicodeDecodeError:

continueFileNotFoundError:

import os

# Check if file exists

if os.path.exists('data.csv'):

df = pd.read_csv('data.csv')

else:

print("File not found")

# Use absolute path

df = pd.read_csv('/full/path/to/data.csv')ParserError (inconsistent columns):

# Skip bad lines

df = pd.read_csv('data.csv', on_bad_lines='skip')

# Or inspect problematic rows

with open('data.csv') as f:

for i, line in enumerate(f):

if line.count(',') != expected_columns:

print(f"Line {i}: {line}")MemoryError:

# Read in chunks

chunks = []

for chunk in pd.read_csv('large.csv', chunksize=10000):

# Process and reduce size

processed = chunk[chunk['important_col'].notna()]

chunks.append(processed)

df = pd.concat(chunks, ignore_index=True)Conclusion

Reading data from files represents the first step in virtually every data science project, making proficiency with pandas’ I/O functions essential. Understanding how to load CSV, Excel, JSON, and other formats with appropriate options for handling variations, missing values, encodings, and data types enables you to start analyzing data immediately rather than struggling with parsing issues. The flexibility pandas provides through parameters like dtype, parse_dates, usecols, and encoding options handles virtually any file format variation you encounter.

The patterns covered here, specifying data types, parsing dates, handling missing values, reading subsets, and managing large files, apply across all formats pandas supports. While specific parameter names might vary between read_csv(), read_excel(), and read_json(), the underlying concepts remain consistent. This consistency makes learning new formats easy once you master the fundamental patterns with common formats.

As you work with real datasets, you will encounter countless variations in how files are structured and formatted. Rather than fighting with manual parsing, leverage pandas’ extensive options to handle these variations cleanly. The time invested learning these reading functions thoroughly pays dividends in every project by eliminating data loading as a source of frustration and enabling you to focus on analysis rather than parsing. Practice loading different file types, experiment with various options, and build intuition for troubleshooting common issues. With solid data loading skills, you can confidently tackle any dataset regardless of its source format.