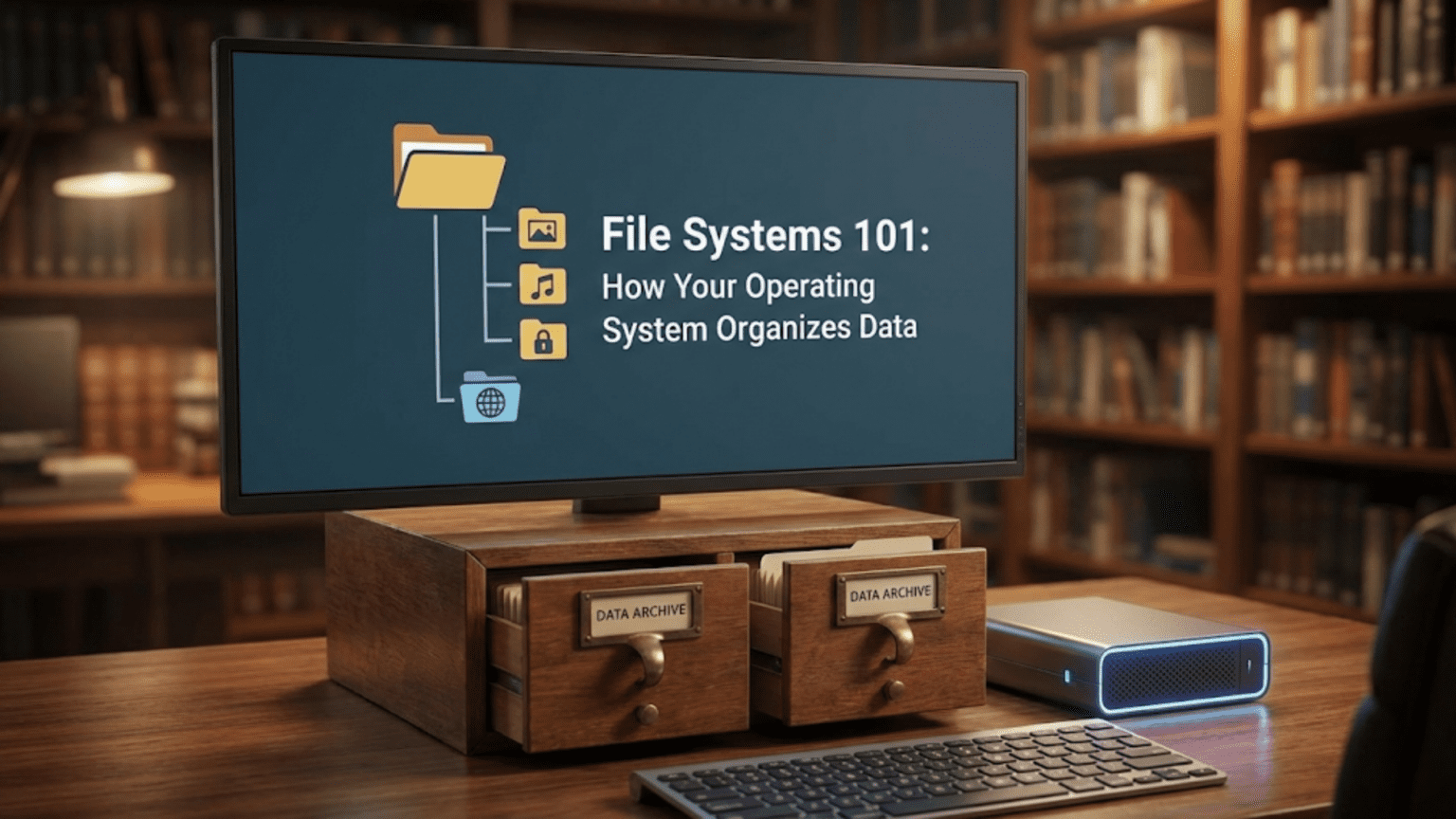

Understanding the Structure That Makes Digital Storage Possible

Every time you save a document, download a photo, or install an application, you’re relying on one of the most fundamental yet invisible aspects of computing: the file system. This sophisticated organizational framework transforms raw storage hardware—spinning magnetic platters in hard drives or electrical charges in solid-state drives—into the familiar hierarchy of folders and files you interact with daily. Without file systems, your storage devices would be like libraries without any organizational system, where finding a specific book among millions would be nearly impossible.

File systems serve as the organizational logic that operating systems use to store and retrieve data on storage devices. They define how data gets divided into discrete files, how those files are named and located, how space gets allocated and reclaimed, and how the system ensures data integrity. Different file systems take different approaches to these challenges, each with distinct characteristics, strengths, and limitations. Understanding how file systems work reveals why storage behaves the way it does, why certain operations take time while others happen instantly, and how your operating system keeps track of potentially millions of files across multiple storage devices.

The concept might seem simple on the surface—organize files into folders, save data when asked, retrieve it when needed. The reality involves extraordinary complexity hidden behind that simple interface. File systems must handle files ranging from tiny text documents to massive video files spanning hundreds of gigabytes. They must maintain consistency even when power fails unexpectedly. They must provide security by controlling who can access which files. They must enable multiple programs to access the same storage simultaneously without conflicts. They must optimize performance despite the vast speed differences between modern processors and storage hardware. All these challenges and more shape how file systems are designed and implemented.

The Fundamental Concepts: Files, Directories, and Metadata

At the heart of every file system lies the concept of a file—a named collection of related data stored as a unit. Files can contain anything: text documents, images, videos, program code, configuration settings, or data in proprietary formats. The file system doesn’t care about content; it simply stores and retrieves whatever bytes you give it. This content-agnostic approach allows file systems to handle any type of data without needing to understand what that data represents.

Every file has associated metadata: information about the file rather than the file’s actual content. The most obvious metadata is the filename, which provides a human-readable identifier for the file. File systems impose various rules on filenames: maximum length, allowed characters, case sensitivity. DOS and early Windows used the short 8.3 names of the FAT file system (up to eight characters, a dot, and an extension of up to three characters); later versions added long filenames of up to 255 characters. Common Linux file systems such as ext4 allow names of up to 255 bytes and treat them as case-sensitive, while Windows is case-insensitive but case-preserving by default.

Beyond names, file systems store numerous other metadata attributes. The file size tracks how many bytes the file contains. Timestamps record when the file was created, last modified, and last accessed. Permissions define which users or groups can read, write, or execute the file. Some file systems include additional attributes like archive flags, hidden status, read-only status, or extended attributes for application-specific metadata. This metadata resides in special data structures maintained by the file system, separate from the file’s actual content.
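As a small illustration of the metadata described above, the following sketch asks the operating system for a few attributes of a throwaway file using Python's standard `os.stat` call. The helper name `describe` and the choice of fields are assumptions made for this example; which attributes are available varies by platform and file system.

```python
import os
import stat
import tempfile
import time

def describe(path):
    """Return a few metadata fields the file system stores for path."""
    info = os.stat(path)
    return {
        "size_bytes": info.st_size,
        "modified": time.ctime(info.st_mtime),
        "accessed": time.ctime(info.st_atime),
        "permissions": stat.filemode(info.st_mode),  # e.g. "-rw-------"
    }

# Create a throwaway file so the example is self-contained.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello")
    sample_path = tmp.name

sample_metadata = describe(sample_path)
print(sample_metadata)
os.remove(sample_path)
```

None of these values come from the file's content; they are read from the file system's own records about the file.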

Directories, also called folders, provide hierarchical organization for files. Conceptually, directories contain files and other directories, creating a tree-like structure. However, directories are actually just special files that store lists of names and references to other files and directories. When you open a folder, the file system reads the directory file to determine what items it contains and retrieves metadata for each item to display. This directory structure allows you to organize thousands or millions of files into logical groupings that make them easier to find and manage.

The file system maintains a special root directory that serves as the starting point for the entire directory hierarchy. On Unix-like systems including Linux and macOS, this is simply “/” (forward slash), with all other directories and files existing somewhere beneath this root. Windows uses multiple roots, one for each drive letter—C:, D:, and so on—a design choice inherited from early DOS. Understanding this fundamental difference helps explain why file paths look different between operating systems: /home/user/documents on Linux versus C:\Users\user\Documents on Windows.

File systems use paths to specify the location of files within the directory hierarchy. An absolute path specifies the complete location from the root: /home/user/photo.jpg or C:\Users\user\photo.jpg. A relative path specifies location relative to some current directory: if you’re already in the user directory, simply photo.jpg refers to the same file. The file system resolves these paths by traversing the directory tree, following each directory name in sequence until reaching the final file.
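Path resolution can be sketched with a toy in-memory tree. The `TREE` dictionary and the `resolve` function below are hypothetical stand-ins for on-disk directories, and the sketch deliberately ignores `..`, symbolic links, and permissions.

```python
# Toy directory tree: a dict is a directory, a string stands in for file content.
TREE = {
    "home": {
        "user": {
            "photo.jpg": "<image bytes>",
            "documents": {},
        }
    }
}

def resolve(path, cwd="/"):
    """Walk TREE one component at a time and return the entry at path."""
    # A relative path is interpreted starting from the current directory.
    full = path if path.startswith("/") else cwd.rstrip("/") + "/" + path
    node = TREE
    for name in full.split("/"):
        if name in ("", "."):
            continue  # skip empty components and "current directory"
        if not isinstance(node, dict) or name not in node:
            raise FileNotFoundError(full)
        node = node[name]
    return node

# The absolute and the relative form reach the same entry.
print(resolve("/home/user/photo.jpg"))
print(resolve("photo.jpg", cwd="/home/user"))
```

Each loop iteration corresponds to one directory lookup, which is why deep paths cost more to resolve than shallow ones when nothing is cached.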

Hard links and symbolic links add complexity to this seemingly simple model. A hard link creates a second directory entry pointing to the same file data—the file effectively has two names, and deleting either name doesn’t remove the file until both are deleted. Symbolic links, also called symlinks or soft links, are special files that contain paths to other files—they’re essentially shortcuts that the file system automatically follows when accessed. These linking capabilities enable sophisticated file organization without duplicating data.
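The difference between the two link types is easy to observe. The sketch below assumes a POSIX-like system and a file system that supports both link types (on Windows, creating symbolic links may need extra privileges); the file names are arbitrary.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as workdir:
    original = os.path.join(workdir, "original.txt")
    hard = os.path.join(workdir, "hard.txt")
    soft = os.path.join(workdir, "soft.txt")

    with open(original, "w") as f:
        f.write("shared data")

    os.link(original, hard)      # second name for the same file data
    os.symlink(original, soft)   # small special file holding a path

    same_file = os.path.samefile(original, hard)
    link_count = os.stat(original).st_nlink  # number of names for this file

    os.remove(original)          # remove one of the two names
    with open(hard) as f:
        hard_content = f.read()  # data is still reachable via the other name
    soft_is_dangling = not os.path.exists(soft)  # symlink target is gone

print(same_file, link_count, hard_content, soft_is_dangling)
```

After the original name is removed, the hard link still reads the data, while the symbolic link dangles because it only stored a path.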

Space Allocation: How File Systems Manage Storage

When you save a file, the file system must find space on the storage device to hold that data. This space allocation problem is more complex than it might initially appear. Storage devices expose their capacity as fixed-size sectors (traditionally 512 bytes, 4 kilobytes on many newer drives), and file systems group these into allocation units called blocks or clusters, typically 4 kilobytes each on modern systems. The file system allocates space in multiples of these blocks, meaning a 1-byte file generally still consumes one full block of storage. This granularity represents a trade-off: smaller blocks waste less space for tiny files but require more metadata to track, while larger blocks waste more space but simplify management.
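The cost of block granularity is simple arithmetic. This sketch assumes the 4 KB block size mentioned above; `blocks_needed` and `slack_bytes` are names invented for the example.

```python
BLOCK_SIZE = 4096  # bytes; the 4 KB figure from the text

def blocks_needed(file_size, block_size=BLOCK_SIZE):
    """Whole blocks required to hold file_size bytes (ceiling division)."""
    return -(-file_size // block_size)

def slack_bytes(file_size, block_size=BLOCK_SIZE):
    """Allocated but unused bytes in the file's last block."""
    return blocks_needed(file_size, block_size) * block_size - file_size

for size in (1, 4096, 4097):
    print(size, blocks_needed(size), slack_bytes(size))
```

A 1-byte file leaves 4095 bytes of its block unused, and a file one byte larger than a block needs a second block.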

The file system maintains data structures that track which blocks are in use and which are available for allocation. Different file systems use different approaches. Bitmap allocation uses a large bitmap where each bit represents one block—a 1 means the block is in use, a 0 means it’s available. This approach is simple and makes finding free space straightforward, but the bitmap itself can become large on massive storage devices. Free lists maintain linked lists of available blocks, efficient for allocation but potentially slower for finding contiguous free space.
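A bitmap allocator fits in a few lines. The `BlockBitmap` class below is a teaching sketch, not the layout of any particular file system: it scans linearly for the first free bit, whereas real implementations use faster search strategies.

```python
class BlockBitmap:
    """One bit per block: 1 = in use, 0 = free."""

    def __init__(self, total_blocks):
        self.total_blocks = total_blocks
        self.bits = bytearray((total_blocks + 7) // 8)  # 8 blocks per byte

    def is_used(self, block):
        return bool(self.bits[block // 8] & (1 << (block % 8)))

    def allocate(self):
        """Mark the first free block as used and return its number."""
        for block in range(self.total_blocks):
            if not self.is_used(block):
                self.bits[block // 8] |= 1 << (block % 8)
                return block
        raise OSError("no free blocks")

    def free(self, block):
        """Clear the block's bit so it can be allocated again."""
        self.bits[block // 8] &= ~(1 << (block % 8)) & 0xFF

bitmap = BlockBitmap(16)
first = bitmap.allocate()
second = bitmap.allocate()
bitmap.free(first)
print(first, second, bitmap.allocate())
```

Sixteen blocks need only two bytes of bitmap, which shows why the structure stays compact until volumes become very large.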

Extent-based allocation, used by modern file systems like ext4, APFS, and NTFS, groups contiguous blocks into extents—ranges of sequential blocks. Instead of tracking each 4KB block individually, the file system tracks larger extents like “blocks 1000-1999 are allocated to this file.” This dramatically reduces metadata overhead for large files, allows faster sequential access, and simplifies many operations. A large video file might be represented by just a few extents instead of thousands of individual block entries.
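To see how much metadata extents save, the following sketch collapses a sorted list of block numbers into `(start, length)` pairs. The function name `to_extents` and the tuple representation are assumptions for illustration; real file systems store extents in their own on-disk formats.

```python
def to_extents(blocks):
    """Collapse a sorted list of block numbers into (start, length) extents."""
    extents = []
    for block in blocks:
        if extents and block == extents[-1][0] + extents[-1][1]:
            # Block continues the current run: grow the last extent by one.
            start, length = extents[-1]
            extents[-1] = (start, length + 1)
        else:
            # Gap in the numbering: start a new extent.
            extents.append((block, 1))
    return extents

contiguous = to_extents(list(range(1000, 2000)))
fragmented = to_extents([1, 2, 3, 7, 8])
print(contiguous)
print(fragmented)
```

A thousand contiguous blocks become a single entry, while a fragmented file needs one entry per contiguous run.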

Fragmentation occurs when files can’t be stored in contiguous blocks. When you save a file and insufficient contiguous space exists, the file system must split it across multiple non-contiguous regions. The file might have its first megabyte in blocks 1000-1255, the next megabyte in blocks 5000-5255, and so on. Fragmentation degrades performance, particularly on traditional hard drives where the read head must physically move between different disk locations. Solid-state drives suffer less from fragmentation since they have no moving parts, but it still imposes some overhead.

File systems employ various strategies to minimize fragmentation. Allocating space in larger chunks reduces the number of fragments. Clustering related files together improves performance when those files are accessed together. Reserving blocks near existing file data for future expansion allows files to grow without immediate fragmentation. Some file systems perform online defragmentation, automatically relocating file data to consolidate fragments during normal operation. Windows historically required periodic manual defragmentation, while Linux file systems like ext4 are designed to resist fragmentation naturally.

When you delete a file, the file system must reclaim its storage space for reuse. This typically doesn’t immediately erase the file’s data—it simply marks the blocks as available and removes the directory entry. The actual data remains on disk until those blocks get reallocated to another file. This behavior enables file recovery tools to sometimes resurrect deleted files, but also means that truly removing sensitive data requires secure deletion tools that overwrite blocks before freeing them.

Space allocation also involves managing disk quotas, limiting how much storage individual users or groups can consume. The file system tracks space used by each user and prevents allocations that would exceed their quota. This feature is essential in multi-user environments like servers or enterprise systems where storage is a shared resource that must be distributed fairly.

File System Structures: Inodes, FAT, and Master File Tables

Different file systems use different data structures to organize files and track their locations on disk. Understanding these structures reveals why file systems behave differently and explains various quirks and limitations. Three important examples—inodes from Unix file systems, the File Allocation Table from FAT file systems, and the Master File Table from NTFS—illustrate different approaches to the same fundamental problems.

Unix-style file systems including ext4 on Linux use inodes (index nodes) as their core organizational structure. Each file and directory has an associated inode containing all the file’s metadata: size, timestamps, permissions, and most importantly, pointers to the data blocks containing the file’s content. The inode doesn’t contain the filename—that’s stored in directory entries that map names to inode numbers. This separation means a file’s content and attributes exist independently of its name, allowing hard links where multiple names reference the same inode.

An inode contains a fixed number of block pointers. The first several pointers directly reference data blocks; for small files, these direct pointers are sufficient. For larger files, the inode includes an indirect pointer that points to a block containing additional block pointers, allowing much larger files. A double-indirect pointer points to a block of indirect pointers, and a triple-indirect pointer adds another level. Each level multiplies the number of reachable blocks, so the maximum file size is very large but finite (on the order of terabytes with 4 KB blocks and 32-bit block numbers), and reaching blocks deep in the pointer chain costs extra reads.
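The reach of this pointer scheme can be worked out directly. The sketch below assumes a classic layout of 12 direct pointers, 4 KB blocks, and 4-byte block pointers; these numbers are illustrative assumptions, and real file systems impose additional limits of their own.

```python
def max_file_size(block_size=4096, pointer_size=4, direct=12):
    """Bytes addressable through direct, single, double and triple indirection."""
    per_block = block_size // pointer_size  # pointers that fit in one block
    blocks = (
        direct            # direct pointers in the inode
        + per_block       # single indirect
        + per_block ** 2  # double indirect
        + per_block ** 3  # triple indirect
    )
    return blocks * block_size

size = max_file_size()
print(size, "bytes, about", round(size / 2**40, 2), "TiB")
```

Under these assumptions the triple-indirect level dominates the total, contributing 2**42 bytes (4 TiB) on its own.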

The FAT (File Allocation Table) file system, used by DOS and still common on USB drives and SD cards, takes a completely different approach. The file system reserves the beginning of the storage device for the File Allocation Table itself—a large array where each entry corresponds to one cluster (group of blocks) on the disk. Directory entries contain the cluster number where a file starts. The FAT entry for that cluster contains either the next cluster number if the file continues or a special end-of-chain marker if that’s the last cluster. Following these chains allows the file system to traverse fragmented files, though performance suffers when files spread across many non-contiguous clusters.
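Following a cluster chain looks like walking a linked list stored in an array. In this sketch the table is a small dictionary and `END_OF_CHAIN` is a stand-in value; actual FAT variants reserve specific bit patterns for the end-of-chain marker.

```python
END_OF_CHAIN = -1  # stand-in for FAT's reserved end-of-chain marker

def cluster_chain(fat, start_cluster):
    """Return the list of clusters a file occupies, in order."""
    chain = []
    seen = set()
    cluster = start_cluster
    while cluster != END_OF_CHAIN:
        if cluster in seen:
            raise ValueError("loop in cluster chain (corrupt table)")
        seen.add(cluster)
        chain.append(cluster)
        cluster = fat[cluster]  # the table entry names the next cluster
    return chain

# Two files: one fragmented (2 -> 3 -> 7), one contiguous (4 -> 5).
fat = {2: 3, 3: 7, 7: END_OF_CHAIN, 4: 5, 5: END_OF_CHAIN}
print(cluster_chain(fat, 2))
print(cluster_chain(fat, 4))
```

Reaching the last cluster of a file requires visiting every earlier table entry, which is one reason random access into large FAT files is slow.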

FAT’s simplicity makes it universal—virtually every operating system can read FAT file systems, making it ideal for removable media. However, FAT has significant limitations: poor support for large files and volumes, no built-in security or permissions, limited metadata, and no journaling for crash protection. FAT32, the most common FAT variant, can’t represent files larger than 4 gigabytes, a serious limitation in an era of high-definition video. ExFAT addressed some limitations for flash drives but still lacks advanced features found in modern file systems.

NTFS (New Technology File System), used by modern Windows, organizes everything around the Master File Table (MFT). The MFT is a database containing records for every file and directory, with each record storing file attributes and data location information. For small files, NTFS can store the entire file content directly in the MFT record, eliminating the need for separate data blocks. Larger files use data runs—compressed descriptions of extent locations—making NTFS efficient for both tiny and enormous files.

NTFS includes sophisticated features like file compression, encryption, access control lists for detailed permissions, change journals that track file modifications, volume shadow copies for point-in-time snapshots, and sparse file support where large regions of zeros don’t consume actual disk space. These features make NTFS powerful for enterprise environments but also more complex than simpler file systems.

Modern Linux file systems like ext4 extend the inode concept with extent trees for efficient large file support, journaling for crash recovery, delayed allocation to reduce fragmentation, and multi-block allocation to improve performance. Apple’s APFS (Apple File System) uses B-tree structures for metadata, enabling features like snapshots, clones, space sharing, and crash protection while optimizing for flash storage characteristics.

Journaling and Data Integrity

One of the most significant advances in file system design is journaling—a technique that dramatically improves reliability when systems crash or lose power unexpectedly. Understanding journaling reveals why modern systems recover gracefully from crashes while older systems often required lengthy disk checks and sometimes suffered data corruption.

File system operations aren’t atomic—they require multiple separate steps. Creating a file requires allocating an inode, updating the directory, allocating data blocks, updating the free space bitmap, and writing actual data. If power fails midway through this sequence, the file system can be left in an inconsistent state—perhaps the inode is allocated but not referenced by any directory, creating wasted space, or the directory points to an unallocated inode, creating potential corruption. Without journaling, the system must perform a full file system check (fsck on Linux, chkdsk on Windows) on reboot, scanning the entire disk to detect and repair inconsistencies—a process that can take hours on large drives.

Journaling file systems maintain a special journal or log area on disk. Before making changes to the file system metadata, they write descriptions of the intended changes to the journal. Only after the journal entry is safely written do they proceed with the actual modifications. If a crash occurs, the journal contains a record of operations that were in progress. On reboot, the file system replays these journal entries, completing operations that were interrupted. This journal replay takes only seconds regardless of disk size, dramatically reducing recovery time.
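The log-then-apply sequence described above can be sketched as a toy write-ahead journal. Everything here lives in memory, `JournaledStore` and the `crash_after` parameter are inventions for the example, and the sketch omits details real journals need, such as commit records that let recovery discard half-written journal entries.

```python
class JournaledStore:
    """Toy metadata store with a write-ahead journal (both kept in memory)."""

    def __init__(self):
        self.journal = []   # stands in for the on-disk journal area
        self.metadata = {}  # stands in for on-disk file system structures

    def update(self, changes, crash_after=None):
        # 1. Log the full set of intended changes first.
        self.journal.append(dict(changes))
        # 2. Apply them in place; crash_after simulates power loss midway.
        for applied, (key, value) in enumerate(changes.items()):
            if crash_after is not None and applied >= crash_after:
                return False  # "crash": the journal entry stays behind
            self.metadata[key] = value
        # 3. Everything is in place, so the journal entry can be dropped.
        self.journal.pop()
        return True

    def recover(self):
        """Replay logged changes; reapplying an applied change is harmless."""
        for changes in self.journal:
            self.metadata.update(changes)
        self.journal.clear()

store = JournaledStore()
create_file = {"inode 7": "allocated", "dir entry report.txt": "inode 7"}
store.update(create_file, crash_after=1)  # only the inode step completes
print(store.metadata)                     # inconsistent: inode without a name
store.recover()
print(store.metadata)                     # both steps are now in place
```

Recovery only reads the journal, not the whole disk, which is the reason replay time does not grow with volume size.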

Different journaling modes offer different trade-offs. Metadata journaling logs only changes to file system structures—inodes, directories, allocation bitmaps—providing consistency guarantees for the file system itself but not for file data. If a crash occurs while writing a file, the file system structure remains consistent but the file might contain a mixture of old and new data. Full data journaling logs both metadata and file content, guaranteeing complete consistency but with higher performance overhead since all data gets written twice—once to the journal and again to its final location. Many file systems allow users to choose journaling modes based on their needs.

Copy-on-write file systems like Btrfs and ZFS take a different approach to consistency. Instead of modifying data blocks in place, they write new versions of modified blocks to new locations and atomically update pointers to reference the new blocks. The old blocks remain valid until the transaction completes, providing automatic rollback if anything goes wrong. This approach naturally provides crash consistency without separate journaling and enables features like instant snapshots and efficient incremental backups.
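A minimal sketch of the copy-on-write idea follows. The `CowStore` class is hypothetical and far simpler than Btrfs or ZFS, which use trees of blocks rather than a flat mapping, but it shows the two essentials: data is never overwritten in place, and a single pointer swap publishes each change.

```python
class CowStore:
    """Toy copy-on-write store: blocks are written once and never modified."""

    def __init__(self):
        self.blocks = {}   # block id -> bytes
        self.next_id = 0
        self.root = {}     # live mapping: file name -> block id

    def _write_block(self, data):
        block_id = self.next_id
        self.next_id += 1
        self.blocks[block_id] = data
        return block_id

    def write(self, name, data):
        new_block = self._write_block(data)  # new data goes to a fresh block
        new_root = dict(self.root)           # copy the mapping, do not edit it
        new_root[name] = new_block
        self.root = new_root                 # one pointer swap publishes it

    def snapshot(self):
        """A snapshot is just a reference to the current (immutable) root."""
        return self.root

    def read(self, name, root=None):
        root = self.root if root is None else root
        return self.blocks[root[name]]

cow = CowStore()
cow.write("notes.txt", b"version 1")
snap = cow.snapshot()
cow.write("notes.txt", b"version 2")
print(cow.read("notes.txt"), cow.read("notes.txt", snap))
```

If a crash happened before the pointer swap, the old root would still describe a complete, consistent state, and taking a snapshot costs nothing beyond keeping a reference.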

File systems also implement various checksumming schemes to detect data corruption. Advanced file systems like ZFS and Btrfs checksum both metadata and data, verifying integrity when reading and detecting silent corruption caused by hardware failures, cosmic rays flipping bits in memory, or other rare but real phenomena. When reading from mirrored or RAID configurations, checksums allow the file system to detect which copy is correct and automatically repair corrupted data from intact copies.
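Checksum-driven repair can be sketched with two in-memory "mirrors". CRC-32 from Python's `zlib` module is used here only as a convenient stand-in for whatever checksum a real file system uses, and the function names are assumptions for this example. Real systems also store the checksum apart from the data it protects, which this toy does not model.

```python
import zlib

def store(data):
    """Keep a copy of the data alongside its checksum."""
    return {"data": bytearray(data), "checksum": zlib.crc32(data)}

def is_intact(copy):
    return zlib.crc32(copy["data"]) == copy["checksum"]

def read_with_repair(mirrors):
    """Return data from the first intact copy and repair any corrupt ones."""
    good = next((m for m in mirrors if is_intact(m)), None)
    if good is None:
        raise IOError("all copies are corrupt")
    for m in mirrors:
        if not is_intact(m):
            m["data"][:] = good["data"]  # overwrite the bad copy
    return bytes(good["data"])

mirrors = [store(b"payload"), store(b"payload")]
mirrors[0]["data"][0] ^= 0xFF            # simulate silent corruption
print(read_with_repair(mirrors))
print(all(is_intact(m) for m in mirrors))
```

Without the checksum, the reader would have two differing copies and no way to tell which one is correct.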

Performance Optimization and Caching

File systems employ numerous techniques to maximize performance despite the vast speed differences between processors and storage. While a processor might execute billions of instructions per second, traditional hard drives complete only hundreds of random operations per second. Even fast solid-state drives have access latencies orders of magnitude higher than RAM. File systems bridge this performance gap through caching, prefetching, and careful optimization of access patterns.

The operating system maintains a page cache or buffer cache—a region of RAM containing recently accessed file data. When a program reads from a file, the file system first checks if the requested data exists in cache. If so, it returns the cached data instantly without accessing the disk. If not, it reads from disk, adds the data to cache, and returns it. Subsequent accesses to the same data find it in cache, eliminating slow disk operations. The cache also buffers writes, allowing programs to continue immediately while the file system writes data to disk in the background.
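The read path through a cache can be sketched as follows. The least-recently-used eviction policy, the `PageCache` class, and the `read_from_disk` callback are assumptions chosen for brevity; operating systems use more elaborate replacement policies.

```python
from collections import OrderedDict

class PageCache:
    """Toy read cache with least-recently-used eviction."""

    def __init__(self, read_from_disk, capacity=3):
        self.read_from_disk = read_from_disk  # slow path, supplied by caller
        self.capacity = capacity
        self.pages = OrderedDict()            # page number -> data
        self.hits = 0
        self.misses = 0

    def read(self, page_number):
        if page_number in self.pages:
            self.hits += 1
            self.pages.move_to_end(page_number)  # mark as recently used
            return self.pages[page_number]
        self.misses += 1
        data = self.read_from_disk(page_number)
        self.pages[page_number] = data
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)       # evict least recently used
        return data

disk_reads = []

def slow_disk_read(page_number):
    disk_reads.append(page_number)  # record every trip to the "disk"
    return f"contents of page {page_number}"

cache = PageCache(slow_disk_read, capacity=2)
for page in (1, 1, 2, 3, 1):
    cache.read(page)
print(disk_reads, cache.hits, cache.misses)
```

The second read of page 1 is served from memory, but by the final read page 1 has been evicted, so it goes back to the disk.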

Read-ahead or prefetching anticipates future access patterns. When you read sequentially through a file, the file system notices this pattern and automatically reads additional data beyond what you requested, betting that you’ll want it soon. This speculation masks disk latency—by the time you request the next chunk of data, it’s already in cache. Smart prefetching algorithms detect various access patterns—sequential reads, strided access, reverse sequential—and adapt accordingly.

Write-back caching delays writes to disk, batching multiple operations together for efficiency. When you save a file, the data initially goes only to cache while the file system continues with other work. Later, the file system flushes dirty cache pages—modified data that hasn’t been written to disk yet—potentially combining multiple operations into more efficient larger writes. This improves performance dramatically but creates a risk: if power fails before dirty pages are flushed, changes are lost. File systems must carefully balance write-back delays against data safety, providing periodic automatic flushing and forced flushes for critical operations.
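Applications that cannot tolerate losing a write can request a forced flush. The sketch below uses Python's standard `flush` and `os.fsync` calls; the helper name `durable_write` is an assumption, and how strictly the hardware honors the flush depends on the drive and its configuration.

```python
import os
import tempfile

def durable_write(path, data):
    """Write data and ask the OS to push it to the device before returning."""
    with open(path, "wb") as f:
        f.write(data)         # lands in Python's buffer first
        f.flush()             # Python buffer -> OS page cache
        os.fsync(f.fileno())  # OS page cache -> storage device

with tempfile.TemporaryDirectory() as workdir:
    target = os.path.join(workdir, "important.dat")
    durable_write(target, b"must survive a power cut")
    with open(target, "rb") as f:
        written = f.read()
print(written)
```

The call is slower than an ordinary write because it waits for the storage device, which is exactly the trade-off write-back caching normally avoids.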

Directory caching maintains recently accessed directory contents in memory, making directory listings and file lookups fast. Name caches remember the translations from paths to inodes, avoiding repeated directory traversals. These caches make navigation through deep directory hierarchies efficient even though conceptually each path component requires reading a different directory.

File systems also optimize physical data layout. Placing directories and their files in nearby disk locations reduces seek times. Allocating related files contiguously enables efficient sequential access. Reserving space near files for future growth accommodates expansion without fragmentation. These layout optimizations primarily benefit traditional hard drives with moving read heads, though they provide modest benefits for solid-state drives as well.

For solid-state drives, file systems implement additional optimizations. TRIM commands inform the drive which blocks are no longer in use, allowing the drive to erase them in advance and maintain performance. Alignment of partitions and allocations to the drive’s physical page size ensures efficient access. Minimizing unnecessary writes extends drive lifetime since flash memory has limited write endurance. Modern file systems detect solid-state drives and adjust behavior accordingly, disabling optimizations that help hard drives but hurt SSDs while enabling SSD-specific features.

File System Types and Their Characteristics

Different file systems excel at different tasks, optimized for various usage scenarios. Understanding the major file systems helps explain why different operating systems use different formats and why you might choose one over another when formatting storage.

NTFS dominates Windows systems, providing enterprise features like permissions, encryption, compression, and journaling. It handles large files and volumes efficiently, supports advanced features like alternate data streams and reparse points, and integrates deeply with Windows security. However, write support outside Windows is uneven: macOS mounts NTFS volumes read-only by default, and Linux relies on separate drivers such as NTFS-3G. This limits its usefulness for shared external drives.

The ext family (ext2, ext3, ext4) serves as the standard for Linux systems. Ext4, the current version, provides journaling, large file support, extent-based allocation, and delayed allocation for performance. It’s mature, stable, and well-understood, making it the default choice for many Linux distributions. However, it lacks some advanced features found in newer file systems like built-in snapshots or transparent compression.

XFS excels at handling large files and high-performance workloads, traditionally used for media production and high-performance computing. It provides excellent scalability, efficient handling of parallel I/O operations, and sophisticated allocation strategies. However, recovering deleted files is more difficult than with ext4, and it has historically been considered less forgiving of power failures.

Btrfs (B-tree file system) brings advanced features to Linux: copy-on-write for data safety, built-in RAID support, transparent compression, subvolumes, snapshots, and checksumming for data integrity. It’s designed for modern needs but has historically suffered from maturity issues and complexity, though it continues improving and gaining adoption.

ZFS, originally from Solaris and now available on Linux and BSD systems, provides extraordinary data integrity through end-to-end checksumming, automatic corruption detection and repair, built-in volume management, snapshots, clones, and sophisticated caching. Its advanced features make it popular for network-attached storage and data centers, though it benefits from generous amounts of RAM, and its CDDL license is generally considered incompatible with the GPL, which keeps it out of the mainline Linux kernel.

APFS replaced HFS+ as macOS’s default file system, optimized for flash storage with features like space sharing, cloning, encryption, snapshots, and crash protection. It improves performance on SSDs while providing modern features that the aging HFS+ lacked. However, it’s proprietary to Apple systems with limited support elsewhere.

FAT32 and exFAT remain important for removable media despite their limitations. Their simplicity and universal support make them the de facto standard for USB drives, SD cards, and external drives that need to work across different operating systems. ExFAT addresses FAT32’s file size limits while maintaining broad compatibility, making it suitable for large removable media.

Understanding File System Limitations and Quotas

Every file system has limits—maximum file size, maximum volume size, maximum number of files, path length limits—determined by its design and data structures. Understanding these limits helps avoid surprises and explains certain error messages.

FAT32 cannot represent files of 4 GB or larger because it stores each file’s size in a 32-bit field, which tops out one byte short of 4 GB. This limit becomes problematic with modern high-definition video files that easily exceed 4 GB. NTFS’s 64-bit file sizes allow a theoretical maximum of 16 exabytes, far beyond any practical need for the foreseeable future. Most modern file systems use 64-bit addressing, effectively eliminating file size as a practical constraint.
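These limits follow directly from the width of the size field. A short calculation, with `max_unsigned` as a helper name invented for the example:

```python
def max_unsigned(bits):
    """Largest value an unsigned field of the given width can hold."""
    return 2**bits - 1

fat32_max_file_bytes = max_unsigned(32)
print(fat32_max_file_bytes)  # 4294967295, one byte short of 4 GiB
print(2**64 // 2**60)        # 16, i.e. a 64-bit size field spans 16 EiB
```

The figures here are binary units (GiB, EiB); the decimal "gigabyte" and "exabyte" labels used in everyday speech are approximations of the same limits.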

Path length limits vary by file system and operating system. Windows historically limited paths to 260 characters (the MAX_PATH constant in the Windows API), a constraint that persisted for decades and caused problems with deeply nested directories. Modern Windows can support longer paths with certain configuration changes. Linux typically limits a full path to 4096 bytes and each name within it to 255 bytes, which rarely causes practical issues.

File systems impose maximum numbers of files based on their structure. Ext4 fixes its inode count when the volume is formatted, with an upper bound of roughly four billion, so a volume can run out of inodes before it runs out of space. NTFS’s Master File Table grows as needed to accommodate virtually unlimited files. These limits rarely constrain modern usage, though embedded systems with small storage might need to consider them.

Quotas allow administrators to limit storage space per user or group, preventing any single user from monopolizing shared storage. File systems track space usage by owner and enforce quota limits, refusing allocations that would exceed limits. Some file systems support grace periods, allowing users to temporarily exceed quotas with warnings before enforcement begins.
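Quota enforcement amounts to bookkeeping on every allocation. The sketch below is a toy model: `QuotaTracker`, its soft and hard limits, and the `QuotaExceeded` exception are all assumptions for illustration, and it reports a warning above the soft limit instead of modeling time-based grace periods.

```python
class QuotaExceeded(Exception):
    """Raised when an allocation would push a user past the hard limit."""

class QuotaTracker:
    def __init__(self, soft_limit, hard_limit):
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.used = {}  # user name -> bytes currently charged

    def charge(self, user, nbytes):
        """Account for an allocation; return "ok" or "over soft limit"."""
        new_total = self.used.get(user, 0) + nbytes
        if new_total > self.hard_limit:
            raise QuotaExceeded(f"{user} would use {new_total} bytes")
        self.used[user] = new_total
        return "over soft limit" if new_total > self.soft_limit else "ok"

    def release(self, user, nbytes):
        """Give space back when the user's files are deleted or truncated."""
        self.used[user] = max(self.used.get(user, 0) - nbytes, 0)

quota = QuotaTracker(soft_limit=100, hard_limit=150)
print(quota.charge("alice", 90))
print(quota.charge("alice", 20))
```

A refused allocation leaves the user's recorded usage unchanged, so freeing space afterwards lets later writes succeed.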

The Future of File Systems

File system technology continues evolving to address new storage technologies, scale requirements, and use cases. Persistent memory technologies that retain data when powered off but offer speed approaching RAM challenge traditional file system assumptions. Systems designed for byte-addressable persistent memory treat storage more like memory than traditional block devices, enabling new application architectures.

Distributed and parallel file systems spread data across multiple machines, providing both redundancy and aggregate performance exceeding any single storage device. These systems, essential for cloud computing and high-performance computing, face challenges around consistency, performance, and failure handling that single-machine file systems never encounter.

File systems increasingly incorporate features traditionally found in separate layers—volume management, RAID, encryption—simplifying administration and enabling tighter integration. This trend toward integrated storage stacks continues as file systems become more sophisticated.

The humble file system, organizing your photos, documents, videos, and applications, represents decades of engineering solving extraordinarily complex problems. Every file you save, every directory you create, every program you run depends on file systems faithfully maintaining order in the chaos of digital storage. Understanding how they work provides insight into your computer’s behavior and appreciation for the elegant solutions to difficult problems that make modern computing reliable and efficient.