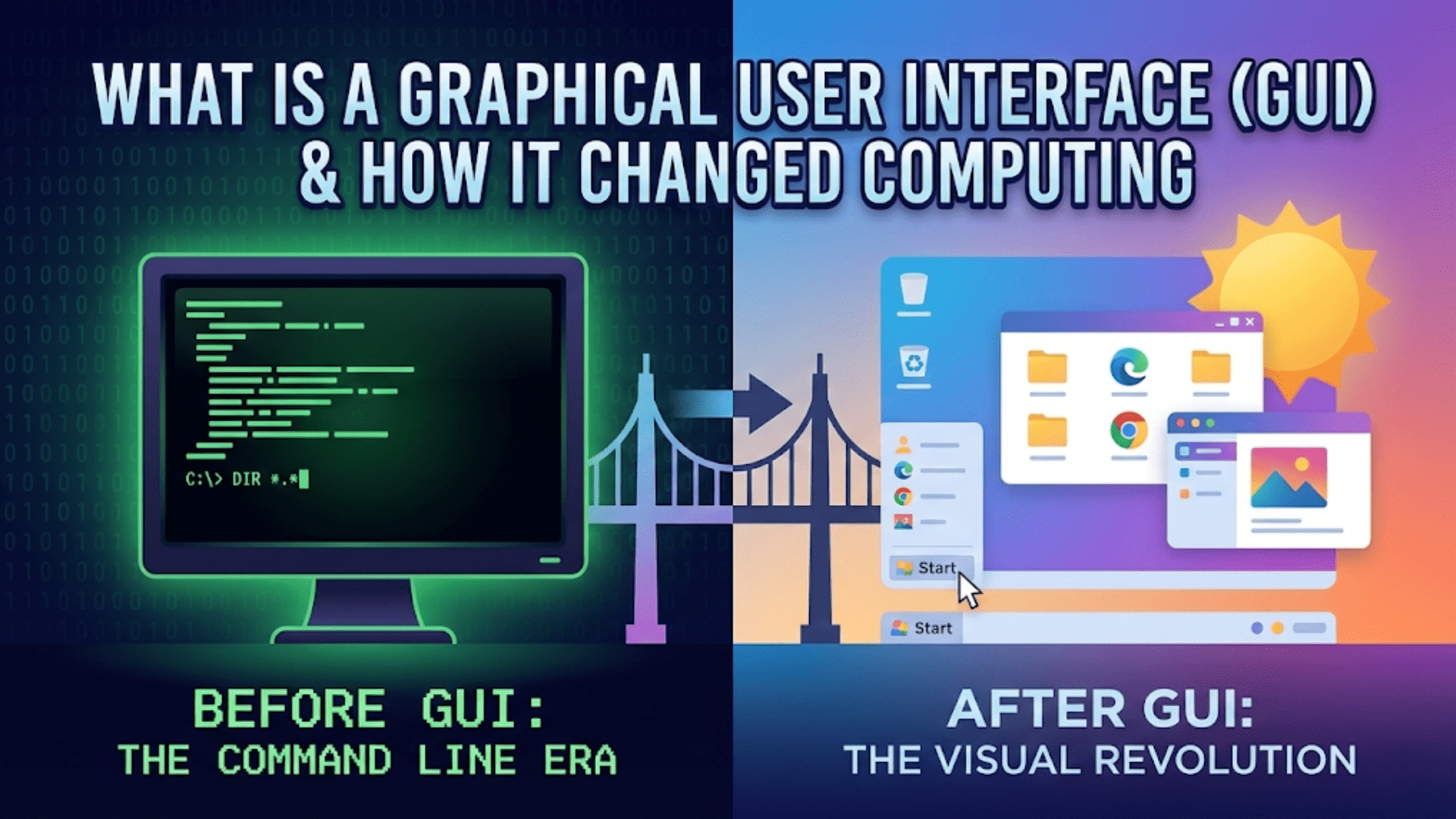

Deep learning, a subset of machine learning, has emerged as a transformative technology in the field of artificial intelligence (AI). Because it can learn directly from large amounts of data, deep learning powers innovations in computer vision, natural language processing, robotics, and beyond. Loosely inspired by the structure and function of the human brain, deep learning models can perform tasks that were once thought to require human intelligence.

In this article, we explore the fundamentals of deep learning, its architecture, and its applications across industries.

What Is Deep Learning?

Deep learning is a branch of machine learning that uses artificial neural networks (ANNs) with many layers to process data and make predictions. These networks, often called deep neural networks (DNNs), learn hierarchical representations of data. In image recognition, for example, early layers may respond to edges and simple shapes, while later layers combine these features to recognize whole objects.

How Is Deep Learning Different from Machine Learning?

While both deep learning and machine learning involve training models to make predictions, there are key distinctions:

- Feature Engineering: Traditional machine learning often requires manual feature engineering to extract meaningful inputs from raw data. Deep learning models, in contrast, learn useful features directly from the data.

- Scalability: Deep learning models tend to keep improving as datasets grow, whereas the performance of traditional machine learning models often plateaus.

- Complexity: Deep learning models can handle more complex patterns and relationships in data compared to traditional algorithms.

The Building Blocks of Deep Learning

1. Artificial Neural Networks (ANNs)

At the heart of deep learning are artificial neural networks, which are inspired by biological neural systems. An ANN consists of:

- Input Layer: Receives raw data as input.

- Hidden Layers: Process the data through interconnected nodes (neurons).

- Output Layer: Produces the final prediction or classification.

Each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function.
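As a minimal illustration, the sketch below computes a single neuron's output in plain Python. The function names and the example inputs, weights, and bias are hypothetical, chosen only so the arithmetic is easy to follow.

```python
def neuron(inputs, weights, bias, activation):
    """Compute activation(w . x + b) for a single neuron."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Weighted sum of the inputs plus the bias term (the pre-activation z)
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Non-linear activation applied to z
    return activation(z)


def relu(z):
    return max(0.0, z)


# Hypothetical example values, chosen only for illustration
out = neuron([1.0, 2.0], weights=[0.5, -0.25], bias=0.1, activation=relu)
print(out)  # 0.5 - 0.5 + 0.1 = 0.1
```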

2. Activation Functions

Activation functions introduce non-linearity, enabling neural networks to learn complex relationships. Common activation functions include:

- Sigmoid: Outputs values between 0 and 1, often used in binary classification.

- ReLU (Rectified Linear Unit): Outputs the input directly if positive; otherwise, it outputs zero.

- Tanh: Outputs values between -1 and 1. It is zero-centered and has a maximum derivative of 1 (versus 0.25 for Sigmoid), so it provides stronger gradients.
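The three activation functions above, plus the derivatives of Sigmoid and Tanh, fit in a few lines of standard-library Python. This is an illustrative sketch rather than any framework's implementation; in particular, `math.exp` here overflows for very large negative inputs, which production libraries guard against.

```python
import math


def sigmoid(z):
    # Squashes any real number into (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    # Passes positive inputs through unchanged, outputs zero otherwise
    return z if z > 0 else 0.0


def tanh(z):
    # Squashes into (-1, 1) and is centered at zero
    return math.tanh(z)


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # largest value is 0.25, at z = 0


def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2  # largest value is 1.0, at z = 0


for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 3), relu(z), round(tanh(z), 3))
print(sigmoid_grad(0.0), tanh_grad(0.0))  # 0.25 1.0
```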

3. Forward Propagation

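Forward propagation is the process of passing data through the network, layer by layer, to produce an output. In a fully connected network, each layer multiplies its input by a weight matrix, adds a bias, and applies an activation function; its output then becomes the input to the next layer.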
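A minimal sketch of forward propagation through a tiny fully connected network, using plain lists instead of a tensor library. The 2 -> 3 -> 1 layer sizes and every weight and bias are hypothetical, picked so the result can be checked by hand.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense_layer(x, W, b, activation):
    """One fully connected layer: activation(W x + b).

    W is a list of rows, one row of weights per output neuron.
    """
    return [activation(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]


def forward(x, layers):
    # Each layer transforms the previous layer's output in turn
    for W, b, act in layers:
        x = dense_layer(x, W, b, act)
    return x


# Hypothetical 2 -> 3 -> 1 network with hand-picked weights
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0], relu),
    ([[1.0, 1.0, 1.0]], [0.5], identity),
]
print(forward([2.0, 1.0], layers))  # hidden = [1.0, 1.5, 0.0] -> [3.0]
```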

4. Loss Function

The loss function quantifies the difference between the predicted output and the actual target. Common loss functions include:

- Mean Squared Error (MSE): Used for regression tasks.

- Cross-Entropy Loss: Common in classification tasks.
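For concreteness, here is a small sketch of the two loss functions above, with cross-entropy shown in its binary (two-class) form. The example targets and predictions are hypothetical, and the `eps` clipping is a common numerical safeguard rather than part of the mathematical definition.

```python
import math


def mse(y_true, y_pred):
    # Average of squared differences; used for regression
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    # y_true holds 0/1 labels; p_pred holds predicted probabilities of class 1.
    # eps keeps probabilities away from 0 and 1 so log() stays finite.
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


print(mse([3.0, 5.0], [2.0, 7.0]))              # (1 + 4) / 2 = 2.5
print(binary_cross_entropy([1, 0], [0.9, 0.2]))  # about 0.164
```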

5. Backpropagation

Backpropagation uses the chain rule to compute how much each weight and bias contributed to the loss; an optimizer then adjusts those parameters to reduce the loss. It involves:

- Calculating Gradients: Determining how each weight contributes to the error.

- Updating Weights: Using optimization algorithms like Gradient Descent to reduce the loss.
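Both steps can be seen end to end on the smallest possible network: one sigmoid neuron trained with binary cross-entropy. For that pairing, the chain rule simplifies to dL/dz = p - y, which is the gradient used below. This is a sketch under stated assumptions: the OR dataset, learning rate, and epoch count are hypothetical choices, and real frameworks compute these gradients automatically.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_neuron(data, lr=0.5, epochs=2000):
    """Full-batch gradient descent for one sigmoid neuron with binary cross-entropy.

    Chain rule for this loss: dL/dz = p - y, so dL/dw_i = (p - y) * x_i
    and dL/db = p - y (averaged over the dataset).
    """
    w = [0.0] * len(data[0][0])
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            # Forward pass
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # Backward pass: this example's contribution to the gradient
            err = p - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi / n
            grad_b += err / n
        # Gradient descent update: step against the averaged gradient
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical toy dataset: logical OR, which a single neuron can separate
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
w, b = train_logistic_neuron(data)
for x, y in data:
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    print(x, y, round(p, 3))
```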

Types of Deep Learning Architectures

Deep learning encompasses a variety of architectures tailored to different types of data and tasks:

1. Feedforward Neural Networks (FNNs)

- The simplest form of neural networks.

- Information flows in one direction, from the input layer to the output layer.

- Commonly used for basic classification and regression problems.

2. Convolutional Neural Networks (CNNs)

- Designed for image and video data.

- Use convolutional layers, which slide small learned filters across the input, to detect spatial hierarchies of features (e.g., edges, then textures, then object parts).

- Widely used in tasks like object detection, facial recognition, and medical imaging.
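The core operation of a convolutional layer can be sketched as a "valid" 2D convolution with stride 1. Like most deep learning libraries, this sketch computes cross-correlation (the kernel is not flipped). The tiny image and the hand-written vertical-edge kernel are hypothetical; in a real CNN the kernel weights are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation) with stride 1 on nested lists."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the image patch under it
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


# A 4x4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written vertical-edge detector; a CNN would learn such weights
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The strong response (2.0) appears only where the kernel straddles the edge, which is the sense in which early convolutional layers "detect edges".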

3. Recurrent Neural Networks (RNNs)

- Specialized for sequential data, such as time series or text.

- Maintain a hidden state that is passed from one time step to the next, allowing them to retain information from previous inputs. This makes them well suited to tasks like language modeling and speech recognition.

- Variants like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) use gating mechanisms to mitigate the vanishing-gradient problem that makes it hard for basic RNNs to learn long-range dependencies.
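A minimal sketch of a vanilla RNN with a scalar hidden state shows how the same weights are reused at every time step and how the hidden state carries information forward. The weights and the input sequence are hypothetical. Because the recurrent weight here is below 1, the influence of the first input fades step by step, which hints at why basic RNNs struggle with long-range dependencies.

```python
import math


def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Run a scalar vanilla RNN over a sequence.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x in xs:
        # The same w_x, w_h and b are applied at every time step
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights; a single non-zero input at step 0, then zeros
states = rnn_forward([1.0, 0.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(h, 4) for h in states])  # values shrink toward zero
```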

4. Transformer Networks

- Based on self-attention, which lets every position in a sequence weigh its relationship to every other position, Transformers are state-of-the-art for processing sequential data.

- Power advanced models like BERT and GPT, widely used in natural language processing (NLP).
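The heart of a Transformer layer is scaled dot-product attention, softmax(Q K^T / sqrt(d)) V. The sketch below simplifies by using the input X directly as the queries, keys, and values; a real Transformer first multiplies X by learned projection matrices (often written W_Q, W_K, W_V) and uses multiple attention heads. The three token embeddings are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X (a simplification)."""
    d = len(X[0])
    Q = K = V = X
    out = []
    for q in Q:
        # Similarity between this position's query and every key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(d)])
    return out


# Three hypothetical 2-dimensional token embeddings
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(X):
    print([round(v, 3) for v in row])
```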

5. Generative Adversarial Networks (GANs)

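- Consist of two networks trained against each other: a generator that produces synthetic samples and a discriminator that tries to distinguish them from real data.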

- Used for generating realistic images, videos, and other synthetic data.

Applications of Deep Learning

Deep learning’s ability to process unstructured data and uncover patterns has led to its adoption across various industries:

1. Healthcare

- Medical Imaging: Identifying diseases from X-rays, MRIs, and CT scans.

- Drug Discovery: Accelerating the development of new treatments through molecular analysis.

- Predictive Analytics: Anticipating patient outcomes using electronic health records.

2. Autonomous Systems

- Self-Driving Cars: Detecting and classifying objects in real time.

- Robotics: Enabling robots to perform complex tasks with precision.

3. Natural Language Processing

- Language Translation: Powering applications like Google Translate.

- Chatbots and Virtual Assistants: Enhancing customer support and personal interactions.

- Sentiment Analysis: Understanding customer opinions from text data.

4. Finance

- Fraud Detection: Identifying suspicious transactions in real time.

- Algorithmic Trading: Optimizing investment strategies using predictive models.

5. Entertainment

- Recommendation Systems: Suggesting movies, music, and shows based on user preferences.

- Content Creation: Generating realistic animations and effects.

Training Deep Learning Models

Training a deep learning model involves teaching the neural network to learn patterns from data. The process is iterative and requires careful design and monitoring.

1. Data Preparation

The quality of the training data plays a crucial role in model performance. Effective data preparation includes:

- Data Cleaning: Handling missing values, removing duplicates, and fixing inconsistencies.

- Normalization: Scaling input features to a comparable range (for example, zero mean and unit variance) so that gradient-based optimization converges more reliably.

- Data Augmentation: Generating new samples by applying transformations like rotation, flipping, or cropping to increase the diversity of training data.

- Splitting Data: Dividing the dataset into training, validation, and test sets to ensure robust evaluation.
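Two of these steps, splitting and normalization, interact in a way that is easy to get wrong: normalization statistics should be computed on the training split only, so no information leaks from validation or test data. A minimal sketch, assuming a single hypothetical numeric feature and 70/15/15 split fractions chosen for illustration:

```python
import random
import statistics


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle and split rows into train, validation and test lists."""
    rows = rows[:]                     # copy so the caller's list is untouched
    random.Random(seed).shuffle(rows)  # fixed seed for reproducibility
    n_test = round(len(rows) * test_frac)
    n_val = round(len(rows) * val_frac)
    test = rows[:n_test]
    val = rows[n_test:n_test + n_val]
    train = rows[n_test + n_val:]
    return train, val, test


def fit_standardizer(values):
    # Mean and standard deviation come from the TRAINING split only
    mean = statistics.fmean(values)
    std = statistics.pstdev(values) or 1.0  # guard against constant features
    return lambda v: (v - mean) / std


data = [float(x) for x in range(100)]  # hypothetical single feature
train, val, test = train_val_test_split(data)
scale = fit_standardizer(train)
train_scaled = [scale(v) for v in train]
val_scaled = [scale(v) for v in val]  # reuse the training statistics
print(len(train), len(val), len(test))  # 70 15 15
```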

2. Model Training Workflow

The typical workflow for training a deep learning model includes the following steps:

a. Initialize Model Parameters

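Weights are initialized randomly, often with schemes such as Xavier (Glorot) initialization or He initialization, which scale the initial values to the layer's size so that signals neither vanish nor explode at the start of training. Biases are commonly initialized to zero.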
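The two schemes differ mainly in how they choose the spread of the random values. This sketch uses the standard formulas: Xavier uniform draws from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)), and He normal draws from a normal distribution with standard deviation sqrt(2 / fan_in). The layer sizes are hypothetical, and some libraries use a truncated normal for He initialization, whereas this sketch uses a plain one.

```python
import math
import random


def xavier_uniform(fan_in, fan_out, rng):
    # Glorot/Xavier uniform, commonly paired with tanh or sigmoid activations
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


def he_normal(fan_in, fan_out, rng):
    # He (Kaiming) normal, commonly paired with ReLU activations
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(42)
W1 = xavier_uniform(256, 128, rng)  # hypothetical layer: 128 rows x 256 columns
W2 = he_normal(256, 128, rng)
b = [0.0] * 128                     # biases start at zero
```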

b. Forward Propagation

Input data passes through the network, layer by layer, to compute predictions.

c. Compute Loss

The loss function quantifies the error between predictions and actual labels. Examples include:

- Mean Squared Error (MSE): For regression tasks.

- Binary Cross-Entropy: For binary classification.

- Categorical Cross-Entropy: For multi-class classification.
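For multi-class classification, the network's raw outputs (logits) are usually converted to probabilities with softmax before categorical cross-entropy is applied; binary cross-entropy is the two-class special case. A minimal sketch with hypothetical logits for three classes:

```python
import math


def softmax(logits):
    m = max(logits)  # shift by the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(one_hot, probs, eps=1e-12):
    # With a one-hot target, only the true class's term is non-zero
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot, probs))


logits = [2.0, 1.0, 0.1]  # hypothetical raw network outputs
probs = softmax(logits)
loss = categorical_cross_entropy([1, 0, 0], probs)
print([round(p, 3) for p in probs], round(loss, 3))
```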

d. Backpropagation and Gradient Descent

The network adjusts its parameters to minimize the loss by computing gradients and updating weights:

- Gradient Descent: Iteratively reduces the loss by moving each parameter a small step, scaled by the learning rate, in the direction of the negative gradient.

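- Variants of Gradient Descent: Stochastic Gradient Descent (SGD) estimates the gradient from small random mini-batches, while adaptive methods such as RMSProp and Adam adjust the step size for each parameter, often improving speed and stability.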
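To show what an adaptive optimizer adds to plain gradient descent, here is a from-scratch sketch of the Adam update on a hypothetical two-parameter quadratic whose minimum is at (3, -1). The beta1, beta2, and eps values are the commonly used defaults; the learning rate of 0.1 and the step count are assumptions tuned for this toy problem (a default of 0.001 is more typical for neural networks).

```python
import math


def adam_minimize(grad_fn, params, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Adam: gradient descent with momentum and per-parameter step sizes.

    m is a moving average of gradients, v a moving average of squared
    gradients; both start at zero, so they are bias-corrected.
    """
    m = [0.0] * len(params)
    v = [0.0] * len(params)
    for t in range(1, steps + 1):
        g = grad_fn(params)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        params = [p - lr * mh / (math.sqrt(vh) + eps)
                  for p, mh, vh in zip(params, m_hat, v_hat)]
    return params


# Hypothetical objective f(x, y) = (x - 3)^2 + 10 * (y + 1)^2
def grad(p):
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]


print(adam_minimize(grad, [0.0, 0.0]))  # close to [3.0, -1.0]
```

Dividing by sqrt(v_hat) gives the steep y direction and the shallow x direction similar effective step sizes, which is the "per-parameter" adaptation mentioned above.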

e. Evaluate and Validate

The model’s performance is evaluated on the validation set to ensure it generalizes well to unseen data.

f. Iterate Until Convergence

The process is repeated until the model achieves satisfactory performance or the loss stabilizes.

Challenges in Deep Learning

While deep learning has revolutionized AI, it comes with its own set of challenges. Understanding these obstacles is crucial for developing effective solutions.

1. Overfitting

Overfitting occurs when the model learns the noise in the training data rather than generalizable patterns, leading to poor performance on unseen data.

Solutions:

- Regularization: Techniques like L2 regularization (weight decay) penalize large weights to discourage overfitting.

- Dropout: Randomly deactivating a subset of neurons during training discourages neurons from relying too heavily on one another and improves generalization.

- Early Stopping: Halting training when the validation loss stops improving.
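Dropout and early stopping are both simple enough to sketch directly. The dropout below is the "inverted" variant, which rescales surviving activations during training so nothing changes at inference time. The early-stopping helper, its `patience` and `min_delta` parameters, and the validation-loss curve are hypothetical illustrations rather than any library's API.

```python
import random


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1 / (1 - p) so the expected value is unchanged.
    At inference time the activations pass through untouched."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch whose weights to keep (best validation loss), stopping
    once `patience` epochs pass without an improvement larger than min_delta."""
    best, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss has stopped improving
    return best_epoch


rng = random.Random(0)
print(dropout([1.0] * 10, p=0.5, rng=rng))  # each entry is 0.0 or 2.0
# Hypothetical validation-loss curve that bottoms out at epoch 3
print(early_stopping_epoch([0.9, 0.7, 0.6, 0.55, 0.56, 0.58, 0.6, 0.65]))  # 3
```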

2. Vanishing and Exploding Gradients

In deep networks, gradients can become very small (vanish) or very large (explode) during backpropagation, making training difficult.

Solutions:

- Use non-saturating activation functions like ReLU to mitigate vanishing gradients.

- Apply Batch Normalization to stabilize gradient flow.

- Use gradient clipping to limit the size of gradients and prevent exploding gradients.
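A short sketch makes both problems concrete. The first print shows why gradients vanish through many Sigmoid layers: its derivative is at most 0.25, so ignoring the weights, a gradient passed back through 10 such layers shrinks by a factor of at least 0.25 ** 10. The clipping function rescales a flattened list of gradient values so its L2 norm never exceeds a threshold; the function name and example gradients are hypothetical.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so their overall L2 norm is at most max_norm.

    The gradient's direction is preserved; only its length shrinks.
    """
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


print(0.25 ** 10)                              # about 9.5e-07
print(clip_by_global_norm([30.0, 40.0], 5.0))  # norm 50 -> [3.0, 4.0]
```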

3. Computational Costs

Training deep models, especially with large datasets, requires significant computational resources.

Solutions:

- Utilize GPUs or TPUs for faster training.

- Use frameworks like TensorFlow or PyTorch, which provide GPU-accelerated operations and automatic differentiation.

- Use distributed training across multiple devices.

4. Data Imbalance

An imbalanced dataset can bias the model towards the majority class, reducing performance on minority classes.

Solutions:

- Oversample the minority class or undersample the majority class.

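- Use techniques like SMOTE (Synthetic Minority Over-sampling Technique), which creates synthetic minority samples by interpolating between existing minority samples and their nearest neighbors.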

- Assign class weights to emphasize the importance of minority classes during training.
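Two of these remedies, class weights and random oversampling, can be sketched in a few lines. The weighting formula n_samples / (n_classes * count_c) is the "balanced" heuristic that scikit-learn also uses; the 90/10 label distribution is hypothetical, and the oversampler simply duplicates existing samples (unlike SMOTE, it creates nothing new).

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """weight_c = n_samples / (n_classes * count_c); rarer classes weigh more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}


def random_oversample(samples, labels, rng):
    """Duplicate random samples of each smaller class until every class
    matches the size of the largest one."""
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for c, cnt in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == c]
        for _ in range(target - cnt):
            out_x.append(rng.choice(pool))
            out_y.append(c)
    return out_x, out_y


labels = [0] * 90 + [1] * 10  # hypothetical 90/10 imbalance
samples = list(range(100))
print(balanced_class_weights(labels))  # class 0 about 0.556, class 1 = 5.0
xs, ys = random_oversample(samples, labels, random.Random(0))
print(Counter(ys))                     # Counter({0: 90, 1: 90})
```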

Strategies for Optimizing Model Performance

1. Hyperparameter Tuning

Hyperparameters, such as learning rate, batch size, and the number of layers, significantly impact model performance. Optimize these parameters using:

- Grid Search: Systematically testing combinations of hyperparameters.

- Random Search: Sampling random combinations, which often finds good settings with fewer trials than grid search.

- Bayesian Optimization: Builds a probabilistic model of how hyperparameters affect performance and uses it to choose the most promising configuration to try next.
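Grid search and random search differ only in how candidate configurations are generated, as this sketch shows. The `validation_score` function is a hypothetical stand-in for "train a model and measure validation accuracy" (real tuning would train a model per trial), and the search ranges are illustrative assumptions. Sampling the learning rate on a log scale is a common choice because useful values span several orders of magnitude.

```python
import itertools
import math
import random


def validation_score(lr, batch_size):
    """Hypothetical stand-in for a real training run; peaks at lr=1e-3, batch 64."""
    return -(math.log10(lr) + 3) ** 2 - (math.log2(batch_size) - 6) ** 2 / 10


def grid_search(lrs, batch_sizes):
    # Evaluate every combination in the Cartesian product
    return max(itertools.product(lrs, batch_sizes), key=lambda c: validation_score(*c))


def random_search(n_trials, rng):
    # Sample the learning rate log-uniformly between 1e-5 and 1e-1
    trials = [(10 ** rng.uniform(-5, -1), rng.choice([16, 32, 64, 128, 256]))
              for _ in range(n_trials)]
    return max(trials, key=lambda c: validation_score(*c))


print(grid_search([1e-4, 1e-3, 1e-2], [32, 64, 128]))  # (0.001, 64)
print(random_search(20, random.Random(0)))
```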

2. Learning Rate Scheduling

Adjusting the learning rate during training helps the model converge more effectively:

- Step Decay: Reduce the learning rate at fixed intervals.

- Exponential Decay: Decrease the learning rate exponentially over time.

- Cyclical Learning Rates: Oscillate the learning rate between a lower and an upper bound, which can help the optimizer move past saddle points and poor local minima.
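Each of the three schedules above is a small function of the epoch or step number. In this sketch, the initial rates, decay factors, and cycle length are hypothetical defaults, and the cyclical schedule is the simple triangular form.

```python
import math


def step_decay(epoch, lr0=0.1, drop=0.5, every=10):
    # Multiply the rate by `drop` once every `every` epochs
    return lr0 * drop ** (epoch // every)


def exponential_decay(epoch, lr0=0.1, k=0.05):
    # Smooth decay: lr0 * exp(-k * epoch)
    return lr0 * math.exp(-k * epoch)


def triangular_clr(step, base_lr=1e-4, max_lr=1e-2, half_cycle=100):
    # Rise linearly from base_lr to max_lr over half_cycle steps, fall back, repeat
    cycle_pos = step % (2 * half_cycle)
    if cycle_pos < half_cycle:
        frac = cycle_pos / half_cycle
    else:
        frac = 2 - cycle_pos / half_cycle
    return base_lr + (max_lr - base_lr) * frac


print([step_decay(e) for e in (0, 9, 10, 25)])  # [0.1, 0.1, 0.05, 0.025]
print(round(exponential_decay(20), 4))           # 0.1 * e^-1, about 0.0368
# Starts at base_lr, peaks at max_lr at step 100, returns to base_lr at step 200
print([triangular_clr(s) for s in (0, 100, 200)])
```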

3. Transfer Learning

Leverage pre-trained models to reduce training time and improve performance on tasks with limited data. Two common approaches are:

- Feature Extraction: Freezing the earlier layers and training only the final layers.

- Full Fine-Tuning: Updating the entire model, usually with a small learning rate, to adapt it to a domain-specific task.

4. Ensembling

Combine predictions from multiple models to improve accuracy and robustness. Common techniques include:

- Bagging: Training multiple models on bootstrap samples (random samples drawn with replacement) of the data and combining their predictions.

- Boosting: Training models sequentially, where each new model focuses on the errors made by the previous ones.

- Stacking: Combining predictions from multiple models using a meta-model.
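Bagging combines two simple ideas: bootstrap sampling and voting. In the sketch below, the "model" is a deliberately tiny hypothetical classifier (predict 1 if x exceeds the mean of its training inputs) so that the ensemble mechanics stay visible; in practice each member would be a full neural network or decision tree. The labeled points are also hypothetical.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    # Draw len(data) items WITH replacement: some repeat, others are left out
    return [rng.choice(data) for _ in data]


def majority_vote(predictions):
    # One predicted label per model; the most common label wins
    return Counter(predictions).most_common(1)[0][0]


def mean_threshold_model(train):
    """Hypothetical toy model: predict 1 if x exceeds the training-input mean."""
    threshold = sum(x for x, _ in train) / len(train)
    return lambda x: int(x > threshold)


rng = random.Random(0)
data = [(x, int(x > 5)) for x in range(11)]  # hypothetical labeled points
models = [mean_threshold_model(bootstrap_sample(data, rng)) for _ in range(25)]
print(majority_vote([m(8) for m in models]), majority_vote([m(1) for m in models]))
```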

Real-World Examples of Deep Learning in Action

To better understand the power of deep learning, let’s look at a few real-world implementations:

1. Autonomous Vehicles

Deep learning is used to process sensor data, detect objects, and make real-time decisions in self-driving cars. Tesla and Waymo are pioneers in leveraging deep learning for autonomy.

2. Healthcare

Deep learning models analyze medical images to detect diseases like cancer or identify fractures. In COVID-19 research, deep learning has been used to identify patterns in chest X-rays.

3. Virtual Assistants

Personal assistants like Siri, Alexa, and Google Assistant rely on deep learning to understand speech, process language, and deliver personalized responses.

4. E-Commerce

Recommendation engines powered by deep learning suggest products based on user preferences, boosting sales and customer satisfaction.

5. Entertainment

Streaming platforms like Netflix and Spotify use deep learning to provide personalized recommendations for movies, shows, and music.

Cutting-Edge Advancements in Deep Learning

Deep learning is a rapidly evolving field, with researchers developing new architectures and techniques to tackle increasingly complex problems. Here are some of the latest advancements:

1. Transformer Models and Attention Mechanisms

Transformers have revolutionized natural language processing (NLP) and other domains. These models, built on attention mechanisms, excel at understanding contextual relationships in sequential data. Key innovations include:

- BERT (Bidirectional Encoder Representations from Transformers): Pre-trained for bidirectional understanding of text.

- GPT (Generative Pre-trained Transformer): Generates coherent and contextually accurate text, enabling applications like chatbots and content creation.

- Vision Transformers (ViT): Apply the transformer architecture to image data and, when trained on sufficiently large datasets, rival convolutional neural networks (CNNs) in performance.

2. Reinforcement Learning

Reinforcement learning trains agents to make decisions by maximizing cumulative rewards. Combined with deep learning, it has enabled breakthroughs like:

- AlphaGo: A system that defeated human champions in the game of Go.

- Robotics: Training robots to perform tasks such as assembly and navigation.
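Two formulas sit underneath "maximizing cumulative rewards": the discounted return G = r_0 + gamma * r_1 + gamma^2 * r_2 + ..., and an update rule that learns to estimate it. The sketch below uses tabular Q-learning, one classic such rule; deep RL methods such as DQN replace the table with a neural network. The states, actions, rewards, and hyperparameters are hypothetical.

```python
def discounted_return(rewards, gamma=0.99):
    """G = r_0 + gamma * r_1 + gamma^2 * r_2 + ..., computed backwards in one pass."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def q_learning_update(Q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.99):
    """One tabular Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    Unseen (state, action) pairs default to 0.0."""
    best_next = max(Q.get((next_state, a), 0.0) for a in actions)
    old = Q.get((state, action), 0.0)
    Q[(state, action)] = old + alpha * (reward + gamma * best_next - old)


print(discounted_return([0.0, 0.0, 1.0], gamma=0.9))  # 0.9 * 0.9, about 0.81
Q = {}
q_learning_update(Q, "s0", "right", 1.0, "s1", actions=["left", "right"])
print(Q)  # {('s0', 'right'): 0.1}
```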

3. Generative Models

Generative models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) create synthetic data that closely resembles real-world data. Applications include:

- Creating realistic images, videos, and audio.

- Enhancing resolution of images (super-resolution).

- Generating virtual environments for gaming and simulation.

4. Neural Architecture Search (NAS)

NAS automates the design of neural networks by searching for optimal architectures. This reduces the time and expertise required to create efficient deep learning models.

5. Edge AI and Model Compression

Edge AI involves deploying deep learning models on edge devices, such as smartphones and IoT devices. Techniques like model pruning, quantization, and knowledge distillation reduce model size and computational requirements, enabling real-time inference on resource-constrained hardware.
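Quantization and pruning are easy to illustrate on a single list of weights. This sketch shows symmetric post-training quantization to 8-bit integers (one scale factor for the whole tensor) and magnitude pruning. The weights are hypothetical, and production toolchains typically add refinements such as per-channel scales, calibration data, and fine-tuning after pruning.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: q = round(w / scale), clamped to [-127, 127],
    with scale = max|w| / 127. Each weight is recovered approximately as q * scale."""
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitudes
    (ties at the cutoff are pruned too)."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    cutoff = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= cutoff else w for w in weights]


w = [0.8, -0.05, 0.3, -1.27, 0.01, 0.5]  # hypothetical layer weights
q, scale = quantize_int8(w)
print(q)                                 # small integers, storable in one byte each
print(max(abs(a - b) for a, b in zip(w, dequantize(q, scale))))  # reconstruction error
print(magnitude_prune(w, 0.5))           # the 3 smallest |w| become 0.0
```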

Ethical Considerations in Deep Learning

As deep learning becomes more integrated into everyday life, ethical concerns must be addressed to ensure responsible development and use.

1. Bias in Models

Deep learning models can inherit biases from the data they are trained on, leading to unfair or discriminatory outcomes. For example:

- Facial recognition systems have shown disparities in accuracy across different demographics.

- Hiring algorithms may unintentionally favor certain groups based on historical data.

Solutions:

- Use diverse and representative datasets.

- Implement fairness-aware learning algorithms.

- Regularly audit models for bias.
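A basic bias audit often starts by breaking metrics down by group, which directly measures the kind of accuracy disparity described above. This sketch computes per-group accuracy and per-group positive-prediction rates (a large gap in the latter indicates a violation of demographic parity). The labels, predictions, and group attribute are hypothetical audit data.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Fraction of correct predictions within each group."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def positive_rate_by_group(y_pred, groups):
    """Share of positive predictions within each group."""
    pos, total = defaultdict(int), defaultdict(int)
    for p, g in zip(y_pred, groups):
        total[g] += 1
        pos[g] += int(p == 1)
    return {g: pos[g] / total[g] for g in total}


# Hypothetical audit data: true labels, model predictions, group attribute
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
acc = accuracy_by_group(y_true, y_pred, groups)
print(acc)                                     # {'A': 1.0, 'B': 0.5}
print(max(acc.values()) - min(acc.values()))   # accuracy gap: 0.5
print(positive_rate_by_group(y_pred, groups))  # {'A': 0.75, 'B': 0.25}
```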

2. Privacy Concerns

Deep learning often requires vast amounts of personal data, raising privacy issues. For example, models trained on medical or financial data could inadvertently expose sensitive information.

Solutions:

- Use techniques like federated learning to train models without centralizing data.

- Apply differential privacy, which adds carefully calibrated noise during training so that the model reveals little about any individual record.
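The server-side step of federated learning can be as simple as a weighted average of model parameters, in the style of the Federated Averaging (FedAvg) algorithm: each client trains locally, and only its parameters, never its raw data, are sent for aggregation. In this sketch, the client parameters and dataset sizes are hypothetical, and a real system would repeat this over many rounds and often add secure aggregation or differential privacy.

```python
def federated_average(client_weights, client_sizes):
    """Average each parameter across clients, weighted by how many local
    training examples each client has."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical parameters after one round of local training on three devices
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(federated_average(clients, sizes))  # [3.5, 4.5]
```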

3. Environmental Impact

Training large-scale deep learning models consumes significant computational resources, leading to high energy usage and carbon emissions. For instance, training models like GPT-3 requires massive data centers and prolonged computing time.

Solutions:

- Optimize models for energy efficiency using techniques like model pruning and quantization.

- Promote the use of renewable energy sources for data centers.

4. Accountability and Transparency

Deep learning models are often seen as “black boxes,” making it difficult to interpret their decisions. This lack of transparency can be problematic in high-stakes applications like healthcare or criminal justice.

Solutions:

- Develop explainable AI (XAI) techniques to provide insights into model decisions.

- Use simpler models when interpretability is critical.

5. Misuse of Technology

Deep learning technologies like GANs can be misused to create deepfakes or spread disinformation, posing risks to society.

Solutions:

- Develop robust detection mechanisms for identifying synthetic content.

- Establish ethical guidelines for the use of generative models.

The Future of Deep Learning

The future of deep learning is bright, with significant potential to transform industries and improve lives. Here’s what we can expect in the coming years:

1. Generalized AI

While current deep learning models are task-specific, future research aims to create generalized AI systems capable of performing multiple tasks with minimal supervision.

2. Integration with Other Technologies

Deep learning is likely to be increasingly combined with technologies like quantum computing, 5G, and blockchain to solve problems faster and more securely.

3. Personalized AI

Advances in deep learning will enable highly personalized AI systems that adapt to individual users. Applications include:

- Custom healthcare solutions tailored to a patient’s genetic profile.

- Education platforms that cater to a student’s learning style and pace.

4. Climate Change and Sustainability

Deep learning will play a critical role in combating climate change by:

- Analyzing climate data to predict extreme weather events.

- Optimizing energy usage in buildings and transportation.

- Accelerating research on renewable energy technologies.

5. Democratization of AI

Efforts are underway to make deep learning tools and frameworks more accessible to non-experts. Frameworks like TensorFlow and PyTorch, along with AutoML tools, make it easier for developers of all skill levels to build and deploy AI solutions.

Real-World Applications Driving Change

Here are some ways deep learning is driving innovation across industries:

1. Healthcare

- Early detection of diseases using medical imaging.

- Predicting patient outcomes and treatment responses.

- Assisting in drug discovery through molecule analysis.

2. Finance

- Fraud detection by identifying anomalies in transaction patterns.

- Automated risk assessment for loans and investments.

- High-frequency trading strategies powered by predictive analytics.

3. Autonomous Systems

- Enabling self-driving cars to navigate complex environments.

- Powering drones for agriculture, delivery, and surveillance.

4. Entertainment

- Creating personalized content recommendations on streaming platforms.

- Generating realistic visual effects for movies and video games.

5. Agriculture

- Monitoring crop health using image analysis.

- Optimizing irrigation and pest control through predictive models.

Conclusion

Deep learning has redefined the possibilities of artificial intelligence, unlocking new opportunities in fields ranging from healthcare to autonomous systems. Its ability to learn complex patterns and process unstructured data has made it an indispensable tool for solving real-world problems.

While the advancements in deep learning are remarkable, they come with challenges and responsibilities. Addressing ethical concerns, improving model efficiency, and ensuring fairness will be critical as we continue to innovate in this space.

The future of deep learning promises a world where AI systems are not only more intelligent but also more accessible, sustainable, and aligned with human values. By embracing these advancements responsibly, we can harness the full potential of deep learning to improve lives and drive progress across industries.