Introduction

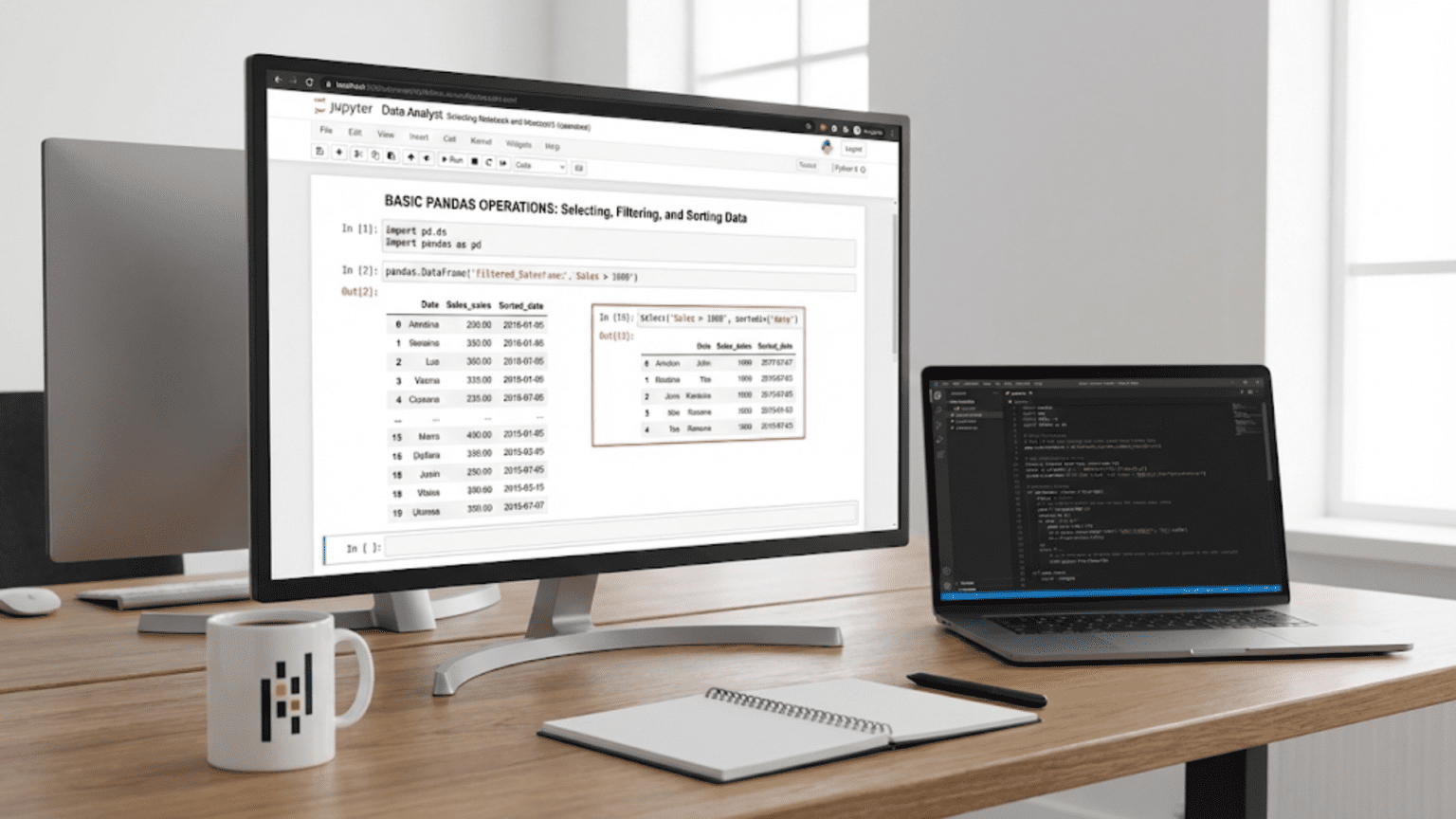

After learning to load data into pandas DataFrames, you face the fundamental operations that consume most data analysis time: selecting specific columns you need, filtering rows that meet criteria, and sorting data to reveal patterns. Every analysis requires these operations repeatedly. You might select demographic columns from a customer dataset, filter to active users only, and sort by lifetime value to identify your best customers. Or you might select time and price columns from financial data, filter to trading hours, and sort chronologically. These operations represent the building blocks from which complex analyses are constructed.

Pandas provides multiple approaches for these operations, each suited for different scenarios. You can select columns by name using bracket notation or attribute access. You can select rows by position using iloc or by label using loc. You can filter using boolean conditions, the query method, or conditional functions. You can sort by single columns or multiple columns with custom ordering. This flexibility enables elegant solutions but can confuse beginners who wonder which approach to use when. Understanding each method’s strengths and appropriate use cases transforms you from someone struggling with pandas syntax to someone wielding it fluently.

What makes these operations particularly powerful is how they compose together. You might filter a DataFrame to recent data, select specific columns, sort by a calculated value, and take the top 10 results, all in a chain of operations that reads almost like natural language. This composability enables building complex data transformations from simple, understandable steps. Moreover, pandas operations execute efficiently on large DataFrames through vectorized implementations, so the code you write for exploratory analysis on small samples scales naturally to production datasets.

This comprehensive guide explores the fundamental pandas operations for data selection, filtering, and sorting. You will learn multiple methods for selecting columns and rows, when to use each selection approach, how to filter data using boolean conditions and the query method, how to combine multiple filtering criteria, how to sort by single and multiple columns, and best practices for writing readable data manipulation code. You will also discover common patterns for these operations and how to avoid pitfalls that trip up beginners. By the end, you will navigate DataFrames confidently and build complex data transformations from these fundamental operations.

Selecting Columns: Choosing Your Variables

Column selection represents the most common operation, letting you focus on relevant variables for analysis.

Select single column (returns Series):

import pandas as pd

import numpy as np

# Create sample DataFrame

df = pd.DataFrame({

'Name': ['Alice', 'Bob', 'Charlie', 'Diana', 'Eve'],

'Age': [25, 30, 35, 28, 32],

'City': ['Boston', 'Seattle', 'Chicago', 'Austin', 'Denver'],

'Salary': [70000, 85000, 90000, 75000, 80000],

'Department': ['Sales', 'Engineering', 'Sales', 'Marketing', 'Engineering']

})

# Select single column

ages = df['Age']

print(type(ages)) # <class 'pandas.core.series.Series'>

print(ages)Alternative attribute access (only works for valid Python identifiers):

# Dot notation

ages = df.Age

print(ages)

# Cannot use dot notation if column name has spaces or special characters

# df.First Name # SyntaxError

# df['First Name'] # WorksSelect multiple columns (returns DataFrame):

# Select multiple columns - returns DataFrame

subset = df[['Name', 'Age', 'Salary']]

print(type(subset)) # <class 'pandas.core.frame.DataFrame'>

print(subset)Note the double brackets: outer for indexing, inner for the list of column names.

Select columns by position:

# Select columns by position using iloc

first_three_cols = df.iloc[:, 0:3]

print(first_three_cols)

# Select specific columns by position

cols = df.iloc[:, [0, 2, 4]] # Columns 0, 2, and 4

print(cols)Select columns matching pattern:

# Select columns containing specific text

salary_cols = df.filter(like='Salary')

# Select columns matching regex

numeric_cols = df.filter(regex='^[AS]') # Columns starting with A or S

# Select columns by data type

numeric_df = df.select_dtypes(include=[np.number])

string_df = df.select_dtypes(include=['object'])Exclude columns:

# Drop columns (returns new DataFrame)

df_without_salary = df.drop('Salary', axis=1)

# Drop multiple columns

df_subset = df.drop(['Salary', 'Department'], axis=1)

# Drop columns in place (modifies original)

df.drop('Salary', axis=1, inplace=True)Reorder columns:

# Specify new column order

df_reordered = df[['Name', 'City', 'Age', 'Department', 'Salary']]

# Move column to front

cols = ['Name'] + [col for col in df.columns if col != 'Name']

df = df[cols]Selecting Rows: Accessing Observations

Row selection uses different methods than column selection, with iloc for position-based and loc for label-based access.

Select rows by position using iloc:

# First row (returns Series)

first_row = df.iloc[0]

print(first_row)

# First row (returns DataFrame)

first_row_df = df.iloc[[0]]

print(first_row_df)

# First three rows

first_three = df.iloc[0:3]

print(first_three)

# Specific rows

rows = df.iloc[[0, 2, 4]] # First, third, and fifth rows

print(rows)

# Last row

last_row = df.iloc[-1]Select rows by label using loc:

# Create DataFrame with custom index

df_indexed = df.set_index('Name')

# Select by index label

alice_row = df_indexed.loc['Alice']

print(alice_row)

# Select multiple rows

subset = df_indexed.loc[['Alice', 'Charlie']]

print(subset)

# Slice by label (inclusive of endpoints)

subset = df_indexed.loc['Alice':'Charlie']Select rows and columns together:

# iloc: rows by position, columns by position

subset = df.iloc[0:3, 0:2] # First 3 rows, first 2 columns

# loc: rows by label, columns by name

subset = df.loc[0:2, ['Name', 'Age']]

# Mix: all rows, specific columns

subset = df.loc[:, ['Name', 'Salary']]

# Mix: specific rows, all columns

subset = df.iloc[0:3, :]Random sampling:

# Random sample of rows

sample = df.sample(n=3) # 3 random rows

# Random percentage

sample = df.sample(frac=0.5) # 50% of rows

# With replacement

sample = df.sample(n=10, replace=True)

# Reproducible sampling

sample = df.sample(n=3, random_state=42)Filtering with Boolean Indexing

Boolean indexing selects rows based on conditions, the most powerful selection method.

Basic boolean filtering:

# Filter based on single condition

young_employees = df[df['Age'] < 30]

print(young_employees)

# How it works

age_mask = df['Age'] < 30 # Boolean Series

print(age_mask) # True where condition met

filtered = df[age_mask]Multiple conditions with & (and) and | (or):

# AND condition (both must be true)

filtered = df[(df['Age'] > 25) & (df['Salary'] > 75000)]

# OR condition (either can be true)

filtered = df[(df['Age'] < 28) | (df['Salary'] > 85000)]

# NOT condition

filtered = df[~(df['Age'] > 30)] # Age <= 30

# Complex combinations

filtered = df[((df['Age'] > 25) & (df['Salary'] > 70000)) |

(df['Department'] == 'Engineering')]Note: Use & and | for element-wise boolean operations, not and and or. Use parentheses to control precedence.

Filter using isin():

# Check if values are in a list

cities = ['Boston', 'Seattle', 'Denver']

filtered = df[df['City'].isin(cities)]

print(filtered)

# Exclude values

filtered = df[~df['City'].isin(cities)]Filter using string methods:

# String contains

filtered = df[df['Name'].str.contains('a')]

# Starts with

filtered = df[df['City'].str.startswith('B')]

# Ends with

filtered = df[df['Department'].str.endswith('ing')]

# Case insensitive

filtered = df[df['Name'].str.contains('alice', case=False)]

# Regex pattern

filtered = df[df['Name'].str.match(r'^[AB]')] # Starts with A or BFilter using between():

# Values in range (inclusive)

filtered = df[df['Age'].between(28, 32)]

# Exclude boundaries

filtered = df[df['Age'].between(28, 32, inclusive='neither')]Filter null values:

# Create DataFrame with missing values

df_with_nulls = df.copy()

df_with_nulls.loc[2, 'Salary'] = np.nan

# Rows where value is null

has_null = df_with_nulls[df_with_nulls['Salary'].isnull()]

# Rows where value is not null

no_null = df_with_nulls[df_with_nulls['Salary'].notnull()]Using the Query Method

The query method provides SQL-like syntax for filtering, often more readable than boolean indexing.

Basic query:

# Query using column names directly

filtered = df.query('Age > 30')

print(filtered)

# Multiple conditions

filtered = df.query('Age > 25 and Salary > 75000')

# Or condition

filtered = df.query('Age < 28 or Salary > 85000')Query with variables:

min_age = 28

max_age = 32

# Use @ to reference variables

filtered = df.query('Age >= @min_age and Age <= @max_age')

print(filtered)Query with string matching:

# Check membership

departments = ['Sales', 'Engineering']

filtered = df.query('Department in @departments')

# String methods

filtered = df.query('City.str.startswith("B")', engine='python')Query advantages:

# Boolean indexing - verbose

filtered = df[(df['Age'] > 25) &

(df['Salary'] > 75000) &

(df['Department'] == 'Engineering')]

# Query - more readable

filtered = df.query('Age > 25 and Salary > 75000 and Department == "Engineering"')Sorting Data: Ordering Observations

Sorting reveals patterns and makes data easier to interpret.

Sort by single column:

# Sort by age (ascending)

sorted_df = df.sort_values('Age')

print(sorted_df)

# Sort descending

sorted_df = df.sort_values('Age', ascending=False)Sort by multiple columns:

# Sort by department, then age within department

sorted_df = df.sort_values(['Department', 'Age'])

print(sorted_df)

# Different sort order for each column

sorted_df = df.sort_values(['Department', 'Age'],

ascending=[True, False])Sort in place:

# Modify original DataFrame

df.sort_values('Salary', ascending=False, inplace=True)Sort by index:

# Sort by index values

df_sorted = df.sort_index()

# Sort descending

df_sorted = df.sort_index(ascending=False)Sort with custom order:

# Define custom order for categories

dept_order = ['Engineering', 'Sales', 'Marketing']

# Create categorical with specific order

df['Department'] = pd.Categorical(df['Department'],

categories=dept_order,

ordered=True)

# Sort using category order

sorted_df = df.sort_values('Department')Handle missing values during sort:

# Create DataFrame with missing values

df_with_nulls = df.copy()

df_with_nulls.loc[2, 'Salary'] = np.nan

# NaN at end (default)

sorted_df = df_with_nulls.sort_values('Salary')

# NaN at beginning

sorted_df = df_with_nulls.sort_values('Salary', na_position='first')Sort by computed values:

# Sort by value not in DataFrame

# Add temporary column, sort, drop column

df['temp'] = df['Age'] * df['Salary']

df.sort_values('temp', inplace=True)

df.drop('temp', axis=1, inplace=True)

# Or use key parameter (pandas 1.1+)

sorted_df = df.sort_values('Name', key=lambda x: x.str.lower())Combining Operations: Building Complex Queries

Chain operations to build sophisticated data manipulations:

# Filter, select, and sort in one chain

result = (df

.query('Age > 25')

.filter(['Name', 'Age', 'Salary'])

.sort_values('Salary', ascending=False)

.head(3)

)

print(result)Multiple filters:

# Apply filters sequentially

result = df.copy()

result = result[result['Age'] > 25]

result = result[result['Salary'] > 70000]

result = result[result['Department'] != 'Marketing']

print(result)

# Or combine in single condition

result = df[

(df['Age'] > 25) &

(df['Salary'] > 70000) &

(df['Department'] != 'Marketing')

]Conditional selection:

# Select different columns based on condition

high_earners = df[df['Salary'] > 80000][['Name', 'Salary']]

others = df[df['Salary'] <= 80000][['Name', 'Department']]Reset index after filtering:

# Filtering preserves original index

filtered = df[df['Age'] > 30]

print(filtered.index) # May have gaps: [1, 2, 4]

# Reset to continuous index

filtered = filtered.reset_index(drop=True)

print(filtered.index) # [0, 1, 2]Getting Top/Bottom N Rows

Pandas provides convenient methods for top/bottom rows:

# Top N by value

top_earners = df.nlargest(3, 'Salary')

print(top_earners)

# Bottom N by value

lowest_paid = df.nsmallest(3, 'Salary')

print(lowest_paid)

# Top N by multiple columns

top = df.nlargest(3, ['Salary', 'Age'])These methods are more efficient than sorting entire DataFrame:

# Less efficient

top_earners = df.sort_values('Salary', ascending=False).head(3)

# More efficient

top_earners = df.nlargest(3, 'Salary')Selecting Based on Data Types

Select columns by their data type:

# Numeric columns only

numeric_df = df.select_dtypes(include=[np.number])

print(numeric_df.columns)

# String columns only

string_df = df.select_dtypes(include=['object'])

print(string_df.columns)

# Multiple types

selected = df.select_dtypes(include=[np.number, 'category'])

# Exclude types

non_numeric = df.select_dtypes(exclude=[np.number])Common Patterns and Idioms

Filter and count:

# Count rows meeting condition

count = len(df[df['Age'] > 30])

# Or using sum on boolean

count = (df['Age'] > 30).sum()Get unique values after filtering:

# Unique cities for young employees

cities = df[df['Age'] < 30]['City'].unique()

print(cities)Filter one column based on another:

# Get salaries of engineering department

eng_salaries = df[df['Department'] == 'Engineering']['Salary']

print(eng_salaries)Conditional column assignment:

# Create column based on condition

df['Age_Group'] = df['Age'].apply(

lambda x: 'Young' if x < 30 else 'Senior'

)

# Using np.where (more efficient)

df['Age_Group'] = np.where(df['Age'] < 30, 'Young', 'Senior')

# Using multiple conditions

conditions = [

df['Age'] < 30,

(df['Age'] >= 30) & (df['Age'] < 35),

df['Age'] >= 35

]

choices = ['Young', 'Mid', 'Senior']

df['Age_Group'] = np.select(conditions, choices)Performance Considerations

Use vectorized operations instead of iteration:

# Slow - iterating

filtered = []

for idx, row in df.iterrows():

if row['Age'] > 30:

filtered.append(row)

# Fast - vectorized

filtered = df[df['Age'] > 30]Use query for complex conditions:

# Can be faster for complex conditions

filtered = df.query('Age > 25 and Salary > 75000 and Department == "Sales"')Use isin() for membership tests:

# Efficient for checking membership

cities = ['Boston', 'Seattle', 'Chicago']

filtered = df[df['City'].isin(cities)]

# Avoid using or for multiple values

# filtered = df[(df['City'] == 'Boston') |

# (df['City'] == 'Seattle') |

# (df['City'] == 'Chicago')] # Less efficientCommon Mistakes to Avoid

Modifying filtered view:

# Warning: SettingWithCopyWarning

subset = df[df['Age'] > 30]

subset['Salary'] = subset['Salary'] * 1.1 # May not work as expected

# Better: use loc

df.loc[df['Age'] > 30, 'Salary'] = df.loc[df['Age'] > 30, 'Salary'] * 1.1

# Or copy explicitly

subset = df[df['Age'] > 30].copy()

subset['Salary'] = subset['Salary'] * 1.1Using ‘and’ instead of ‘&’:

# Wrong

# filtered = df[df['Age'] > 25 and df['Salary'] > 70000] # Error

# Correct

filtered = df[(df['Age'] > 25) & (df['Salary'] > 70000)]Forgetting parentheses in complex conditions:

# Wrong precedence

filtered = df[df['Age'] > 25 & df['Salary'] > 70000] # Error

# Correct with parentheses

filtered = df[(df['Age'] > 25) & (df['Salary'] > 70000)]Best Practices

Use meaningful variable names:

# Clear intent

high_salary_employees = df[df['Salary'] > 80000]

young_engineers = df[(df['Age'] < 30) & (df['Department'] == 'Engineering')]

# Less clear

subset1 = df[df['Salary'] > 80000]

data2 = df[(df['Age'] < 30) & (df['Department'] == 'Engineering')]Chain operations for readability:

# Readable pipeline

result = (df

.query('Age > 25')

.sort_values('Salary', ascending=False)

[['Name', 'Age', 'Salary']]

.head(10)

)Use copy() when creating independent DataFrames:

# Create true copy

subset = df[df['Age'] > 30].copy()

subset['Salary'] *= 1.1 # Safe modificationDocument complex filters:

# Complex condition - add comment

# Select employees: age 28-35, salary > 75k, not in Marketing

filtered = df.query(

'Age >= 28 and Age <= 35 and '

'Salary > 75000 and '

'Department != "Marketing"'

)Conclusion

Selecting, filtering, and sorting represent the fundamental operations you will use in virtually every pandas analysis. Mastering these operations, understanding when to use loc versus iloc, boolean indexing versus query, and single versus multiple column sorts, enables you to manipulate data confidently and expressively. The flexibility pandas provides through multiple approaches for the same operations might feel overwhelming initially, but with practice you will develop intuition for which approach suits each situation.

These basic operations compose together to build sophisticated data transformations. A complex analysis that initially seems daunting often breaks down into a series of selections, filters, and sorts that each handle one aspect of the transformation. Writing these operations as clear, composable steps produces code that is easier to understand, debug, and modify than monolithic approaches. Moreover, the patterns you learn here transfer directly to more advanced pandas operations including grouping, joining, and reshaping.

Practice these operations extensively on real datasets. Filter data using various conditions, select columns different ways, sort by different criteria, and chain operations together. Build muscle memory for common patterns like filtering then sorting, selecting then aggregating, or combining multiple conditions. The fluency you develop with these fundamental operations enables everything that follows in pandas, from basic exploratory analysis through complex data preprocessing pipelines. Master selection, filtering, and sorting, and you gain the foundation for all pandas work.