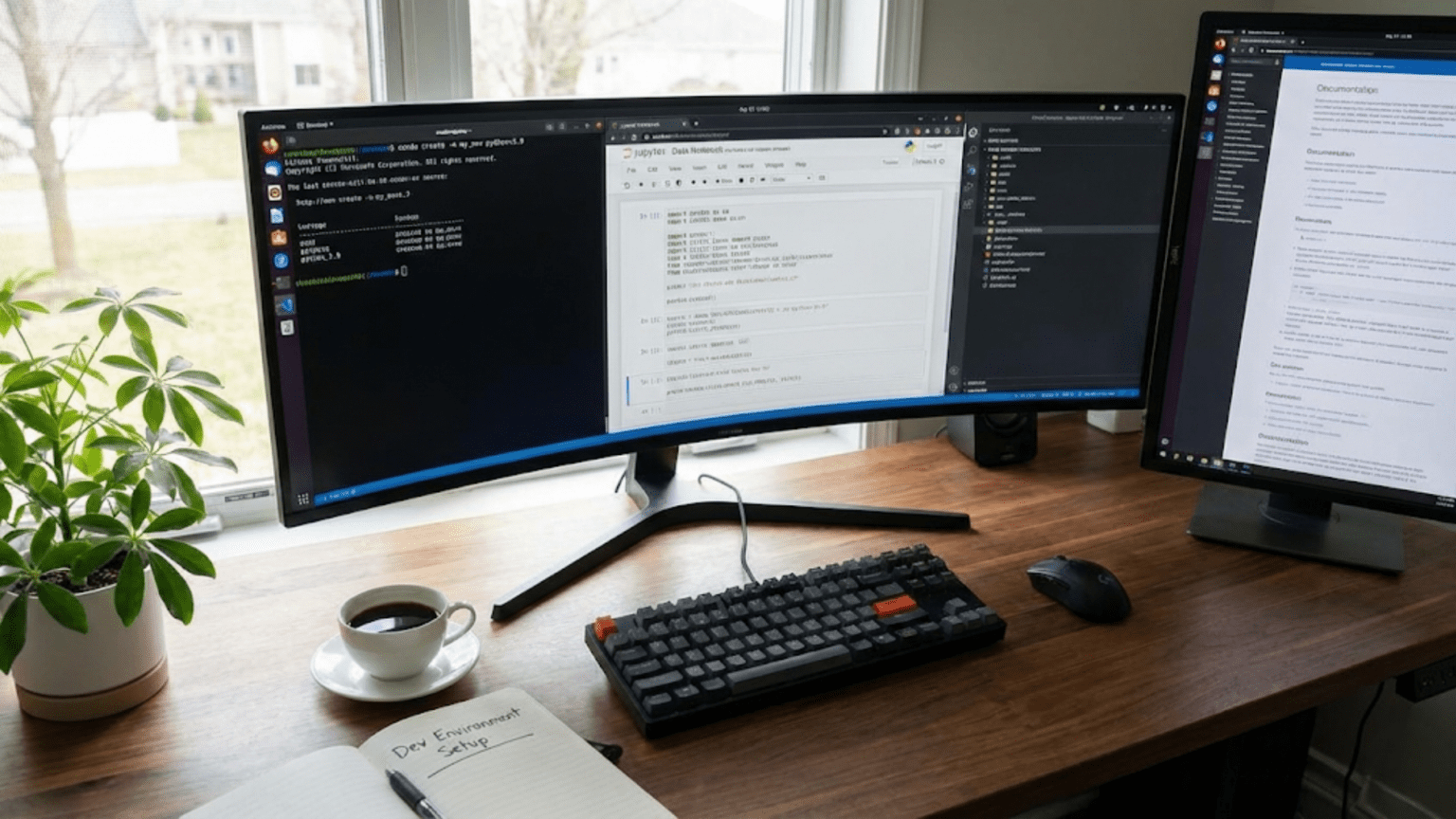

Why Your Development Environment Matters

Imagine trying to cook a gourmet meal in a kitchen with missing utensils, a broken oven, and ingredients scattered randomly across multiple pantries. Even with excellent recipes and culinary knowledge, the disorganized environment would frustrate your efforts and make cooking far harder than it should be. Your data science development environment works the same way. A well-configured setup lets you focus on learning and analysis, while a problematic environment creates constant friction that drains energy and motivation.

Many aspiring data scientists stumble at this first technical hurdle. They attempt to install Python directly from the official website, then spend hours troubleshooting why packages will not install. They discover that different projects need conflicting package versions. They accidentally break their system Python while trying to configure data science tools. These problems are so common and frustrating that some people give up on data science before ever analyzing their first dataset.

The good news is that modern tools have made environment setup dramatically easier than it was even a few years ago. Following the right approach from the beginning saves you countless hours of frustration and sets you up for smooth learning. In this comprehensive guide, I will walk you through setting up a professional data science development environment step by step, explaining not just what to do but why you are doing it. By the end, you will have a fully functional workspace ready for data science work.

Understanding the Components You Need

Before jumping into installation, let me clarify what components make up a data science development environment and why you need each one. Understanding the ecosystem helps you troubleshoot issues and make informed decisions about your setup.

At the foundation, you need Python itself, the programming language that will execute your code. Python is free, open-source, and available for Windows, Mac, and Linux. However, installing Python alone gives you just the base language without the specialized libraries that make data science practical. You could install each library individually, but this becomes tedious and error-prone.

A package manager handles installing and updating libraries for you. Python includes pip as its default package manager, which can install libraries from the Python Package Index. While pip works well, data science packages often have complex dependencies on non-Python libraries that pip struggles with. This is where Anaconda becomes valuable.

Anaconda is a distribution of Python specifically designed for data science and scientific computing. It bundles Python with hundreds of pre-installed libraries, includes conda as a powerful package manager that handles complex dependencies better than pip, and provides tools for managing separate environments for different projects. For beginners, Anaconda eliminates most installation headaches and provides everything you need in one download.

Jupyter Notebook creates interactive documents where you can write code, see results immediately, add explanatory text, and include visualizations all in one place. These notebooks have become the standard interface for data science work because they support the exploratory, iterative nature of data analysis. Jupyter comes included with Anaconda, so installing Anaconda gives you both Python and Jupyter together.

An integrated development environment (IDE) or code editor provides a workspace for writing and running code. Jupyter notebooks work well for analysis, but you sometimes need a traditional editor for writing Python scripts or modules. Options range from simple text editors to full IDEs. We will discuss how to choose one later, but an editor is not strictly necessary when starting, since Jupyter covers most initial needs.

Version control with Git tracks changes to your code over time, enables collaboration with others, and allows you to experiment safely knowing you can revert changes. While not required for learning data science basics, adopting version control early builds good habits. We will cover basic Git setup as an optional but recommended step.

Installing Anaconda: Your All-In-One Solution

Anaconda provides the smoothest path to a working data science environment, especially for beginners. Let me walk you through installation for each major operating system.

For Windows users, start by visiting the Anaconda website and downloading the Windows installer. Current installers ship Python 3 and are 64-bit only, which matches almost every modern Windows computer. The download is large, several hundred megabytes or more, because it bundles many libraries.

Run the downloaded installer file. It will guide you through several screens. When asked whether to install for just yourself or all users, choosing just yourself avoids permission issues. The installer will suggest an installation location, typically in your user directory. The default location works fine for most people. Accept it unless you have specific reasons to change it.

Two important checkboxes appear during installation. The first asks whether to add Anaconda to your PATH environment variable. The installer recommends against this, and you should follow that recommendation. Adding Anaconda to PATH can interfere with other Python installations and cause confusion. The second checkbox asks whether to register Anaconda as your default Python. Checking this is fine if Anaconda is your only Python installation.

After installation completes, verify it worked by opening the Anaconda Navigator application from your Start menu. Navigator provides a graphical interface for launching tools and managing packages. You should see icons for Jupyter Notebook, JupyterLab, Spyder, and other applications. If Navigator opens successfully, your installation worked.

For Mac users, the process is similar but with some Mac-specific steps. Download the Mac installer from the Anaconda website, choosing the version that matches your processor: Apple silicon (M1 and later) or Intel. Anaconda offers both a graphical installer and a command-line installer for Mac. The graphical installer, a .pkg file, is easier for beginners.

Double-click the downloaded file to launch the installer. It will guide you through accepting the license agreement and choosing an installation location. The default location in your home directory works well. The installer does not ask about PATH or default Python settings on Mac because it handles these automatically in a way that avoids conflicts.

Installation takes several minutes as it copies files. When complete, open Terminal and type conda --version to verify installation. If you see a version number like “conda 23.7.4”, your installation succeeded. You can also launch Anaconda Navigator from your Applications folder to access the graphical interface.

For Linux users, installation happens through the command line, which matches how most Linux users prefer to work. Download the Linux installer script from the Anaconda website. This will be a .sh file. Open your terminal and navigate to the directory where you downloaded the file.

Make the installer executable with the command chmod +x Anaconda3-*.sh where the asterisk matches your specific filename. Then run the installer with ./Anaconda3-*.sh. The installer displays the license agreement, which you must review and accept. It then asks for an installation location, defaulting to a directory in your home folder, which is fine.

The installer asks whether to initialize Anaconda3 by running conda init. Answer yes to this question. This configures your shell to work with conda properly. After installation completes, close and reopen your terminal for changes to take effect. Type conda --version to verify installation succeeded.

Regardless of operating system, you should now have a working Anaconda installation with Python, hundreds of libraries, and Jupyter Notebook ready to use. This single installation provides everything you need to begin learning data science.
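Besides running conda --version, you can confirm from Python itself which interpreter you are using. The sketch below uses only the standard library. The helper name `describe_interpreter` and the 3.8 minimum are illustrative assumptions, not Anaconda requirements.

```python
import sys

def describe_interpreter(min_version=(3, 8)):
    """Report which Python is running and whether it meets a minimum version.

    The default minimum is an illustrative assumption, not an Anaconda rule.
    """
    return {
        "version": sys.version.split()[0],   # e.g. "3.11.5"
        "executable": sys.executable,        # full path to the python binary
        "meets_minimum": sys.version_info[:2] >= tuple(min_version),
    }

if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```

Run it with python from Anaconda Prompt or Terminal. If the executable path points inside your Anaconda folder, which is typically named anaconda3, you are using Anaconda's Python rather than some other installation.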

Launching and Exploring Jupyter Notebook

With Anaconda installed, you can launch Jupyter Notebook and begin working with Python interactively. Let me show you how to start Jupyter and navigate its interface.

The simplest way to launch Jupyter is through Anaconda Navigator. Open Navigator and click the Launch button under Jupyter Notebook. This opens your web browser with the Jupyter interface showing your file system. Despite appearing in a browser, Jupyter runs locally on your computer rather than on the internet. The browser simply provides the interface.

Alternatively, you can launch Jupyter from the command line, which many users prefer for its speed. On Windows, open Anaconda Prompt from your Start menu, not the regular Command Prompt. On Mac or Linux, open Terminal. Type jupyter notebook and press Enter. This starts the Jupyter server and opens your browser automatically.

The Jupyter home screen shows folders and files from the directory where you launched it. You can navigate through your file system by clicking on folders. To start working, create a new notebook: click the New button in the upper right and select Python 3 from the dropdown menu. In newer versions of Jupyter Notebook, choose Notebook and then pick the Python 3 kernel when prompted. The new notebook opens in a new browser tab.

Your new notebook contains a single empty cell where you can type Python code. Try typing print("Hello, Data Science!") in the cell. To execute the code, press Shift+Enter or click the Run button in the toolbar. The output appears immediately below the cell, and a new empty cell appears below the output, ready for your next command.

Cells can contain either code or text formatted with Markdown. Click in a cell and use the dropdown menu in the toolbar to change it from Code to Markdown. In a Markdown cell, you can write explanatory text, create headers with hash symbols, add bullet lists, and include formatted text. This capability to mix code, results, and documentation in one document makes notebooks powerful for data analysis.

The notebook interface includes a menu bar with options for saving, inserting cells, running code, and more. The toolbar provides quick access to common actions like adding cells, cutting and pasting, running cells, and stopping execution. Keyboard shortcuts make working faster once you learn them. The most important are Shift+Enter to run a cell, Esc then A to insert a cell above, Esc then B to insert a cell below, and Esc then D pressed twice to delete a cell.

Jupyter automatically saves your work periodically, creating checkpoint files. You can manually save with Ctrl+S or Command+S. Each notebook is stored as a .ipynb file that contains all your code, outputs, and markdown text. You can share these files with others who can open them in their own Jupyter installations to see your work and run your code.
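Because a .ipynb file is plain JSON, you can inspect one with nothing more than Python's standard library. The sketch below assumes the nbformat 4 layout, in which a top-level "cells" list holds objects tagged with a "cell_type". The function name `count_cells` is hypothetical, and the demo notebook is built by hand so the example runs without any real files.

```python
import json
import tempfile
from collections import Counter
from pathlib import Path

def count_cells(notebook_path):
    """Count the cells of each type in a Jupyter notebook (.ipynb) file.

    Assumes the nbformat 4 JSON layout: a top-level "cells" list whose
    entries each carry a "cell_type" such as "code" or "markdown".
    """
    with Path(notebook_path).open(encoding="utf-8") as f:
        notebook = json.load(f)
    return Counter(cell["cell_type"] for cell in notebook.get("cells", []))

if __name__ == "__main__":
    # A tiny hand-built notebook so the example runs anywhere.
    demo = {
        "cells": [
            {"cell_type": "markdown", "metadata": {}, "source": ["# Notes"]},
            {"cell_type": "code", "execution_count": None, "metadata": {},
             "outputs": [], "source": ["print('hi')"]},
        ],
        "metadata": {},
        "nbformat": 4,
        "nbformat_minor": 4,
    }
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "demo.ipynb"
        path.write_text(json.dumps(demo), encoding="utf-8")
        print(count_cells(path))
```

Pointing count_cells at one of your own notebooks works the same way. Code cells also store their saved "outputs" in this JSON, which is why a shared notebook shows results before anyone reruns it.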

When you finish working, close the notebook tab in your browser. This does not stop the Jupyter server. To fully stop Jupyter, go back to the terminal or Anaconda Prompt where you launched it and press Ctrl+C twice to shut down the server. Alternatively, use the Quit button on the Jupyter home screen, or File > Shut Down in newer versions.

Installing Additional Libraries You Will Need

While Anaconda includes hundreds of libraries, you will occasionally need packages not included by default. Learning to install libraries prepares you for when you need specialized tools. Anaconda provides two ways to install packages: using conda or using pip.

The conda package manager is Anaconda’s preferred tool because it handles dependencies particularly well and can install non-Python dependencies that some data science libraries require. To install a package with conda, open Anaconda Prompt or Terminal and type conda install package-name where package-name is the library you want. For example, conda install scikit-learn installs the popular machine learning library.

Before actually installing, conda shows you what packages will be installed or updated and asks for confirmation. This preview helps you understand what will change and gives you a chance to cancel if something looks wrong. Type y and press Enter to proceed with installation.

Sometimes the package you want is not available through conda’s default channels. Conda channels are repositories of packages maintained by different organizations. The conda-forge channel maintains community-contributed packages and often has libraries not in the default channel. You can install from conda-forge by specifying the channel: conda install -c conda-forge package-name.

When conda cannot find a package at all, pip provides a fallback, since it can install anything published on the Python Package Index. Run pip install package-name from the same activated environment so the package lands where you expect. Mixing conda and pip in one environment can occasionally cause dependency conflicts, so reach for pip only after conda comes up empty.

Some essential libraries you will use frequently include pandas for data manipulation, numpy for numerical computing, matplotlib for basic plotting, seaborn for statistical visualization, and scikit-learn for machine learning. Anaconda includes all of these by default, so you do not need to install them separately. However, knowing how to install packages prepares you for when you need something specialized.
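To see which of these libraries your environment already has, and at what versions, you can query Python's installed-package metadata directly. This sketch relies on importlib.metadata from the standard library (Python 3.8+). The helper name `report_versions` is hypothetical, and the names you pass must be distribution names as installed, for example scikit-learn rather than sklearn.

```python
from importlib.metadata import PackageNotFoundError, version

def report_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None  # not installed in the active environment
    return found

if __name__ == "__main__":
    core = ["pandas", "numpy", "matplotlib", "seaborn", "scikit-learn"]
    for name, ver in report_versions(core).items():
        print(f"{name:<14} {ver or 'not installed'}")
```

Because the check only reads metadata and does not import anything, it is fast. It also reports on whatever environment the running interpreter belongs to, which becomes useful once you have more than one environment.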

Updating packages keeps you current with bug fixes and new features. Update a specific package with conda update package-name or update all packages with conda update --all. The latter is powerful but potentially time-consuming as it downloads many updates. Updating periodically, perhaps monthly, keeps your environment reasonably current without constant maintenance.

Creating and Managing Virtual Environments

Virtual environments solve a problem you will eventually encounter: different projects needing different versions of the same library. Perhaps you are working on two projects, one using an older tutorial that requires pandas version 1.0, and another using the latest pandas features from version 2.0. Installing both versions simultaneously in the same environment creates conflicts.

Virtual environments provide isolated spaces where each project can have its own package versions without interfering with others. Think of them as separate workspaces on your computer, each with its own set of installed libraries. Anaconda makes creating and managing environments straightforward.

To create a new environment, use the command conda create --name myenv python=3.11, where myenv is whatever name you choose and 3.11 is the Python version you want. Choose meaningful names that remind you what each environment is for, such as the project or tutorial it serves. Creating the environment installs Python and a few supporting packages such as pip, but none of the data science libraries.

Activate an environment to start using it with conda activate myenv. Your command prompt changes to show the active environment name in parentheses, like (myenv) at the start of the line. While an environment is active, any packages you install go into that environment only, not affecting your base environment or other environments.
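Activation works by setting environment variables, and that is how your prompt knows which name to show. The following sketch assumes the CONDA_DEFAULT_ENV and CONDA_PREFIX variables that conda sets on activation. It lets a script report which environment it was launched from. The function name `active_conda_env` is hypothetical.

```python
import os

def active_conda_env(environ=None):
    """Return (name, prefix) of the active conda environment, or None.

    Assumes conda's activation has set CONDA_DEFAULT_ENV and CONDA_PREFIX;
    outside an activated environment they are usually unset.
    """
    env = os.environ if environ is None else environ
    name = env.get("CONDA_DEFAULT_ENV")
    if not name:
        return None
    return name, env.get("CONDA_PREFIX", "")

if __name__ == "__main__":
    result = active_conda_env()
    if result is None:
        print("No conda environment appears to be active.")
    else:
        print(f"Active environment: {result[0]} ({result[1]})")
```

Accepting an optional mapping instead of always reading os.environ keeps the function easy to test without activating anything.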

Install packages needed for the project while the environment is active. For example, after activating a new environment, you might run conda install pandas numpy matplotlib jupyter to install common data science libraries. These installations exist only in this environment. When you later create another environment, you start fresh and install only what that project needs.

Deactivate an environment when you finish working with it using conda deactivate. This returns you to the base environment. You can have many environments on your system and switch between them as needed for different projects.

List all your environments with conda env list. This shows each environment’s name and location, with the active environment marked by an asterisk. Remove environments you no longer need with conda env remove --name myenv. An environment with many packages can occupy hundreds of megabytes or more, so clearing out stale ones frees meaningful disk space.

For beginners, you may not need multiple environments immediately. You can work in the base environment that comes with Anaconda for your initial learning. However, understanding environments from the start prepares you for when you do need them and builds good habits. Many tutorials and courses will mention environments, and now you will understand what they mean.

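Environment specifications can be saved to files that others can use to recreate your setup, which matters for collaboration and reproducibility. Export your environment with conda env export > environment.yml, and others can recreate it with conda env create -f environment.yml. The exported file lists every package with its exact version and build, so collaborators on the same operating system can reproduce your environment closely. Build details are often platform-specific, so when sharing across operating systems, conda env export --from-history > environment.yml is usually the better choice because it records only the packages you explicitly requested.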
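An environment file is short YAML with three main keys: name, channels, and dependencies. To make that structure concrete, this sketch renders a minimal file as text. The helper `render_environment_yml`, the conda-forge default channel, and the package list are illustrative assumptions. A hand-written file in this shape, trimmed to the packages you actually need, is also a common way to pin versions deliberately.

```python
def render_environment_yml(name, dependencies, channels=("conda-forge",)):
    """Render a minimal conda environment file as YAML text.

    Hypothetical helper: it writes only the name, channels and dependencies
    keys, which is enough for `conda env create -f environment.yml`.
    """
    lines = [f"name: {name}", "channels:"]
    lines += [f"  - {channel}" for channel in channels]
    lines.append("dependencies:")
    lines += [f"  - {dep}" for dep in dependencies]
    return "\n".join(lines) + "\n"

if __name__ == "__main__":
    text = render_environment_yml(
        "tutorial-env",
        ["python=3.11", "pandas>=2.0", "numpy", "matplotlib", "jupyter"],
    )
    print(text)
```

Saving the printed text as environment.yml and running conda env create -f environment.yml would build an environment named tutorial-env with those packages.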

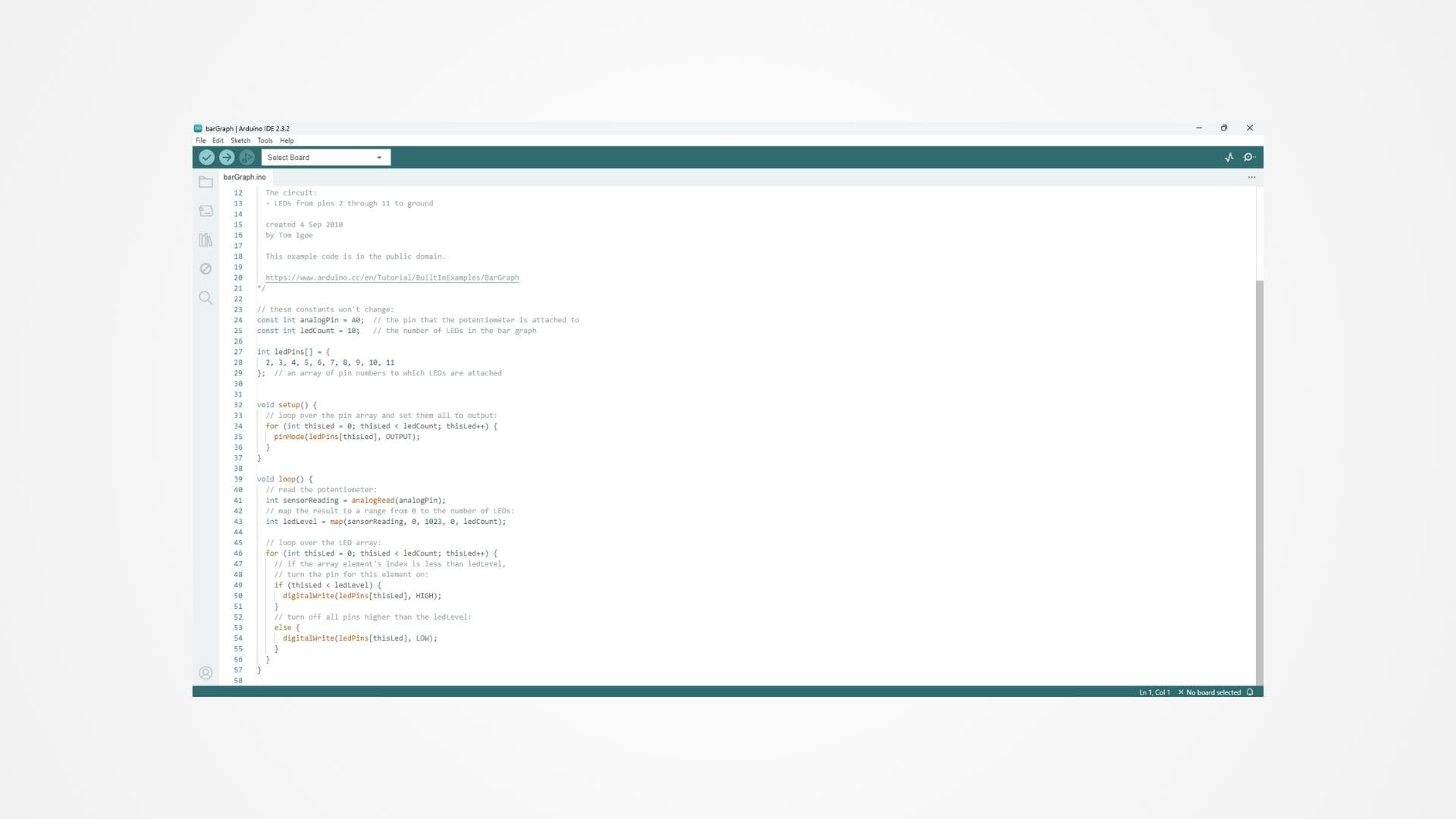

Choosing and Setting Up a Code Editor

While Jupyter notebooks handle most data science work, you sometimes need a traditional code editor for writing Python scripts, creating modules, or working with non-notebook files. Several excellent options exist, from lightweight editors to full integrated development environments.

Visual Studio Code has become extremely popular among Python developers and data scientists. Microsoft distributes it free of charge for Windows, Mac, and Linux, and its core is open source. VS Code is lightweight and fast while offering powerful features through extensions.

To set up VS Code for Python, first download and install it from the official website. Open VS Code and go to the Extensions panel by clicking its icon (four small squares) in the left sidebar or pressing Ctrl+Shift+X (Cmd+Shift+X on Mac). Search for “Python” and install the official Python extension from Microsoft, which adds Python language support, debugging, and code completion. Notebook support comes from Microsoft’s Jupyter extension; install it the same way if VS Code does not add it for you.

VS Code can open and edit Jupyter notebooks directly, providing an alternative to the browser interface some users prefer. The integration feels seamless, letting you work with notebooks alongside Python scripts in the same editor. The Python extension also includes a Python environment selector, letting you choose which conda environment to use for your work.

PyCharm is a full IDE from JetBrains specifically designed for Python development. It offers two versions: Professional, which costs money but includes advanced data science features, and Community Edition, which is free but more limited. For learning data science, Community Edition suffices. PyCharm provides intelligent code completion, integrated debugging, and project management features.

PyCharm has a steeper learning curve than VS Code because it includes more features and opinions about how you should structure projects. However, once you learn it, PyCharm can make you very productive. The data science features in the Professional version include native Jupyter notebook support and database tools that some users find valuable.

Spyder comes bundled with Anaconda and provides an IDE specifically designed for scientific computing and data science. Its interface resembles MATLAB or RStudio, with panels for code editing, variable inspection, and plotting. For users coming from those tools, Spyder feels familiar. Launch it through Anaconda Navigator.

Spyder integrates tightly with IPython, the interactive Python shell underlying Jupyter, providing similar interactive capabilities in a desktop application rather than browser interface. Some users strongly prefer Spyder’s desktop application feel to Jupyter’s browser-based interface. The variable explorer lets you examine data frames and arrays graphically, which can be helpful when learning.

For absolute beginners, I recommend starting with Jupyter Notebook for interactive analysis and adding VS Code when you need to write scripts or organize larger projects. This combination covers most use cases well while keeping complexity manageable. As you gain experience, you can explore other tools and settle on whatever best matches your workflow preferences.

Setting Up Git for Version Control

Version control tracks changes to your code over time, allowing you to experiment safely, collaborate with others, and maintain a history of your work. Git has become the standard version control system, and GitHub provides popular hosting for Git repositories. While not strictly required for learning data science basics, setting up Git early builds good professional habits.

Installing Git differs by operating system. Windows users should download Git for Windows from the official Git website. The installer includes Git Bash, a terminal emulator that provides a Unix-like command-line interface. During installation, accept most defaults, but when asked about adjusting your PATH environment, choose “Git from the command line and also from 3rd-party software.” This makes Git available from any terminal.

Mac users can install Git using Homebrew, a package manager for Mac. If you do not have Homebrew, install it first by following the instructions on its website. Then run brew install git in Terminal. Alternatively, the Xcode Command Line Tools include Git: typing git --version in Terminal prompts macOS to install them if they are missing.

Linux users likely already have Git installed or can install it through their distribution’s package manager. On Ubuntu or Debian, use sudo apt-get install git. On Fedora, use sudo dnf install git. Verify installation by typing git --version in your terminal.

After installing Git, configure it with your name and email. Open your terminal and run these commands, replacing the example values with your actual information:

```bash
git config --global user.name "Your Name"
git config --global user.email "your.email@example.com"
```

These settings identify you as the author of the commits you make. The --global flag applies them to all repositories on your computer.

Create a GitHub account if you do not have one by visiting github.com and signing up. GitHub hosts your code repositories online, enabling backup and collaboration. After creating your account, you should set up SSH keys for secure authentication, though you can also use HTTPS authentication with a personal access token.

Learning Git itself is a separate skill that deserves dedicated study. For now, understanding that version control exists and having it installed prepares you for when tutorials or projects use it. Many data science courses now include Git in their workflows, and having it ready avoids installation interruptions mid-lesson.

Testing Your Setup with Your First Data Analysis

With everything installed, verify your setup works by conducting a simple data analysis. This test confirms that all components function together and gives you practice with the workflow.

Open Jupyter Notebook and create a new Python 3 notebook. In the first cell, import essential libraries:

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
```

Run the cell with Shift+Enter. If no errors appear, your libraries are installed correctly. An error such as ModuleNotFoundError means a library is missing from the environment the notebook is using, and you need to resolve that before continuing.
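If you would rather check every library in one pass instead of stopping at the first failed import, a small helper can try each import and collect the failures. This is a minimal sketch using importlib from the standard library. `find_missing_modules` is a hypothetical name, and it takes import names such as sklearn, not installation names such as scikit-learn.

```python
import importlib

def find_missing_modules(module_names):
    """Return the modules from module_names that fail to import."""
    missing = []
    for name in module_names:
        try:
            importlib.import_module(name)
        except ImportError:  # also catches ModuleNotFoundError
            missing.append(name)
    return missing

if __name__ == "__main__":
    stack = ["pandas", "numpy", "matplotlib.pyplot", "seaborn"]
    problems = find_missing_modules(stack)
    print("All imports succeeded." if not problems else f"Missing: {problems}")
```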

In the next cell, create a simple dataset to analyze:

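```python
# Create sample data
np.random.seed(42)

data = {
    'category': ['A', 'B', 'C', 'D'] * 25,
    'values': np.random.randn(100) * 10 + 50,
    'counts': np.random.randint(1, 100, 100)
}

df = pd.DataFrame(data)
df.head()
```

This creates a pandas DataFrame with 100 rows of random data and displays the first five. Repeating the four category labels 25 times produces 100 entries, matching the 100 generated values and counts, because every DataFrame column must have the same length. You should see a nicely formatted table, which confirms that pandas and numpy work correctly.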
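The call np.random.seed(42) fixes the starting state of NumPy's random number generator, so this "random" data comes out identical every time you run the notebook. The sketch below shows the same idea with Python's built-in random module. That module is a stand-in chosen to keep the example dependency-free, so its numbers differ from NumPy's. The mean of 50 and standard deviation of 10 mirror randn(100) * 10 + 50, and `sample_values` is a hypothetical name.

```python
import random

def sample_values(seed, n=5):
    """Draw n pseudo-random normal values (mean 50, sd 10) from a seeded generator."""
    rng = random.Random(seed)  # independent generator with a fixed starting state
    return [rng.gauss(50, 10) for _ in range(n)]

if __name__ == "__main__":
    first = sample_values(42)
    second = sample_values(42)
    print(first == second)  # True: same seed, same sequence
    print(first == sample_values(7))  # a different seed gives different values
```

Seeding matters whenever you want a tutorial, a bug report, or an analysis to be reproducible by someone else.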

Create a visualization:

```python
# Create a simple plot
plt.figure(figsize=(10, 6))
sns.boxplot(data=df, x='category', y='values')
plt.title('Values by Category')
plt.show()
```

Running this cell should display a box plot showing the distribution of values across categories. The plot appearing confirms matplotlib and seaborn work correctly.

Calculate summary statistics:

```python
# Summary statistics
df.groupby('category')['values'].describe()
```

This produces a table of statistical summaries for each category. If everything displays correctly, your entire data science stack is functioning properly.
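Under the hood, groupby follows a split-apply-combine pattern. It splits the rows by category, computes statistics on each group, and combines the results into one table. This plain-Python sketch mirrors a few of the columns describe() reports (count, mean, std, min, max). It illustrates the idea rather than how pandas implements it, and `summarize_by_group` is a hypothetical name.

```python
from collections import defaultdict
from statistics import mean, stdev

def summarize_by_group(categories, values):
    """Split values by category, then compute summary statistics per group."""
    groups = defaultdict(list)
    for category, value in zip(categories, values):  # split
        groups[category].append(value)
    summary = {}
    for category, group in sorted(groups.items()):  # apply, then combine
        summary[category] = {
            "count": len(group),
            "mean": mean(group),
            # sample standard deviation (n - 1), as pandas' describe() uses
            "std": stdev(group) if len(group) > 1 else float("nan"),
            "min": min(group),
            "max": max(group),
        }
    return summary

if __name__ == "__main__":
    cats = ["A", "B", "A", "B", "A"]
    vals = [10.0, 20.0, 14.0, 22.0, 12.0]
    for cat, stats in summarize_by_group(cats, vals).items():
        print(cat, stats)
```

For group A above, the values 10, 14, and 12 give a mean of 12 and a sample standard deviation of 2. The pandas one-liner computes the same quantities for every category at once.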

If all these cells execute without errors and produce expected outputs, congratulations! Your data science development environment is fully functional. You can now work through tutorials, take courses, or start your own analysis projects with confidence that your tools work correctly.

Troubleshooting Common Setup Issues

Even following instructions carefully, you might encounter problems during setup. Let me address common issues and their solutions.

If Anaconda installation fails or freezes on Windows, temporarily disable antivirus software during installation, since some antivirus programs interfere with installers. Remember to re-enable it once installation completes. If you chose to install for all users, you also need administrative privileges, because that option writes to system directories. A just-for-me install into your user directory does not need them.

On Mac, if you see permission errors during installation, make sure you are installing to your home directory rather than system directories. The installer should default to your home directory, which does not require administrator permissions. If you must install to a system location, use sudo with command-line installation, though this is not recommended.

If Jupyter Notebook does not launch or shows errors about missing kernels, your Python environment may not be properly configured. Try running python -m ipykernel install --user from Anaconda Prompt or Terminal to register the Python kernel with Jupyter.

Package installation failures often result from network issues or missing dependencies. If a conda install command fails, try the conda-forge channel with conda install -c conda-forge package-name. If that also fails, pip install package-name may still work. For persistent network issues, check that your firewall allows conda to reach the package repositories.

Import errors when trying to use installed packages usually mean the package is installed in a different environment than the one you are using. Verify which environment is active with conda env list. Activate the correct environment, then launch Jupyter from within that environment so notebooks use the same environment’s packages.
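A quick way to see which environment a notebook is really using is to ask the kernel itself. Run the following in a notebook cell. It assumes conda's usual layout, where a named environment lives in a folder called envs/&lt;name&gt; and the base environment is the Anaconda installation folder itself. Environments created elsewhere with --prefix will not match this pattern. `kernel_env_name` is a hypothetical helper.

```python
import sys
from pathlib import Path

def kernel_env_name(prefix=None):
    """Guess the conda environment name from the interpreter prefix.

    Assumes conda's layout: named environments live in .../envs/<name>,
    so anything else is reported as "base (or non-conda)".
    """
    path = Path(prefix or sys.prefix)
    if path.parent.name == "envs":
        return path.name
    return "base (or non-conda)"

print("Interpreter:", sys.executable)
print("Environment:", kernel_env_name())
```

If the name printed here differs from the environment where you installed the package, the import error is explained. Relaunch Jupyter from the correct environment.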

On Windows, if commands like conda or jupyter are not recognized in Command Prompt, you are likely using regular Command Prompt instead of Anaconda Prompt. Anaconda Prompt is a special terminal configured to find Anaconda commands. Find it in your Start menu under Anaconda.

Memory errors during package installation on systems with limited RAM can be resolved by closing other applications to free memory. Large package installations can be memory-intensive. Alternatively, install packages individually rather than all at once to reduce memory requirements.

Maintaining Your Environment Over Time

After initial setup, your development environment needs occasional maintenance to stay current and functional. Building good maintenance habits prevents problems before they occur.

Update Anaconda itself periodically with conda update conda followed by conda update anaconda. This updates the conda package manager and then the Anaconda distribution. Major releases arrive a few times per year, with minor updates more often, so running these commands about once a month is usually enough.

Update individual packages when you need new features or bug fixes with conda update package-name. Be aware that updates can sometimes break existing code if the package changed its API. For learning and experimentation, staying current is fine. For important projects, you might want to pin specific versions to ensure reproducibility.

Clean up package caches periodically to free disk space. Conda stores downloaded packages in a cache directory that grows over time. Run conda clean --all to remove cached files you no longer need. This can free several gigabytes on systems where you have installed many packages.
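To see how much space the package cache uses before you clean it, you can total the file sizes in its folder. The cache location varies by installation. It is often a pkgs folder inside your Anaconda directory, and conda info lists the package cache paths, so the path below is an assumption you should adjust. `folder_size_bytes` is a hypothetical helper.

```python
import os
from pathlib import Path

def folder_size_bytes(folder):
    """Sum the sizes of all regular files under folder (symlinks are skipped)."""
    total = 0
    for root, _dirs, files in os.walk(folder):
        for filename in files:
            path = os.path.join(root, filename)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total

if __name__ == "__main__":
    # Assumed default location; replace with the path `conda info` reports.
    cache = Path.home() / "anaconda3" / "pkgs"
    if cache.is_dir():
        print(f"{cache}: {folder_size_bytes(cache) / 1e9:.2f} GB")
    else:
        print(f"No folder at {cache}; check `conda info` for the cache path.")
```

Conda hard-links many cached files into your environments, so this total can overstate what conda clean --all will actually free.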

Back up important notebooks and code regularly. While Jupyter autosaves, hardware failures or accidental deletions can lose work. Use cloud storage, Git repositories, or simple file backups to protect your work. Making this a habit prevents devastating losses.
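For a simple file backup, a short script can copy every notebook in a project folder into a time-stamped backup folder. This is a minimal sketch using only the standard library. The function name `backup_notebooks` is hypothetical, and it skips Jupyter's .ipynb_checkpoints folders.

```python
import shutil
from datetime import datetime
from pathlib import Path

def backup_notebooks(source_dir, backup_root):
    """Copy all .ipynb files under source_dir into a new time-stamped folder.

    Jupyter's .ipynb_checkpoints folders are skipped, and the project's
    relative folder structure is preserved inside the backup.
    """
    source = Path(source_dir)
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target_root = Path(backup_root) / f"notebooks-{stamp}"
    copied = []
    for notebook in source.rglob("*.ipynb"):
        if ".ipynb_checkpoints" in notebook.parts:
            continue
        destination = target_root / notebook.relative_to(source)
        destination.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(notebook, destination)  # copy2 also preserves file timestamps
        copied.append(destination)
    return copied
```

A call such as backup_notebooks("my-project", "backups") copies the notebooks and returns their new paths, where both folder names are placeholders for your own. Keep the backup folder outside the project folder, otherwise later runs would copy earlier backups too.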

Periodically review your installed environments and remove ones you no longer use with conda env remove --name environment-name. Unused environments consume disk space unnecessarily. Keeping only active environments keeps your system cleaner and makes it easier to remember which environments serve which purposes.

Conclusion

Setting up your data science development environment is the essential first step that enables everything else you will learn. While the process involves multiple components and can seem overwhelming initially, following systematic steps leads to a fully functional workspace ready for learning and analysis.

Anaconda provides the smoothest path to a working environment by bundling Python, essential libraries, conda package manager, and Jupyter Notebook in one installation. This single download eliminates most setup headaches that plague beginners trying to configure environments manually. Jupyter Notebook gives you an interactive interface perfect for exploratory data analysis and learning. Optional tools like code editors and version control enhance your capabilities as you advance.

The time invested in proper setup pays dividends immediately by eliminating technical friction that would otherwise interrupt learning. With a working environment, you can focus on actually doing data science rather than fighting with installation issues. The skills you develop managing packages, environments, and tools transfer to any data science work you do throughout your career.

Now that your development environment is ready, you can begin your practical data science journey. In the next article, we will write our first Python code specifically for data science, learning to work with variables, data types, and basic operations that form the foundation of all data analysis. You will start seeing how Python enables you to work with data programmatically.

Key Takeaways

Anaconda provides the most beginner-friendly path to a working data science environment by bundling Python, hundreds of libraries, the conda package manager, and Jupyter Notebook in one installation. This eliminates the complexity of installing and configuring components individually and handles the complex dependencies that plague manual installations.

Jupyter Notebook creates interactive documents combining code, results, and documentation in one place, making it ideal for exploratory data analysis and learning. The browser-based interface allows writing code, executing it immediately, seeing results inline, and adding explanatory text all in one document that you can save and share.

Virtual environments provide isolated spaces where different projects can use different package versions without conflicts, solving the problem of incompatible dependencies between projects. While beginners can start with the base environment, understanding environments early builds good habits and prepares you for when you need them.

Installing additional packages uses conda as the preferred package manager for data science libraries, with pip as a fallback when packages are not available through conda. The conda package manager handles complex dependencies better than pip, particularly for libraries that depend on non-Python components.

Regular maintenance including updating packages, cleaning caches, and backing up work keeps your environment functional and protects against data loss. Building these habits early prevents problems before they occur and ensures you can focus on data science rather than technical troubleshooting.