Introduction

You have spent months learning data science, building portfolio projects, and polishing your resume. Now you have finally landed an interview for a data science position, and a mixture of excitement and anxiety washes over you. What will they ask? How technical will it be? What if you freeze up when faced with a coding challenge? These worries are completely normal, and the good news is that data science interviews, while challenging, follow predictable patterns that you can prepare for systematically.

Understanding what to expect in data science interviews and how to prepare effectively can transform your anxiety into confidence. Unlike some fields where interview questions vary wildly, data science interviews tend to cover a core set of topics that appear repeatedly across different companies and roles. Interviewers want to assess whether you understand fundamental concepts, can apply techniques appropriately, think analytically about problems, and communicate technical ideas clearly. Each of these dimensions can be prepared for through focused study and practice.

The challenge for beginners is knowing what to prioritize in your preparation. Data science is a broad field touching statistics, programming, machine learning, data manipulation, visualization, and domain knowledge. You could spend months studying every possible topic and still feel unprepared. The key is understanding what interviewers actually test and focusing your preparation on the concepts and skills that appear most frequently across interviews.

This comprehensive guide will walk you through everything you need to know to prepare for data science interviews as a beginner. We will explore the different types of questions you will encounter, from conceptual questions about statistics and machine learning to hands-on coding challenges and case studies. For each category, you will learn what interviewers are really assessing, how to structure your answers effectively, and what specific concepts to review. By the time you finish this guide, you will have a clear roadmap for interview preparation that maximizes your chances of success.

Remember that interview performance improves dramatically with practice. Your first few interviews may feel rough as you learn to think on your feet and articulate your knowledge under pressure. Each interview experience, whether it results in an offer or not, teaches you something valuable and makes you stronger for the next opportunity. Let’s begin building the knowledge and confidence you need to excel in data science interviews.

Understanding the Interview Landscape

Before diving into specific questions and preparation strategies, it helps to understand the overall structure of data science interview processes and what different types of companies are looking for. This big picture perspective helps you tailor your preparation and know what to expect at each stage.

Most data science interview processes consist of multiple rounds, each designed to evaluate different aspects of your capabilities. The initial screening typically happens through a phone or video call with a recruiter or hiring manager. This conversation assesses your background, interest in the role, and basic qualifications. While not deeply technical, this screening often includes fundamental questions about data science concepts to verify that you have genuine knowledge rather than just buzzwords on your resume.

If you pass the initial screening, you move to technical rounds that dig deeper into your skills. These interviews might be conducted by data scientists, machine learning engineers, or technical leads from the team you would join. Technical rounds often combine different question types including conceptual questions about statistics and machine learning, coding challenges that test your programming ability, case studies or problem-solving scenarios, and deep dives into your portfolio projects or previous work.

The specific emphasis varies significantly across companies and roles. A startup with a small data team might focus heavily on your ability to work independently and ship solutions quickly, asking practical questions about how you would approach real business problems. A large tech company might include rigorous coding assessments similar to software engineering interviews, expecting you to implement algorithms efficiently and discuss computational complexity. A research-oriented role might dive deep into statistical theory or the mathematics behind machine learning algorithms.

Understanding what type of company you are interviewing with helps you calibrate your preparation. Research the company before your interview to understand their data science maturity, what problems their data teams work on, and what technologies they use. This context allows you to emphasize the most relevant aspects of your background and demonstrate genuine interest in their specific challenges.

The interview conversation itself serves multiple purposes beyond just testing your knowledge. Interviewers are assessing your technical competence, certainly, but they are also evaluating how you think through problems, communicate complex ideas, handle uncertainty and ambiguity, respond to feedback and hints, and collaborate during problem-solving. This means that how you approach questions matters as much as whether you arrive at correct answers.

When you encounter a question you cannot answer immediately, the interviewer wants to see how you handle that challenge. Do you give up immediately, or do you work through the problem methodically? Do you explain your thought process, or do you sit in silence? Do you make reasonable assumptions and approximations, or do you get stuck demanding perfect information? These behaviors reveal how you would work as part of their team, making them critical to interview success beyond pure knowledge.

For beginners, it is especially important to manage expectations about what interviewers expect. They generally do not expect you to know everything or to answer every question perfectly. What they look for is solid understanding of fundamentals, ability to reason through problems logically, honesty about what you do and do not know, and enthusiasm for learning. Admitting when you are unsure about something, then making a thoughtful attempt to reason through it, often impresses interviewers more than confidently stating incorrect information.

Statistics and Probability Questions

Statistics forms the theoretical foundation of data science, and interviewers frequently test your statistical understanding through both conceptual questions and applied scenarios. For beginners, focusing on core statistical concepts that appear repeatedly in interviews provides the best return on preparation time.

Probability concepts appear in many interview questions because they underlie how we quantify uncertainty in data science. You should be comfortable explaining what probability distributions are and why they matter. A probability distribution describes how likely different outcomes are for a random variable. For instance, if you flip a fair coin many times, you would expect roughly half the flips to be heads and half to be tails, which follows a binomial distribution. Understanding common distributions like the normal distribution, which describes many natural phenomena, or the Poisson distribution, which models count data, allows you to recognize when certain analytical approaches apply.

Interviewers might ask you to explain the central limit theorem, which is one of the most important concepts in statistics. The central limit theorem states that when you take the average of many independent random samples, those sample averages will follow a normal distribution, even if the original data does not follow a normal distribution. This theorem is powerful because it allows us to make inferences about populations based on samples. When an interviewer asks about this, they want to hear that you understand both what the theorem states and why it matters for real data science work. You might explain that the central limit theorem is why we can use normal distribution-based methods for hypothesis testing even when our raw data is not normally distributed, as long as we have enough samples.

Hypothesis testing questions appear frequently because testing whether observed effects are real or due to chance is fundamental to data science. You should be able to explain what a p-value means in simple terms. A p-value represents the probability of observing results at least as extreme as what you saw if there were actually no real effect. Smaller p-values suggest that your observed result is unlikely to be due to random chance alone. When interviewers ask about p-values, they are often checking whether you understand common misconceptions. You should know that a p-value does not tell you the probability that your hypothesis is true, and that statistical significance does not necessarily mean practical importance.

Type I and Type II errors come up regularly in interviews because they represent fundamental tradeoffs in statistical decision making. A Type I error occurs when you conclude there is an effect when there actually is not, like a false positive in medical testing. A Type II error happens when you fail to detect an effect that truly exists, like missing a real disease in a patient. Interviewers want to see that you understand these errors exist, why they matter, and how different applications might care more about preventing one type versus the other. For instance, in fraud detection, you might tolerate more false positives because missing actual fraud is very costly.

Confidence intervals represent another core concept that interviewers use to assess statistical understanding. You should be able to explain that a ninety-five percent confidence interval means that if you repeated your sampling process many times, about ninety-five percent of the confidence intervals you calculate would contain the true population parameter. This is subtly different from saying there is a ninety-five percent chance the true value falls in your specific interval. Interviewers appreciate when you demonstrate understanding of these nuances, as they indicate deeper statistical thinking.

Questions about correlation and causation test whether you understand one of the most important distinctions in data analysis. You should be ready to explain that correlation means two variables move together, while causation means one variable actually causes changes in the other. When you observe that ice cream sales and drowning deaths both increase in summer, that is correlation. The heat causes both phenomena independently rather than ice cream sales causing drownings. Interviewers want to see that you would not jump to causal conclusions from observational data without proper experimental design or causal inference techniques.

Bias and variance represent key concepts in machine learning that have statistical foundations. You should understand that bias refers to systematic errors where your model consistently misses the true pattern, while variance refers to how much your model predictions would change if you trained on different data. High bias means your model is too simple and underfits the data. High variance means your model is too complex and overfits to training data noise. When interviewers ask about this tradeoff, they want to hear that you understand both concepts and can discuss how different modeling choices affect each.

When approaching statistical questions in interviews, always explain your reasoning clearly rather than just stating facts. Walk the interviewer through your thought process. If asked about when to use a particular statistical test, discuss the assumptions that test makes about the data and what problem it is designed to solve. This demonstration of conceptual understanding matters more than memorizing formulas or procedures.

Practice explaining statistical concepts to non-technical friends or family before your interview. If you can make someone without a statistics background understand what a confidence interval means, you will certainly be able to explain it clearly to an interviewer. This practice also helps you develop intuitive explanations rather than relying on technical jargon that might obscure whether you truly understand the concept.

Machine Learning Fundamentals

Machine learning questions form the technical core of most data science interviews. Interviewers want to verify that you understand how major algorithms work, when to apply different techniques, and how to evaluate model performance. For beginners, focusing on the most commonly used algorithms and their practical applications provides solid interview preparation.

Supervised learning questions dominate interviews because most business applications of machine learning involve prediction from labeled examples. You should be thoroughly comfortable discussing both regression and classification problems. When asked about linear regression, demonstrate that you understand it models the relationship between features and a continuous target variable by finding the best linear fit. Explain that it assumes a linear relationship between inputs and output, and that you can assess fit quality using metrics like mean squared error or R-squared. Be ready to discuss when linear regression works well and when its assumptions might be violated.

Logistic regression appears constantly in interviews despite its name suggesting regression, because it actually handles classification problems. You should explain that logistic regression predicts probabilities that an example belongs to a particular class by transforming a linear combination of features through the logistic function, which constrains outputs between zero and one. Interviewers often ask why you would use logistic regression versus more complex models. Good answers mention that logistic regression is interpretable, trains quickly, works well when the relationship between features and log-odds of the outcome is roughly linear, and provides probability estimates rather than just class predictions.

Decision trees merit deep understanding because they appear frequently themselves and form the basis for powerful ensemble methods. You should explain that decision trees split data recursively based on feature values to create increasingly homogeneous groups. They are intuitive, handle both numerical and categorical features naturally, and provide clear feature importance scores showing which variables most influence predictions. Be prepared to discuss limitations including their tendency to overfit training data and their instability where small data changes can produce very different trees.

Random forests build on decision trees by combining many trees trained on different random subsets of data and features. When interviewers ask about random forests, they want to hear that you understand the ensemble approach where many weak learners together create a strong learner. Explain that random forests reduce overfitting compared to individual trees, handle high-dimensional data well, and provide robust performance across many problem types. Discuss how the randomness in selecting features for each split creates diversity among trees, which improves overall predictions when their results are averaged or voted on.

Gradient boosting methods like XGBoost and LightGBM dominate many Kaggle competitions and real-world applications, making them important interview topics. You should understand that boosting trains models sequentially, with each new model focusing on correcting errors made by previous models. This differs from random forests, which train trees independently in parallel. Gradient boosting often achieves excellent performance but requires careful tuning and can overfit if not properly regularized. When discussing boosting, mention that it is powerful but less interpretable than simpler models and can be sensitive to hyperparameter choices.

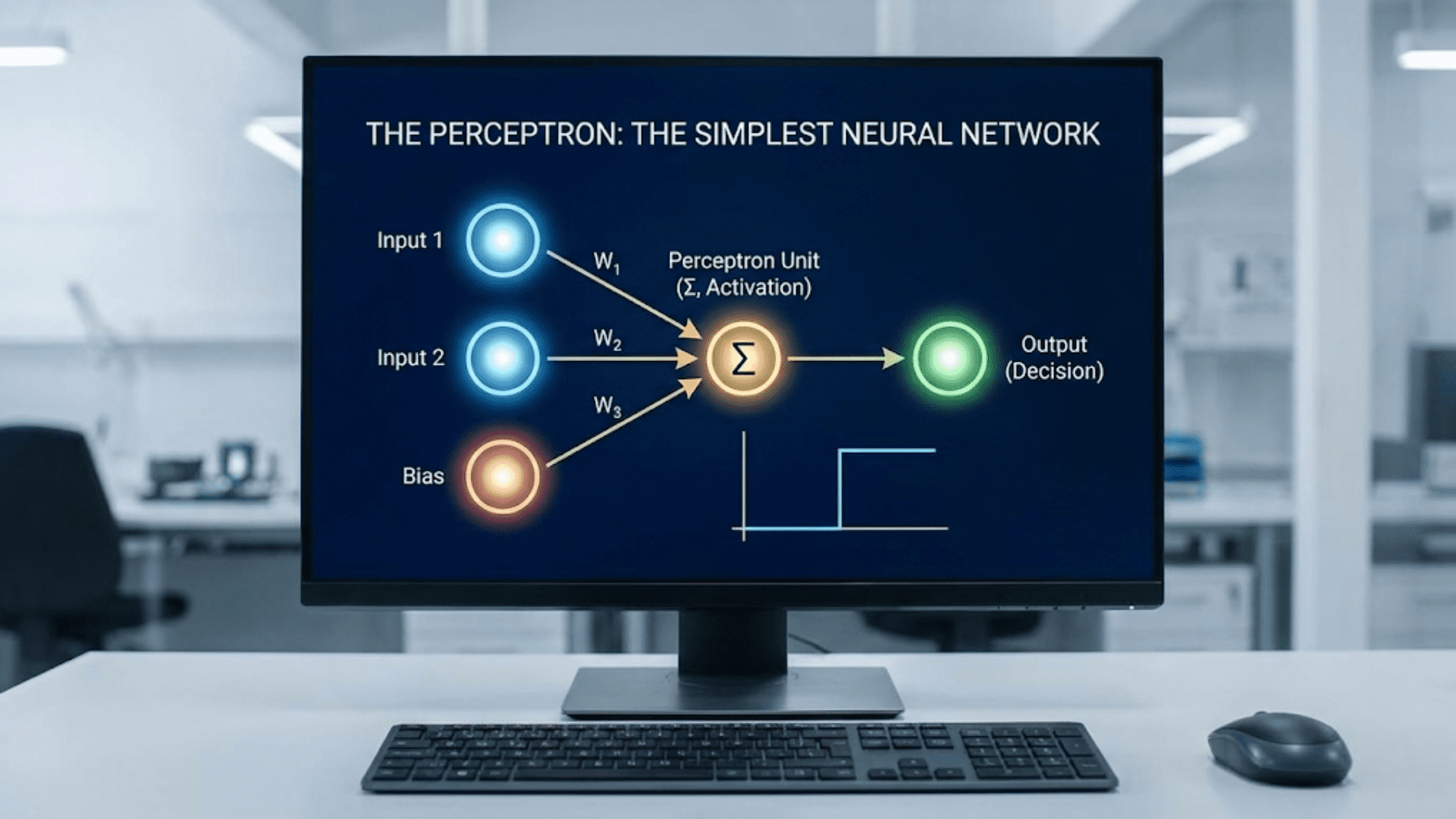

Neural networks and deep learning generate interest, but for entry-level interviews, you generally need only high-level understanding unless applying specifically for deep learning roles. Be able to explain that neural networks consist of layers of connected nodes where each connection has a weight that gets adjusted during training. The network learns by using backpropagation to calculate how to adjust weights to reduce prediction errors. Understand that deep learning refers to neural networks with many layers, and that these deep networks excel at learning complex patterns in large datasets, particularly for images, text, and audio.

Support vector machines appear less frequently in modern interviews but still surface occasionally. You should know that SVMs find the hyperplane that best separates different classes by maximizing the margin between classes. They work well in high-dimensional spaces and with clear class separation but can be slow to train on large datasets. The kernel trick allows SVMs to find nonlinear decision boundaries, extending their applicability.

Clustering questions test your understanding of unsupervised learning. K-means clustering is the most common algorithm interviewers ask about. Explain that K-means partitions data into K clusters by iteratively assigning points to the nearest cluster center and then recalculating centers based on assigned points. It is fast and simple but requires specifying the number of clusters beforehand and can get stuck in local optima. Discuss how you might choose K using methods like the elbow method or silhouette analysis.

Dimensionality reduction comes up when discussing how to handle high-dimensional data. Principal component analysis transforms correlated features into uncorrelated principal components that capture most of the variance in the data. The first few principal components often capture most important patterns, allowing you to reduce dimensions while retaining key information. Explain that dimensionality reduction helps with visualization, speeds up training, and can reduce overfitting by eliminating noisy features.

When answering machine learning questions, always connect algorithms to practical use cases. Do not just describe how an algorithm works, explain when you would choose it over alternatives. If discussing random forests, mention they work well for tabular data with mixed feature types and provide good baseline performance. This practical framing shows you think about machine learning as a tool for solving problems rather than an academic exercise.

Prepare to discuss the complete machine learning workflow, not just individual algorithms. Interviewers often ask how you would approach a problem from start to finish. Walk through defining the problem clearly, exploring and understanding the data, cleaning and preprocessing data appropriately, engineering useful features, selecting appropriate algorithms to try, training models with proper validation, evaluating performance using relevant metrics, and iterating to improve results. This end-to-end perspective demonstrates that you understand machine learning in context rather than in isolation.

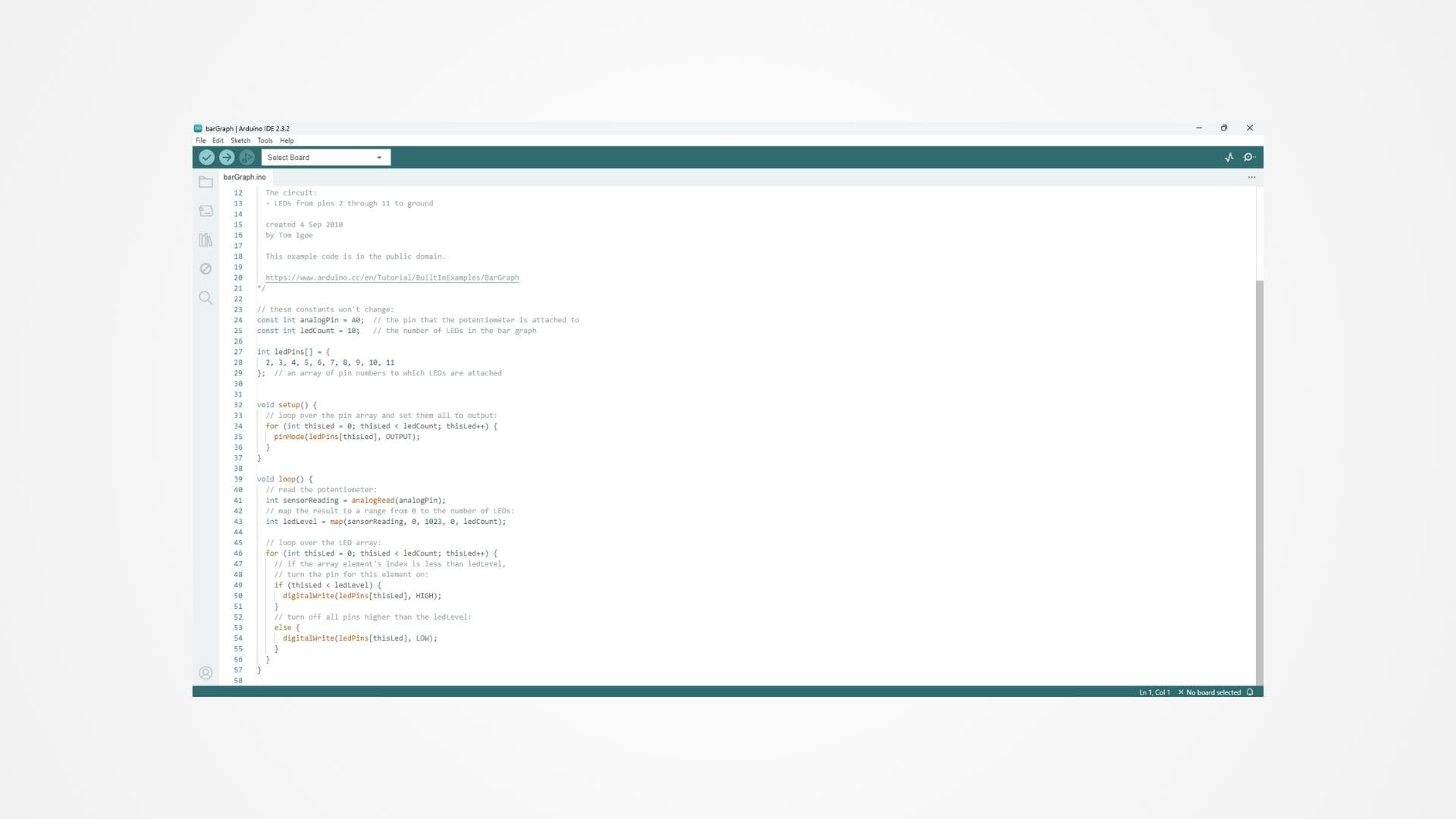

Coding and Programming Challenges

Coding questions in data science interviews test your ability to manipulate data, implement algorithms, and solve problems programmatically. While typically less intense than software engineering interviews, data science coding challenges still require solid programming fundamentals and comfort with key libraries.

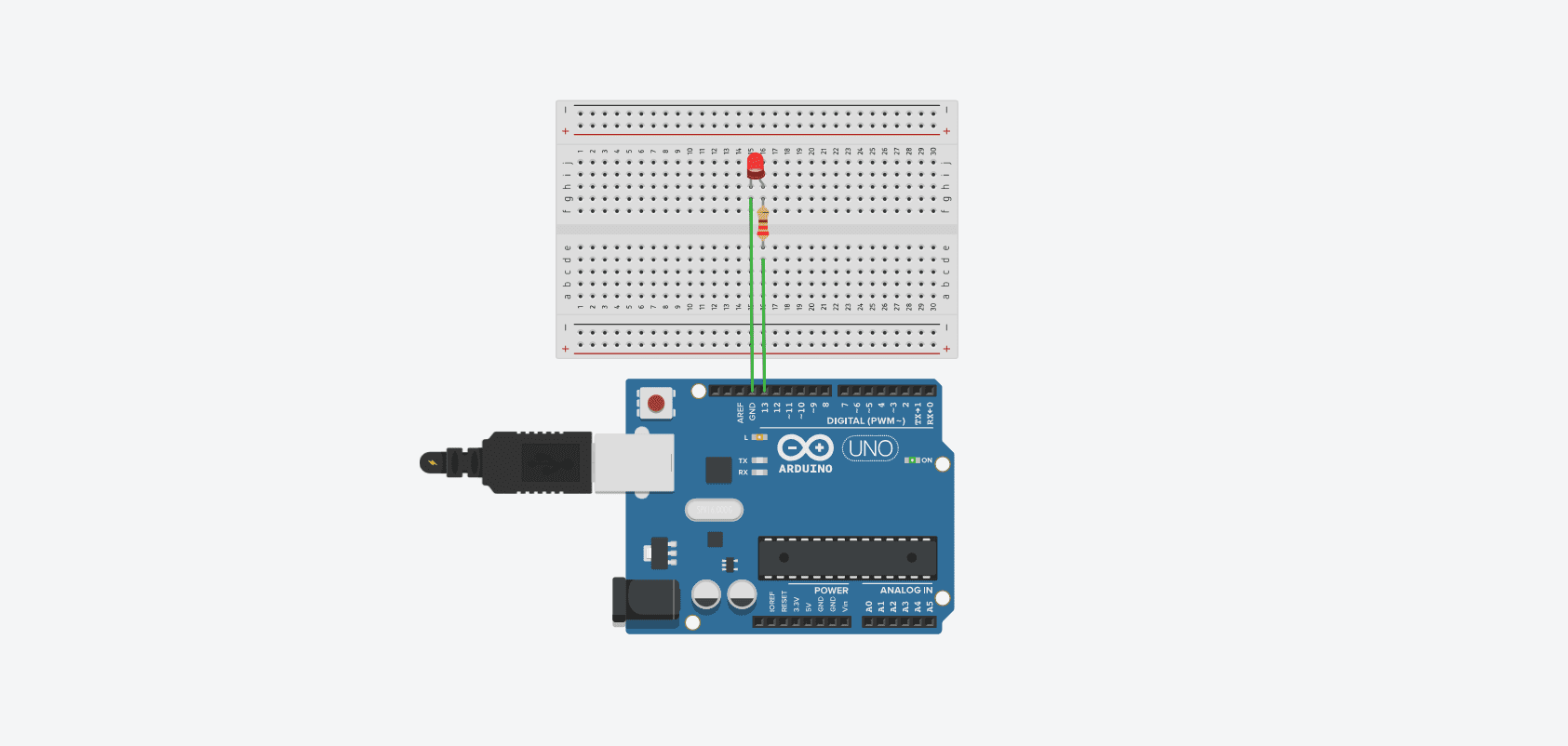

Python dominates data science interviews, so your preparation should focus heavily on Python unless you are specifically applying to R-focused roles. You should be fluent in fundamental Python including working with lists, dictionaries, and other built-in data structures, writing functions that encapsulate logic cleanly, using control flow with if statements and loops appropriately, handling errors gracefully when they occur, and reading and understanding error messages to debug problems.

Interviewers frequently ask you to manipulate data using pandas, testing whether you can perform common data wrangling tasks. You should practice selecting rows and columns using various indexing methods, filtering data based on conditions, creating new calculated columns, handling missing values by dropping or imputing them, grouping data and computing aggregates within groups, merging or joining multiple datasets together, and reshaping data between wide and long formats.

A common interview question might ask you to find the top K products by sales from a sales dataset. This tests whether you can group by product, sum sales, sort results, and select the top K. Practice these types of questions so you can complete them fluently without fumbling with syntax. The interviewer cares less about whether you remember the exact pandas method names and more about your logical approach to solving the problem and your ability to figure out syntax details.

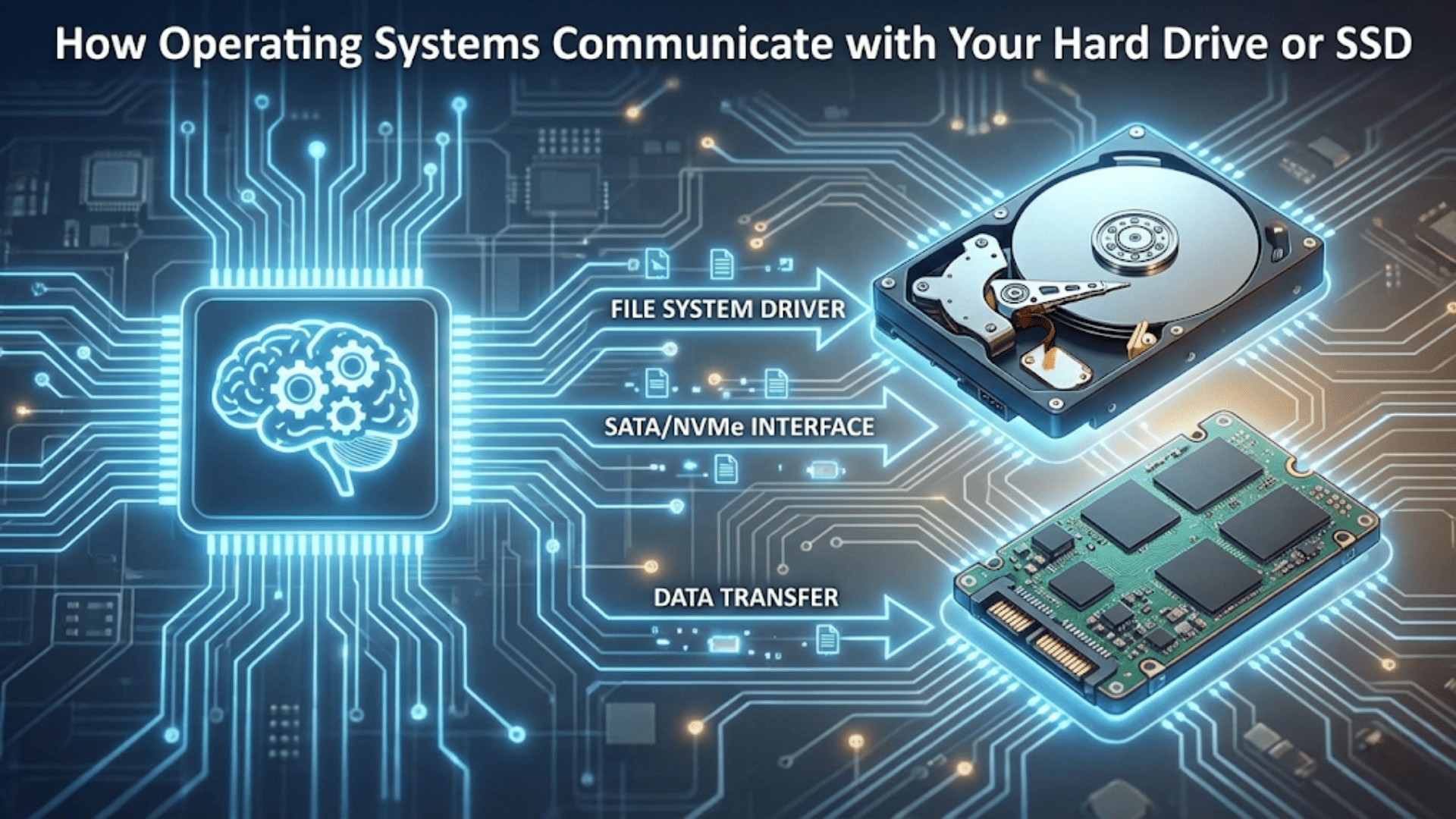

SQL often appears in data science interviews because most real-world data lives in databases. You should be comfortable writing queries that select specific columns from tables, filter rows using WHERE clauses, join multiple tables using appropriate join types, aggregate data with GROUP BY clauses, sort results using ORDER BY, and combine queries using subqueries or common table expressions. Practice translating business questions into SQL queries, as interviews often pose problems like “find customers who made purchases in the last month but not this month” that require you to construct appropriate queries.

Algorithm implementation questions test your understanding of how methods work internally. You might be asked to implement a basic algorithm like K-nearest neighbors from scratch. For KNN, you would need to calculate distances between the test point and all training points, find the K nearest neighbors, and aggregate their labels through majority voting for classification or averaging for regression. These questions assess whether you truly understand algorithms rather than just knowing how to call library functions.

Statistical simulation questions appear frequently because they test both programming and statistical thinking. A classic example asks you to simulate flipping a biased coin or rolling dice, then estimate probabilities from many trials. Another common question asks you to implement bootstrap resampling, where you repeatedly sample from your dataset with replacement and compute statistics on each sample. These problems verify that you understand random sampling, can implement iterative processes, and know how to estimate distributions through simulation.

Data manipulation challenges might ask you to transform data from one format to another. For instance, you might need to convert a dataset with one row per customer per month into one row per customer with monthly values in separate columns. Or you might need to unpivot wide data into long format suitable for time series analysis. These transformations test your comfort with data reshaping operations that are common in real data science work.

When approaching coding challenges, think aloud as you work through the problem. Explain your approach before diving into implementation. If you are asked to find duplicate values in a dataset, you might say “I could create a set of unique values and compare the set length to the original list length, or I could use pandas to group by all columns and filter for groups with counts greater than one.” This explanation shows your thought process and allows the interviewer to guide you if you are heading in an unproductive direction.

Write clean, readable code during interviews even though you might be working quickly. Use descriptive variable names rather than single letters. Add brief comments explaining non-obvious logic. Structure your code into logical chunks rather than writing one long block. This attention to code quality signals professional habits and makes it easier for interviewers to follow your work.

Test your code as you write it by walking through examples mentally or actually running it if the interview format allows. If asked to find the median of a list, test your implementation mentally with a small example like three values to verify it handles both odd and even length lists correctly. This testing habit catches bugs before the interviewer does and demonstrates thoroughness.

When you get stuck on a coding problem, do not sit in silence. Explain what you are trying to do and where you are stuck. Interviewers often provide hints if they see you are on the right track but missing a specific detail. Asking clarifying questions is encouraged, not a sign of weakness. Questions like “can I assume the input data is already sorted” or “what should I return if the list is empty” show you are thinking about edge cases and requirements.

Practice coding problems regularly before interviews using platforms like LeetCode, HackerRank, or Stratascratch that offer data science specific challenges. Time yourself solving problems to build comfort working under pressure. Review solutions to problems you struggled with to learn better approaches and expand your problem-solving toolkit. The confidence that comes from having practiced similar problems before makes interviews significantly less stressful.

Case Studies and Business Problem Solving

Case study questions assess your ability to translate ambiguous business problems into concrete analytical approaches. These questions do not have single correct answers but rather evaluate how you structure problems, what assumptions you make, and how you reason through uncertainty.

A typical case study might present a scenario like “our e-commerce company wants to reduce cart abandonment, how would you approach this with data science?” The interviewer wants to see your complete thought process from problem framing through potential solutions and evaluation approaches. This type of question reveals whether you understand data science as a tool for solving business problems rather than just a technical exercise.

When you encounter a case study, begin by asking clarifying questions to understand the problem better before diving into solutions. For cart abandonment, you might ask about what data is available on user behavior, whether there is information about why users abandon carts, what the current abandonment rate is, and what would be considered a successful reduction. These questions demonstrate that you gather requirements before proposing solutions, a critical real-world skill.

After understanding the problem, explicitly state the problem you are trying to solve in data science terms. For cart abandonment, you might frame it as both a prediction problem where you identify which users are likely to abandon their carts before checkout, and an analysis problem where you understand what factors correlate with abandonment. This framing shows you can translate business questions into analytical approaches.

Walk through what data you would want to collect or analyze. For cart abandonment, relevant data might include user demographics, browsing history, cart contents, product prices, time spent on checkout page, shipping costs, whether the user is logged in, and device type. Explain why each data source might be valuable. For instance, if shipping costs are high, users might abandon carts, suggesting that offering free shipping could reduce abandonment for high-value carts.

Discuss what features you would engineer from available data. Features are the specific variables your models would use. For cart abandonment, you might create features like total cart value, number of items in cart, time since items were added to cart, ratio of cart value to user’s average purchase, and whether the user has abandoned carts before. Explaining your feature engineering thinking demonstrates both domain intuition and technical understanding.

Propose multiple analytical approaches rather than jumping to one solution. For cart abandonment, you might suggest building a predictive model to identify high-risk users for targeted interventions, analyzing abandonment patterns to understand common triggers, running A/B tests on checkout flow changes, and segmenting users to understand if abandonment differs across customer groups. This multi-faceted approach shows you consider problems from different angles.

Discuss how you would validate whether your solutions work. For predictive models, you would track whether users identified as high-risk actually abandon more frequently. For checkout flow changes, you would run controlled experiments comparing abandonment rates between old and new flows. This emphasis on measurement demonstrates you understand the importance of validating that solutions deliver promised value.

Address potential challenges and limitations in your approach. For cart abandonment, you might note that you cannot easily measure why users abandon without survey data, that some abandonment is inevitable and might not be preventable, that interventions like discount offers could reduce immediate abandonment but hurt long-term profitability, and that you need to consider costs of interventions against the value of recovered sales. This balanced perspective shows mature thinking about real-world tradeoffs.

When presenting your approach, structure your thinking clearly. You might organize your response by first understanding the current state, then identifying root causes through data analysis, then building predictive models to identify at-risk sessions, then testing interventions, and finally measuring impact. This logical flow makes it easy for interviewers to follow your reasoning.

Practice case studies by working through business problems from companies you know. How would you use data science to improve Netflix recommendations? How would you help Airbnb optimize pricing? How would you detect fraudulent transactions for a bank? Working through these scenarios helps you develop frameworks for approaching open-ended problems that you can adapt to whatever case study appears in your interview.

Remember that interviewers care more about your thought process than your specific recommendations. They want to see that you ask clarifying questions, make reasonable assumptions when information is missing, consider multiple approaches, think about practical implementation, and evaluate solutions systematically. Even if your specific solution differs from what they might propose, demonstrating structured analytical thinking impresses interviewers and shows you can contribute meaningfully to their team.

Behavioral and Communication Questions

While technical knowledge is essential, data science roles also require strong communication, collaboration, and professional maturity. Behavioral questions assess these softer skills that often distinguish good data scientists from great ones.

The classic “tell me about yourself” opening appears in almost every interview. This question gives you the opportunity to concisely share your background, explain your path to data science, highlight relevant experiences, and convey enthusiasm for the role. Structure your response to cover your background briefly, your transition into data science if you career changed, one or two key accomplishments or projects, and why you are excited about this specific opportunity. Keep your answer to two or three minutes, hitting the highlights without exhaustive detail.

Interviewers frequently ask why you are interested in data science and this particular role. They want to understand your motivation and assess whether you have genuine interest versus just chasing salary or hype. Good answers mention specific aspects of data science that appeal to you, such as solving complex problems, continuous learning, business impact of your work, or intellectual challenge. Connect your interest to the specific company by mentioning what excites you about their domain, data challenges, or team. This specificity demonstrates genuine interest rather than generic enthusiasm.

Questions about your portfolio projects or previous work allow you to showcase your experience and thinking process. Prepare to discuss two or three projects in depth, ready to explain what problem you were solving, what data you worked with and how you obtained it, what exploratory analysis revealed, what models or approaches you tried and why, what challenges you encountered and how you overcame them, what results you achieved and how you measured them, and what you would do differently if you could redo the project. This level of detail demonstrates ownership and deep engagement with your work.

When discussing projects, emphasize your decision-making process. Interviewers want to understand how you choose between alternatives, what tradeoffs you consider, and how you balance competing priorities like accuracy versus interpretability or development time versus performance. Explaining that you chose logistic regression over random forest because the business needed to understand which factors drove predictions shows sophisticated thinking that purely technical discussions might miss.

Behavioral questions using the STAR format appear frequently in interviews. STAR stands for Situation, Task, Action, and Result. Interviewers might ask you to describe a time when you faced a difficult technical problem, worked on a team with conflicting opinions, had to learn something new quickly, or delivered results under tight deadlines. Structure your responses by setting the scene briefly, explaining what needed to be accomplished, describing the specific actions you took, and sharing the outcome. This framework keeps your answers concise and demonstrates that you can articulate your experiences clearly.

Questions about handling failure or mistakes test your self-awareness and growth mindset. Everyone makes mistakes, and interviewers want to see that you can acknowledge them, learn from them, and improve. When asked about a mistake you made, choose an example where you genuinely erred but took responsibility and fixed the problem. Explain what you learned from the experience and how it changed your approach to similar situations. This demonstrates maturity and continuous improvement rather than defensiveness.

Technical communication questions might ask you to explain a complex concept to a non-technical audience. Interviewers use these questions to assess your ability to communicate with business stakeholders who lack technical backgrounds. Practice explaining concepts like how machine learning works, what a confidence interval means, or why correlation does not imply causation using everyday language and relatable analogies. This skill is crucial because data scientists must regularly present findings to executives, product managers, and other non-technical colleagues.

When asked about working in teams, emphasize collaboration and communication. Describe how you share knowledge with teammates, help others when they are stuck, incorporate feedback on your work, and navigate disagreements professionally. Data science is increasingly a team sport, and companies want to hire people who work well with others. Specific examples of successful collaboration strengthen your answers.

Questions about your weaknesses or areas for improvement require honest self-assessment balanced with positive framing. Choose genuine areas where you are working to improve rather than fake weaknesses like “I work too hard” that ring hollow. You might mention that you are still developing your deep learning knowledge, are working to improve your data visualization skills, or are building experience with particular tools or techniques. The key is to couple the weakness with what you are doing to address it, demonstrating self-awareness and commitment to growth.

Prepare questions to ask your interviewers when they inevitably ask if you have questions for them. Thoughtful questions signal genuine interest and help you evaluate whether the role suits you. Ask about what a typical day looks like for data scientists on the team, what projects the team is currently working on, what tools and technologies they use, what success looks like in this role over the first six months, what opportunities exist for mentorship and learning, or what they enjoy most about working at the company. Avoid questions about salary or benefits early in the process, saving those for later stages.

Throughout behavioral questions, be authentic rather than trying to present a perfect image. Interviewers appreciate honesty and can usually tell when responses feel rehearsed or fake. Share genuine experiences and real thoughts about your data science journey. This authenticity helps build rapport and allows interviewers to envision you as part of their team.

Creating Your Interview Preparation Plan

Effective interview preparation requires a systematic approach that covers all the areas interviewers might test while managing your limited preparation time efficiently. Creating a structured plan helps you make steady progress without feeling overwhelmed by the breadth of data science.

Start by assessing your current strengths and weaknesses across the major interview domains. For each area including statistics and probability, machine learning fundamentals, Python programming, data manipulation with pandas, SQL querying, case study problem solving, and behavioral communication, honestly evaluate where you feel confident and where you need improvement. This assessment helps you allocate preparation time effectively, spending more time on weaker areas while maintaining strength in topics you already understand well.

Create a study schedule that spreads preparation over several weeks rather than cramming everything into the final days before an interview. Consistent daily practice over an extended period builds deeper understanding and retention than intensive last-minute studying. Dedicate different days or sessions to different topics to ensure comprehensive coverage. You might focus on statistics concepts on Mondays, machine learning on Wednesdays, coding practice on Fridays, and case studies on weekends. This variation also keeps preparation more engaging than drilling the same topic repeatedly.

For statistics and machine learning concepts, create a question bank covering topics that commonly appear in interviews. For each concept, write a clear explanation in your own words, create an example that illustrates the concept, note common misconceptions or tricky details, and identify when you would use this concept in practice. This active processing transforms passive reading into deep understanding. When you can explain concepts clearly to yourself in writing, you will be able to articulate them clearly to interviewers.

Practice coding problems regularly using resources like LeetCode, HackerRank, or Stratascratch that offer data science-specific questions. Start with easier problems to build confidence, then progressively tackle harder challenges. Time yourself solving problems to simulate interview pressure. After solving each problem, review other solutions to learn different approaches and identify more efficient or elegant implementations. This exposure to various problem-solving strategies expands your toolkit.

Conduct mock interviews with friends, colleagues, or through platforms that match you with practice partners. Mock interviews simulate the pressure and dynamic of real interviews while providing safe opportunities to make mistakes and improve. Ask your practice partner to give honest feedback about your explanations, communication style, and areas for improvement. Recording yourself during mock interviews, while perhaps uncomfortable, provides valuable insights into verbal tics, unclear explanations, or nervous habits you might not notice otherwise.

Prepare detailed answers to common behavioral questions by reflecting on your experiences and identifying compelling stories that demonstrate your skills and growth. Write out your responses to questions like “tell me about a challenging project,” “describe a time you worked on a team,” or “explain how you handle failure.” Practice delivering these answers aloud until they flow naturally without sounding overly rehearsed. This preparation prevents you from freezing when asked these questions in actual interviews.

Study the company and role thoroughly before each interview. Research what problems their data science team works on, what technologies they use, and what recent news or developments might be relevant. This research allows you to ask informed questions, connect your experience to their needs, and demonstrate genuine interest. Reviewing the job description carefully and preparing examples of how your skills match their requirements makes your interview responses more targeted and compelling.

Create a portfolio of projects you can discuss in depth during interviews. Choose two or three projects that showcase different skills and be prepared to discuss every aspect of them including the problem and motivation, data sources and characteristics, exploratory analysis findings, modeling approaches and why you chose them, results and how you validated them, and limitations and potential improvements. Anticipating questions about your projects and rehearsing explanations ensures you can discuss them confidently and thoroughly.

Maintain a learning journal where you record new concepts you study, problems you solve, and insights you gain. This journal serves multiple purposes including reinforcing learning through writing, creating a reference you can review before interviews, and tracking your progress to maintain motivation. When you feel discouraged, looking back at what you have learned reminds you of how much you have grown.

Manage your energy and stress during preparation to avoid burnout. While dedication is important, trying to study every waking hour leads to diminishing returns and exhaustion. Schedule regular breaks, maintain physical exercise, get adequate sleep, and preserve activities you enjoy. This balance keeps you fresh and mentally sharp, improving both your learning efficiency and interview performance.

As interview dates approach, shift from learning new material to reviewing and reinforcing what you already know. The final week before an interview should focus on reviewing your notes, practicing explanations of key concepts, solving a few practice problems to stay sharp, rehearsing answers to behavioral questions, and resting adequately so you are alert on interview day. This review phase consolidates your preparation rather than introducing new confusion with unfamiliar topics.

Remember that interview preparation is itself a learning process that improves with practice. Your first interview might feel rough as you discover what questions actually get asked and what communication style works best. Each subsequent interview builds on those lessons, making you progressively better at demonstrating your capabilities. Even interviews that do not result in offers provide valuable feedback that strengthens future performance.

Conclusion: Transforming Preparation into Success

Data science interviews can feel intimidating, especially when you are beginning your career and facing experienced interviewers who assess your knowledge across diverse technical domains. However, understanding what interviewers are looking for and preparing systematically transforms anxiety into confidence. The questions they ask, while varied in specific wording, draw from a core set of concepts in statistics, machine learning, programming, and problem-solving that you can master through focused study and practice.

Your preparation should balance technical knowledge with communication skills, recognizing that interviewers evaluate both what you know and how effectively you explain your thinking. Demonstrating solid grasp of fundamentals matters more than claiming expertise in every advanced technique. Articulating your reasoning clearly, asking thoughtful questions when uncertain, and connecting technical concepts to practical applications shows the kind of thinking that makes you valuable as a data scientist.

Statistics and probability questions test your theoretical foundation and ability to reason about uncertainty. Focus on understanding core concepts like probability distributions, hypothesis testing, confidence intervals, and the bias-variance tradeoff rather than memorizing formulas. Being able to explain these concepts in simple terms and discuss when they apply in practice demonstrates genuine understanding that rote memorization cannot achieve.

Machine learning fundamentals form the technical core of most data science roles. Prepare to discuss major supervised learning algorithms including linear regression, logistic regression, decision trees, random forests, and gradient boosting. Understand not just how these algorithms work but when to apply each one, what assumptions they make, and how to evaluate their performance. Connecting algorithms to real use cases shows you think about machine learning as a problem-solving tool rather than an academic exercise.

Coding and programming challenges assess your ability to manipulate data and implement solutions. Build fluency with Python fundamentals, pandas for data wrangling, and SQL for querying databases. Practice solving data manipulation problems until common operations become second nature. Remember that interviewers care more about your logical approach and problem-solving process than whether you remember every pandas method name.

Case studies and business problems evaluate your ability to translate ambiguous requirements into analytical approaches. Practice structured problem-solving where you ask clarifying questions, state assumptions explicitly, propose multiple potential approaches, and discuss how you would validate solutions. This structured thinking demonstrates the business acumen that distinguishes data scientists who deliver value from those who simply build models.

Behavioral questions assess your communication skills, collaboration abilities, and professional maturity. Prepare compelling stories about your experiences and projects using the STAR framework. Practice explaining technical concepts to non-technical audiences using clear language and helpful analogies. These soft skills often determine your success in team environments where technical knowledge alone is insufficient.

Creating a systematic preparation plan helps you cover all interview domains without feeling overwhelmed. Assess your strengths and weaknesses, then allocate preparation time accordingly. Mix studying concepts with solving practice problems and conducting mock interviews. This varied preparation builds both knowledge and the confidence that comes from repeated practice under interview-like conditions.

Remember that interview performance improves dramatically with experience. Your first few interviews provide learning opportunities as you discover what questions actually get asked and how to effectively communicate under pressure. Each interview makes you stronger for the next, gradually transforming you from a nervous candidate into someone who can confidently demonstrate their capabilities.

Approach interviews as conversations where you demonstrate your thinking rather than tests where you must produce perfect answers. Interviewers understand that you are still learning and do not expect omniscience. What they look for is solid understanding of fundamentals, logical problem-solving ability, intellectual honesty about what you do and do not know, and enthusiasm for continued growth. Demonstrating these qualities matters more than answering every question flawlessly.

When you walk into your data science interviews, you now have a clear understanding of what to expect and how to prepare. The statistics questions test your theoretical foundation. The machine learning questions assess your technical knowledge. The coding challenges evaluate your practical skills. The case studies probe your business thinking. The behavioral questions examine your communication and collaboration. Each dimension is preparable through systematic study and practice.

Your preparation transforms nerves into confidence as you internalize core concepts, practice articulating your thinking, and build comfort with the interview format. The investment you make in preparation pays dividends not just in landing your first role but throughout your data science career as the foundational knowledge you build now supports all your future learning and growth. Begin your preparation today, stay consistent in your effort, and trust that systematic preparation will carry you successfully through your interviews.