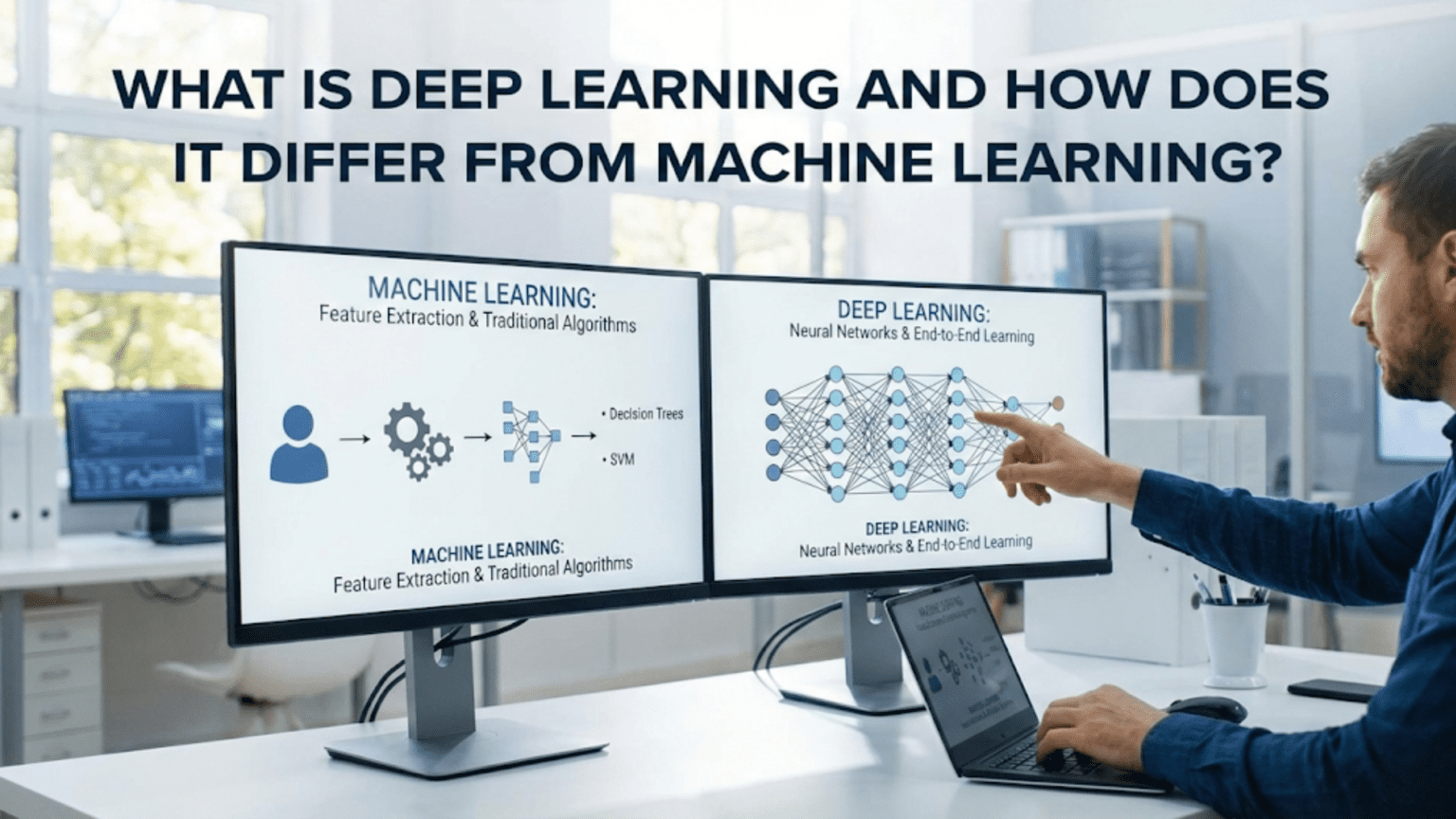

Deep learning is a specialized subset of machine learning that uses artificial neural networks with multiple layers (hence “deep”) to automatically learn hierarchical representations from data. While traditional machine learning requires manual feature engineering, deep learning automatically discovers the features needed for detection or classification from raw data. Deep learning excels at processing unstructured data like images, audio, and text, but requires large datasets and significant computational power, whereas traditional machine learning works well with smaller datasets and structured data.

Introduction: The AI Revolution Within AI

Imagine trying to teach a computer to recognize cats in photos. With traditional machine learning, you’d need to manually define features: “look for pointy ears, whiskers, four legs, fur texture.” You’d spend months engineering these features, testing them, refining them. Now imagine instead showing the computer millions of cat photos and saying “learn what makes a cat a cat on your own.” That’s the fundamental shift deep learning represents.

Deep learning has sparked an AI renaissance. It’s the technology behind virtual assistants understanding speech, smartphones recognizing faces, cars driving themselves, and chatbots conversing naturally. In barely a decade, deep learning transformed AI from a promising but limited technology into systems that rival and sometimes exceed human capabilities in specific domains.

Yet deep learning is often misunderstood, conflated with all of machine learning or portrayed as completely different. The reality is more nuanced: deep learning is a powerful subset of machine learning with unique characteristics, capabilities, and limitations. Understanding what deep learning is, how it differs from traditional machine learning, when to use it, and when traditional approaches work better is essential for anyone working with or evaluating AI systems.

This comprehensive guide clarifies the relationship between machine learning and deep learning. You’ll learn what deep learning actually is, how neural networks work at a high level, the key differences from traditional ML, why deep learning has become so powerful, its advantages and limitations, and practical guidance for choosing between approaches. With clear explanations and concrete examples, you’ll develop an accurate mental model of deep learning’s place in the broader AI landscape.

What is Deep Learning? The Core Concept

Deep learning is a subset of machine learning based on artificial neural networks with multiple layers, enabling automatic learning of hierarchical representations from data.

The Building Blocks

Artificial Neural Networks (ANNs):

- Computational models inspired by biological neurons

- Composed of interconnected nodes (neurons) organized in layers

- Each connection has a weight that adjusts during learning

- Neurons apply mathematical functions to inputs

“Deep” in Deep Learning:

- Refers to multiple hidden layers between input and output

- “Shallow” networks: 1-2 hidden layers

- “Deep” networks: Many hidden layers (10s to 100s)

- Each layer learns increasingly abstract representations

Example Architecture:

Input Layer → Hidden Layer 1 → Hidden Layer 2 → Hidden Layer 3 → Output Layer

(Raw pixels) → (Edges/textures) → (Parts/shapes) → (Objects) → (Classification)How Deep Learning Works: The High-Level View

Training Process:

- Forward Pass: Input data flows through network

- Each layer transforms the data

- Final layer produces prediction

- Compare: Prediction vs. actual answer

- Calculate error/loss

- Backward Pass: Error propagates backward

- Adjust weights to reduce error

- Uses algorithm called backpropagation

- Repeat: Process thousands/millions of examples

- Gradually improves predictions

- Network “learns” patterns in data

Key Insight: Unlike traditional ML, you don’t specify what features to look for. The network automatically learns useful representations at each layer.

The Hierarchical Learning

Image Recognition Example:

Layer 1 (Low-level features):

- Detects edges, lines, simple shapes

- Horizontal, vertical, diagonal lines

- Basic textures

Layer 2 (Mid-level features):

- Combines edges into parts

- Corners, curves, simple patterns

- Basic object parts

Layer 3 (High-level features):

- Complex parts and shapes

- Eyes, noses, ears, wheels

- Recognizable object components

Output Layer:

- Combines high-level features

- Final classification: “cat,” “dog,” “car”

Automatic Discovery: Network learns this hierarchy automatically from labeled images, without being told what features to look for.

Traditional Machine Learning: The Comparison Point

To understand deep learning, we need to understand traditional machine learning.

Traditional ML Workflow

Process:

- Collect Data: Gather labeled examples

- Feature Engineering (Manual, Critical):

- Human experts design features

- Extract relevant characteristics

- Transform raw data into numerical features

- Most time-consuming step

- Select Algorithm: Choose learning algorithm

- Decision trees, SVM, Random Forest, etc.

- Relatively simple compared to deep networks

- Train Model: Learn from features and labels

- Evaluate: Test performance

Example: Spam Detection

Feature Engineering:

Raw email → Extract features:

- Word frequencies (count "viagra", "winner", etc.)

- Email length

- Number of exclamation marks

- Sender domain

- Presence of links

- Time sent

- etc.

Human expert decides which features matterModel: Use extracted features with Random Forest or SVM

The Feature Engineering Bottleneck

Challenge: Designing good features requires:

- Domain expertise

- Trial and error

- Time and effort

- Different features for each problem

Example: Medical Diagnosis

Traditional ML:

- Radiologist identifies relevant image features

- Tumor size, shape, border characteristics

- Texture patterns, density measurements

- Manually extract these from images

- Train classifier on extracted features

Limitation: Features limited by human knowledge and perception

Deep Learning vs. Machine Learning: The Key Differences

Let’s examine the fundamental differences between these approaches.

Difference 1: Feature Engineering

Traditional ML:

- Manual feature engineering required

- Humans design and extract features

- Domain expertise crucial

- Different features for each problem type

Deep Learning:

- Automatic feature learning (representation learning)

- Network discovers useful features

- Same architecture applicable to different problems

- Features emerge during training

Example Impact:

Traditional ML:

- Weeks designing features

- Expert knowledge required

- Limited by human insight

Deep Learning:

- Network learns features automatically

- No feature engineering needed

- Can discover non-obvious patternsDifference 2: Data Requirements

Traditional ML:

- Works with small to medium datasets

- Can learn from hundreds to thousands of examples

- Feature engineering compensates for limited data

- Performance plateaus with more data

Deep Learning:

- Requires large datasets

- Typically needs thousands to millions of examples

- More data → better performance (often)

- Data-hungry but scales well

Performance vs. Data:

Traditional ML: _____/‾‾‾‾‾

____/

(plateaus early)

Deep Learning: ____

__/

__/

_/

(keeps improving)

↑

Amount of DataDifference 3: Computational Requirements

Traditional ML:

- CPU-friendly: Trains on standard computers

- Minutes to hours on laptop

- Moderate computational requirements

- Deployable on resource-constrained devices

Deep Learning:

- GPU-intensive: Requires specialized hardware

- Hours to weeks on powerful GPUs

- High computational requirements

- Often needs server/cloud deployment

Example:

Random Forest (1000 trees):

- Training: 10 minutes on laptop CPU

- Inference: Milliseconds

Deep CNN (ResNet-50):

- Training: 24 hours on high-end GPU

- Inference: Faster on GPU, slower on CPUDifference 4: Interpretability

Traditional ML:

- More interpretable (especially simpler models)

- Decision trees: can see decision rules

- Linear models: understand feature weights

- Feature importance clear

Deep Learning:

- “Black box” nature

- Millions of parameters

- Difficult to understand why specific decision made

- Active research on interpretability

Consequence:

Traditional ML: Good for regulated industries (finance, healthcare) where explanations required

Deep Learning: Acceptable for applications prioritizing performance over interpretabilityDifference 5: Best Use Cases

Traditional ML:

- Structured/tabular data (spreadsheets, databases)

- Small to medium datasets

- Need interpretability

- Limited computational resources

- Well-defined features available

Examples:

- Customer churn prediction

- Fraud detection (when explanations needed)

- Credit scoring

- Sales forecasting

- Medical diagnosis with structured clinical data

Deep Learning:

- Unstructured data (images, audio, text, video)

- Large datasets available

- Complex pattern recognition

- Performance critical, interpretability less so

- Abundant computational resources

Examples:

- Image recognition

- Speech recognition

- Natural language processing

- Video analysis

- Autonomous driving

- Game playing

Difference 6: Development and Deployment

Traditional ML:

- Faster development: Simpler to prototype

- Easier debugging

- Less hyperparameter tuning

- Lighter deployment (smaller models)

- Easier to maintain

Deep Learning:

- Longer development: Complex architectures

- Challenging debugging

- Extensive hyperparameter tuning

- Heavy deployment requirements

- More complex maintenance

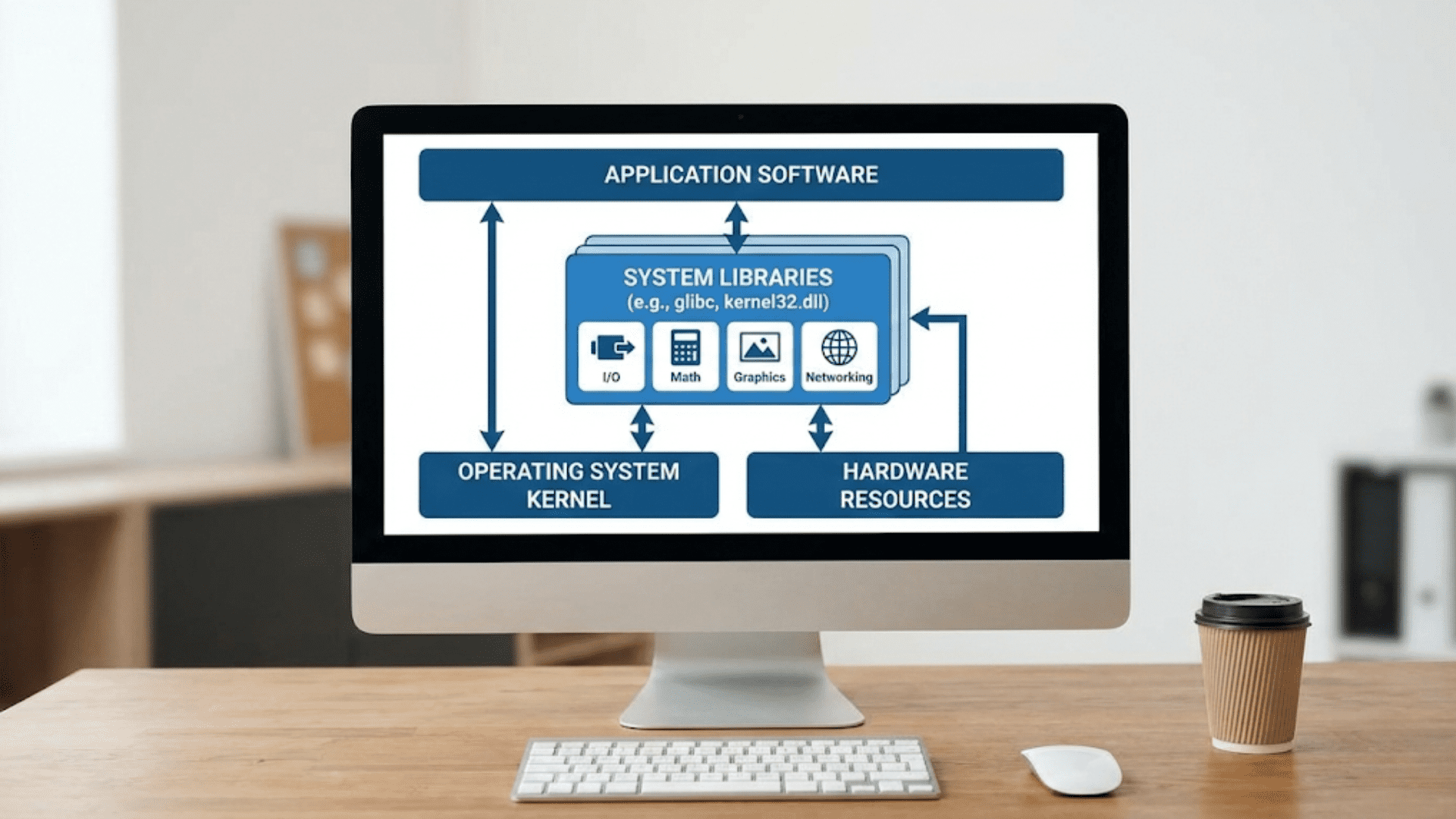

The Relationship: Hierarchy of AI Technologies

Understanding how deep learning fits in the broader AI landscape:

┌───────────────────────────────────────────── ┐

│ Artificial Intelligence │

│ (Machines performing tasks requiring │

│ human intelligence) │

│ │

│ ┌──────────────────────────────────────── ┐ │

│ │ Machine Learning │ │

│ │ (Learning from data without explicit │ │

│ │ programming) │ │

│ │ │ │

│ │ ┌──────────────────────────────────┐ │ │

│ │ │ Deep Learning │ │ │

│ │ │ (Neural networks with multiple │ │ │

│ │ │ layers) │ │ │

│ │ └──────────────────────────────────┘ │ │

│ │ │ │

│ │ Traditional ML (Decision trees, SVM, │ │

│ │ Random Forest, etc.) │ │

│ └──────────────────────────────────────── ┘ │

│ │

│ Rule-based AI (Expert systems, etc.) │

└───────────────────────────────────────────── ┘Key Points:

- Deep learning ⊂ Machine learning ⊂ Artificial Intelligence

- Deep learning is one approach to machine learning

- Traditional ML includes many other algorithms

- All share the goal: learn from data

Why Deep Learning Emerged: The Perfect Storm

Deep learning isn’t new—neural networks date to the 1950s. Why the recent explosion?

Factor 1: Big Data

Historical Constraint: Insufficient training data

Modern Reality:

- Internet generates massive data

- Millions of labeled images (ImageNet)

- Billions of text documents

- Petabytes of video

- Social media interactions

Impact: Deep learning finally has data to learn from

Factor 2: Computational Power

Historical Constraint: Computers too slow

Modern Reality:

- GPUs (Graphics Processing Units) accelerate training 10-100x

- Specialized AI chips (TPUs)

- Cloud computing provides accessible power

- Distributed training across multiple machines

Impact: What took months now takes hours

Factor 3: Algorithmic Innovations

Breakthroughs:

- Better activation functions (ReLU vs. sigmoid)

- Improved initialization methods

- Batch normalization

- Dropout for regularization

- Residual connections for very deep networks

- Attention mechanisms

Impact: Networks train more effectively and achieve better performance

Factor 4: Open Source Frameworks

Accessibility:

- TensorFlow (Google)

- PyTorch (Facebook)

- Keras (high-level API)

- Pre-trained models available

Impact: Democratized deep learning development

The Convergence

These factors converged around 2012:

2012: ImageNet Breakthrough

- Deep learning (AlexNet) crushed traditional ML

- Error rate: 15.3% (DL) vs. 26.2% (traditional)

- Sparked deep learning revolution

Since Then:

- Rapid improvements in performance

- Expansion to new domains

- Integration into products

Deep Learning Architectures: Different Types for Different Tasks

Deep learning isn’t monolithic—different architectures for different problems.

Convolutional Neural Networks (CNNs)

Designed For: Images and spatial data

Key Idea:

- Convolutional layers detect local patterns

- Preserve spatial relationships

- Translation invariant (recognize cat anywhere in image)

Applications:

- Image classification

- Object detection

- Facial recognition

- Medical image analysis

- Self-driving cars (vision)

Example:

Input Image → Convolution layers → Pooling layers → Dense layers → Classification

(Raw pixels) → (Detect features) → (Downsample) → (Combine) → (Cat/Dog)Recurrent Neural Networks (RNNs)

Designed For: Sequential data (time series, text)

Key Idea:

- Maintain internal state/memory

- Process sequences of variable length

- Output depends on current input and past context

Variants:

- LSTM (Long Short-Term Memory): Better at long sequences

- GRU (Gated Recurrent Unit): Simpler variant

Applications:

- Language modeling

- Machine translation

- Speech recognition

- Time series forecasting

- Sentiment analysis

Transformers

Designed For: Sequence-to-sequence tasks, especially language

Key Idea:

- Attention mechanism: focus on relevant parts of input

- Parallel processing (faster than RNNs)

- Captures long-range dependencies

Applications:

- Modern NLP (GPT, BERT)

- Machine translation

- Text generation

- Question answering

- Image generation (Vision Transformers)

Impact: Revolutionized NLP, enabling models like ChatGPT

Generative Adversarial Networks (GANs)

Designed For: Generating new data

Key Idea:

- Two networks compete

- Generator: Creates fake data

- Discriminator: Tries to detect fakes

- Competition improves both

Applications:

- Image generation (faces, art)

- Style transfer

- Data augmentation

- Super-resolution

- Video generation

Autoencoders

Designed For: Dimensionality reduction, denoising, anomaly detection

Key Idea:

- Compress input to lower dimensions

- Reconstruct original from compressed version

- Learns efficient representations

Applications:

- Anomaly detection

- Denoising images

- Dimensionality reduction

- Recommendation systems

- Generative modeling (VAEs)

Practical Example: Image Classification

Let’s compare approaches for classifying images of cats and dogs.

Traditional ML Approach

Process:

- Feature Engineering:

# Extract handcrafted features

features = []

for image in images:

# Color histogram

color_hist = compute_color_histogram(image)

# Texture features

texture = compute_texture_features(image)

# Edge detection

edges = detect_edges(image)

# Shape descriptors

shapes = compute_shape_descriptors(image)

# Combine all features

features.append([color_hist, texture, edges, shapes])- Train Classifier:

from sklearn.ensemble import RandomForestClassifier

model = RandomForestClassifier(n_estimators=100)

model.fit(features, labels)Characteristics:

- Manual feature design

- Domain expertise required

- Works with moderate data (1000s of images)

- Fast training

- Limited by feature quality

Performance: ~80-85% accuracy (typical)

Deep Learning Approach

Process:

- Load Data (No feature engineering!):

# Raw images directly

X_train = load_images() # Just pixel values

y_train = load_labels()- Define Network:

from tensorflow.keras import Sequential, layers

model = Sequential([

layers.Conv2D(32, (3,3), activation='relu', input_shape=(224, 224, 3)),

layers.MaxPooling2D((2,2)),

layers.Conv2D(64, (3,3), activation='relu'),

layers.MaxPooling2D((2,2)),

layers.Conv2D(64, (3,3), activation='relu'),

layers.Flatten(),

layers.Dense(64, activation='relu'),

layers.Dense(1, activation='sigmoid')

])- Train:

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

model.fit(X_train, y_train, epochs=50, validation_split=0.2)Characteristics:

- No feature engineering

- Network learns features automatically

- Needs large data (10,000s+ images)

- Slow training (GPU required)

- Learns complex patterns

Performance: ~95-98% accuracy (with sufficient data)

Comparison Results

| Aspect | Traditional ML | Deep Learning |

|---|---|---|

| Feature Engineering | Weeks of work | None required |

| Data Needed | 2,000 images | 20,000 images |

| Training Time | 10 minutes (CPU) | 4 hours (GPU) |

| Development Time | 2-3 weeks | 3-5 days |

| Accuracy | 82% | 96% |

| Interpretability | High (can see features) | Low (black box) |

| Deployment | Light (small model) | Heavy (large model) |

When Traditional ML Wins:

- Only 2,000 images available

- Need fast training/inference

- Must explain decisions

- Limited GPU access

When Deep Learning Wins:

- Have 20,000+ images

- Performance critical

- GPU available

- Don’t need interpretability

Advantages of Deep Learning

Advantage 1: Automatic Feature Learning

Benefit: No feature engineering required

- Saves weeks/months of development

- Discovers non-obvious patterns

- Adapts features to specific task

Example: ImageNet competition

- Traditional ML: Hand-designed features (SIFT, HOG)

- Deep Learning: Learned features automatically

- Result: Deep learning dominates

Advantage 2: Scalability with Data

Benefit: Performance improves with more data

Traditional ML: Plateaus after moderate data amount Deep Learning: Continues improving with massive data

Real-World:

- Google Translate: Billions of sentences

- Face recognition: Millions of faces

- Speech recognition: Thousands of hours

Advantage 3: Transfer Learning

Benefit: Pre-trained models reusable

Process:

- Train large network on massive dataset (ImageNet)

- Use learned features for different task

- Fine-tune on small dataset for new task

Impact:

- Achieve good results with limited data

- Reduce training time dramatically

- Democratizes deep learning

Example:

Pre-trained on ImageNet (1M images, 1000 classes)

→ Fine-tune on medical images (1K images, 5 diseases)

→ Achieve 90%+ accuracy despite small datasetAdvantage 4: Multi-Task Learning

Benefit: Single network handles multiple related tasks

Example: Object detection network

- Identifies objects

- Localizes position

- Estimates pose

- All from one network

Advantage 5: End-to-End Learning

Benefit: Learn entire pipeline jointly

Traditional: Multiple stages, each optimized separately Deep Learning: One network, optimized together

Example: Speech Recognition

Traditional:

Audio → Feature extraction → Phoneme detection → Word formation → Language model

(Each stage separate)Deep Learning:

Audio → Neural Network → Text

(End-to-end, optimized together)Result: Better performance, simpler pipeline

Limitations of Deep Learning

Limitation 1: Data Hunger

Challenge: Requires large labeled datasets

Problem:

- Labeling expensive (human time)

- Some domains lack data (rare diseases)

- Privacy concerns (medical, financial)

Mitigations:

- Transfer learning

- Data augmentation

- Semi-supervised learning

- Synthetic data generation

Limitation 2: Computational Cost

Challenge: Expensive training and inference

Training:

- Large models: Weeks on multiple GPUs

- Cost: Thousands to millions of dollars

- Environmental impact (energy consumption)

Inference:

- Real-time applications challenging

- Edge deployment difficult

- Latency concerns

Limitation 3: Black Box Nature

Challenge: Difficult to interpret decisions

Problems:

- Regulatory compliance (finance, healthcare)

- Debugging failures

- Building trust

- Bias detection

Example:

- Traditional ML: “Rejected loan because debt-to-income ratio too high”

- Deep Learning: “Neural network score below threshold” (Why? Hard to say)

Limitation 4: Adversarial Vulnerability

Challenge: Easily fooled by specially crafted inputs

Example:

- Add imperceptible noise to image

- Human sees same image

- Network completely misclassifies

Concern: Security-critical applications

Limitation 5: Poor Generalization to Different Distributions

Challenge: Performance drops when data distribution changes

Example:

- Train on sunny daytime images

- Test on rainy nighttime images

- Performance degrades significantly

Traditional ML: Often more robust to distribution shift

Limitation 6: Hyperparameter Sensitivity

Challenge: Many hyperparameters to tune

Examples:

- Learning rate

- Batch size

- Network architecture

- Regularization strength

- Optimizer choice

Result: Extensive experimentation needed

When to Use Deep Learning vs. Traditional ML

Decision framework for choosing approach:

Use Deep Learning When:

- Unstructured Data:

- Images, video, audio, text

- Complex patterns

- Large Datasets Available:

- 10,000s to millions of examples

- Or can leverage pre-trained models

- Performance Critical:

- Need state-of-the-art accuracy

- Small improvements valuable

- Computational Resources Available:

- Access to GPUs

- Cloud computing budget

- Interpretability Not Required:

- Black box acceptable

- Performance over explanations

Best Applications:

- Computer vision

- Speech recognition

- Natural language processing

- Recommendation systems (with large data)

- Game playing

Use Traditional ML When:

- Structured/Tabular Data:

- Spreadsheets, databases

- Well-defined features

- Small to Medium Datasets:

- Hundreds to thousands of examples

- Limited labeled data

- Need Interpretability:

- Regulated industries

- Must explain decisions

- Limited Computational Resources:

- CPU-only environment

- Edge devices

- Fast inference required

- Fast Development Needed:

- Quick prototypes

- Limited time/resources

Best Applications:

- Fraud detection (with explanations)

- Customer churn prediction

- Credit scoring

- Medical diagnosis (structured clinical data)

- Sales forecasting

- A/B test analysis

Hybrid Approaches

Best of Both Worlds:

- Deep learning for feature extraction

- Traditional ML for final prediction

Example:

Images → Pre-trained CNN → Feature vectors → Random Forest → Prediction

(Deep features) → (Interpretable classifier)Benefits:

- Powerful features from deep learning

- Interpretability from traditional ML

- Can work with moderate data

The Future: Converging and Evolving

Trends Blurring the Lines

AutoML: Automated machine learning

- Automatically select algorithms

- Tune hyperparameters

- Works for both traditional ML and deep learning

Neural Architecture Search:

- Deep learning for deep learning

- Automatically design network architectures

Explainable AI:

- Making deep learning more interpretable

- Reducing black box concern

Emerging Paradigms

Few-Shot Learning:

- Learn from very few examples

- Reduces data requirements

Self-Supervised Learning:

- Learn from unlabeled data

- Reduces labeling needs

Neural-Symbolic Integration:

- Combine neural networks with symbolic reasoning

- Best of both worlds

Efficient Deep Learning:

- Smaller, faster models

- Mobile and edge deployment

- Reduce computational cost

Comparison Table: Deep Learning vs. Traditional ML

| Aspect | Traditional ML | Deep Learning |

|---|---|---|

| Feature Engineering | Manual, expert-driven | Automatic, learned |

| Data Requirements | Small-medium (100s-1000s) | Large (10,000s-millions) |

| Training Time | Minutes-hours (CPU) | Hours-weeks (GPU) |

| Hardware | CPU sufficient | GPU/TPU needed |

| Interpretability | High (simpler models) | Low (black box) |

| Best For | Structured/tabular data | Unstructured (image/text/audio) |

| Computational Cost | Low | High |

| Development Speed | Fast prototyping | Slower development |

| Performance Ceiling | Good | Excellent (with data) |

| Scalability | Plateaus with data | Improves with data |

| Deployment | Lightweight | Heavy models |

| Examples | Decision trees, SVM, Random Forest | CNN, RNN, Transformers |

| When to Use | Small data, need interpretability | Large data, unstructured, performance critical |

Conclusion: Complementary Tools in the AI Toolkit

Deep learning hasn’t replaced traditional machine learning—it’s expanded the toolkit. Both approaches have distinct strengths and ideal use cases. Understanding the differences enables you to choose the right tool for each problem.

Deep learning excels at:

- Automatic feature learning from raw, unstructured data

- Handling massive datasets with millions of examples

- Achieving state-of-the-art performance on perceptual tasks

- Learning hierarchical representations of increasing abstraction

Traditional ML excels at:

- Working with small to medium structured datasets

- Providing interpretable, explainable models

- Fast training and deployment on standard hardware

- Problems where domain-specific features are well understood

The key insights:

Deep learning is a subset of machine learning, not a replacement. It’s one approach among many, particularly powerful for specific types of problems.

The automatic feature learning of deep learning is revolutionary, but comes with significant data and computational requirements.

Neither approach is universally superior—the best choice depends on your specific problem, data, resources, and constraints.

Modern practice often combines both: deep learning for powerful feature extraction, traditional ML for interpretability or when data is limited.

As you work with machine learning, consider both approaches. Don’t default to deep learning because it’s trendy, nor avoid it when it’s actually the right tool. Evaluate based on your data characteristics, computational resources, interpretability needs, and performance requirements.

The future of AI isn’t deep learning OR traditional machine learning—it’s knowing when to use each, how to combine them, and leveraging both to solve real problems effectively. Master both, and you’ll be equipped to tackle the full spectrum of machine learning challenges.