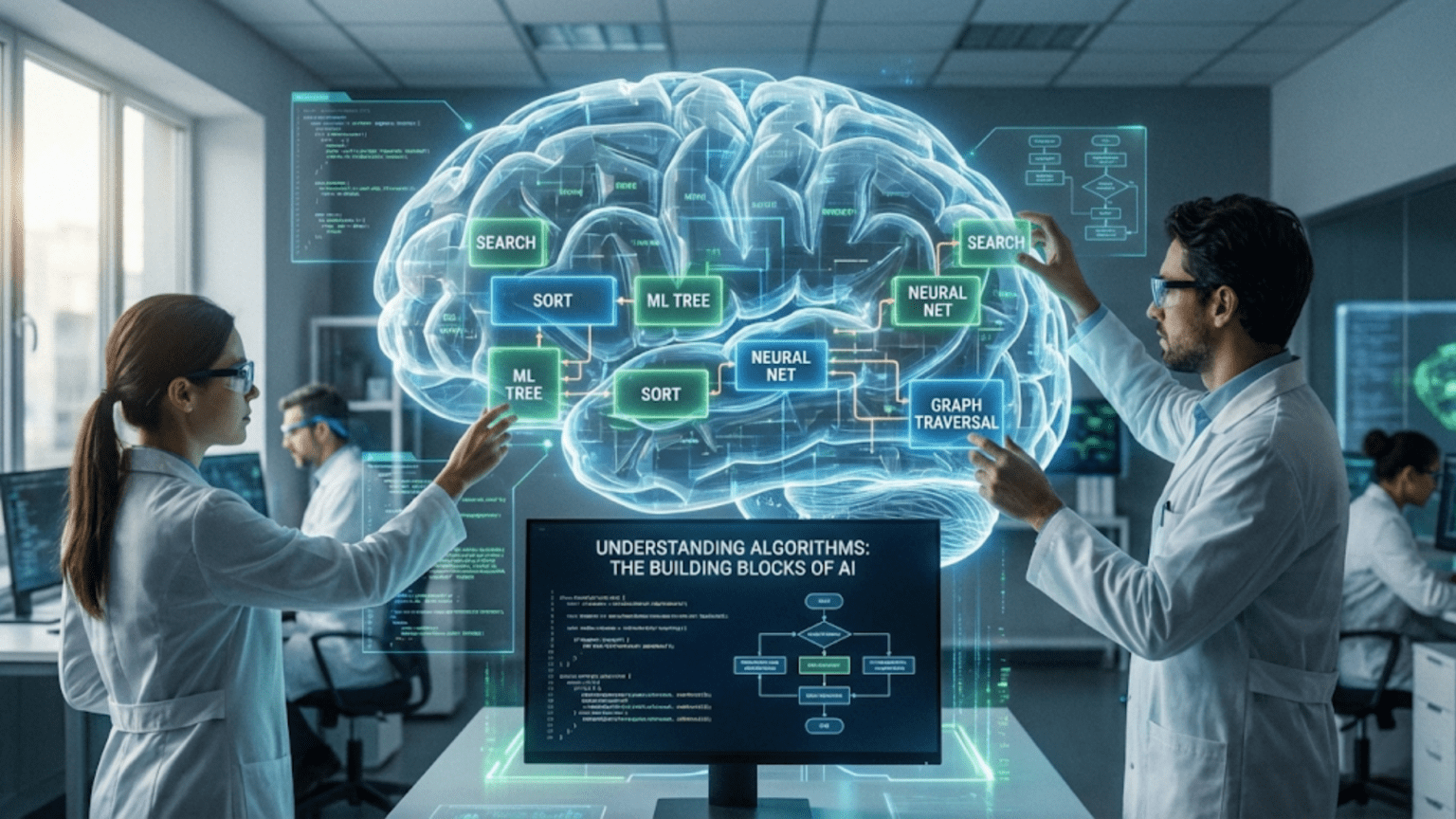

When people talk about artificial intelligence, they often mention algorithms as if everyone understands what they are. News articles describe “new algorithms” that improve AI performance. Tutorials tell you to “choose the right algorithm” for your problem. Researchers publish papers about “novel algorithmic approaches.” But if you’re new to AI, you might be wondering: what exactly is an algorithm, and why do they matter so much?

Algorithms are genuinely the building blocks of all artificial intelligence. They’re the step-by-step procedures that tell computers how to process data, make decisions, and learn from experience. Without algorithms, AI wouldn’t exist—there would be no way to translate human ideas about intelligence into instructions that computers can execute.

Understanding algorithms doesn’t require advanced mathematics or years of programming experience. At their core, algorithms are simply recipes—precise sets of instructions for accomplishing specific tasks. In this comprehensive guide, we’ll demystify algorithms, exploring what they are, how they work, why they matter for AI, and what different types of algorithms exist. By the end, you’ll have a solid conceptual foundation that will help you understand every other aspect of artificial intelligence you encounter.

What Is an Algorithm? Starting with the Basics

Let’s begin with a clear definition: an algorithm is a finite sequence of well-defined instructions for solving a problem or accomplishing a task. These instructions must be precise, unambiguous, and executable—each step must be clear enough that it can be performed without confusion.

Algorithms in Everyday Life

You encounter algorithms constantly in daily life, even if you don’t call them that. A recipe for baking cookies is an algorithm:

- Preheat oven to 350°F

- Mix butter and sugar until creamy

- Add eggs and vanilla, mix well

- Combine flour, baking soda, and salt in separate bowl

- Gradually add dry ingredients to wet mixture

- Stir in chocolate chips

- Drop spoonfuls on baking sheet

- Bake for 10-12 minutes

- Cool before serving

This recipe has all the characteristics of an algorithm: it’s a finite sequence of steps, each step is clearly defined, following the steps produces a specific result (cookies), and the same steps produce consistent results when repeated.

Assembly instructions for furniture are algorithms. Driving directions from your home to a destination are algorithms. Even the morning routine you follow—shower, dress, eat breakfast, brush teeth, leave for work—is an algorithm, though perhaps a less formal one.

What Makes Computer Algorithms Different

Computer algorithms share the same basic concept but have additional requirements. They must be:

Precise: No room for interpretation. “Mix until creamy” is fine for humans but too vague for computers. Computer algorithms specify exact conditions and operations.

Unambiguous: Each step has only one possible interpretation. Humans can handle ambiguity and use context; computers cannot.

Executable: Every instruction must be something the computer can actually do. You can’t tell a computer to “stir” without defining what stirring means in terms of operations the computer understands.

Finite: Algorithms must eventually terminate. They can’t run forever (though they might run for a very long time).

Effective: Each step must actually be accomplishable. You can’t include impossible operations.

A Simple Computer Algorithm Example

Here’s a simple algorithm for finding the largest number in a list:

1. Start with the first number in the list

2. Call this number "largest_so_far"

3. Look at the next number in the list

4. If this number is larger than "largest_so_far":

- Update "largest_so_far" to be this new number

5. If there are more numbers in the list:

- Go back to step 3

6. Otherwise:

- The answer is "largest_so_far"

This algorithm is precise, unambiguous, executable, finite (it examines each number once), and effective (every step can be performed). It solves a specific problem: finding the maximum value in a list.
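The numbered steps above translate almost line for line into code. Here is a minimal Python sketch; the function name `find_largest` and the choice to reject an empty list are assumptions made for illustration, not part of the original steps.

```python
def find_largest(numbers):
    """Return the largest value in a non-empty list by scanning it once."""
    if not numbers:
        # Assumption: the steps above presume at least one number exists.
        raise ValueError("the list must contain at least one number")

    largest_so_far = numbers[0]        # steps 1 and 2
    for number in numbers[1:]:         # steps 3 and 5: visit each remaining number
        if number > largest_so_far:    # step 4: compare and update if larger
            largest_so_far = number
    return largest_so_far              # step 6: nothing left to examine


print(find_largest([3, 17, 8, 42, 5]))  # 42
```

In everyday Python you would normally call the built-in `max`, but writing the loop out makes each step of the algorithm visible.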

Why Algorithms Matter for Artificial Intelligence

Now that you understand what algorithms are generally, let’s explore why they’re particularly crucial for AI.

Algorithms Are How We Implement Intelligence

Artificial intelligence requires translating intelligent behavior into precise instructions that computers can execute. When you want a computer to recognize faces, translate languages, or play chess, you need algorithms that specify exactly how to process input data and produce intelligent output.

Humans perform intelligent tasks intuitively, often without being able to explain exactly how we do them. You recognize your friend’s face instantly, but could you write down step-by-step instructions for face recognition that someone else could follow? This is the challenge AI researchers face: taking intelligent behavior and decomposing it into algorithmic steps.

Different Algorithms Produce Different Results

The choice of algorithm profoundly affects AI system performance. Two different algorithms solving the same problem might produce:

- Different accuracy levels

- Different computational requirements

- Different training times

- Different abilities to handle edge cases

- Different requirements for training data

Understanding algorithms helps you choose appropriate approaches for specific problems and understand why certain AI systems work better than others.

Algorithms Embody Assumptions and Approaches

Every algorithm embodies specific assumptions about the problem and specific approaches to solving it. Understanding these assumptions helps you:

- Recognize when an algorithm is appropriate for your problem

- Understand why an algorithm succeeds or fails in specific situations

- Debug problems when algorithms don’t work as expected

- Make informed decisions about which algorithms to use

Algorithms Are Testable and Improvable

Because algorithms are precisely specified, they can be:

- Tested systematically: Apply the algorithm to test cases and verify results

- Analyzed mathematically: Determine theoretical properties and guarantees

- Compared objectively: Measure performance against other algorithms

- Improved iteratively: Modify specific steps to enhance performance

This scientific approach to developing AI relies on algorithms being explicit and testable.

Traditional Algorithms vs. Learning Algorithms

A crucial distinction in AI is between traditional algorithms and learning algorithms. This difference is fundamental to understanding modern AI.

Traditional Algorithms: Explicit Instructions

Traditional algorithms provide explicit instructions for every situation. They’re programmed with complete logic for how to handle all possible inputs.

Example: Calculating Average

1. Add all numbers together to get sum

2. Count how many numbers there are

3. Divide sum by count

4. Return result

This algorithm explicitly specifies exactly what to do. There’s no learning involved: the steps are fixed and will always be the same regardless of what numbers you process.
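As a rough illustration, the four averaging steps could be written in Python like this. The function name `average` and the empty-list check are assumptions added so the sketch is safe to run.

```python
def average(numbers):
    """Compute the arithmetic mean using fixed, explicit steps."""
    if not numbers:
        # Assumption: dividing by a count of zero is treated as an error.
        raise ValueError("cannot average an empty list")

    total = sum(numbers)   # step 1: add all numbers together
    count = len(numbers)   # step 2: count how many numbers there are
    return total / count   # steps 3 and 4: divide and return the result


print(average([2, 4, 6, 8]))  # 5.0
```

Nothing in this function changes based on the data it has seen before, which is what makes it a traditional algorithm rather than a learning one.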

Characteristics:

- Logic is explicitly programmed

- Behavior is completely predictable

- Same input always produces same output

- No adaptation to data

- Transparent and easily understood

Learning Algorithms: Discovering Patterns

Learning algorithms, in contrast, don’t explicitly specify how to solve the problem. Instead, they specify how to learn from data to solve the problem.

Example: Learning to Recognize Spam

Instead of explicitly programming rules (if email contains “free money” and comes from unknown sender, it’s probably spam), a learning algorithm follows a process like this:

1. Examine many examples of spam and legitimate emails

2. Identify patterns that distinguish spam from legitimate email

3. Create a model encoding these patterns

4. Use this model to classify new emails

The algorithm specifies the learning process, not the specific classification rules. The actual rules emerge from data.
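To make the contrast concrete, here is a deliberately tiny sketch of a learner that counts words per class. This is a toy stand-in, not how production spam filters work; the function names, the four training emails, and the label "ham" (a common nickname for legitimate email) are all made up for illustration.

```python
from collections import Counter


def train(examples):
    """Steps 1-3: count how often each word appears in each class."""
    counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts


def classify(model, text):
    """Step 4: label new text by the class whose words it matches more."""
    words = text.lower().split()
    spam_score = sum(model["spam"][word] for word in words)
    ham_score = sum(model["ham"][word] for word in words)
    return "spam" if spam_score > ham_score else "ham"


# Hypothetical training data; a real system would need far more examples.
training_emails = [
    ("free money claim your prize now", "spam"),
    ("win free cash now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]

model = train(training_emails)
print(classify(model, "claim your free cash"))    # spam
print(classify(model, "team meeting on monday"))  # ham
```

Notice that no line of code mentions "free" or "monday" as a rule. Those associations exist only in the counts learned from the examples, so different training emails would produce a different classifier.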

Characteristics:

- Logic is learned from data

- Behavior depends on training data

- Can adapt to new patterns

- May produce different results with different training data

- Often less transparent than traditional algorithms

Why Learning Algorithms Matter for AI

Many intelligent tasks are too complex to program explicitly. You can’t write explicit rules for:

- Recognizing faces across different angles, lighting, and ages

- Understanding natural language with all its ambiguity and context-dependence

- Diagnosing diseases from medical images

- Driving cars in complex, unpredictable traffic

Learning algorithms solve these problems by discovering patterns in data rather than requiring humans to specify every rule. This is why machine learning has been so successful—it can handle complexity that exceeds our ability to program explicitly.

Categories of Algorithms in AI

AI uses many different types of algorithms, each suited to different types of problems. Let’s explore the major categories.

Search Algorithms

Search algorithms explore possible solutions to find optimal or satisfactory answers. They’re fundamental to AI and solve problems like pathfinding, game playing, and planning.

How They Work:

Search algorithms systematically explore possibilities:

- Start with initial state

- Generate possible next states

- Evaluate which states are promising

- Choose best options to explore further

- Continue until finding a solution or exhausting possibilities

Examples:

- Breadth-First Search: Explores all possibilities at one level before moving deeper

- Depth-First Search: Explores one path completely before trying alternatives

- A* Search: Uses heuristics to guide search toward promising solutions

- Minimax: Searches game trees to find optimal moves
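As one concrete case, breadth-first search can be sketched in a few lines of Python. The graph `road_map` below is a made-up example, and the function name is an assumption; the technique itself is standard breadth-first search over an adjacency list.

```python
from collections import deque


def breadth_first_search(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    frontier = deque([[start]])   # queue of paths still to be explored
    visited = {start}             # states already generated
    while frontier:
        path = frontier.popleft()             # oldest path first: level by level
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):  # generate possible next states
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append(path + [neighbor])
    return None                               # possibilities exhausted


# Hypothetical map: each key lists the places reachable in one step.
road_map = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
}

print(breadth_first_search(road_map, "A", "F"))  # ['A', 'B', 'D', 'F']
```

Swapping the queue for a stack (taking the newest path instead of the oldest) would turn this into a depth-first search, which is one way to see how closely related these strategies are.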

Applications:

- GPS navigation finding optimal routes

- Game-playing AI choosing moves

- Robot path planning

- Scheduling and optimization

Strengths:

- Can find optimal solutions

- Work when explicit rules are available

- Mathematically analyzable

Limitations:

- Can be computationally expensive

- May struggle with very large search spaces

- Require well-defined problem formulations

Optimization Algorithms

Optimization algorithms find the best solution according to specific criteria. They’re crucial for training machine learning models.

How They Work:

Optimization algorithms:

- Define an objective function measuring solution quality

- Start with an initial solution

- Iteratively improve the solution

- Make changes that improve the objective function

- Continue until reaching optimal or satisfactory solution

Examples:

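- Gradient Descent: Repeatedly steps in the downhill direction of the slope to find a minimum of a function

- Stochastic Gradient Descent: Estimates the slope from small random samples of the data, trading some precision for efficiency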

- Genetic Algorithms: Evolves solutions using principles from natural selection

- Simulated Annealing: Explores solutions using probabilistic acceptance

Applications:

- Training neural networks (gradient descent)

- Hyperparameter tuning

- Resource allocation

- Engineering design

Strengths:

- Can handle complex objective functions

- Find good solutions when exact optimization is impossible

- Adaptable to many problem types

Limitations:

- May find local optima instead of global optimum

- Require careful tuning

- Can be computationally intensive

Classification Algorithms

Classification algorithms assign inputs to categories based on learned patterns. They’re among the most common machine learning algorithms.

How They Work:

Classification algorithms:

- Examine training examples with known categories

- Learn patterns distinguishing different categories

- Create decision boundaries or rules separating categories

- Apply learned patterns to classify new examples

Examples:

- Logistic Regression: Linear model for binary classification

- Decision Trees: Tree-like structures making sequential decisions

- Random Forests: Combinations of multiple decision trees

- Support Vector Machines: Find optimal boundaries between classes

- Neural Networks: Learn complex, non-linear patterns

Applications:

- Email spam detection

- Medical diagnosis

- Image recognition

- Credit risk assessment

- Sentiment analysis

Strengths:

- Handle complex, non-linear patterns

- Can learn from examples without explicit rules

- Generalizable to new data

Limitations:

- Require substantial training data

- May overfit to training data

- Performance depends on data quality

Regression Algorithms

Regression algorithms predict continuous numerical values rather than discrete categories. They’re essential for forecasting and estimation tasks.

How They Work:

Regression algorithms:

- Examine examples with known input-output relationships

- Learn mathematical relationships between inputs and outputs

- Create models predicting outputs from inputs

- Apply models to predict values for new inputs

Examples:

- Linear Regression: Fits straight lines or planes to data

- Polynomial Regression: Fits curves to data

- Ridge/Lasso Regression: Linear regression with regularization

- Regression Trees: Tree-based predictions

- Neural Networks: Complex non-linear regression
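For the simplest case, linear regression with one input, the best-fitting line can be computed directly with the standard least-squares formulas. The sketch below assumes a single input variable; the function name and the house-size data are invented for illustration.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one input variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how much x varies on its own.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: house size in square meters and price in thousands.
sizes = [50, 70, 90, 110]
prices = [150, 190, 230, 270]

slope, intercept = fit_line(sizes, prices)
print(slope, intercept)         # 2.0 50.0
print(slope * 100 + intercept)  # predicted price for a size of 100: 250.0
```

The learned `slope` is directly readable ("each extra square meter adds about 2 to the price"), which is the kind of interpretability that simpler regression models offer.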

Applications:

- Stock price prediction

- House price estimation

- Weather forecasting

- Sales forecasting

- Energy consumption prediction

Strengths:

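- Simpler models (such as linear regression) provide interpretable relationships

- More flexible models can capture complex patterns

- Some methods can provide confidence intervals for their predictions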

Limitations:

- Sensitive to outliers

- May extrapolate poorly beyond training range

- Require choosing appropriate model complexity

Clustering Algorithms

Clustering algorithms group similar items together without predefined categories. They discover natural structure in data.

How They Work:

Clustering algorithms:

- Measure similarity between data points

- Group similar points together

- Separate dissimilar points into different groups

- Refine groupings iteratively

Examples:

- K-Means: Partitions data into K clusters around centroids

- Hierarchical Clustering: Creates tree-like cluster structures

- DBSCAN: Finds clusters of varying shapes based on density

- Gaussian Mixture Models: Models data as mixture of probability distributions
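To show the assign-then-refine loop in action, here is a stripped-down K-Means sketch for one-dimensional data. It assumes the caller supplies the starting centroids (real implementations usually pick them randomly or with a smarter scheme) and runs a fixed number of iterations; the function name and data are illustrative.

```python
def k_means_1d(points, centroids, iterations=10):
    """Group 1-D points around centroids by alternating assign and update."""
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(point - centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        centroids = [sum(cluster) / len(cluster) if cluster else centroids[i]
                     for i, cluster in enumerate(clusters)]
    return centroids, clusters


points = [1, 2, 3, 10, 11, 12]
centroids, clusters = k_means_1d(points, centroids=[1, 12])
print(centroids)  # [2.0, 11.0]
print(clusters)   # [[1, 2, 3], [10, 11, 12]]
```

No labels were provided anywhere; the two groups emerge purely from the distances between points. Different starting centroids can lead to different groupings on less tidy data, which is the sensitivity to initialization noted in the limitations of clustering.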

Applications:

- Customer segmentation

- Image segmentation

- Anomaly detection

- Document organization

- Gene expression analysis

Strengths:

- Discover hidden patterns

- Work without labeled data

- Useful for exploratory analysis

Limitations:

- Require choosing number of clusters (some algorithms)

- Sensitive to initialization

- Results can be difficult to interpret

Reinforcement Learning Algorithms

Reinforcement learning algorithms learn through trial and error, receiving rewards for good actions and penalties for bad ones.

How They Work:

Reinforcement learning algorithms:

- Start with no knowledge of what actions are good

- Try different actions in an environment

- Receive rewards or penalties based on outcomes

- Learn which actions lead to better rewards

- Develop policies for choosing actions

Examples:

- Q-Learning: Learns value of actions in different states

- Policy Gradient Methods: Directly learn action-selection policies

- Actor-Critic Methods: Combine value and policy learning

- Deep Q-Networks: Neural networks for Q-learning
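A small sketch of tabular Q-Learning may help here. The environment is a hypothetical five-cell corridor where the agent starts at the left end and is rewarded only for reaching the right end. For simplicity the agent explores with purely random actions, and the learning rate, discount factor, and episode count are arbitrary illustrative choices.

```python
import random

N_STATES = 5         # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = [-1, +1]   # index 0 moves left, index 1 moves right


def train_q_table(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values by trial and error in the corridor."""
    rng = random.Random(seed)                  # seeded so runs are repeatable
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # start with no knowledge
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = rng.randrange(len(ACTIONS))   # try a random action
            next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
            reward = 1.0 if next_state == N_STATES - 1 else 0.0
            # Q-Learning update: nudge the estimate toward the reward plus
            # the discounted value of the best action in the next state.
            best_next = max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


q_table = train_q_table()
# The learned policy picks the higher-valued action in each non-goal state.
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

The agent is never told that moving right is correct. It only receives a reward at the goal, and the update rule gradually propagates that reward backward to earlier positions.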

Applications:

- Game playing (AlphaGo, chess, video games)

- Robotics control

- Autonomous vehicles

- Resource management

- Personalized recommendations

Strengths:

- Learn from interaction without supervision

- Can discover novel strategies

- Handle sequential decision-making

Limitations:

- Require many training episodes

- Can be unstable during training

- Difficult to apply in high-stakes domains

How Algorithms Learn: The Learning Process

Understanding how learning algorithms actually learn provides insight into how AI systems work.

The Training Process

Most machine learning algorithms follow this general process:

1. Initialize: Start with a model containing random or default parameters

2. Make Predictions: Use current model to make predictions on training data

3. Measure Error: Calculate how wrong the predictions are using a loss function

4. Update: Adjust model parameters to reduce error

5. Repeat: Continue steps 2-4 until performance is satisfactory

Gradient Descent: The Fundamental Learning Algorithm

Most modern AI relies on gradient descent or variants. Here’s how it works conceptually:

Imagine you’re blindfolded on a hillside and need to reach the bottom. Your strategy:

- Feel the ground around you to determine which direction slopes downward most steeply

- Take a step in that direction

- Repeat until you can’t go any lower

Gradient descent works similarly:

- Calculate the gradient (slope) of the error function

- Move parameters in the direction that reduces error

- Repeat until the error stops decreasing

The “learning rate” determines step size—too large and you might overshoot the minimum; too small and learning takes forever.
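Here is a minimal sketch of that loop, fitting a single parameter `w` in the model `y = w * x` by minimizing mean squared error. The data, function name, and default settings are assumptions chosen so the arithmetic is easy to follow.

```python
def gradient_descent(xs, ys, learning_rate=0.01, steps=200):
    """Fit y = w * x by repeatedly stepping against the error gradient."""
    w = 0.0                 # initialize with a default parameter
    n = len(xs)
    for _ in range(steps):
        # Loss is the mean of (w*x - y)^2; its slope with respect to w
        # is the mean of 2 * (w*x - y) * x.
        gradient = (2 / n) * sum((w * x - y) * x for x, y in zip(xs, ys))
        w -= learning_rate * gradient   # move in the direction that reduces error
    return w


xs = [1, 2, 3, 4]
ys = [3, 6, 9, 12]   # generated by y = 3 * x, so the ideal w is 3

print(round(gradient_descent(xs, ys), 4))  # 3.0
```

With this particular data, raising `learning_rate` to 0.2 makes each step overshoot so badly that `w` moves further from 3 every iteration, while a very small value such as 0.0001 would need far more than 200 steps to get close.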

The Role of Data

Learning algorithms are fundamentally data-driven:

Training Data: Examples the algorithm learns from

Validation Data: Examples used to tune the algorithm and prevent overfitting

Test Data: Examples used to evaluate final performance

The quality and quantity of data profoundly affect learning:

- More data generally improves performance (with diminishing returns)

- Better quality data (accurate labels, representative samples) is crucial

- Diverse data helps models generalize to new situations

- Biased data leads to biased models

Overfitting and Generalization

A key challenge in learning algorithms is balancing:

Overfitting: Learning the training data too specifically, including noise and peculiarities, resulting in poor performance on new data

Underfitting: Not learning enough from training data, resulting in poor performance everywhere

Good algorithms find the sweet spot: learning general patterns without memorizing specific examples.

Evaluating Algorithms: How Do We Know They Work?

With learning algorithms, we need ways to evaluate whether they actually work well.

Performance Metrics

Different metrics measure different aspects of algorithm performance:

For Classification:

- Accuracy: Percentage of correct predictions

- Precision: Of predicted positives, how many were actually positive

- Recall: Of actual positives, how many were correctly predicted

- F1 Score: Harmonic mean of precision and recall

For Regression:

- Mean Squared Error: Average squared difference between predictions and actual values

- Mean Absolute Error: Average absolute difference

- R-squared: Proportion of variance explained by the model

For Clustering:

- Silhouette Score: How well-separated clusters are

- Within-Cluster Sum of Squares: Compactness of clusters
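Several of these metrics are short enough to compute by hand. The sketch below covers the classification metrics and the two regression error measures, assuming binary labels where 1 means "positive"; the function names and sample values are illustrative.

```python
def classification_metrics(actual, predicted):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(actual, predicted))
    true_pos = sum(1 for a, p in pairs if a == 1 and p == 1)
    false_pos = sum(1 for a, p in pairs if a == 0 and p == 1)
    false_neg = sum(1 for a, p in pairs if a == 1 and p == 0)
    accuracy = sum(1 for a, p in pairs if a == p) / len(pairs)
    # Guard against division by zero when there are no positives.
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def mean_squared_error(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


labels = [1, 1, 1, 0, 0, 0, 0, 1]
guesses = [1, 1, 0, 0, 0, 1, 0, 1]
print(classification_metrics(labels, guesses))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}

print(mean_absolute_error([3.0, 5.0, 7.0], [2.0, 5.0, 9.0]))  # 1.0
```

In this example the model makes one false positive and one false negative out of eight predictions, which is why all four classification metrics happen to land on the same value. On imbalanced data they usually diverge, which is the reason to look at more than accuracy alone.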

Cross-Validation

To reliably evaluate algorithms, we use cross-validation:

- Split data into multiple folds (typically 5 or 10)

- Train on all folds except one

- Test on the held-out fold

- Repeat for each fold

- Average results across all folds

This provides more reliable performance estimates than single train-test splits.
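The fold-by-fold procedure can be sketched without any libraries. In the version below, `train_fn` and `score_fn` are hypothetical callbacks standing in for whatever model and metric you are evaluating, and the data is split in its given order (real workflows typically shuffle first).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k consecutive folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]                    # held-out fold
        train = indices[:start] + indices[start + size:]      # all other folds
        yield train, test
        start += size


def cross_validate(data, k, train_fn, score_fn):
    """Train on k-1 folds, score on the held-out fold, and average the scores."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(data), k):
        model = train_fn([data[i] for i in train_idx])
        scores.append(score_fn(model, [data[i] for i in test_idx]))
    return sum(scores) / len(scores)


# Toy "model": always predict the mean of the training values.
def train_mean(values):
    return sum(values) / len(values)


def mean_abs_error(model, values):
    return sum(abs(v - model) for v in values) / len(values)


data = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
print(cross_validate(data, k=3, train_fn=train_mean, score_fn=mean_abs_error))
```

Each value is used for testing exactly once and for training in every other round, so no single lucky or unlucky split dominates the estimate.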

Computational Complexity

Beyond accuracy, we care about computational requirements:

Time Complexity: How runtime scales with data size

Space Complexity: How memory requirements scale with data size

Algorithms are often described using Big O notation:

- O(n): Linear time (runtime roughly doubles when the data doubles)

- O(n²): Quadratic time (runtime roughly quadruples when the data doubles)

- O(log n): Logarithmic time (runtime grows slowly even with large data increases)

- O(1): Constant time (runtime is unchanged regardless of data size)
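One way to get a feel for these growth rates is to count the steps two algorithms take as the data grows. The sketch below compares a linear scan, which is O(n), with binary search on sorted data, which is O(log n). The function names are illustrative, and searching for the last item is used as a worst case for the scan.

```python
def linear_search_steps(sorted_items, target):
    """Count comparisons made while scanning from the front: O(n)."""
    steps = 0
    for item in sorted_items:
        steps += 1
        if item == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Count comparisons made while halving the search range: O(log n)."""
    steps = 0
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            low = mid + 1    # target must be in the upper half
        else:
            high = mid - 1   # target must be in the lower half
    return steps


for n in (1_000, 2_000, 4_000):
    items = list(range(n))
    target = n - 1   # the last item
    print(n, linear_search_steps(items, target), binary_search_steps(items, target))
```

Each time the list doubles, the linear scan's step count doubles with it, while binary search needs only about one extra step.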

The Art of Choosing Algorithms

Selecting appropriate algorithms requires understanding your problem, data, and constraints.

Problem Characteristics

What type of problem?

- Assigning inputs to categories → Classification algorithms

- Predicting numerical values → Regression algorithms

- Grouping → Clustering algorithms

- Sequential decisions → Reinforcement learning

How much data?

- Small datasets → Simpler algorithms (trees, linear models)

- Large datasets → More complex algorithms possible (deep learning)

What data type?

- Structured/tabular → Traditional ML (trees, linear models)

- Images → Convolutional neural networks

- Text → NLP algorithms, transformers

- Time series → Recurrent networks, specialized time series methods

Practical Constraints

Computational resources:

- Limited resources → Simpler, faster algorithms

- Abundant resources → Complex algorithms possible

Interpretability requirements:

- Need explainability → Decision trees, linear models

- Performance priority → Neural networks acceptable

Training time available:

- Quick deployment → Pre-trained models, simple algorithms

- Time available → More thorough training, complex algorithms

Deployment environment:

- Mobile/edge devices → Lightweight algorithms

- Cloud servers → Complex algorithms feasible

The Iterative Approach

In practice, algorithm selection is iterative:

- Start simple: Begin with basic algorithms as baseline

- Evaluate thoroughly: Test on validation data

- Analyze failures: Understand where and why algorithm fails

- Try alternatives: Test different algorithms

- Optimize: Tune hyperparameters of best-performing algorithms

- Ensemble: Sometimes combine multiple algorithms

Common Misconceptions About Algorithms

Let’s address some common misunderstandings:

“More Complex Algorithms Are Always Better”

Reality: Complex algorithms can overfit, require more data and computation, and may not outperform simpler approaches. Start simple and increase complexity only when justified.

“Algorithms Are Objective”

Reality: Algorithms embody choices made by their creators—what to optimize, what data to use, what assumptions to make. These choices reflect human values and biases.

“The Algorithm Is the Hard Part”

Reality: In practical AI projects, data collection, cleaning, and feature engineering typically consume more effort than algorithm selection. Algorithms matter, but they’re one part of a larger process.

“New Algorithms Are Always Better”

Reality: Newer algorithms aren’t automatically superior. Many classic algorithms remain competitive. The best algorithm depends on your specific problem, data, and constraints.

“Algorithms Are Set in Stone”

Reality: Algorithms are constantly evolving. Researchers continually develop new algorithms and improve existing ones. What works best today may be surpassed tomorrow.

The Future of Algorithms in AI

Algorithms continue evolving, with several exciting directions:

Neural Architecture Search

Algorithms that design other algorithms—automatically finding optimal neural network architectures for specific tasks. This meta-learning could accelerate AI development.

Few-Shot and Zero-Shot Learning

Algorithms that learn from very few examples or even generalize to tasks they weren’t explicitly trained on, moving closer to human-like learning efficiency.

Explainable AI

Algorithms designed for interpretability, helping humans understand AI decisions. This addresses the “black box” problem of complex algorithms.

Efficient Algorithms

Development of algorithms requiring less computation and energy, making AI more sustainable and accessible.

Hybrid Approaches

Combining neural learning with symbolic reasoning, potentially achieving both the pattern recognition of deep learning and the logical reasoning of traditional AI.

Conclusion: Algorithms as Foundation

Understanding algorithms—what they are, how they work, and why they matter—provides the foundation for understanding all of artificial intelligence. Algorithms are the precise instructions that transform hardware and data into intelligent behavior. They’re the link between human ideas about intelligence and computational implementation.

Every AI system you encounter—from voice assistants to self-driving cars to recommendation systems—is built on algorithms. These algorithms might be simple or complex, traditional or learning-based, but they’re all following the same basic principle: precise, step-by-step instructions for accomplishing tasks.

As you continue learning about AI, you’ll encounter many specific algorithms. Some will seem intimidating at first, with mathematical notation and technical terminology. But remember that underneath, they’re all variants of the same basic concept: recipes for solving problems, decomposed into steps a computer can execute.

Understanding algorithms doesn’t mean you need to implement every algorithm from scratch or memorize mathematical details. It means understanding the concepts: what problems different algorithms solve, how they approach those problems, what trade-offs they make, and when to use which approaches.

This conceptual understanding is powerful. It helps you:

- Choose appropriate tools for your problems

- Understand why AI systems succeed or fail

- Debug issues when they arise

- Evaluate claims about AI capabilities

- Communicate effectively about AI

Algorithms are the building blocks of AI, and now you understand what those building blocks are and how they fit together. This foundation will support everything else you learn about artificial intelligence. Each new concept, technique, or application will ultimately come back to algorithms—precise instructions for accomplishing intelligent tasks.

Welcome to the algorithmic foundation of AI. Every intelligent system you’ll encounter is built on these principles. Understanding them means understanding the essence of how we create artificial intelligence.