Neural networks are at the heart of modern artificial intelligence, powering advances in image recognition, natural language processing, recommendation systems, and more. These computational models, loosely inspired by the human brain, learn from data rather than following hand-written rules for each task. This has let machine learning systems tackle problems, such as recognizing objects in photographs or translating between languages, that were long impractical to solve with hand-coded rules. In this article, we explore the fundamentals of neural networks, their structure, and their significance in today’s AI landscape.

What Are Neural Networks?

A neural network is a computational model designed to recognize patterns and relationships in data. Inspired by the structure of the human brain, a neural network comprises layers of interconnected nodes, or neurons, that process and transmit information. Neural networks excel at learning from large datasets, making them suitable for tasks such as classification, regression, and clustering.

Key Characteristics

Non-Linear Decision Making: Neural networks can model complex, non-linear relationships between inputs and outputs.

Learning From Data: By adjusting their internal parameters, neural networks learn patterns directly from data, improving performance over time.

Versatility: They can handle structured data (e.g., tabular data) and unstructured data (e.g., images, text, audio).

Biological Inspiration

Neural networks are loosely based on the structure and functioning of biological neural systems. In the human brain, neurons receive signals through dendrites, process them in the cell body, and transmit them via axons to other neurons. Artificial neural networks mimic this process by using artificial neurons (nodes) that receive, process, and pass on information to other neurons in the network.

While neural networks are inspired by biology, they are simplified mathematical models designed for computational efficiency rather than biological accuracy.

Components of a Neural Network

A neural network consists of the following primary components:

1. Neurons

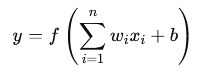

Neurons are the building blocks of a neural network. Each neuron receives inputs, processes them using a weighted sum and an activation function, and produces an output.

Mathematically, a neuron performs the following operation:

$$y = f\left(\sum_{i} w_i x_i + b\right)$$

Where:

- $x_i$: Input features

- $w_i$: Weights associated with each input

- b: Bias term

- f: Activation function

- y: Output of the neuron
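The neuron operation described above, a weighted sum of the inputs plus a bias passed through an activation function, can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: the function names `sigmoid` and `neuron_output`, the choice of sigmoid as the activation, and the sample values are all assumptions made for the example.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, used here as the example activation f."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids overflow for very negative z
    return e / (1.0 + e)


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Compute y = f(sum_i w_i * x_i + b) for a single neuron."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Illustrative values only: 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0, and sigmoid(0) = 0.5
y = neuron_output([1.0, 2.0], [0.5, -0.25], bias=0.0)
print(y)  # 0.5
```

Passing a different function as `activation` changes the neuron's behavior without touching the weighted-sum step.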

2. Layers

Neural networks are organized into layers, each serving a specific purpose:

- Input Layer: The first layer that accepts raw data inputs.

- Hidden Layers: Intermediate layers that process and transform the data. The number and size of hidden layers determine the network’s depth and complexity.

- Output Layer: The final layer that generates the network’s predictions or outputs.

3. Weights and Biases

Weights and biases are the learnable parameters of a neural network. Weights determine the strength of connections between neurons, while a bias shifts a neuron’s weighted sum independently of the input values, so the neuron can produce a non-zero output even when all of its inputs are zero.

4. Activation Functions

Activation functions introduce non-linearity into the network, enabling it to model complex relationships. Common activation functions include:

- Sigmoid: Maps input values to a range between 0 and 1.

- ReLU (Rectified Linear Unit): Outputs the input directly if it’s positive; otherwise, it outputs zero.

- Tanh: Maps input values to a range between -1 and 1.
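The three activation functions listed above are short enough to write out directly. The sketch below uses only Python's standard `math` module; the function names are chosen for this example, and the sigmoid is written in a numerically stable form so that very negative inputs do not overflow.

```python
import math


def sigmoid(z):
    """Maps any real input into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # stable branch for negative inputs
    return e / (1.0 + e)


def relu(z):
    """Returns the input if it is positive, otherwise zero."""
    return max(0.0, z)


def tanh(z):
    """Maps any real input into the range -1 to 1."""
    return math.tanh(z)


# Compare the three functions on a few sample inputs.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), relu(z), tanh(z))
```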

How Neural Networks Work

Neural networks operate in two primary phases: forward propagation and backpropagation.

1. Forward Propagation

During forward propagation, input data flows through the network, layer by layer. Each neuron calculates its output using the weighted sum of its inputs and an activation function. The final output is generated at the output layer.
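Forward propagation as described above can be sketched as a loop over layers, where each layer applies the neuron computation to the previous layer's outputs. The network below is hypothetical: its shape, weights, and the helper names `dense_layer` and `forward` are assumptions chosen only to make the data flow visible.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` belongs to one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass `inputs` through each (weights, biases, activation) layer in order."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical network: 2 inputs, one hidden layer of 2 ReLU neurons, 1 linear output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 2.0]], [0.1], identity),
]
print(forward([2.0, 1.0], layers))  # approximately [4.1]
```

For the input `[2.0, 1.0]`, the hidden layer produces `[1.0, 1.5]`, and the output neuron combines them as 1.0 * 1.0 + 2.0 * 1.5 + 0.1.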

2. Backpropagation

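Backpropagation computes how much each weight and bias contributed to the error between the predicted output and the actual target, so the parameters can be adjusted to reduce that error. Training with backpropagation involves:

- Calculating the Loss: The difference between the predicted output and the actual target is quantified using a loss function (e.g., Mean Squared Error or Cross-Entropy Loss).

- Computing Gradients: The chain rule is applied backward from the output layer to the input layer to obtain the gradient of the loss with respect to every weight and bias.

- Updating Parameters: Using optimization algorithms like Gradient Descent, the weights and biases are updated in the direction that reduces the loss.

This iterative process continues until the loss stops improving or a stopping criterion is met; convergence to a globally optimal solution is not guaranteed.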
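To make the training loop concrete, here is a minimal sketch of backpropagation and gradient descent on a deliberately tiny network: one tanh hidden neuron feeding one linear output neuron, trained with Mean Squared Error. The architecture, parameter names (`w1`, `b1`, `w2`, `b2`), data, learning rate, and epoch count are all assumptions chosen for illustration; real networks apply the same chain-rule steps across many neurons and layers.

```python
import math


def forward(x, p):
    """Tiny network: one tanh hidden neuron followed by one linear output neuron."""
    h = math.tanh(p["w1"] * x + p["b1"])
    y = p["w2"] * h + p["b2"]
    return h, y


def mean_squared_error(p, data):
    return sum((forward(x, p)[1] - t) ** 2 for x, t in data) / len(data)


def gradients(p, data):
    """Backpropagation: apply the chain rule from the loss back to each parameter."""
    g = {"w1": 0.0, "b1": 0.0, "w2": 0.0, "b2": 0.0}
    n = len(data)
    for x, t in data:
        h, y = forward(x, p)
        dy = 2.0 * (y - t) / n             # d(loss)/d(y)
        g["w2"] += dy * h                  # output layer weight
        g["b2"] += dy                      # output layer bias
        dz = dy * p["w2"] * (1.0 - h * h)  # back through tanh: derivative is 1 - tanh(z)^2
        g["w1"] += dz * x                  # hidden layer weight
        g["b1"] += dz                      # hidden layer bias
    return g


def train(p, data, learning_rate=0.1, epochs=200):
    """Plain gradient descent: step each parameter against its gradient."""
    for _ in range(epochs):
        g = gradients(p, data)
        for name in p:
            p[name] -= learning_rate * g[name]
    return p


# Hypothetical data and starting parameters, for illustration only.
samples = [(-1.0, -0.5), (0.0, 0.0), (1.0, 0.5)]
params = {"w1": 0.5, "b1": 0.0, "w2": 0.5, "b2": 0.0}
print("loss before:", mean_squared_error(params, samples))
train(params, samples)
print("loss after:", mean_squared_error(params, samples))
```

The `gradients` function is the backpropagation step, and `train` is the parameter update. In practice these are usually handled by a framework's automatic differentiation and optimizer classes.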

Types of Neural Networks

Neural networks come in various forms, each suited for specific tasks:

1. Feedforward Neural Networks (FNNs)

- The simplest type of neural network where information flows in one direction: from input to output.

- Commonly used for tasks like regression and classification.

2. Convolutional Neural Networks (CNNs)

- Specialized for image and video data.

- Use convolutional layers to detect patterns like edges and textures in images.

3. Recurrent Neural Networks (RNNs)

- Designed for sequential data, such as time series or text.

- Use recurrent connections to capture temporal dependencies.

4. Generative Adversarial Networks (GANs)

- Consist of two networks: a generator and a discriminator.

- Used for generating realistic images, videos, and other synthetic data.

5. Autoencoders

- Learn compressed representations of data.

- Used for tasks like dimensionality reduction and anomaly detection.

Applications of Neural Networks

The versatility of neural networks has led to their adoption in a wide range of applications:

1. Image Recognition

Neural networks power facial recognition, object detection, and medical imaging diagnostics.

2. Natural Language Processing

Tasks like sentiment analysis, language translation, and chatbots are driven by neural networks.

3. Autonomous Systems

Self-driving cars rely on neural networks for tasks such as object detection and route planning.

4. Fraud Detection

Financial institutions use neural networks to detect fraudulent transactions by identifying unusual patterns.

5. Gaming

Neural networks are used to create adaptive AI that can learn and improve over time in video games.

Deep Neural Networks (DNNs)

When a neural network consists of multiple hidden layers, it is referred to as a Deep Neural Network (DNN). The depth of a network allows it to learn hierarchical patterns in data, where each successive layer captures increasingly abstract features.

Why Depth Matters

Adding more layers enables the network to model complex relationships. For example:

- In image processing, the first layer might detect edges, the second layer detects shapes, and deeper layers recognize objects.

- In natural language processing, shallow layers may identify individual words, while deeper layers capture sentence structures and meanings.

However, the increased depth comes with challenges like computational complexity, the risk of overfitting, and difficulty in training.

Key Challenges in Training Neural Networks

1. Vanishing and Exploding Gradients

When training deep networks using backpropagation, gradients can become very small (vanishing gradients) or very large (exploding gradients). This makes it difficult for the network to update its weights effectively, particularly in the earlier layers.

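- Vanishing Gradients: Activation functions like sigmoid or tanh saturate for large positive or negative inputs, where their derivatives are close to zero. Backpropagation multiplies these small derivatives layer by layer, so the gradients that reach the earlier layers shrink toward zero.

- Exploding Gradients: When the per-layer factors are larger than one, for example because of large weights, gradients grow with depth, causing unstable updates and hindering convergence.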
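A rough way to see both effects is to look at a chain of single-neuron sigmoid layers, where the gradient reaching the first layer is scaled by a product of one factor per layer. The sketch below is a simplification under stated assumptions: every layer shares the same weight and pre-activation value, and `gradient_scale` is a name invented for this example.

```python
import math


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_derivative(z):
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def gradient_scale(depth, pre_activation=0.0, weight=1.0):
    """Product of the per-layer factors weight * sigmoid'(z) along `depth` layers."""
    return (weight * sigmoid_derivative(pre_activation)) ** depth


for depth in (1, 5, 10, 20):
    # weight=1.0 gives a factor of 0.25 per layer (vanishing);
    # weight=5.0 gives a factor of 1.25 per layer (exploding).
    print(depth, gradient_scale(depth), gradient_scale(depth, weight=5.0))
```

Even in the best case for sigmoid, a factor of 0.25 per layer shrinks the gradient by roughly a million over ten layers, while a per-layer factor above one grows without bound as depth increases.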

2. Overfitting

Overfitting occurs when the network memorizes the training data instead of learning generalizable patterns. This results in poor performance on unseen data.

3. Computational Costs

Training large neural networks with millions of parameters requires significant computational power and time. This can be a barrier for researchers and practitioners with limited resources.

4. Hyperparameter Tuning

Neural networks have many hyperparameters, such as learning rate, number of layers, and number of neurons per layer. Optimizing these hyperparameters can be time-consuming and challenging.

5. Lack of Interpretability

Deep neural networks are often considered “black boxes” because it is difficult to understand how they make decisions. This lack of transparency can be a concern in high-stakes applications like healthcare or finance.

Strategies for Training and Optimizing Neural Networks

1. Addressing Vanishing and Exploding Gradients

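- Use activation functions like ReLU (Rectified Linear Unit), which mitigate vanishing gradients because their derivative is 1 for positive inputs, so gradients are not shrunk as they pass through active neurons.

- Apply Batch Normalization, a technique that normalizes the inputs to each layer, improving gradient flow and accelerating convergence.

- Apply gradient clipping, which caps the gradient norm at a chosen threshold, to keep exploding gradients from destabilizing updates.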

2. Preventing Overfitting

- Regularization: Techniques like L2 regularization (weight decay) penalize large weights, discouraging the model from overfitting.

- Dropout: Randomly deactivating a subset of neurons during training forces the network to rely on multiple pathways, improving generalization.

- Early Stopping: Monitor the validation loss during training and stop the process when performance on validation data starts to degrade.
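The three techniques above can each be sketched in a few lines. The helpers below are illustrative assumptions, not any library's API: `l2_penalty` is the term added to the loss, `dropout` uses the common "inverted" variant that rescales surviving activations during training, and `early_stopping_epoch` uses a `patience` parameter (an assumed convention) for how many non-improving epochs to tolerate.

```python
import random


def l2_penalty(weights, strength):
    """L2 regularization term added to the loss: strength * sum of squared weights."""
    return strength * sum(w * w for w in weights)


def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each activation with probability drop_prob, rescale the rest."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - drop_prob
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop."""
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, bad_epochs = loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1


rng = random.Random(0)  # seeded only so the example is repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=rng))
print(early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.9, 0.6]))  # 4
```

In the early-stopping example, the validation loss last improves at epoch 2, then fails to improve at epochs 3 and 4, so training stops at epoch 4 and never reaches the later value of 0.6. A larger `patience` trades extra training time for a lower chance of stopping too soon.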

3. Managing Computational Costs

- Use pre-trained models: Leveraging models like ResNet or BERT can save time and resources by fine-tuning rather than training from scratch.

- Reduce model complexity: Start with smaller networks and increase complexity only when necessary.

- Utilize hardware accelerators: GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) significantly speed up training.

4. Hyperparameter Optimization

Automated methods like grid search, random search, or Bayesian optimization can streamline the process of finding optimal hyperparameters.
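Grid search and random search are simple enough to sketch directly. In the example below, `toy_validation_loss` is a made-up stand-in for "train a model with this configuration and return its validation loss"; the search space, function names, and seed are likewise assumptions for illustration. Bayesian optimization is not shown because it needs a surrogate model.

```python
import itertools
import math
import random


def toy_validation_loss(config):
    """Hypothetical objective; a real one would train and validate a model."""
    lr_term = (math.log10(config["learning_rate"]) + 2.0) ** 2
    size_term = abs(config["hidden_units"] - 32) / 32.0
    return lr_term + size_term


def grid_search(space, evaluate):
    """Evaluate every combination in `space` (a dict of name -> list of values)."""
    names = list(space)
    best_config, best_score = None, float("inf")
    for values in itertools.product(*(space[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


def random_search(space, evaluate, trials, seed=0):
    """Evaluate `trials` randomly sampled configurations from `space`."""
    rng = random.Random(seed)
    best_config, best_score = None, float("inf")
    for _ in range(trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        score = evaluate(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


space = {
    "learning_rate": [0.1, 0.01, 0.001, 0.0001],
    "hidden_units": [8, 16, 32, 64],
}
print(grid_search(space, toy_validation_loss))
print(random_search(space, toy_validation_loss, trials=6))
```

Grid search evaluates all 16 combinations here, while random search evaluates only as many as `trials` allows, which matters when each evaluation means a full training run.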

5. Improving Interpretability

- Visualization Tools: Use tools like Grad-CAM or SHAP to visualize the contribution of features or neurons to the final prediction.

- Simpler Architectures: Opt for simpler models when interpretability is critical.

Popular Architectures in Neural Networks

Over time, researchers have developed specialized architectures for solving domain-specific problems. Here are some widely used neural network architectures:

1. Convolutional Neural Networks (CNNs)

CNNs are designed to process grid-like data such as images. They use convolutional layers to detect spatial hierarchies of patterns (e.g., edges, shapes).

Key components of CNNs:

- Convolutional Layers: Apply filters to input data to detect patterns.

- Pooling Layers: Downsample feature maps, reducing dimensions and retaining important information.

- Fully Connected Layers: Combine extracted features for classification or regression.
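The convolution and pooling steps can be illustrated on a small grid of numbers. The sketch below assumes no padding, a stride of 1, and a single channel, and it computes cross-correlation (the kernel is not flipped), which is what deep learning libraries typically call convolution. The function names, image, and kernel are made up for the example.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[r * size + dr][c * size + dc]
                for dr in range(size)
                for dc in range(size)
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# A 4x4 image with a vertical edge between the dark (0) and bright (1) halves.
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1]]  # responds where brightness increases left to right

features = conv2d(image, edge_kernel)
print(features)              # each row is [0, 1, 0]
print(max_pool2d(features))  # [[1], [1]]
```

The filter responds only at the column where the brightness changes, and pooling keeps that response while shrinking the feature map.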

Applications:

- Image classification (e.g., ResNet, VGGNet).

- Object detection (e.g., YOLO, Faster R-CNN).

- Medical imaging analysis.

2. Recurrent Neural Networks (RNNs)

RNNs are designed for sequential data, such as time series or text. They maintain a hidden state that captures information about previous inputs, enabling them to process sequences.
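The hidden-state idea can be sketched with a simple recurrent update. The article does not specify a formula, so the example assumes the common form h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); the function names and weights are illustrative.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One time step: combine the current input with the previous hidden state."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_xh[k], x))
            + sum(w * hj for w, hj in zip(w_hh[k], h_prev))
            + b_h[k]
        )
        for k in range(len(b_h))
    ]


def run_rnn(sequence, h0, w_xh, w_hh, b_h):
    """Process a sequence step by step, returning the hidden state after each input."""
    h = h0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# Hypothetical one-dimensional input and hidden state.
states = run_rnn(
    sequence=[[1.0], [0.0], [0.0]],
    h0=[0.0],
    w_xh=[[1.0]],
    w_hh=[[1.0]],
    b_h=[0.0],
)
print(states)
```

Only the first input is non-zero, yet the later hidden states are also non-zero: the first input's influence is carried forward through the recurrent weight `w_hh`.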

Challenges with RNNs:

- They suffer from vanishing gradients, making it difficult to capture long-term dependencies.

Solutions:

- Long Short-Term Memory (LSTM): A variation of RNNs that introduces memory cells to capture long-term dependencies.

- Gated Recurrent Units (GRU): A simpler alternative to LSTMs that often performs comparably while using fewer parameters.

Applications:

- Text generation.

- Speech recognition.

- Financial time-series forecasting.

3. Transformer Networks

Transformers have revolutionized natural language processing (NLP) by building their architecture around attention, a mechanism that lets the model weigh the most relevant parts of the input sequence when processing each element.

Key features:

- Self-attention mechanism to capture relationships between all words in a sequence.

- Parallel processing of all positions in a sequence, which makes training faster than with step-by-step recurrent models.
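The core of self-attention is scaled dot-product attention: each position's query is compared with every key, the scores are turned into weights with a softmax, and the output is a weighted average of the values. The sketch below is a simplification: it omits the learned projection matrices and multiple heads used in real transformers, and the function names and inputs are assumptions for the example.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of `values` based on query-key similarity."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        outputs.append(
            [
                sum(w * v[j] for w, v in zip(weights, values))
                for j in range(len(values[0]))
            ]
        )
    return outputs


# In self-attention, queries, keys, and values all come from the same sequence.
# Here the raw token vectors are used directly (no learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in scaled_dot_product_attention(tokens, tokens, tokens):
    print(row)
```

Because each query's output depends only on the full set of keys and values, all positions can be computed independently, which is what allows parallel processing.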

Applications:

- Language translation (e.g., Google Translate).

- Sentiment analysis.

- Large-scale pre-trained models like GPT and BERT.

Applications of Neural Networks Across Industries

Neural networks continue to transform industries with their ability to solve diverse problems:

1. Healthcare

- Diagnosing diseases using medical images.

- Predicting patient outcomes from electronic health records.

- Drug discovery and personalized medicine.

2. Finance

- Fraud detection in transactions.

- Stock price prediction and portfolio optimization.

- Risk assessment for loans and insurance.

3. Retail and E-Commerce

- Product recommendation systems.

- Customer segmentation for targeted marketing.

- Demand forecasting and inventory management.

4. Manufacturing

- Predictive maintenance for machinery.

- Quality control using image analysis.

- Process optimization and automation.

5. Entertainment

- Content recommendation (e.g., Netflix, Spotify).

- AI-driven video game characters.

- Realistic visual effects in movies.

Advancements in Neural Networks

As research in neural networks evolves, several cutting-edge advancements are shaping the future of AI:

1. Transfer Learning

Transfer learning leverages pre-trained neural networks to solve new problems with limited data. Instead of training a model from scratch, practitioners fine-tune pre-trained models like BERT, ResNet, or GPT for specific tasks. This approach has revolutionized fields like natural language processing (NLP) and computer vision, making state-of-the-art performance accessible to smaller teams and organizations.

2. Neural Architecture Search (NAS)

Designing optimal neural network architectures is a challenging and time-consuming task. Neural Architecture Search automates this process by using machine learning to identify architectures that perform well on a given dataset. NAS has led to the creation of highly efficient models like EfficientNet.

3. Generative Neural Networks

Generative models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) have advanced the field of content creation. Applications include:

- Generating realistic images and videos.

- Synthesizing voices and music.

- Enhancing low-resolution images.

4. Attention Mechanisms and Transformers

Transformers, powered by attention mechanisms, have transformed sequential data processing. Models like GPT-4 and BERT achieve strong results on NLP tasks by capturing context across entire sequences at once rather than processing data step by step.

5. Neural Network Compression

Techniques like pruning, quantization, and knowledge distillation reduce the size and computational requirements of neural networks without significantly affecting performance. These methods enable neural networks to run efficiently on edge devices like smartphones and IoT devices.
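Two of these techniques, pruning and quantization, can be illustrated on a plain list of weights. The sketch below assumes magnitude-based pruning and symmetric 8-bit linear quantization, which are common variants but only two of several; the function names are invented for the example. Knowledge distillation is not shown because it requires training a second model.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the shared scale."""
    return [q * scale for q in quantized]


weights = [0.1, -2.0, 0.05, 1.5]
print(prune_by_magnitude(weights, 0.5))  # [0.0, -2.0, 0.0, 1.5]

quantized, scale = quantize_int8(weights)
print(quantized, scale)
print(dequantize(quantized, scale))
```

Storing one small integer per weight plus a single shared scale takes much less memory than 32-bit floats, at the cost of a rounding error of at most about half the scale per weight.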

6. Federated Learning

Federated learning allows neural networks to be trained on distributed data sources without sharing the raw data. This approach enhances privacy and security while enabling collaborative model development across organizations.
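One common way to combine the locally trained models is federated averaging, in which a server averages the clients' parameters, weighted by how much data each client trained on. The article does not name a specific algorithm, so this choice, the function name `federated_average`, and the sample numbers are assumptions for illustration. Only the aggregation step is shown; local training and communication are omitted.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by each client's dataset size."""
    if len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical clients share only their parameters, never their raw data.
client_a = [1.0, 2.0]  # trained locally on 1 example
client_b = [3.0, 4.0]  # trained locally on 3 examples
print(federated_average([client_a, client_b], [1, 3]))  # [2.5, 3.5]
```

The client with more data pulls the shared model closer to its own parameters, while the server never sees any individual training example.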

Ethical Considerations in Neural Networks

The rapid development and deployment of neural networks raise important ethical questions that must be addressed to ensure responsible AI adoption.

1. Bias in Neural Networks

Neural networks trained on biased datasets can propagate and even amplify societal biases. For example, facial recognition systems have been shown to have higher error rates for some demographic groups when those groups are underrepresented in the training data. Mitigating bias requires:

- Careful dataset curation and augmentation.

- Bias detection and correction mechanisms.

- Transparent reporting of model limitations.

2. Privacy Concerns

Neural networks often require large amounts of personal data for training. Ensuring data privacy involves:

- Using anonymization techniques.

- Employing federated learning to keep data decentralized.

- Adhering to regulations like GDPR.

3. Misuse of Generative Models

Generative neural networks can be misused to create deepfakes or spread disinformation. Addressing these risks requires:

- Developing robust detection methods for synthetic content.

- Implementing ethical guidelines for AI development and deployment.

4. Environmental Impact

Training large-scale neural networks consumes significant computational resources, leading to high energy usage and carbon emissions. Researchers are exploring energy-efficient training methods and promoting the use of renewable energy sources for AI infrastructure.

5. Accountability and Transparency

Neural networks often operate as “black boxes,” making it difficult to explain their decisions. Improving transparency involves:

- Using interpretable models when possible.

- Developing tools to visualize and explain neural network decisions.

- Ensuring accountability for AI-driven outcomes.

Future of Neural Networks

Neural networks are poised to play a transformative role in shaping the future of technology and society. Here’s a glimpse of what lies ahead:

1. Expansion of AI Applications

Neural networks will continue to penetrate new domains, including:

- Healthcare: Personalized treatment plans and real-time diagnostics.

- Education: AI-driven personalized learning experiences.

- Climate Science: Predicting and mitigating the effects of climate change.

2. Collaboration Between Humans and AI

The future will see greater collaboration between humans and neural networks. For instance, AI can assist scientists in drug discovery, architects in design, and artists in creating new forms of expression.

3. AI in Edge Computing

Advancements in neural network compression and hardware acceleration will enable sophisticated AI models to run on edge devices, reducing latency and dependence on cloud computing. This shift will drive innovations in autonomous vehicles, smart homes, and IoT ecosystems.

4. Generalized AI

While current neural networks excel at specific tasks, research is progressing toward generalized AI systems capable of performing a wide range of tasks with minimal human intervention.

5. Ethical and Regulatory Frameworks

As neural networks become more ubiquitous, governments and organizations will establish comprehensive frameworks to ensure their safe and ethical use. This includes guidelines for transparency, accountability, and fairness.

Conclusion

Neural networks have evolved from simple computational models to powerful tools that are transforming industries and redefining human potential. Their ability to learn from data, adapt to new challenges, and solve complex problems has made them indispensable in modern technology.

While neural networks offer immense opportunities, they also come with challenges and ethical considerations that must be addressed. Ensuring fair, transparent, and responsible use of neural networks is essential to harness their benefits while mitigating risks.

As research continues to advance, neural networks have considerable potential to reshape fields like healthcare, education, and climate science. By building on the foundations of today, the neural networks of tomorrow promise a future of innovation, collaboration, and progress.