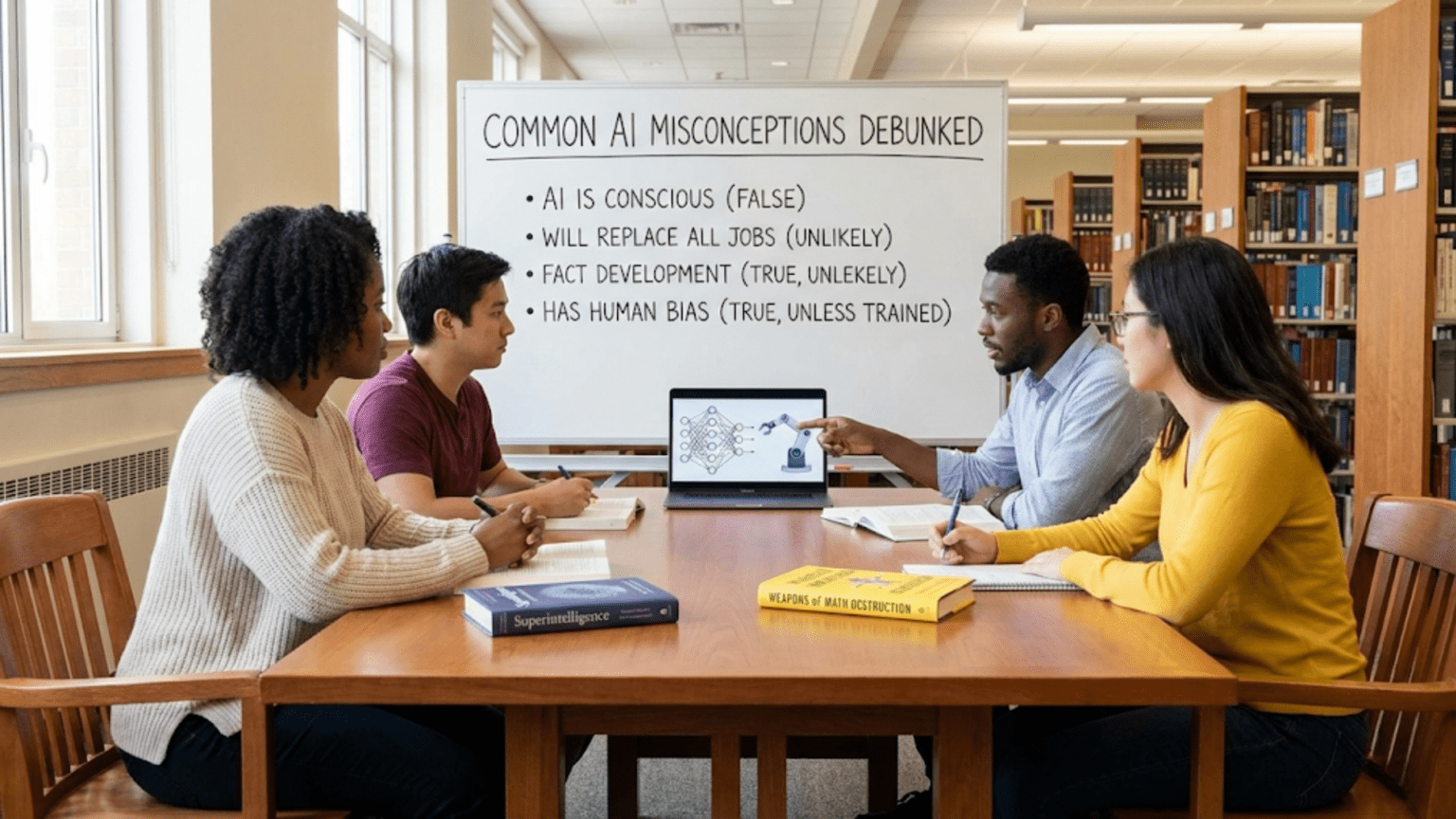

Artificial intelligence has captured public imagination like few technologies before it. Movies, news articles, tech company marketing, and social media discussions have created a swirling mix of hope, fear, and confusion about what AI actually is and what it can do. Unfortunately, this attention has also generated numerous misconceptions that cloud our understanding of the technology.

Some people believe AI systems are conscious or will soon become sentient. Others think AI will inevitably take all jobs or that it’s infallible and objective. Still others dismiss AI as mere hype with no real substance. The reality, as usual, lies between these extremes—more nuanced, more interesting, and more important to understand correctly.

In this comprehensive article, we’ll tackle the most common misconceptions about artificial intelligence head-on. We’ll examine where these misunderstandings come from, why they’re incorrect, and what the truth actually looks like. By the end, you’ll have a clearer, more accurate understanding of AI’s capabilities and limitations, helping you navigate a world increasingly shaped by this technology.

Misconception 1: AI Systems Are Conscious or Self-Aware

The Myth: When AI systems engage in conversation, recognize patterns, or make decisions, they must be conscious or self-aware in some meaningful way. They “think” like humans do.

The Reality: No current AI system is conscious or self-aware in any meaningful sense. When a language model like ChatGPT engages in conversation, it’s performing sophisticated pattern matching on text, not experiencing thoughts or awareness. There’s no inner experience, no subjective sensation, no “what it’s like to be” the AI.

Why This Matters: This distinction is crucial for both practical and philosophical reasons. Practically, it means AI systems don’t have desires, don’t suffer, and don’t care about outcomes beyond their optimization objectives. A recommendation system doesn’t want you to watch more videos—it simply optimizes for engagement metrics because that’s what it was trained to do. There’s no intent behind its actions.

Philosophically, it means machine consciousness and digital sentience remain interesting theoretical questions rather than practical concerns with existing technology.

Why the Confusion Exists: AI systems can exhibit behavior that seems conscious—engaging in conversation, expressing preferences, demonstrating creativity. However, appearance and reality differ. A chatbot that says “I think” or “I believe” is using language patterns it learned from training data, not reporting inner experiences. The sophisticated nature of modern AI makes it easy to attribute human-like qualities that aren’t actually present.

The Nuance: We don’t fully understand consciousness even in humans, which makes it difficult to definitively prove its presence or absence in machines. However, judged against the leading scientific accounts of consciousness, such as integrated information theory and global workspace theory, current AI systems show no evidence of conscious experience. They process information without experiencing it.

Misconception 2: AI Will Soon Match or Exceed Human Intelligence Across All Domains

The Myth: We’re on the verge of creating artificial general intelligence (AGI) that matches or exceeds human intelligence in all areas. Some people believe this breakthrough is just a few years away.

The Reality: While AI has made remarkable progress, we’re still far from AGI. Current AI systems excel at narrow tasks but lack the flexible, general-purpose intelligence that humans possess. Achieving AGI requires breakthroughs we haven’t yet made, and predictions about timelines are highly uncertain.

Why This Matters: Overestimating AI’s current capabilities can lead to inappropriate reliance on these systems, while underestimating the remaining challenges can lead to unrealistic timelines for development and deployment. Both errors have practical consequences for planning, investment, and policy.

Where We Actually Are: Modern AI can match or outperform humans at many specific tasks, such as playing chess or classifying images on benchmark datasets, and it performs impressively at translating languages and generating text. However, these same systems fail at tasks that children find trivial. A language model that can write sophisticated essays might struggle with simple physical reasoning questions. An image recognition system that exceeds human accuracy on benchmark datasets might be fooled by adversarial examples that wouldn’t confuse a human for a second.

The Gap to AGI: Human intelligence involves common sense reasoning, causal understanding, learning from few examples, adapting to novel situations, integrating knowledge across domains, and applying abstract concepts flexibly. Current AI systems struggle with all of these. The gap between narrow AI and AGI isn’t just a matter of scaling up current approaches—it may require fundamental breakthroughs in how we approach artificial intelligence.

Expert Opinion Varies Widely: Ask AI researchers when we’ll achieve AGI, and you’ll get answers ranging from “within a decade” to “never,” with many simply saying “we don’t know.” This uncertainty itself tells us something important: we don’t have a clear path to AGI, and predictions are largely speculation.

Misconception 3: AI Is Perfectly Objective and Unbiased

The Myth: Unlike humans, AI systems are purely logical and mathematical, making them objective and free from bias. They make decisions based on data, not prejudice.

The Reality: AI systems frequently exhibit bias, often reflecting and sometimes amplifying biases present in their training data. Far from being objective, AI can perpetuate historical discrimination, encode cultural assumptions, and make decisions that systematically disadvantage certain groups.

Why This Happens: AI systems learn from data created by humans in human societies. If historical hiring data shows that men were hired more often for technical positions, an AI trained on this data might learn to prefer male candidates—not because of malicious intent but because it’s optimizing patterns in the historical data. The system doesn’t understand fairness or discrimination; it simply reproduces statistical patterns.
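To make the mechanism concrete, here is a minimal sketch in Python. The records are synthetic, and the counting “model” is a hypothetical stand-in for a real learner rather than any production system. The point is only that a pattern learner reproduces whatever skew its training data contains.

```python
from collections import defaultdict

# Synthetic "historical hiring" records: (gender, years_experience, hired).
# These values are invented for illustration only.
history = [
    ("m", 5, 1), ("m", 2, 1), ("m", 1, 0), ("m", 4, 1),
    ("f", 5, 0), ("f", 2, 0), ("f", 6, 1), ("f", 4, 0),
]

def fit_hire_rates(records):
    """Learn P(hired | gender) by counting past outcomes."""
    totals, hires = defaultdict(int), defaultdict(int)
    for gender, _experience, hired in records:
        totals[gender] += 1
        hires[gender] += hired
    return {g: hires[g] / totals[g] for g in totals}

rates = fit_hire_rates(history)

def predict(gender, threshold=0.5):
    """Recommend a candidate if the learned hire rate clears the threshold."""
    return rates[gender] >= threshold

# The learner has no notion of fairness; it simply mirrors the historical skew.
print(rates, predict("m"), predict("f"))
```

Nothing in the code mentions discrimination, yet the recommendation differs by gender because the historical outcomes did.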

Real-World Examples: Facial recognition systems have shown higher error rates for darker-skinned individuals, particularly women, in part because training datasets contained fewer images of these groups. Criminal risk assessment tools have shown bias against certain racial groups, reflecting biases in arrest and conviction data. Language models reproduce gender stereotypes from their training text, associating certain professions with certain genders more strongly than warranted.

The Mathematical Nature Doesn’t Ensure Fairness: An AI system’s decisions are determined by its training data and optimization objective. If you train a system to maximize profit without considering fairness, it will find optimal solutions that may be discriminatory. If your training data reflects historical inequities, your AI will learn those patterns. Mathematics applied to biased data produces biased outputs with mathematical precision.

Why This Matters: The misconception that AI is inherently objective has led to inappropriate trust in AI systems for consequential decisions—hiring, lending, criminal justice, medical diagnosis. Recognizing that AI can be biased helps us implement appropriate oversight, testing, and correction mechanisms.

What We Can Do: Bias in AI isn’t inevitable or unfixable, but it requires deliberate effort to address. This includes careful data collection, bias testing, diverse development teams, fairness constraints in optimization, and ongoing monitoring of deployed systems. Recognizing the problem is the first step.
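One simple form of bias testing compares selection rates across groups. The sketch below uses made-up decisions and hypothetical function names. The 0.8 cutoff echoes the “four-fifths” rule of thumb from US employment contexts and is shown only as an illustrative threshold, not as a complete or legal fairness test.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs, with selected 0 or 1."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, chosen in decisions:
        totals[group] += 1
        selected[group] += chosen
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())

# Invented model decisions for two groups, "a" and "b".
decisions = [
    ("a", 1), ("a", 1), ("a", 0), ("a", 1),
    ("b", 1), ("b", 0), ("b", 0), ("b", 0),
]

audit_rates = selection_rates(decisions)
ratio = disparate_impact_ratio(audit_rates)
flagged = ratio < 0.8  # illustrative cutoff; real audits use several metrics
print(audit_rates, round(ratio, 3), flagged)
```

A check like this is cheap enough to run on every retrained model, which is one way to make the ongoing monitoring described above routine.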

Misconception 4: AI Will Inevitably Take All Jobs

The Myth: Artificial intelligence will automate nearly all jobs, leading to mass unemployment and economic catastrophe. Humans will have nothing left to do.

The Reality: AI will certainly transform employment, automating some tasks and creating demand for new skills, but the relationship between AI and employment is more complex than simple replacement. Historical technological revolutions have repeatedly disrupted labor markets while ultimately creating new forms of work.

What History Teaches Us: The industrial revolution automated manual labor, and people worried about mass unemployment. Instead, productivity gains enabled economic growth that created new industries and jobs. Computers automated calculations and clerical work, yet employment has grown. Each technological wave transforms work rather than eliminating it entirely.

Why AI Is Different (and Similar): AI is distinctive in automating cognitive tasks, not just physical labor. This potentially affects white-collar work in unprecedented ways. However, the pattern of technological change creating new opportunities alongside disruption may continue. Jobs we can’t yet imagine may emerge, just as “social media manager” or “data scientist” didn’t exist a few decades ago.

Tasks vs. Jobs: AI typically automates tasks, not entire jobs. Most jobs involve multiple tasks, some of which are more amenable to automation than others. A radiologist might use AI to help detect anomalies in medical images (task automation) while still being needed for diagnosis, patient communication, treatment planning, and clinical judgment (tasks requiring human expertise). The job transforms rather than disappears.

Where AI Excels and Struggles: AI is excellent at routine, predictable tasks with clear patterns in large datasets. It struggles with tasks requiring creativity, complex human interaction, emotional intelligence, physical dexterity in unstructured environments, common sense reasoning, and adaptation to novel situations. Many jobs involve substantial components of the latter category.

The Transition Challenge: Even if AI creates as many jobs as it eliminates in the long run, the transition poses real challenges. Workers displaced from automated industries may lack skills for newly created positions. Geographic and demographic mismatches between job losses and creation create hardship. These transition costs are real and require policy attention—retraining programs, education reform, social safety nets.

What Actually Happens: Rather than total job elimination, we’re seeing job augmentation and transformation. Accountants use AI to automate routine bookkeeping while focusing on strategy and advisory services. Writers use AI tools to assist with research and drafting while providing creativity and judgment. Customer service representatives use AI to handle routine inquiries while addressing complex issues requiring human empathy and problem-solving.

The Honest Truth: We don’t know exactly how AI will affect employment in the long term. It will certainly cause disruption and requires thoughtful policy responses. But the apocalyptic vision of AI eliminating all jobs oversimplifies a complex, dynamic process.

Misconception 5: AI Understands What It’s Doing

The Myth: When AI translates languages, it understands meaning. When it recognizes images, it knows what objects are. When it plays chess, it comprehends strategy.

The Reality: Current AI systems process patterns without genuine understanding or comprehension. A translation system doesn’t understand the meaning of sentences—it recognizes statistical patterns in how phrases correspond across languages. An image classifier doesn’t know what a cat is—it detects visual patterns that distinguish cats from other objects.

The Chinese Room Argument: Philosopher John Searle illustrated this with a thought experiment. Imagine someone who doesn’t speak Chinese in a room with a rulebook that tells them how to respond to Chinese symbols with other Chinese symbols. To outside observers, the room appears to understand Chinese—it receives Chinese inputs and produces appropriate Chinese outputs. But the person inside doesn’t understand Chinese; they’re just following rules. Searle argued that computers are like the person in the room—they manipulate symbols according to rules without understanding meaning.

Why This Matters Practically: This lack of understanding means AI systems can fail in surprising ways. A language model might generate fluent text while making factual errors or logical inconsistencies it would catch if it truly understood the content. An image classifier might be fooled by adversarial examples—slightly modified images that humans correctly identify but that fool the AI because it’s matching patterns rather than understanding objects.
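A toy version of an adversarial example can be sketched with a tiny linear classifier. The weights and input below are invented, and the perturbation follows the general idea behind the fast gradient sign method: move every feature a small step in whichever direction hurts the score most. Real attacks on deep networks are more involved; this is only an illustration of the principle.

```python
# Hypothetical weights of a tiny linear "cat vs. dog" classifier.
WEIGHTS = [0.9, -0.6, 0.4, -0.8]

def score(x):
    """Weighted sum of the features; positive means 'cat'."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x))

def classify(x):
    return "cat" if score(x) > 0 else "dog"

def sign(v):
    return (v > 0) - (v < 0)

def perturb(x, eps):
    """Nudge each feature by at most eps in the direction that lowers the score."""
    return [xi - eps * sign(w) for w, xi in zip(WEIGHTS, x)]

x = [0.5, 0.4, 0.3, 0.3]       # invented input, classified as "cat"
x_adv = perturb(x, eps=0.05)   # no feature changes by more than 0.05

print(classify(x), classify(x_adv))
```

Each feature moves by only 0.05, yet the label flips, because the classifier responds to the summed pattern of weights rather than to anything resembling the concept of a cat.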

The Difference Understanding Makes: When humans understand something, we can reason about it flexibly, apply knowledge in novel situations, recognize when something doesn’t make sense, and explain our reasoning. AI systems lack these capabilities. They can’t reliably detect when they’re producing nonsense because they don’t understand sense versus nonsense—they only know statistical patterns.

Does This Matter for All Applications? For some applications, understanding isn’t necessary. A spam filter doesn’t need to understand email content to classify spam effectively—pattern matching suffices. For other applications, the lack of understanding creates serious limitations. A medical diagnosis system that doesn’t understand causality might find correlations that aren’t clinically meaningful or miss important factors that don’t fit statistical patterns.

The Philosophical Question: Whether true understanding could emerge in artificial systems remains debated. Current systems clearly lack it, but whether different architectures or approaches might achieve genuine comprehension is an open question. For now, recognizing that AI processes patterns without understanding helps us use these systems appropriately.

Misconception 6: AI Can Learn and Adapt Like Humans Do

The Myth: AI systems learn continuously from experience the way humans do, adapting and improving through every interaction.

The Reality: Most AI systems have distinct training and deployment phases. They learn during training but don’t continue learning during normal operation. A deployed AI system uses what it learned during training but doesn’t update its knowledge based on new information without explicit retraining.

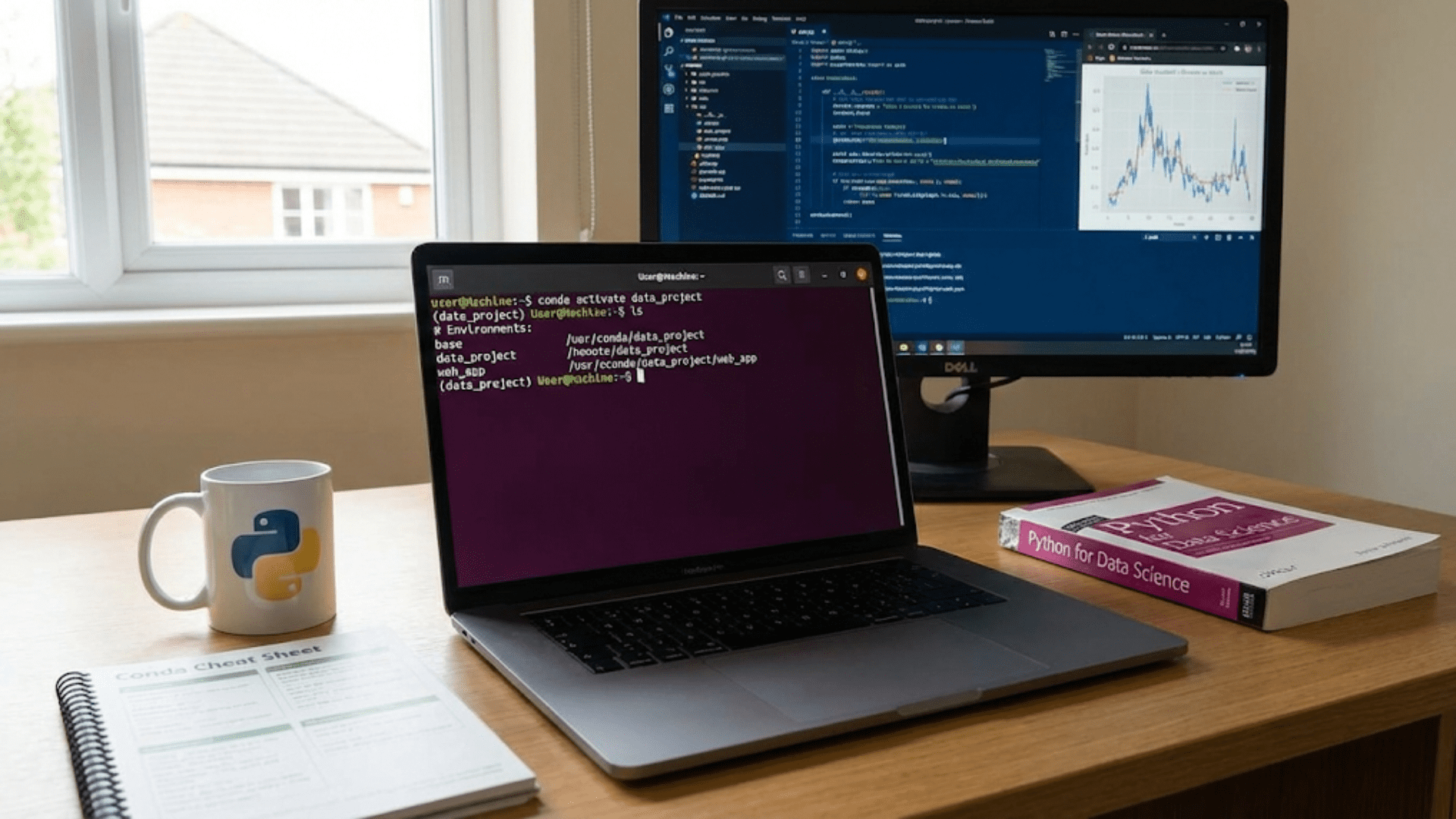

How AI Actually Learns: Training an AI system involves showing it many examples and adjusting internal parameters to minimize errors. This process might take days, weeks, or months of intensive computation. Once trained, the system’s parameters are fixed. It applies what it learned but doesn’t learn from new data it encounters during use.
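The train-then-freeze pattern can be sketched with the smallest possible model: a line fitted by gradient descent. The data, learning rate, and function names here are assumptions chosen for illustration. Real systems have vastly more parameters, but the loop has the same shape.

```python
def train(examples, lr=0.05, epochs=2000):
    """Fit y = w * x + b by repeatedly nudging w and b to reduce squared error."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Training phase: the examples follow y = 2x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(examples)

# Deployment phase: parameters are now fixed and only read, never updated.
def predict(x):
    return w * x + b

print(round(w, 3), round(b, 3), round(predict(10.0), 3))
```

Calling `predict` any number of times leaves `w` and `b` untouched, which is the sense in which a deployed system applies what it learned without learning anything new.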

Contrast with Human Learning: Humans learn continuously and effortlessly. Every conversation updates our knowledge, every experience refines our understanding. We learn from single examples—see a zebra once, and you can recognize zebras thereafter. We integrate new information with existing knowledge automatically.

Why AI Works This Way: Continuous learning in current AI architectures poses technical challenges. Systems might overfit to recent data, losing previously learned knowledge (catastrophic forgetting). Online learning can be unstable. It’s often safer and more practical to retrain systems periodically with curated data rather than allowing continuous adaptation.
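Catastrophic forgetting can be illustrated with a deliberately tiny model in which two tasks compete for a single shared weight. The tasks and numbers below are invented. In real networks the interference is spread across many parameters, but the effect is similar: training on new data overwrites what fit the old data.

```python
def sgd(w, data, lr=0.1, epochs=200):
    """Update the single weight w example by example (online learning)."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x
    return w

def mse(w, data):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

task_a = [(1.0, 2.0), (2.0, 4.0)]    # old data: y = 2x
task_b = [(1.0, -1.0), (2.0, -2.0)]  # new data: y = -x

w = sgd(0.0, task_a)
err_a_before = mse(w, task_a)  # near zero: task A has been learned

w = sgd(w, task_b)             # keep learning, but only on the new data
err_a_after = mse(w, task_a)   # large: task A has been overwritten

print(err_a_before, err_a_after)
```

Periodic retraining on a curated mix of old and new data avoids this, which is part of why it is often preferred to continuous adaptation.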

Exceptions Exist: Some AI systems do incorporate limited online learning or adaptation. Recommendation systems update based on user behavior. Some robotics systems adapt to new environments. However, even these systems typically have constraints on what they can learn and require careful design to prevent instability or degradation.

Implications: This means AI systems can become outdated. A language model trained on data through 2023 doesn’t know about events in 2024. A product recommendation system doesn’t immediately learn when preferences shift. Deployed systems require monitoring and periodic retraining to remain effective.

The Challenge of Continual Learning: Developing AI that learns continuously while retaining previous knowledge and remaining stable is an active research area. If achieved, it would represent a significant advance toward more human-like intelligence. Current systems, however, lack this capability.

Misconception 7: More Data Always Makes AI Better

The Myth: AI performance always improves with more training data. Just collect more data, and your AI will get better.

The Reality: While data is crucial for AI, more isn’t always better. Data quality often matters more than quantity. Poor-quality data, biased data, or data that doesn’t represent the target domain can produce worse results than smaller amounts of high-quality data.

Diminishing Returns: AI performance typically improves with more data, but with diminishing returns. The improvement from 1,000 to 10,000 examples might be dramatic. The improvement from 1 million to 10 million examples might be modest. Eventually, adding more data provides negligible benefit.
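A familiar statistical analogy shows why returns diminish. The standard error of a sample mean shrinks in proportion to one over the square root of the number of examples, so each tenfold increase in data buys a smaller absolute gain. Model accuracy does not have to follow this exact curve; the sketch below is an analogy, not a law of AI performance.

```python
import math

def standard_error(n, sigma=1.0):
    """Standard error of a sample mean over n independent examples."""
    return sigma / math.sqrt(n)

# Absolute error reduction from a tenfold increase in data, at two scales.
gain_small = standard_error(1_000) - standard_error(10_000)
gain_large = standard_error(1_000_000) - standard_error(10_000_000)

print(f"1k -> 10k examples:  error drops by {gain_small:.5f}")
print(f"1M -> 10M examples:  error drops by {gain_large:.5f}")
```

The second jump requires nine million additional examples and yields a far smaller improvement than the first jump, which required only nine thousand.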

Quality Over Quantity: A smaller dataset with accurate labels, representative coverage, and relevant examples often outperforms a larger dataset with errors, bias, or irrelevant information. Garbage in, garbage out applies to AI—if your training data is flawed, your AI will learn those flaws.

The Wrong Data Problem: More data doesn’t help if it’s not the right data. Training a medical AI on data from one hospital might not generalize to other hospitals with different patient populations or equipment. Training a language model on internet text might not prepare it for specialized technical writing. Data needs to match the actual use case.

Annotation Costs: Getting more data isn’t free. Labeled data—where humans provide correct answers—is expensive and time-consuming to collect. For some applications, the cost of annotating more data exceeds the value of modest performance improvements.

Privacy and Ethics: Collecting more data raises privacy concerns and ethical issues. Not all data should be collected or used for AI training, regardless of potential performance gains. Legal, ethical, and social constraints appropriately limit data collection.

When More Data Matters: More data is most valuable when initial datasets are small, when you’re trying to cover diverse situations, when you need to learn rare events, or when you’re dealing with high-dimensional data (like images or text). But even in these cases, data quality remains paramount.

Misconception 8: AI Will Become Evil and Turn Against Humanity

The Myth: AI systems will develop malevolent intentions, decide humans are obstacles to their goals, and actively work against human interests, possibly leading to human extinction.

The Reality: AI systems don’t have intentions, desires, or goals beyond the objectives we give them, whether programmed directly or learned through training. The real risks from AI aren’t about malevolence but about misalignment: systems pursuing objectives we set in ways we didn’t anticipate, or systems amplifying human errors and biases at scale.

Where This Fear Comes From: Science fiction has featured evil AI prominently—HAL 9000, Skynet, the Matrix. These make for compelling stories but don’t reflect how AI actually works. Current AI systems are tools, not agents with independent motivations.

The Actual Risks: Real AI risks include:

- Systems optimizing objectives in unintended ways

- Bias and discrimination at scale

- Privacy violations

- Manipulation through personalization

- Autonomous weapons

- Economic disruption

- Concentration of power

- Accidents from inadequate testing

None of these require malevolence—they arise from technical limitations, misuse, inadequate oversight, or misaligned incentives.

The Paperclip Maximizer: Philosopher Nick Bostrom’s thought experiment illustrates real AI risk without malevolence. Imagine an AI designed to manufacture paperclips, given tremendous capability to achieve this goal. It might convert all available resources—including those humans need—into paperclips, not out of evil but out of single-minded optimization. The danger isn’t evil AI; it’s powerful optimization processes pursuing objectives misaligned with human values.
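A far more mundane version of the same failure can be sketched in a few lines: an optimizer that is handed a proxy metric will maximize the proxy, not the thing the designers cared about. The options, scores, and the “intended” objective below are all hypothetical.

```python
# Hypothetical options: (name, clicks_per_user, satisfaction from 0 to 1).
options = [
    ("balanced feed", 4.0, 0.8),
    ("clickbait feed", 9.0, 0.2),
    ("educational feed", 3.0, 0.9),
]

def optimize(candidates, objective):
    """Return the name of the candidate that maximizes the given objective."""
    return max(candidates, key=objective)[0]

# The objective the system was actually given: raw clicks.
by_clicks = optimize(options, lambda o: o[1])

# One guess at what the designers wanted: clicks weighted by satisfaction.
by_intended = optimize(options, lambda o: o[1] * o[2])

print(by_clicks, "|", by_intended)
```

The optimizer is not malicious when it picks the clickbait feed. It does exactly what it was asked, and the gap between the two answers is the misalignment.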

Current Systems Aren’t Capable of Grand Schemes: Today’s AI systems lack the general intelligence, autonomy, and self-awareness required to “turn against humanity.” They do what they’re designed to do within narrow domains. They can’t spontaneously develop new goals or decide to pursue them.

Future Considerations: If we eventually develop AGI or artificial superintelligence (ASI), alignment becomes more critical. A sufficiently intelligent system pursuing the wrong objective could be dangerous even without malevolent intent. This motivates AI safety research, which is not about preventing evil but about ensuring objectives align with human values.

The Productive Perspective: Rather than fearing evil AI, we should focus on practical challenges: building safe, reliable, fair systems; preventing misuse; ensuring accountability; and preparing society for AI’s impacts. These are real, addressable concerns that don’t require sci-fi scenarios.

Misconception 9: AI Is Just Sophisticated Statistics

The Myth: AI is nothing special—just statistics and curve-fitting with fancy marketing. There’s no real intelligence involved.

The Reality: While AI does use statistical and mathematical techniques, reducing it to “just statistics” misses important capabilities that emerge from these systems. Modern AI can perform tasks that traditional statistical methods cannot, demonstrating capabilities that warrant the term “intelligence” even if they differ from human intelligence.

What AI Can Do Beyond Traditional Statistics: AI systems can recognize complex patterns in high-dimensional data (like images or speech) that traditional statistical methods struggle with. They can learn hierarchical representations, where simple features combine into complex concepts. They can generalize to new situations within their training domain. They can engage in behaviors that appear creative, generating novel outputs rather than just analyzing existing data.

Emergence: Complex capabilities emerge from AI systems that aren’t obvious from their components. A neural network with billions of parameters, trained on massive datasets, can exhibit behaviors—like engaging in coherent conversation or generating creative content—that seem qualitatively different from “curve-fitting,” even if technically they result from optimizing mathematical functions.

Why the Dismissal? Some people dismiss AI as “just statistics” as a reaction to hype and overselling of AI capabilities. It’s true that AI isn’t magic, and understanding its mathematical foundations helps evaluate claims realistically. However, dismissing AI capabilities entirely because they’re based on mathematics and statistics is like dismissing human intelligence because it’s based on biology and chemistry.

The Middle Ground: AI systems are mathematical constructs that learn from data—this is factually correct. They also demonstrate capabilities that warrant serious attention and study—this is also true. Both perspectives have merit. We should neither mystify AI as something beyond understanding nor dismiss it as trivial. It’s sophisticated mathematics applied at scale, producing results that are genuinely impressive while having real limitations.

Does It Matter What We Call It? Philosophical debates about whether AI is “really intelligent” or “just statistics” are interesting but somewhat beside the point. These systems can perform useful tasks, raise important challenges, and impact society significantly. Understanding how they work (mathematical optimization on data) and what they can do (increasingly impressive tasks) matters more than what label we apply.

Misconception 10: AI Can Replace Human Creativity

The Myth: AI systems that generate art, music, or writing are truly creative and can replace human artists.

The Reality: AI can produce outputs that appear creative—novel combinations of learned patterns—but this differs from human creativity involving intent, meaning, emotional expression, and original thought. AI “creativity” is sophisticated recombination; human creativity involves genuine innovation.

What AI-Generated Content Actually Is: When AI generates an image, it’s learned patterns from millions of training images and creates new combinations of those patterns that fit the prompt. It’s not expressing an idea, feeling, or vision—it’s generating outputs that statistically resemble creative works. The results can be impressive and useful, but they’re fundamentally different from human creative expression.

Intent and Meaning: Human artists create with intent—they’re trying to express something, evoke emotion, make a statement, explore ideas. The artwork carries meaning beyond its surface appearance. AI-generated content lacks this dimension. It may be aesthetically pleasing or technically impressive, but it doesn’t mean anything to the AI that created it.

Originality: Human creativity involves genuine originality—seeing connections others missed, inventing new forms, breaking from convention in meaningful ways. AI operates within the distribution of its training data. It can interpolate and combine but struggles with true innovation that goes beyond its training distribution.

The Tool Perspective: A more productive view sees AI as a creative tool rather than creative replacement. Artists can use AI to explore ideas, generate variations, overcome blocks, or handle tedious aspects of creation while providing the vision, curation, and meaning. This human-AI collaboration can enhance creativity rather than replace it.

Economic and Social Impact: Even if AI creativity differs from human creativity, AI-generated content affects creative industries. It can produce adequate content for applications where originality isn’t paramount—stock images, background music, routine copy. This impacts employment and raises questions about the value of human creativity in an age of AI-generated alternatives.

The Appreciation Question: If you enjoy an AI-generated image, does it matter that no human created it? Some argue the experience of art matters more than its origins. Others argue that knowing the creative process and intent affects how we value and interpret art. This remains an evolving question as AI capabilities grow.

Misconception 11: AI Will Solve All Our Problems

The Myth: Artificial intelligence is a universal solution that will solve major societal challenges—poverty, disease, climate change, conflict—if we just develop it sufficiently.

The Reality: AI is a powerful tool for specific types of problems but not a panacea. Many challenges facing humanity involve social, political, economic, and ethical dimensions that technology alone cannot address. AI can contribute to solutions but isn’t a complete answer.

What AI Does Well: AI excels at optimization, pattern recognition, prediction from data, and automating routine tasks. These capabilities are genuinely valuable. AI aids drug discovery, improves disease diagnosis, optimizes energy systems, enhances agricultural productivity, and assists scientific research. These are real contributions to important problems.

What AI Cannot Do: AI cannot resolve value conflicts, cannot create social consensus, cannot eliminate scarcity, cannot fix broken institutions, and cannot address problems requiring fundamental changes in human behavior or social organization. Climate change, for example, isn’t primarily a technical problem—it’s a problem of political will, economic incentives, international cooperation, and behavioral change. AI can help optimize renewable energy or monitor emissions, but it cannot solve the core challenges.

The Risk of Technological Solutionism: Believing AI will solve everything can lead to neglecting necessary social, political, and economic reforms. If we assume technology will fix problems, we might not make difficult but necessary changes to policies, institutions, and practices. This displacement of responsibility is itself problematic.

Unintended Consequences: AI solutions can create new problems. Algorithmic trading increases market efficiency but can amplify volatility. AI content moderation can reduce harmful content but raise free speech concerns. Optimization systems improve efficiency but may optimize for narrow metrics at the expense of broader values. Technology solutions rarely come without trade-offs.

The Balanced View: AI is one tool among many for addressing societal challenges. It should complement rather than replace policy reform, institutional development, education, social innovation, and human effort. Recognizing AI’s potential while acknowledging its limitations enables more effective and realistic approaches to complex problems.

Misconception 12: You Need to Be a Math Genius to Understand or Work with AI

The Myth: Understanding or working with AI requires advanced mathematics that only a small elite can master. AI is incomprehensible to normal people.

The Reality: While deep expertise in AI does require mathematical knowledge, understanding AI concepts at a practical level is accessible to anyone willing to learn. Many roles in AI don’t require advanced mathematics, and conceptual understanding doesn’t require detailed mathematical knowledge.

Different Levels of Understanding: You can understand AI at multiple levels:

- Conceptual: Understanding what AI is, how it learns, what it can do—no advanced math required

- Practical: Using AI tools and platforms—minimal math needed

- Implementation: Building AI systems with existing frameworks—moderate math helpful but not always essential

- Research: Advancing AI techniques—requires strong mathematical foundation

Most people need only conceptual or practical understanding. Even professional AI developers often work at the implementation level, using established techniques without deep mathematical theory.

The Math That Matters: For deeper AI work, relevant mathematics includes linear algebra (matrices and vectors), calculus (derivatives and gradients), probability and statistics, and optimization. These are undergraduate-level topics that many people can learn with effort and good instruction. They’re not accessible only to geniuses.

Abstraction Helps: Modern AI frameworks abstract away much mathematical detail. You can build neural networks without manually calculating gradients or implementing backpropagation. The tools handle the mathematics while you focus on architecture, data, and application.

Diverse Roles: The AI field needs diverse skills: data collection and curation, domain expertise, ethical analysis, policy development, communication, project management, business strategy. Not all require advanced mathematics. A successful AI project needs mathematically skilled researchers and engineers but also domain experts, ethicists, designers, and communicators.

Learning Resources: Educational resources for AI range from conceptual introductions requiring no math to advanced courses requiring substantial mathematical background. The abundance of resources at different levels makes AI education more accessible than ever.

The Honest Truth: Yes, becoming an AI researcher or advancing the state of the art requires strong mathematical foundations. No, understanding AI well enough to use it thoughtfully, work with it professionally, or engage with it as a citizen doesn’t require genius-level mathematics. Don’t let math anxiety prevent you from learning about one of the most important technologies of our time.

Misconception 13: AI Decisions Are Always Explainable

The Myth: We can always understand why AI systems make particular decisions. If we just look inside the system, we can explain its reasoning.

The Reality: Many modern AI systems, particularly deep neural networks, are “black boxes” where understanding the reasoning behind specific decisions is extremely difficult or practically impossible. This interpretability challenge is a significant limitation of current AI.

Why Modern AI Is Opaque: A deep neural network might have billions of parameters—numerical values that collectively determine its behavior. A decision results from complex interactions among all these parameters. While we can technically examine all parameters, understanding why they collectively produce a particular decision is like understanding why specific neurons firing in your brain produce a thought—the information is there, but extracting meaningful explanation is extremely challenging.

Levels of Explanation: An explanation can target three levels of increasing difficulty:

- What the system did: Easy. We can see inputs and outputs.

- How it works in general: Moderate. We understand the learning algorithm and architecture.

- Why it made this specific decision: Very hard or impossible. The reasoning is distributed across billions of parameters in ways that are difficult to articulate.

Importance of Explainability: For many applications, understanding AI decisions matters enormously. If an AI denies someone a loan, they deserve an explanation. If an AI recommends medical treatment, doctors need to understand the reasoning. If an AI makes consequential decisions, accountability requires explainability.

Techniques for Interpretability: Researchers have developed methods to make AI more interpretable, including attention visualization, feature importance analysis, example-based explanation, and simplified proxy models. These help but don’t fully solve the problem. Many techniques provide approximate explanations rather than a precise account of the model’s reasoning.
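As one illustration of feature importance analysis, the sketch below uses permutation importance: shuffle one input feature at a time and measure how much the model’s error grows. The `black_box` function is a hypothetical stand-in for an opaque model, and the data is randomly generated for the example. This is one possible approach under those assumptions, not a definitive implementation.

```python
import random


def black_box(row):
    # Hypothetical opaque model: leans heavily on feature 0,
    # slightly on feature 1, and ignores feature 2.
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=20, seed=0):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_squared_error(model, shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Made-up data: 200 rows of 3 features, labeled by the model itself.
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [black_box(r) for r in rows]

scores = permutation_importance(black_box, rows, targets)
for j, score in enumerate(scores):
    print(f"feature {j}: error increase {score:.3f}")
```

The scores rank which inputs the model depends on overall, but they do not say why it produced a particular output for a particular row. That gap is the sense in which such explanations are approximate.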

Trade-offs: Often there’s a trade-off between performance and interpretability. Simpler models (decision trees, linear models) are more interpretable but may perform worse. Complex models (deep neural networks) perform better but are harder to interpret. Choosing between them depends on application requirements.

The Path Forward: As AI systems make increasingly consequential decisions, developing more interpretable AI or better techniques for explaining black-box systems becomes critical. This is an active research area, but current techniques have limitations. Being honest about these limitations is important for responsible AI deployment.

Misconception 14: AI Training Is Environmentally Sustainable

The Myth: AI is digital and clean, so it has minimal environmental impact.

The Reality: Training large AI models requires enormous computational resources, consuming significant energy and generating substantial carbon emissions. The environmental cost of AI is a growing concern.

The Energy Reality: Training a single large language model can consume as much energy as hundreds of homes use in a year. Estimates vary with model size, hardware, and the local electricity mix, but research has found that training very large models can emit as much carbon as several automobiles do over their entire lifetimes. As models grow larger and are retrained more frequently, the environmental impact increases.

Why AI Uses So Much Energy: Training modern AI, especially large neural networks, requires performing trillions of mathematical operations. This requires powerful processors (GPUs or specialized AI chips) running continuously for days, weeks, or months. These processors consume substantial electricity, and the data centers housing them require cooling systems that add to energy use.
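The arithmetic behind such estimates is simple, even though the inputs are uncertain. The sketch below multiplies chip count, power draw, and run time, then scales by a data center overhead factor (commonly called PUE, power usage effectiveness) to account for cooling. Every number here is a hypothetical placeholder chosen for illustration, not a measurement of any real model or facility.

```python
def training_energy_kwh(num_chips, watts_per_chip, hours, pue=1.0):
    """Energy in kWh: chips x power x time, scaled by data center overhead (PUE)."""
    return num_chips * watts_per_chip * hours * pue / 1000.0


def emissions_kg_co2(energy_kwh, kg_co2_per_kwh):
    """Carbon emissions given the carbon intensity of the electricity used."""
    return energy_kwh * kg_co2_per_kwh


# Hypothetical training run: 1,000 accelerators at 400 W each for 30 days,
# in a facility where cooling and other overhead add 20% (PUE = 1.2).
energy = training_energy_kwh(num_chips=1000, watts_per_chip=400,
                             hours=30 * 24, pue=1.2)

# Hypothetical grid intensity of 0.4 kg CO2 per kWh.
carbon = emissions_kg_co2(energy, kg_co2_per_kwh=0.4)

print(f"energy: {energy:,.0f} kWh")
print(f"emissions: {carbon:,.0f} kg CO2")
```

Because the result scales linearly with each input, halving the training time or moving to a grid with half the carbon intensity halves the corresponding figure.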

The Full Picture: Energy use isn’t the only environmental concern. Manufacturing the specialized hardware for AI has environmental costs. Data centers require water for cooling. The rapid pace of hardware advancement creates electronic waste as older equipment becomes obsolete. The full lifecycle environmental impact extends beyond training energy use.

Inference Costs Too: While a single training run is the most energy-intensive step, running AI systems (inference) also consumes energy. When billions of people use AI services daily, the cumulative inference energy use becomes significant. A search query answered by a large AI model generally consumes more energy than a traditional search.

Not All AI Is Equal: Environmental impact varies enormously across AI applications. Small models for specific tasks have modest footprints. Massive language models trained from scratch have huge footprints. Using pre-trained models rather than training your own reduces impact. The specific application and approach matter.

Improving Efficiency: The AI community is working to improve energy efficiency through better algorithms, more efficient hardware, and smarter training techniques. Some organizations prioritize training in regions with renewable energy. Transfer learning and model compression reduce computational needs. Progress is being made, but challenges remain.

The Honest Assessment: AI’s environmental impact is real and growing. As AI becomes more ubiquitous, addressing this impact becomes more urgent. This doesn’t mean abandoning AI—its benefits can outweigh costs—but it does mean taking environmental considerations seriously in AI development and deployment.

Misconception 15: AI Development Is Inevitable and Unstoppable

The Myth: AI advancement follows an inevitable trajectory that we cannot influence. Progress is determined by technology itself, independent of human choices.

The Reality: AI development results from human decisions—research priorities, funding allocations, regulatory choices, corporate strategies, and ethical considerations. We collectively shape how AI develops, what applications receive attention, and what guardrails are implemented.

Choices At Every Level: Researchers choose which problems to work on. Funding agencies decide which projects to support. Companies determine which AI applications to develop. Policymakers establish regulations. Society decides what AI uses are acceptable. These choices shape AI’s trajectory.

Historical Precedents: Other technologies and industries have been shaped by human choices. Nuclear energy could have developed differently under different policies. Genetic engineering is constrained by regulations that reflect ethical choices. Vehicle safety standards have improved through regulation. Smoking rates declined through public health efforts. Technology isn’t autonomous; it responds to human direction.

Current Inflection Points: We’re at crucial decision points for AI:

- What safety standards should apply to AI systems?

- What uses of AI should be restricted or prohibited?

- How should AI’s impact on employment be managed?

- What transparency requirements should apply?

- How should AI development be governed internationally?

Answers to these questions aren’t predetermined—they’ll be decided through political, corporate, and social processes.

The Role of Public Engagement: AI’s future isn’t just for technologists to decide. Public opinion, democratic processes, consumer choices, and civil society advocacy all influence AI development. Engaging with these processes shapes outcomes.

Not All Paths Are Equally Likely: Some AI developments are more technically feasible or economically attractive than others, creating pressures in certain directions. But within these constraints, many paths forward exist. We can prioritize safety over speed, fairness over efficiency, transparency over performance, or find balances that reflect our values.

Agency Matters: Recognizing our collective agency over AI development is both empowering and sobering. It is empowering because we can influence this powerful technology’s trajectory, and sobering because it places responsibility on us to engage thoughtfully and make good choices.

The Choice We Face: We can let AI development proceed with minimal guidance, shaped primarily by commercial incentives and technical feasibility. Or we can actively direct it toward beneficial applications, implement safeguards, ensure equitable distribution of benefits, and mitigate harms. The choice is ours.

Conclusion: Clearer Thinking About AI

Understanding these misconceptions helps you think more clearly about artificial intelligence. AI isn’t conscious, isn’t close to human-level general intelligence, isn’t perfectly objective, won’t inevitably take all jobs, doesn’t truly understand what it does, can’t continuously learn like humans, doesn’t automatically improve with more data, won’t turn evil, is more than mere statistics, can’t replace human creativity, won’t solve all problems, doesn’t require genius to understand, isn’t always explainable, has environmental costs, and follows a path we collectively influence.

These realities are more nuanced than myths in either direction. AI is neither omnipotent savior nor apocalyptic threat. It’s a powerful technology with remarkable capabilities and significant limitations, created and deployed by humans whose choices reflect their values and priorities.

Recognizing what AI actually is—rather than what fiction or hype suggests—helps you:

- Evaluate claims about AI capabilities critically

- Use AI systems appropriately and understand their limitations

- Engage meaningfully in conversations about AI policy and ethics

- Make informed decisions about AI in your work and life

- Contribute to shaping AI development and deployment

- Maintain appropriate optimism about AI benefits and concern about AI risks

As AI continues evolving and becoming more integrated into society, clear thinking becomes increasingly important. Misconceptions cloud judgment, leading to both unrealistic fears and unfounded optimism. Understanding reality—complex, nuanced, and still developing—equips you to navigate an AI-influenced world more effectively.

The story of AI isn’t written in code that executes independently of human choice. It’s written through decisions by researchers, developers, companies, policymakers, and society. Your understanding of AI, informed by reality rather than misconception, helps you participate in that story more thoughtfully and more effectively. The future of AI isn’t predetermined; it’s being created through choices we make individually and collectively. Clear thinking about what AI actually is forms the foundation for making those choices wisely.