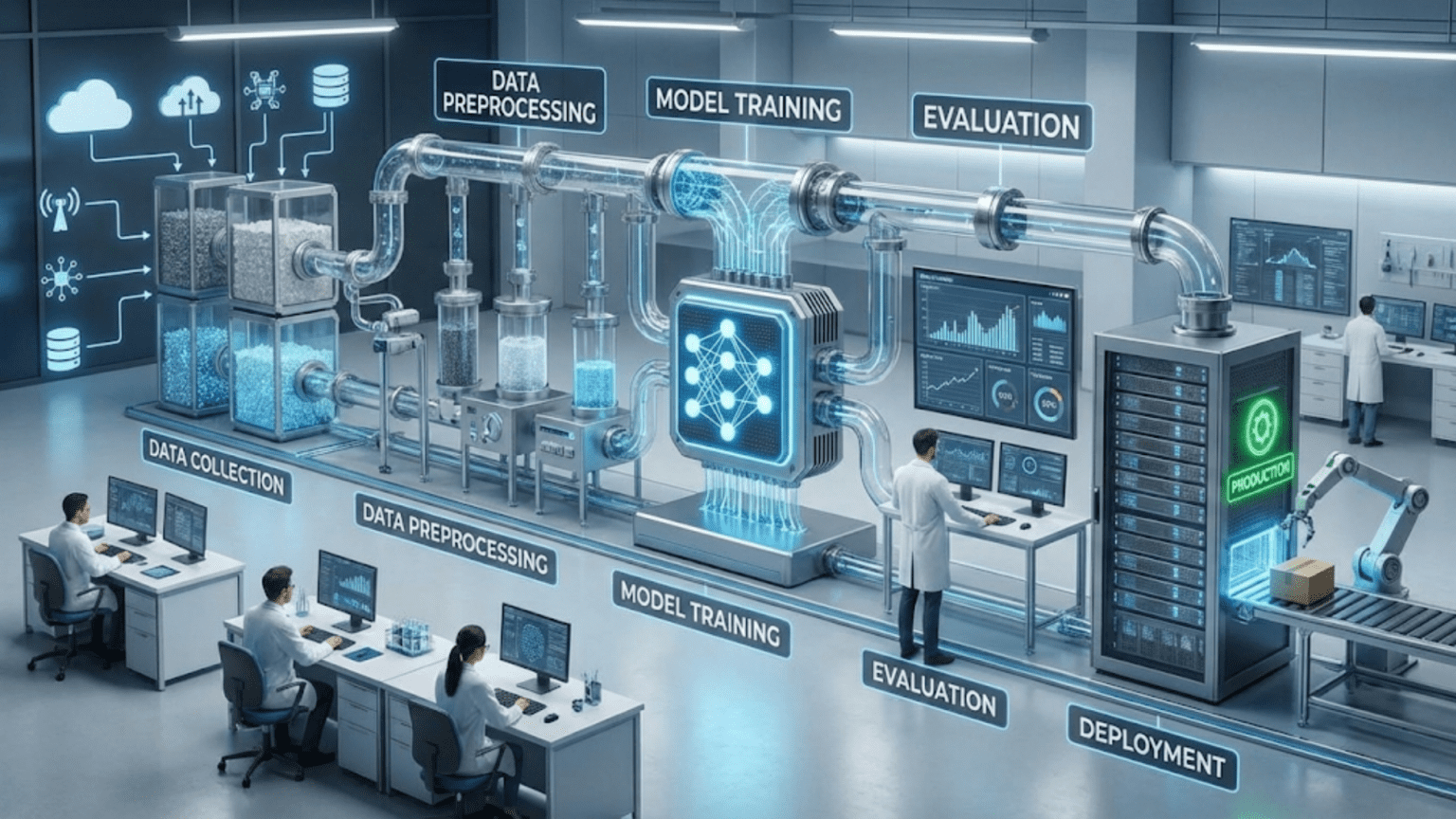

The machine learning pipeline is a systematic workflow that transforms raw data into a deployed model making real-world predictions. It consists of sequential stages: problem definition, data collection and preparation, exploratory data analysis, feature engineering, model selection and training, evaluation, optimization, and deployment with monitoring. Each stage builds upon the previous one to create production-ready machine learning systems.

Introduction: The Journey from Idea to Impact

Building a machine learning model isn’t about writing a few lines of code and calling it done. It’s a comprehensive journey involving multiple interconnected stages, each critical to success. Just as a manufacturing assembly line transforms raw materials into finished products through sequential operations, the machine learning pipeline transforms raw data into actionable predictions through systematic steps.

Understanding this pipeline is essential whether you’re a data scientist building models, a business leader evaluating machine learning projects, or a developer integrating ML into applications. The pipeline provides structure to what can otherwise feel like an overwhelming process, breaking down complex projects into manageable, logical stages.

Real-world machine learning projects often fail not because the algorithms lack sophistication, but because teams skip critical pipeline stages or execute them poorly. Data scientists often joke that 80% of their time goes to data preparation, not because they are inefficient, but because thorough data work is fundamental to success. A model trained on poorly prepared data will perform poorly in production, no matter how sophisticated it is.

This comprehensive guide walks through every stage of the machine learning pipeline, from initial problem definition through production deployment and monitoring. You’ll learn what happens at each stage, why it matters, common pitfalls to avoid, and best practices for success. We’ll use concrete examples throughout, following a fictional e-commerce company building a product recommendation system to illustrate concepts.

By understanding the complete pipeline, you’ll be equipped to plan projects realistically, allocate resources appropriately, and build machine learning systems that actually work in production—not just in Jupyter notebooks.

Stage 1: Problem Definition and Goal Setting

Every successful machine learning project begins with clearly defining what you’re trying to achieve. This might seem obvious, but poorly defined problems are among the most common reasons projects fail.

Identifying the Business Problem

Start by understanding the business need, not the technical solution. Ask questions like:

What specific business outcome do we want to improve? Instead of “we want to use machine learning,” say “we want to reduce customer churn by 15%” or “we want to decrease fraudulent transactions by 30%.”

What decisions will this model inform? Will it recommend products to users, approve or deny loan applications, route customer service calls, or schedule maintenance?

Who will use the model outputs? End customers, internal employees, other systems? This affects requirements for speed, interpretability, and integration.

What constraints exist? Budget limitations, timeline requirements, privacy regulations, computational resources, acceptable error rates?

Let’s use our running example: ShopSmart, an e-commerce company, wants to increase revenue through personalized product recommendations. Their current system shows popular products to everyone, but conversion rates are low.

Translating Business Problems to ML Problems

Once you understand the business need, frame it as a machine learning problem:

Problem Type: Is this classification (predicting categories), regression (predicting numbers), clustering (finding groups), ranking (ordering items), or something else?

For ShopSmart, this is a ranking problem—order products by likelihood the user will purchase them.

Success Metrics: How will you measure success? Metrics should align with business goals.

For ShopSmart:

- Business metric: Revenue per user, conversion rate

- ML metrics: Click-through rate, add-to-cart rate, purchase rate, ranking quality metrics like Mean Average Precision

Baseline Performance: What’s the current performance without machine learning? This establishes what you need to beat.

ShopSmart’s current popularity-based recommendations have a 2% conversion rate. Any ML solution must exceed this to justify the investment.

Feasibility Assessment

Before diving in, assess whether machine learning is appropriate and feasible:

Is ML necessary? Can simpler rule-based approaches work? Machine learning adds complexity—only use it when needed.

Is sufficient data available? You need representative data covering diverse scenarios. How much depends on problem complexity.

Are features predictive? Can you measure factors that actually influence the outcome?

Is the problem solvable? Some problems are inherently unpredictable. Can humans perform this task reasonably well?

What’s the ROI? Will the improvement justify development and maintenance costs?

ShopSmart confirms:

- Rule-based recommendations underperform (tried popularity, categories)

- They have 2 years of user purchase history and browsing data (millions of interactions)

- Features like past purchases, browsing history, and product attributes should be predictive

- Human curators can make reasonable recommendations, suggesting it’s learnable

- A 1 percentage point improvement in conversion would generate $5M annually, justifying investment

Defining Success Criteria

Set specific, measurable goals:

Minimum viable performance: What’s the threshold for deployment?

- ShopSmart: 2.5% conversion rate (0.5 percentage points above the baseline)

Target performance: What would represent strong success?

- ShopSmart: 3.5% conversion rate (1.5 percentage points above the baseline)

Non-functional requirements: Latency (recommendation speed), explainability, fairness, privacy compliance, maintenance burden

Timeline and milestones: When do you need results?

- ShopSmart: Proof-of-concept in 2 months, production deployment in 6 months

Clear success criteria prevent projects from drifting indefinitely and provide objective evaluation standards.
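The success thresholds above mix absolute and relative terms, so it can help to compute both explicitly. The following is a minimal sketch using the ShopSmart figures; the function names are hypothetical, and the linear "$5M per percentage point" scaling is an assumption taken from the feasibility estimate, not a measured relationship.

```python
# Rates come from the ShopSmart example; the linear revenue scaling is an assumption.
BASELINE_RATE = 0.020          # current popularity-based conversion rate
MINIMUM_VIABLE_RATE = 0.025    # threshold for deployment
TARGET_RATE = 0.035            # strong success
REVENUE_PER_POINT = 5_000_000  # assumed annual revenue per +1 percentage point


def lift_in_points(rate, baseline=BASELINE_RATE):
    """Absolute lift over the baseline, in percentage points."""
    return round((rate - baseline) * 100, 6)


def relative_lift(rate, baseline=BASELINE_RATE):
    """Lift relative to the baseline (0.25 means 25% better)."""
    return round((rate - baseline) / baseline, 6)


def projected_revenue(rate, baseline=BASELINE_RATE):
    """Annual revenue gain under the linear-scaling assumption."""
    return lift_in_points(rate, baseline) * REVENUE_PER_POINT


for name, rate in [("minimum", MINIMUM_VIABLE_RATE), ("target", TARGET_RATE)]:
    print(name, lift_in_points(rate), relative_lift(rate), projected_revenue(rate))
```

Note that moving from 2.0% to 2.5% is a lift of 0.5 percentage points but a 25% relative improvement, which is why it pays to state which one a goal refers to.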

Stage 2: Data Collection and Acquisition

With a clear problem definition, you need data—the foundation of any machine learning system.

Identifying Data Sources

Determine what data you need and where to get it:

Internal Data: Databases, logs, CRM systems, transaction records, sensor data, user interactions

ShopSmart has:

- User database: demographics, registration date, account type

- Purchase history: products bought, dates, prices, quantities

- Clickstream data: page views, searches, cart additions

- Product catalog: descriptions, categories, prices, attributes, images

External Data: Public datasets, purchased data, web scraping, APIs, third-party services

ShopSmart considers:

- Product reviews from external sites

- Market trends and seasonality data

- Competitor pricing information

Data Partnerships: Collaborations with other companies or organizations for shared data

Synthetic Data: Generated data to augment real data, especially for rare scenarios

Data Collection Strategy

Plan how to gather and combine data:

Historical Data: Past data for training and validation. You need sufficient history covering diverse conditions.

ShopSmart extracts 2 years of data—enough to capture seasonal patterns and diverse user behaviors.

Real-time Data: For features that need current information (e.g., current inventory, time-sensitive trends)

Data Integration: Combining data from multiple sources requires matching records, handling different formats, and resolving conflicts.

ShopSmart joins user data with purchases and clickstream data using user IDs and timestamps. They handle challenges like:

- Users who browse without logging in (anonymous sessions)

- Product IDs that changed when catalog was reorganized

- Inconsistent timestamps across systems

Legal and Ethical Considerations

Data collection must comply with regulations and ethical standards:

Privacy Regulations: GDPR, CCPA, and other laws govern data collection and use. Ensure:

- User consent for data collection

- Right to deletion requests

- Data minimization (collect only what’s needed)

- Secure storage and handling

Bias and Fairness: Does your data represent all relevant populations? Historical data may reflect past biases.

Sensitive Attributes: Handle protected characteristics (race, gender, age) carefully. Even if not used directly, models might learn proxies.

ShopSmart ensures:

- Privacy policy discloses data usage

- Personal identifiers are hashed

- European users’ data complies with GDPR

- They audit for demographic bias in recommendations

Data Storage and Infrastructure

Plan infrastructure for storing and accessing data:

Storage Systems: Databases, data lakes, data warehouses. Choose based on data volume, query patterns, and access frequency.

Scalability: Can your infrastructure handle data growth?

Versioning: Track data versions to reproduce experiments and models.

Access Control: Who can access what data? Security is critical.

ShopSmart sets up:

- Data warehouse combining all sources

- Versioned datasets for reproducibility

- Role-based access control

- Automated daily data pipelines

Stage 3: Data Preparation and Cleaning

Raw data is messy. Data preparation transforms it into a clean, usable format—typically the most time-consuming pipeline stage.

Data Quality Assessment

First, understand your data’s condition:

Completeness: Are values missing? How much? Why?

ShopSmart finds:

- 15% of users missing demographic information

- Product descriptions missing for 5% of catalog

- Some purchase records missing price information

Accuracy: Are values correct? Look for outliers, impossible values, contradictions.

ShopSmart discovers:

- Some product prices are negative (data entry errors)

- Users with birthdates in the future

- Purchase timestamps before product launch dates

Consistency: Do values follow expected formats and patterns?

ShopSmart sees:

- Product categories inconsistently capitalized

- Prices in different currencies without notation

- Date formats varying across systems

Relevance: Is the data actually useful for your problem?

ShopSmart evaluates:

- User login timestamps are relevant (activity patterns)

- Account creation IP addresses less relevant for recommendations

- Shipping addresses not needed

Handling Missing Data

Missing data requires careful handling:

Understand Why Data is Missing:

- Missing Completely at Random (MCAR): No pattern to missingness

- Missing at Random (MAR): Missingness relates to observed data

- Missing Not at Random (MNAR): Missingness relates to the missing value itself

Strategies:

Deletion: Remove records with missing values

- Simple but loses information

- Acceptable if values are missing completely at random and you have plenty of data

- ShopSmart removes users with missing purchase history (tiny fraction)

Imputation: Fill missing values

- Mean/median/mode for numerical/categorical features

- Forward/backward fill for time series

- Model-based imputation using other features

- ShopSmart fills missing ages with median age of similar users

Indicator Features: Create binary features indicating missingness

- Useful when missingness itself is informative

- ShopSmart adds “demographics_missing” flag—users who skip optional fields might behave differently

Domain-Specific Handling: Use domain knowledge

- ShopSmart treats missing product views as zero views (didn’t view the product)
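Imputation and indicator features are often applied together. The sketch below is a simplified illustration: it fills missing ages with the overall median (not the "similar users" median described above) and adds the `demographics_missing` flag. The record layout and the `impute_age` helper are assumptions made for the example.

```python
from statistics import median


def impute_age(users):
    """Fill missing ages with the median and flag which rows were imputed."""
    known_ages = [u["age"] for u in users if u["age"] is not None]
    fallback = median(known_ages)
    result = []
    for u in users:
        missing = u["age"] is None
        result.append({
            **u,
            "age": fallback if missing else u["age"],
            "demographics_missing": int(missing),  # keeps the missingness signal
        })
    return result


users = [
    {"user_id": 1, "age": 34},
    {"user_id": 2, "age": None},
    {"user_id": 3, "age": 52},
    {"user_id": 4, "age": 28},
]
cleaned = impute_age(users)
```

In practice the median would typically be computed on the training split only and reused at prediction time, so that validation and test data do not influence the fill value.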

Data Cleaning Operations

Clean data systematically:

Correcting Errors:

- Fix negative prices, invalid dates, impossible values

- ShopSmart corrects obvious typos and entry errors

Standardizing Formats:

- Convert dates to consistent format

- Normalize text (lowercase, remove punctuation)

- Standardize units and currencies

- ShopSmart converts all prices to USD and dates to ISO format

Removing Duplicates:

- Identify and remove duplicate records

- ShopSmart deduplicates purchases (same user, product, timestamp)

Handling Outliers:

- Decide whether outliers are errors or legitimate extreme values

- Options: remove, cap (winsorize), or keep with special handling

- ShopSmart investigates unusually large orders—some are legitimate bulk purchases, others are errors

Resolving Inconsistencies:

- Reconcile conflicting information from different sources

- ShopSmart establishes transaction log as source of truth for purchase data
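Two of the cleaning operations above, deduplication and error detection, can be sketched in a few lines. This is an illustrative example only: the field names are assumptions, and rows with negative prices are set aside for review here instead of being corrected automatically.

```python
def clean_purchases(rows):
    """Drop exact duplicates and set aside rows with impossible prices."""
    seen = set()
    kept, rejected = [], []
    for row in rows:
        # A purchase is a duplicate if user, product, and timestamp all match.
        key = (row["user_id"], row["product_id"], row["timestamp"])
        if key in seen:
            continue
        seen.add(key)
        if row["price"] < 0:
            rejected.append(row)  # needs investigation, not a silent fix
        else:
            kept.append(row)
    return kept, rejected


purchases = [
    {"user_id": 1, "product_id": "A", "timestamp": "2024-03-01T10:00:00", "price": 19.99},
    {"user_id": 1, "product_id": "A", "timestamp": "2024-03-01T10:00:00", "price": 19.99},
    {"user_id": 2, "product_id": "B", "timestamp": "2024-03-02T15:30:00", "price": -5.00},
]
kept, rejected = clean_purchases(purchases)
```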

Data Transformation

Transform data into suitable formats:

Encoding Categorical Variables:

- Convert categories to numbers

- One-hot encoding: Create binary column for each category

- Label encoding: Assign numbers to categories (for ordinal data)

- Target encoding: Use category’s relationship with target variable

- ShopSmart one-hot encodes product categories and user account types
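As a minimal sketch of one-hot encoding, the helper below (a hypothetical `one_hot` function, not a library API) maps each category to a binary column. Passing the category list learned from training data keeps the column order stable in production; categories not in that list encode as all zeros.

```python
def one_hot(values, categories=None):
    """Return (categories, rows) where each row has a 1 in its category's column."""
    cats = sorted(set(values)) if categories is None else list(categories)
    index = {c: i for i, c in enumerate(cats)}
    rows = []
    for v in values:
        row = [0] * len(cats)
        if v in index:  # unseen categories stay all zeros
            row[index[v]] = 1
        rows.append(row)
    return cats, rows


cats, rows = one_hot(["toys", "books", "toys"])
# Reuse the training-time categories when encoding new data.
_, new_rows = one_hot(["garden"], categories=cats)
```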

Scaling Numerical Features:

- Many algorithms perform better with scaled features

- Standardization: Transform to mean=0, std=1

- Normalization: Scale to [0,1] range

- ShopSmart standardizes price, viewing time, and purchase frequency
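The two scaling approaches can be written directly from their definitions. This sketch uses the population standard deviation and treats constant features as all zeros; both choices are assumptions for the example, and the function names are illustrative.

```python
from statistics import mean, pstdev


def standardize(xs):
    """Rescale to mean 0 and standard deviation 1."""
    mu, sigma = mean(xs), pstdev(xs)
    if sigma == 0:
        return [0.0 for _ in xs]  # constant feature carries no information
    return [(x - mu) / sigma for x in xs]


def min_max(xs):
    """Rescale to the [0, 1] range."""
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return [0.0 for _ in xs]
    return [(x - lo) / (hi - lo) for x in xs]


prices = [10.0, 20.0, 30.0]
z_scores = standardize(prices)
scaled = min_max(prices)
```

As with imputation, the mean, standard deviation, minimum, and maximum would normally be computed on the training data and reused unchanged for validation, test, and production inputs.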

Text Processing:

- Tokenization, stemming, lemmatization

- Remove stop words

- Create bag-of-words or TF-IDF representations

- ShopSmart processes product descriptions and search queries

Date/Time Features:

- Extract hour, day of week, month, season

- Calculate time differences

- ShopSmart creates features for time since last purchase, day of week, holiday indicators
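Date and time features like these can be derived with the standard library alone. The sketch below assumes each event carries a timestamp and that the user's last purchase time is known; the feature names are illustrative, and holiday indicators are left out because they depend on a calendar the source does not specify.

```python
from datetime import datetime


def time_features(event_time, last_purchase):
    """Derive simple calendar and recency features for one event."""
    return {
        "hour": event_time.hour,
        "day_of_week": event_time.weekday(),  # Monday is 0, Sunday is 6
        "is_weekend": int(event_time.weekday() >= 5),
        "month": event_time.month,
        "days_since_last_purchase": (event_time - last_purchase).days,
    }


features = time_features(
    event_time=datetime(2024, 3, 16, 20, 30),
    last_purchase=datetime(2024, 3, 1, 9, 0),
)
```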

Data Aggregation:

- Create summary statistics over groups or time windows

- ShopSmart calculates: average purchase value, purchase frequency, product category preferences

Stage 4: Exploratory Data Analysis (EDA)

Before modeling, explore and understand your data through statistical analysis and visualization.

Understanding Data Distributions

Examine how values are distributed:

Numerical Features:

- Calculate descriptive statistics (mean, median, std, min, max, quartiles)

- Create histograms and box plots

- ShopSmart discovers: purchase amounts are right-skewed (many small purchases, few large ones); most users make 2-5 purchases per year

Categorical Features:

- Count frequencies of each category

- Identify rare categories that might need special handling

- ShopSmart finds: electronics category dominates, some categories have very few products

Target Variable:

- Understand what you’re predicting

- Check for class imbalance in classification

- ShopSmart sees: most user-product pairs have zero purchases (sparse data), so successful recommendations are rare events

Exploring Relationships

Understand how features relate to each other and the target:

Correlation Analysis:

- Calculate correlation between numerical features

- Identify highly correlated features (potential multicollinearity)

- ShopSmart finds: user age and account tenure correlate; price and product category correlate
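For reference, the Pearson correlation used in this kind of analysis can be computed from its definition. The sketch below is a plain-Python illustration with made-up numbers; in practice a dataframe library would compute the full correlation matrix.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Illustrative values only: user age vs. account tenure in years.
ages = [22, 30, 41, 55]
tenure_years = [1, 2, 4, 6]
r = pearson(ages, tenure_years)
```

A value near 1 or -1 signals that two features carry largely the same linear information, which is the multicollinearity risk mentioned above.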

Feature-Target Relationships:

- How do individual features relate to the target?

- Which features appear most predictive?

- ShopSmart discovers:

  - Users who browse more categories have higher purchase rates

  - Time since last purchase strongly predicts next purchase likelihood

  - Product views in the same category as past purchases predict purchases

Segmentation Analysis:

- Do patterns differ across user segments or conditions?

- ShopSmart finds: mobile users behave differently than desktop; weekend vs weekday patterns differ

Data Visualization

Create visualizations to communicate insights:

Distribution Plots: Histograms, kernel density plots, box plots

Relationship Plots: Scatter plots, correlation heatmaps

Time Series Plots: Track trends and seasonality

Categorical Plots: Bar charts, count plots

ShopSmart creates visualizations showing:

- Purchase patterns over time (clear holiday spikes)

- Product category preferences by user segment

- Correlation heatmap of user behavior features

- Distribution of time between purchases

Identifying Patterns and Anomalies

Look for interesting patterns, anomalies, or data quality issues:

Seasonality and Trends: Do patterns change over time?

- ShopSmart sees holiday shopping spikes, back-to-school patterns

Unexpected Patterns: Surprises that might indicate opportunities or problems

- ShopSmart notices: users who abandon carts often return to purchase later, suggesting retargeting opportunities

Data Leakage Risks: Features that wouldn’t be available at prediction time

- ShopSmart identifies: some product attributes were added after launch dates—can’t use for recommendations before that date

Anomalies: Unusual patterns warranting investigation

- ShopSmart finds: spike in returns for specific product batch (quality issue)

EDA insights inform feature engineering, algorithm selection, and validation strategies.

Stage 5: Feature Engineering

Feature engineering—creating and selecting the most informative features—often determines model success more than algorithm choice.

Creating New Features

Generate features that better capture patterns:

Domain-Driven Features: Use business knowledge to create meaningful features

ShopSmart engineers features like:

- User purchase recency, frequency, monetary value (RFM)

- Average basket size

- Favorite product categories

- Price sensitivity (average discount at which the user buys)

- Browsing-to-purchase ratio
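The RFM features in the list above are simple aggregations over a user's purchase history. Here is a minimal sketch, assuming purchases are available as `(date, amount)` pairs dated on or before the prediction date; the `rfm` helper and its output keys are hypothetical.

```python
from datetime import date


def rfm(purchases, as_of):
    """Recency, frequency, and monetary value for one user as of a given date."""
    if not purchases:
        return None  # no history: handle separately (cold start)
    last_purchase = max(d for d, _ in purchases)
    return {
        "recency_days": (as_of - last_purchase).days,
        "frequency": len(purchases),
        "monetary": sum(amount for _, amount in purchases),
    }


history = [(date(2024, 1, 10), 40.0), (date(2024, 2, 20), 60.0)]
user_rfm = rfm(history, as_of=date(2024, 3, 1))
```

Restricting the input to purchases before `as_of` is what keeps these features free of future information.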

Interaction Features: Combine multiple features to capture joint effects

- User age × product category (different demographics prefer different products)

- Day of week × time of day (weekend evening browsing patterns)

- Price × user income estimate (affordability)

Aggregation Features: Summarize information over groups or windows

- Average rating for products in category

- Number of times user viewed similar products

- Trending score (recent purchase velocity)

Time-Based Features: Capture temporal patterns

- Days since last visit

- Day of week, month, season

- Holiday indicators

- Time-on-page measurements

Text Features: Extract information from text

- Product description length

- Key word presence (e.g., “limited edition,” “sale”)

- Sentiment from reviews

- TF-IDF vectors for search queries

Embedding Features: Dense representations of categorical data

- Product embeddings (similar products have similar vectors)

- User embeddings (similar users have similar vectors)

- Learned through neural networks or matrix factorization

Feature Selection

Not all features improve models—some add noise or redundancy. Select the most valuable features:

Filter Methods: Evaluate features independently

- Correlation with target

- Statistical tests (chi-square, ANOVA)

- Information gain, mutual information

- ShopSmart calculates mutual information between each feature and purchase probability
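Mutual information for a filter method can be estimated from co-occurrence counts when both the feature and the target are discrete. The following sketch assumes a binary "purchased" label and a categorical feature; continuous features would need binning or a different estimator, which is outside this example.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """Mutual information in bits between two discrete sequences."""
    n = len(xs)
    count_x, count_y = Counter(xs), Counter(ys)
    count_xy = Counter(zip(xs, ys))
    total = 0.0
    for (x, y), c in count_xy.items():
        p_xy = c / n
        total += p_xy * log2(p_xy / ((count_x[x] / n) * (count_y[y] / n)))
    return total


# Illustrative data: does "viewed category before" tell us about "purchased"?
viewed_before = [1, 1, 0, 0]
purchased = [1, 1, 0, 0]
mi = mutual_information(viewed_before, purchased)
```

A score of 0 means the feature tells you nothing about the target; higher scores indicate more shared information.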

Wrapper Methods: Evaluate feature subsets using model performance

- Forward selection (iteratively add features)

- Backward elimination (iteratively remove features)

- Recursive feature elimination

- ShopSmart uses recursive feature elimination with a random forest

Embedded Methods: Feature selection built into model training

- Lasso regression (L1 regularization zeros out coefficients)

- Tree-based feature importance

- ShopSmart uses XGBoost’s built-in feature importance

Dimensionality Reduction: Transform features to lower-dimensional space

- Principal Component Analysis (PCA)

- Autoencoders for non-linear reduction

- ShopSmart uses PCA on product attribute vectors

Feature Validation

Ensure features are valid and useful:

Temporal Validity: Features must not use future information (data leakage)

- ShopSmart ensures features use only data available before prediction time

- Example: Can’t use “user purchased this product” to predict whether they’ll purchase it

Practical Availability: Will these features be available in production?

- ShopSmart confirms all features can be calculated in real-time or near real-time

- Example: Can’t use features requiring manual labeling

Stability: Do features remain predictive over time?

- ShopSmart monitors feature distributions and correlations over time

- Example: Product catalog changes might invalidate some features

Consistency: Are features calculated identically in training and production?

- ShopSmart implements feature engineering pipelines that work in both contexts

- Example: Same code for calculating aggregations, handling missing values

Stage 6: Model Selection and Training

With prepared data and engineered features, you’re ready to build models.

Choosing Algorithms

Select appropriate algorithms based on:

Problem Type:

- Classification: Logistic Regression, Random Forest, Gradient Boosting, Neural Networks

- Regression: Linear Regression, Ridge/Lasso, Gradient Boosting Regressors, Neural Networks

- Ranking: Learning-to-Rank algorithms, collaborative filtering

ShopSmart chooses: Gradient Boosting Trees (XGBoost) and Neural Networks for ranking

Data Characteristics:

- Small data: Simpler models (logistic regression, random forest)

- Large data: More complex models (deep learning, large ensembles)

- High-dimensional: Regularized models, dimensionality reduction

- Mixed types: Tree-based models handle different feature types well

ShopSmart has large data (millions of interactions) supporting complex models

Interpretability Requirements:

- Need explanations: Linear models, decision trees, GAMs

- Black box acceptable: Neural networks, ensemble methods

ShopSmart needs some interpretability for business understanding but prioritizes performance

Computational Constraints:

- Training time: Real-time learning requires fast training

- Inference speed: Recommendations need low latency

- Resource limits: Mobile deployment requires small models

ShopSmart has computational resources for training but needs fast inference

Start Simple: Begin with baseline models, add complexity if needed

ShopSmart starts with matrix factorization (simple collaborative filtering) before trying complex models

Data Splitting Strategy

Split data for proper evaluation:

Train-Validation-Test Split:

- Training set (typically 60-70%): Learn model parameters

- Validation set (typically 15-20%): Tune hyperparameters, make development decisions

- Test set (typically 15-20%): Final evaluation on completely unseen data

Time-Based Splitting: For temporal data, respect time order

- ShopSmart uses data from:

  - Training: Jan 2022 – Dec 2023 (24 months)

  - Validation: Jan 2024 – Mar 2024 (3 months)

  - Test: Apr 2024 – Jun 2024 (3 months)
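A time-based split only needs two cutoff dates. The sketch below uses the ShopSmart boundaries from the list above; the row layout (a dict with a `date` field) is an assumption for illustration.

```python
from datetime import date


def time_split(rows, val_start, test_start):
    """Split rows chronologically so later periods never leak into training."""
    train = [r for r in rows if r["date"] < val_start]
    val = [r for r in rows if val_start <= r["date"] < test_start]
    test = [r for r in rows if r["date"] >= test_start]
    return train, val, test


interactions = [
    {"date": date(2022, 6, 15), "purchased": 0},
    {"date": date(2023, 12, 31), "purchased": 1},
    {"date": date(2024, 2, 1), "purchased": 0},
    {"date": date(2024, 5, 20), "purchased": 1},
]
train, val, test = time_split(
    interactions, val_start=date(2024, 1, 1), test_start=date(2024, 4, 1)
)
```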

Stratification: Ensure splits have similar target distributions

- Important for imbalanced classes

- ShopSmart ensures similar purchase rates across splits

Cross-Validation: For robust estimation (discussed in evaluation stage)

Training Process

Train models systematically:

Set Random Seeds: For reproducibility

Establish Baseline: Simple model or rule-based system to beat

- ShopSmart baseline: recommend most popular products (2% conversion)

Start Simple: Train baseline ML model

- ShopSmart trains matrix factorization: 2.3% conversion (slight improvement)

Iterate: Try more sophisticated approaches

- ShopSmart experiments with:

  - XGBoost with user/item features: 2.8% conversion

  - Neural network with embeddings: 3.1% conversion

  - Hybrid ensemble: 3.3% conversion

Monitor Training: Watch for issues

- Check loss curves for convergence

- Monitor training vs validation performance (detect overfitting)

- Track training time and resource usage

ShopSmart monitors:

- Training loss decreasing smoothly

- Validation loss plateaus around epoch 50

- Training takes 4 hours on GPU

Hyperparameter Tuning: Optimize configuration

- Grid search: Try all combinations

- Random search: Sample parameter space randomly

- Bayesian optimization: Intelligently explore parameter space

- ShopSmart uses Bayesian optimization for learning rate, tree depth, embedding dimensions
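Of the three tuning strategies, random search is the simplest to sketch. The example below is not the Bayesian optimization ShopSmart uses; it is a plain random search over a small, made-up search space, and `toy_objective` is a stand-in for "train a model and return its validation score".

```python
import random


def random_search(objective, space, n_trials=20, seed=42):
    """Sample configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.choice(options) for name, options in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space for illustration.
SPACE = {
    "learning_rate": [0.01, 0.03, 0.1, 0.3],
    "max_depth": [3, 5, 7, 9],
    "embedding_dim": [16, 32, 64],
}


def toy_objective(params):
    # Placeholder: a real objective would train and evaluate a model.
    return -abs(params["learning_rate"] - 0.1) - 0.01 * abs(params["max_depth"] - 7)


best_params, best_score = random_search(toy_objective, SPACE)
```

Grid search would replace the random sampling with a loop over every combination, while Bayesian optimization would use earlier scores to decide which configuration to try next.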

Handling Common Challenges

Address issues that arise during training:

Class Imbalance:

- Recommendations: Most user-product pairs aren’t purchased

- Solutions: Resampling, class weights, specialized loss functions

- ShopSmart uses weighted loss giving more importance to positive examples
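One common form of weighted loss is binary cross-entropy with a multiplier on the positive class. The sketch below shows the idea; the `pos_weight` parameter name and the values used are assumptions, and the source does not say which weighting ShopSmart chose.

```python
from math import log


def weighted_bce(y_true, y_prob, pos_weight=1.0, eps=1e-12):
    """Binary cross-entropy where errors on positives count pos_weight times."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # avoid log(0)
        total += -(pos_weight * y * log(p) + (1 - y) * log(1 - p))
    return total / len(y_true)


labels = [1, 0, 0, 0]          # purchases are rare
predictions = [0.2, 0.1, 0.1, 0.1]
unweighted = weighted_bce(labels, predictions)
weighted = weighted_bce(labels, predictions, pos_weight=3.0)
```

With `pos_weight` above 1, the missed purchase contributes more to the loss, which pushes the model toward finding positives instead of predicting "no purchase" everywhere.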

Data Leakage:

- Features that wouldn’t be available at prediction time

- ShopSmart carefully audits features for temporal validity

Overfitting:

- Model memorizes training data, fails on new data

- Solutions: Regularization, simpler models, more data, early stopping

- ShopSmart uses dropout in neural networks, early stopping based on validation loss
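Early stopping amounts to tracking the best validation loss and giving up after a fixed number of epochs without improvement. This is a minimal sketch over a recorded list of losses; the `patience` and `min_delta` names follow common usage but are assumptions here, and the loss values are invented.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, best_loss), stopping after `patience` epochs without improvement."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # later epochs are never evaluated
    return best_epoch, best_loss


losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]
best_epoch, best_loss = early_stopping_epoch(losses, patience=3)
```

In this example training stops before the final epoch is reached, so its lower loss is never seen. That is the trade-off a small `patience` makes.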

Computational Limits:

- Large datasets or models exceed memory/time budgets

- Solutions: Sampling, mini-batch training, model simplification, distributed training

- ShopSmart uses mini-batch training and GPU acceleration

Stage 7: Model Evaluation

Thoroughly evaluate models before deployment using appropriate metrics and validation strategies.

Choosing Evaluation Metrics

Select metrics aligned with business goals:

Classification Metrics:

- Accuracy: Overall correctness (misleading with imbalanced classes)

- Precision: Of predicted positives, how many are actually positive?

- Recall: Of actual positives, how many did we identify?

- F1 Score: Harmonic mean of precision and recall

- AUC-ROC: Model’s ability to distinguish classes across thresholds
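Precision, recall, and F1 follow directly from counts of true positives, false positives, and false negatives. The sketch below uses made-up labels to show why accuracy can look better than the other metrics on imbalanced data.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```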

Regression Metrics:

- Mean Absolute Error (MAE): Average absolute prediction error

- Mean Squared Error (MSE): Average squared error (penalizes large errors more)

- Root Mean Squared Error (RMSE): Square root of MSE (same units as target)

- R-squared: Proportion of variance explained

Ranking Metrics:

- Precision@K: Precision in top K recommendations

- Recall@K: Recall in top K recommendations

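- Mean Average Precision (MAP): Average precision computed per user, then averaged across all users

- Normalized Discounted Cumulative Gain (NDCG): Rewards placing relevant items near the top of the list, normalized against the ideal ordering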
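The ranking metrics above can be sketched for a single user with binary relevance (an item was either purchased or not). Conventions vary between libraries; this example divides average precision by the number of relevant items and uses a log2 discount for NDCG, both of which are assumptions of the sketch.

```python
from math import log2


def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k recommendations that are relevant."""
    return sum(1 for item in ranked[:k] if item in relevant) / k


def average_precision(ranked, relevant):
    """Average of precision values at each rank where a relevant item appears."""
    hits, total = 0, 0.0
    for rank, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant) if relevant else 0.0


def ndcg_at_k(ranked, relevant, k):
    """DCG of the top-k list divided by the DCG of an ideal ordering."""
    dcg = sum(1 / log2(rank + 1)
              for rank, item in enumerate(ranked[:k], start=1) if item in relevant)
    ideal = sum(1 / log2(rank + 1) for rank in range(1, min(len(relevant), k) + 1))
    return dcg / ideal if ideal else 0.0


ranked = ["a", "b", "c", "d"]   # model's ordering for one user
relevant = {"a", "c"}           # items the user actually purchased
p2 = precision_at_k(ranked, relevant, k=2)
ap = average_precision(ranked, relevant)
ndcg = ndcg_at_k(ranked, relevant, k=4)
```

MAP is then the mean of `average_precision` over all users.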

ShopSmart uses:

- Primary: Conversion rate on recommendations

- Secondary: Click-through rate, NDCG@10, MAP

- Business: Revenue per user, average order value

Validation Strategies

Beyond simple train-test splits:

K-Fold Cross-Validation:

- Split data into K folds

- Train on K-1 folds, validate on remaining fold

- Repeat K times, average results

- Provides robust performance estimate

- ShopSmart uses 5-fold temporal cross-validation (respecting time order)

Stratified K-Fold:

- Ensures each fold has similar target distribution

- Important for imbalanced data

Time Series Cross-Validation:

- For temporal data, use expanding or sliding window

- ShopSmart uses expanding window: train on increasingly more history, always test on future period
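An expanding-window scheme can be expressed as a list of (training periods, test period) pairs. The sketch below assumes the data has already been bucketed into ordered periods such as quarters; the period labels and the helper name are illustrative.

```python
def expanding_window_splits(periods, n_splits):
    """Return (train_periods, test_period) pairs with a growing training window."""
    first_test = len(periods) - n_splits
    if first_test < 1:
        raise ValueError("need at least one training period before the first test period")
    return [(periods[:i], periods[i]) for i in range(first_test, len(periods))]


quarters = ["2023Q1", "2023Q2", "2023Q3", "2023Q4", "2024Q1"]
splits = expanding_window_splits(quarters, n_splits=3)
for train_periods, test_period in splits:
    print(train_periods, "->", test_period)
```

Each fold trains on everything before its test period, so the model is always evaluated on data from its own future. A sliding window would instead drop the oldest periods to keep the training size fixed.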

Nested Cross-Validation:

- Outer loop: Performance estimation

- Inner loop: Hyperparameter tuning

- Prevents optimistic bias from tuning on validation set

Comprehensive Evaluation

Evaluate multiple aspects:

Overall Performance: ShopSmart’s final ensemble achieves:

- 3.3% conversion rate (1.3 percentage points above baseline)

- 8.2% click-through rate

- NDCG@10: 0.68

- Projected revenue increase: $6.5M annually

Performance by Segment:

- Analyze performance across user groups, product categories, time periods

- ShopSmart finds:

  - Model performs better for frequent shoppers (more data)

  - Electronics recommendations stronger than clothing (more objective features)

  - Performance consistent across quarters (no temporal drift yet)

Error Analysis:

- Study incorrect predictions

- Identify patterns in failures

- ShopSmart examines:

  - Why some purchased products ranked low (often first-time category purchases)

  - Why some recommended products weren’t purchased (out of stock, price increases)

  - User segments with poor performance (very new users with no history)

Robustness Testing:

- Test with corrupted inputs, edge cases, adversarial examples

- ShopSmart tests:

  - New users with minimal data

  - Users with unusual behavior patterns

  - Product catalog changes

Fairness Evaluation:

- Check for demographic biases

- Ensure equitable performance across groups

- ShopSmart audits:

  - Recommendation diversity across demographic groups

  - No systematic exclusion of any product categories

  - Equal performance for different user segments

Model Comparison

Compare multiple models objectively:

| Model | Conversion Rate | Click-Through Rate | NDCG@10 | Training Time | Inference Time |
|---|---|---|---|---|---|
| Baseline (Popularity) | 2.0% | 4.5% | 0.42 | N/A | <1ms |
| Matrix Factorization | 2.3% | 5.8% | 0.51 | 30 min | 5ms |
| XGBoost | 2.8% | 7.1% | 0.61 | 2 hours | 10ms |
| Neural Network | 3.1% | 8.0% | 0.66 | 4 hours | 15ms |
| Ensemble | 3.3% | 8.2% | 0.68 | 5 hours | 25ms |

ShopSmart chooses the ensemble for best performance despite higher latency (acceptable for their use case).

Stage 8: Model Optimization and Refinement

After initial evaluation, optimize and refine the model.

Hyperparameter Optimization

Fine-tune model configuration:

Grid Search: Exhaustively try all combinations

- Thorough but computationally expensive

- ShopSmart uses it for final tuning of the top 2-3 parameters

Random Search: Sample parameter space randomly

- Often finds good solutions faster than grid search

- ShopSmart uses it for initial broad exploration

Bayesian Optimization: Intelligently explore based on past results

- Efficient for expensive evaluations

- ShopSmart uses it for the main hyperparameter search

Automated ML (AutoML): Automated hyperparameter and architecture search

- Tools: hyperparameter optimization frameworks such as Optuna, Hyperopt, and Ray Tune

- ShopSmart uses Optuna for neural network architecture search

Key hyperparameters ShopSmart optimizes:

- Learning rate, batch size

- Number and size of hidden layers

- Dropout rate, regularization strength

- Embedding dimensions

- Number of boosting rounds

Feature Optimization

Refine feature set:

Feature Importance Analysis:

- Identify most influential features

- ShopSmart finds top features:

  - User’s past purchase categories

  - Product popularity in user segment

  - Time since last purchase

  - Product price relative to user average

  - User-product embedding similarity

Remove Redundant Features:

- Features that don’t add value

- ShopSmart removes correlated features providing duplicate information

Add Derived Features:

- Based on error analysis insights

- ShopSmart adds:

  - Cross-category purchase patterns

  - Seasonal purchase indicators

  - Price drop alerts

Feature Interaction:

- Explicit interaction terms between important features

- ShopSmart creates interactions between user demographics and product attributes

Addressing Model Weaknesses

Target specific failure modes:

Poor Performance on Segments:

- New users: Create specialized cold-start model

- Niche products: Add content-based features

- ShopSmart develops:

  - Separate model for new users using demographics

  - Content-based backup for products with few interactions

Overfitting:

- Increase regularization

- Add more training data

- Reduce model complexity

- Early stopping

- ShopSmart increases dropout and adds data augmentation

Underfitting:

- Increase model capacity

- Add more features

- Reduce regularization

- Train longer

- ShopSmart adds hidden layers after finding that the model was underfitting and the data could support more complexity

Slow Inference:

- Model compression (quantization, pruning)

- Knowledge distillation (train smaller model to mimic larger one)

- Caching frequent predictions

- ShopSmart compresses embeddings and caches popular product recommendations

Ensemble Methods

Combine multiple models for better performance:

Voting/Averaging:

- Classification: Majority vote

- Regression/Ranking: Average predictions

- Simple but effective

Stacking:

- Train meta-model on predictions of base models

- More sophisticated combination

- ShopSmart trains meta-model combining:

  - Neural network predictions

  - XGBoost predictions

  - Matrix factorization predictions

Boosting:

- Sequentially train models, each correcting previous errors

- XGBoost, LightGBM, CatBoost

Bagging:

- Train models on different data subsets, average results

- Random Forests use this approach

ShopSmart’s final ensemble combines a neural network (weight=0.5), XGBoost (weight=0.3), and matrix factorization (weight=0.2), achieving a 3.3% conversion rate.
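
A weighted average like the one described can be sketched in a few lines. The weights come from the text; the per-product scores are invented, and the sketch assumes every model scores every candidate product on a comparable scale.

```python
WEIGHTS = {"neural_network": 0.5, "xgboost": 0.3, "matrix_factorization": 0.2}

def ensemble_scores(model_scores, weights=WEIGHTS):
    """Weighted average of per-product scores from several models.
    model_scores maps model name -> {product_id: score}."""
    combined = {}
    for model, scores in model_scores.items():
        for product_id, score in scores.items():
            combined[product_id] = combined.get(product_id, 0.0) + weights[model] * score
    return combined

# Invented scores for two candidate products.
model_scores = {
    "neural_network": {"prod345": 0.9, "prod678": 0.6},
    "xgboost": {"prod345": 0.8, "prod678": 0.7},
    "matrix_factorization": {"prod345": 0.5, "prod678": 0.9},
}
combined = ensemble_scores(model_scores)
ranked = sorted(combined, key=combined.get, reverse=True)
```

If the base models produce scores on different scales (for example, raw logits versus probabilities), normalize them before averaging, or the weights will not mean what they appear to mean.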

Stage 9: Model Deployment

Moving from development to production requires careful planning and execution.

Deployment Architecture

Design how the model serves predictions:

Batch Predictions:

- Generate predictions for all users/items periodically

- Store in database for retrieval

- Advantages: Simple, fast retrieval, consistent

- Disadvantages: Not real-time, storage overhead

- ShopSmart uses for:

- Email recommendation campaigns

- Pre-computed homepage recommendations

Real-Time Predictions:

- Generate predictions on-demand when requested

- Advantages: Always current, no storage needed

- Disadvantages: Latency requirements, computational load

- ShopSmart uses for:

- Product page recommendations

- Cart recommendations

Hybrid Approach:

- Pre-compute some predictions, generate others on-demand

- ShopSmart approach:

- Pre-compute top 100 recommendations per user nightly

- Generate personalized refinements in real-time based on current session
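
One way to picture the hybrid approach is to re-rank the nightly candidates using a cheap session signal. The sketch below is an assumption about how such a refinement might look: the category boost, the tuple layout, and the ID `prod901` are hypothetical.

```python
def rerank_for_session(precomputed, session_categories, boost=0.2, top_k=10):
    """Re-rank nightly candidates, boosting products in categories the user
    has viewed during the current session.
    precomputed: list of (product_id, category, base_score) tuples."""
    rescored = [
        (product_id, score + (boost if category in session_categories else 0.0))
        for product_id, category, score in precomputed
    ]
    rescored.sort(key=lambda item: item[1], reverse=True)
    return [product_id for product_id, _ in rescored[:top_k]]

# Hypothetical nightly batch output for one user.
nightly = [
    ("prod345", "shoes", 0.80),
    ("prod678", "books", 0.75),
    ("prod901", "toys", 0.70),
]
result = rerank_for_session(nightly, session_categories={"books"}, top_k=2)
```

The expensive scoring happens offline; the online step only adjusts and sorts a short list, which keeps request latency low.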

Deployment Infrastructure

Set up technical infrastructure:

Model Serving:

- REST API for predictions

- Containerization (Docker) for consistency

- Orchestration (Kubernetes) for scaling

- ShopSmart deploys:

- Dockerized FastAPI service

- Kubernetes cluster with auto-scaling

- Load balancer for traffic distribution

Feature Computation:

- Real-time feature calculation infrastructure

- Feature store for sharing features across models

- ShopSmart implements:

- Redis cache for frequently used features

- Feature computation service

- Daily batch jobs for slow-changing features

Model Storage and Versioning:

- Store trained models with versions

- Enable rollback if needed

- ShopSmart uses:

- MLflow for model registry

- S3 for model artifact storage

- Versioned model deployments

Monitoring Infrastructure:

- Track model performance and system health

- Alert on anomalies

- ShopSmart monitors:

- Prediction latency

- Throughput (requests per second)

- Prediction distribution

- Error rates

Deployment Strategies

Carefully roll out models to minimize risk:

Shadow Mode:

- Run new model alongside existing system

- Don’t use predictions yet, just evaluate

- Compare performance

- ShopSmart runs the ensemble in shadow mode for 2 weeks to confirm its performance before serving real users

A/B Testing:

- Show some users new model predictions, others old system

- Measure comparative performance

- Statistical significance testing

- ShopSmart runs A/B test:

- 10% of users get new recommendations

- 90% get old system

- Run for 2 weeks, measure conversion rates
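
The significance testing mentioned above is commonly done with a two-proportion z-test. The sketch below uses only the standard library; the visitor and conversion counts are invented for illustration and are not ShopSmart's results.

```python
from math import sqrt, erf

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates.
    Returns (z statistic, p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value

# Invented counts: 90% of users in control (a), 10% in treatment (b).
z, p = two_proportion_z_test(conv_a=1800, n_a=90_000, conv_b=330, n_b=10_000)
significant = p < 0.05
```

Decide the sample size and significance level before the test starts; checking the p-value repeatedly and stopping at the first significant result inflates the false-positive rate.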

Canary Deployment:

- Gradually increase traffic to new model

- Monitor for issues before full rollout

- ShopSmart rollout:

- Week 1: 5% traffic

- Week 2: 20% traffic

- Week 3: 50% traffic

- Week 4: 100% traffic (if all metrics healthy)
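
A canary rollout needs a stable way to decide which users see the new model. A common technique, sketched here as an assumption rather than ShopSmart's actual mechanism, is to hash the user ID into one of 100 buckets and compare the bucket with the current rollout percentage.

```python
import hashlib

# Rollout schedule from the text: week -> percent of traffic on the new model.
ROLLOUT_BY_WEEK = {1: 5, 2: 20, 3: 50, 4: 100}

def in_canary(user_id, percent):
    """Deterministically assign a user to the canary group.
    Hashing keeps each user's assignment stable across requests, and users in
    the group at 5% stay in the group as the percentage grows."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in 0..99
    return bucket < percent

def model_for(user_id, week):
    """Pick which model serves this user in a given rollout week."""
    return "new_model" if in_canary(user_id, ROLLOUT_BY_WEEK[week]) else "old_model"
```

Because assignment depends only on the user ID, a user never flips back and forth between models within a week, which keeps the experience consistent and the metrics clean.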

Blue-Green Deployment:

- Maintain two production environments

- Switch traffic between them

- Instant rollback if problems occur

- ShopSmart keeps previous model active for quick rollback

Integration and API Design

Integrate model into existing systems:

API Design:

# ShopSmart recommendation API

POST /api/v1/recommendations

{

"user_id": "user123",

"context": {

"current_product": "prod456",

"session_history": ["prod789", "prod012"]

},

"num_recommendations": 10,

"filters": {

"exclude_categories": ["electronics"],

"price_max": 100

}

}

Response:

{

"recommendations": [

{"product_id": "prod345", "score": 0.92, "reason": "similar to recent views"},

{"product_id": "prod678", "score": 0.88, "reason": "popular in your interests"},

...

],

"metadata": {

"model_version": "v2.1.3",

"latency_ms": 15,

"timestamp": "2024-06-15T10:30:00Z"

}

}

Error Handling:

- Graceful degradation if model fails

- Fallback to simpler system

- ShopSmart fallbacks:

- Primary: Neural network ensemble

- Backup 1: Matrix factorization

- Backup 2: Popularity-based
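
The fallback chain above can be sketched as a loop over recommenders in priority order. The three functions below are hypothetical stand-ins that simulate a failure, an empty result, and a success; a real service would call the actual models.

```python
import logging

logger = logging.getLogger("recommendations")

def recommend_with_fallback(user_id, recommenders):
    """Try each recommender in priority order; return the first non-empty result.
    recommenders: list of (name, callable) pairs, highest priority first."""
    for name, recommend in recommenders:
        try:
            results = recommend(user_id)
            if results:
                return name, results
        except Exception:
            logger.exception("Recommender %s failed; trying next fallback", name)
    return "none", []

# Hypothetical stand-ins for the three tiers.
def ensemble(user_id):
    raise TimeoutError("model server unavailable")

def matrix_factorization(user_id):
    return []  # e.g. no embedding available for this user

def popularity(user_id):
    return ["prod345", "prod678"]

source, recs = recommend_with_fallback(
    "user123",
    [
        ("ensemble", ensemble),
        ("matrix_factorization", matrix_factorization),
        ("popularity", popularity),
    ],
)
```

Returning the name of the tier that answered makes it easy to monitor how often the service is degraded.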

Performance Optimization:

- Caching common predictions

- Batch processing when possible

- Model optimization (quantization, pruning)

- ShopSmart optimizations:

- Cache top products per category

- Pre-compute user embeddings

- Use TensorRT for neural network inference

Stage 10: Monitoring and Maintenance

Deployment isn’t the end—ongoing monitoring and maintenance ensure continued success.

Performance Monitoring

Track model performance in production:

Prediction Monitoring:

- Distribution of predictions (detecting drift)

- Prediction latency

- Error rates

- ShopSmart monitors:

- Daily prediction distributions match validation distributions

- 95th percentile latency < 50ms

- API error rate < 0.1%
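
A 95th percentile latency check like the one above can be computed from raw request timings. This sketch uses the nearest-rank definition of a percentile (other definitions interpolate and can give slightly different values); the latency samples are invented.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: the smallest observation with at least
    pct percent of observations at or below it."""
    if not values:
        raise ValueError("no observations")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

# Invented request latencies in milliseconds.
latencies_ms = [12, 15, 14, 18, 22, 16, 13, 48, 17, 19,
                15, 14, 16, 21, 13, 15, 17, 20, 14, 95]
p95 = percentile(latencies_ms, 95)
within_target = p95 < 50  # target taken from the list above
```

Percentiles are preferred over averages for latency because a small number of very slow requests can hide behind a healthy-looking mean.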

Business Metrics:

- Actual conversions, revenue, engagement

- Compare to expected performance

- ShopSmart tracks:

- Conversion rate (target: >3.2%)

- Revenue per user

- Recommendation diversity

Data Drift:

- Are input features changing?

- Is target distribution shifting?

- ShopSmart monitors:

- Weekly feature distribution comparisons

- Statistical tests for distribution changes

- Alerts if drift detected
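
One widely used statistic for comparing feature distributions is the Population Stability Index (PSI). The text does not say which test ShopSmart uses, so treat this as one possible choice. By convention, values below roughly 0.1 are often read as stable and values above roughly 0.25 as a significant shift.

```python
from math import log

def psi(expected, actual, bins=10):
    """Population Stability Index between a reference sample and a recent
    sample of one numeric feature. Bins are equal-width over the reference range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))

# Invented data: a reference week and a week where values shifted upward.
reference = list(range(100))
shifted = [x + 30 for x in reference]
drift_score = psi(reference, shifted)
drift_detected = drift_score > 0.25
```

In practice you would compute this per feature on a schedule and alert when any feature crosses the chosen threshold.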

Model Performance Degradation:

- Is accuracy declining over time?

- Compare online performance to offline validation

- ShopSmart evaluates:

- Weekly holdout set performance

- Online A/B test metrics

- Triggers retrain if performance drops >0.3%

Alerting and Incident Response

Set up alerts for problems:

Automated Alerts:

- Latency spikes

- Error rate increases

- Performance degradation

- Data quality issues

ShopSmart alert thresholds:

- Critical: Latency >200ms, error rate >1%, conversion rate <2.8%

- Warning: Latency >100ms, error rate >0.5%, conversion rate <3.0%
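
The thresholds above translate directly into a classification function. The function name and the argument units (milliseconds and percentages) are assumptions made for the sketch; the text does not say which latency statistic the thresholds apply to.

```python
def alert_level(latency_ms, error_rate_pct, conversion_rate_pct):
    """Classify current metrics against the alert thresholds listed above."""
    if latency_ms > 200 or error_rate_pct > 1.0 or conversion_rate_pct < 2.8:
        return "critical"
    if latency_ms > 100 or error_rate_pct > 0.5 or conversion_rate_pct < 3.0:
        return "warning"
    return "ok"

healthy = alert_level(latency_ms=45, error_rate_pct=0.05, conversion_rate_pct=3.3)
slow = alert_level(latency_ms=120, error_rate_pct=0.05, conversion_rate_pct=3.3)
```

Checking the critical conditions first ensures that a metric breaching both thresholds is reported at the higher severity.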

Incident Response:

- Runbooks for common issues

- Clear escalation procedures

- Rollback capabilities

ShopSmart procedures:

- Alert triggers

- On-call engineer notified

- Check dashboards for root cause

- If severe: rollback to previous model

- Investigate and fix

- Document incident and learnings

Model Updating and Retraining

Keep models current:

Scheduled Retraining:

- Regular updates with fresh data

- ShopSmart schedule:

- Weekly: Retrain on the last 3 months of data

- Monthly: Full retrain on all data

- Quarterly: Reevaluate architecture and features

Triggered Retraining:

- Retrain when performance drops

- When new data patterns emerge

- After major business changes

- ShopSmart triggers:

- Automatic retrain if conversion drops by more than 0.3 percentage points

- Manual retrain after catalog updates

- Retrain after major promotions or events
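
The triggers above can be combined into a single check that a scheduler runs periodically. This sketch reads the 0.3 threshold as percentage points of conversion rate, which is an interpretation rather than something the text states. The function and parameter names are hypothetical, and the catalog flag stands in for what the text describes as a manual decision.

```python
def should_retrain(baseline_conversion_pct, current_conversion_pct,
                   catalog_updated=False, max_drop_points=0.3):
    """Return (retrain?, reason) based on a conversion drop or a catalog change."""
    drop = baseline_conversion_pct - current_conversion_pct
    if drop > max_drop_points:
        return True, f"conversion dropped {drop:.2f} points"
    if catalog_updated:
        return True, "catalog updated"
    return False, "no trigger"

decision, reason = should_retrain(baseline_conversion_pct=3.3, current_conversion_pct=2.9)
```

Returning a reason alongside the decision makes the retraining log self-explanatory when someone later asks why a model was rebuilt.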

Continuous Learning:

- Online learning: Update model with each new example

- Incremental learning: Periodic small updates

- ShopSmart experiments:

- Test online learning for user embeddings

- Incremental updates for trending products

Model Evolution:

- Periodic evaluation of new algorithms

- Architecture improvements

- Feature engineering updates

- ShopSmart quarterly reviews:

- Evaluate new model architectures

- Test new features

- Consider new data sources

Documentation and Knowledge Transfer

Maintain comprehensive documentation:

Technical Documentation:

- Model architecture

- Feature definitions

- Training procedures

- Deployment configuration

- API documentation

Operational Runbooks:

- Monitoring procedures

- Incident response

- Retraining steps

- Rollback procedures

Business Documentation:

- Model capabilities and limitations

- Expected performance

- Use cases and non-use cases

- Decision thresholds and their business impact

ShopSmart maintains:

- Confluence wiki for documentation

- GitHub for code and configuration

- Runbook repository

- Quarterly stakeholder reports

Common Pipeline Pitfalls and Best Practices

Learn from common mistakes:

Pitfall 1: Inadequate Problem Definition

Problem: Jumping to modeling without clear goals

Consequences: Building something that doesn’t solve the actual business problem

Solution: Spend time upfront defining success metrics, constraints, and business value. Get stakeholder alignment.

Pitfall 2: Data Quality Issues

Problem: Skipping thorough data cleaning and validation

Consequences: “Garbage in, garbage out”—models learn from errors and biases

Solution: Invest time in data quality. Profile data systematically. Document cleaning decisions.

Pitfall 3: Data Leakage

Problem: Using information not available at prediction time

Consequences: Artificially inflated performance estimates; models fail in production

Solution: Carefully audit features for temporal validity. Think about what’s actually available when making predictions.

Pitfall 4: Improper Train-Test Split

Problem: Random splitting of temporal data, or data leakage between sets

Consequences: Overly optimistic performance estimates

Solution: Respect temporal order. Ensure strict separation between train/validation/test.
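
A chronological split can be sketched as follows. The record layout, the `date` key, and the cut-off dates are assumptions chosen for the example.

```python
from datetime import date

def temporal_split(records, train_end, valid_end, key="date"):
    """Split records chronologically: train up to train_end (inclusive),
    validation up to valid_end (inclusive), test afterwards."""
    train = [r for r in records if r[key] <= train_end]
    valid = [r for r in records if train_end < r[key] <= valid_end]
    test = [r for r in records if r[key] > valid_end]
    return train, valid, test

# Hypothetical interaction log: one record per month, January to October 2024.
records = [{"date": date(2024, m, 1), "user_id": f"user{m}"} for m in range(1, 11)]
train, valid, test = temporal_split(records, date(2024, 6, 30), date(2024, 8, 31))
```

Every training record predates every validation record, and every validation record predates every test record, so the model is never evaluated on data older than what it was trained on.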

Pitfall 5: Overfitting to Validation Set

Problem: Repeatedly tuning based on validation performance without held-out test set

Consequences: Optimistic bias; poor performance on truly new data

Solution: Keep test set completely separate until final evaluation. Use cross-validation.

Pitfall 6: Ignoring Business Constraints

Problem: Building models without considering deployment constraints (latency, interpretability, cost)

Consequences: Models that can’t actually be deployed

Solution: Include deployment requirements from the start. Involve engineering early.

Pitfall 7: No Baseline Comparison

Problem: Not establishing what you’re trying to beat

Consequences: Can’t assess whether ML adds value

Solution: Implement simple baseline (rules, heuristics, simple models). ML must beat this to justify complexity.

Pitfall 8: Insufficient Monitoring

Problem: Deploy and forget; assume models keep working

Consequences: Silent degradation; discover problems only when users complain

Solution: Comprehensive monitoring of both model and business metrics. Automated alerts.

Pitfall 9: Neglecting Fairness and Bias

Problem: Not auditing for demographic biases or fairness issues

Consequences: Discriminatory models; regulatory, legal, and ethical problems

Solution: Include fairness evaluation in pipeline. Test across demographic groups. Document bias mitigation.

Pitfall 10: Poor Documentation

Problem: Inadequate documentation of decisions, procedures, and learnings

Consequences: Knowledge loss when team members leave; difficult maintenance and debugging

Solution: Document throughout the pipeline. Maintain runbooks. Share knowledge.

Best Practices for Production ML Pipelines

Successful production pipelines share common practices:

Automation

Automate Repetitive Tasks:

- Data collection and preprocessing pipelines

- Model training workflows

- Deployment procedures

- Monitoring and alerting

ShopSmart automates:

- Daily data ETL pipelines

- Weekly retraining workflows

- Automated testing before deployment

- Continuous monitoring dashboards

Version Control

Version Everything:

- Code (Git)

- Data (DVC, data versioning tools)

- Models (MLflow, model registry)

- Configurations

- Environments (Docker, requirements files)

ShopSmart versions:

- Feature engineering code

- Training scripts and configs

- Model artifacts with metadata

- Dataset snapshots for reproducibility

Testing

Test Thoroughly:

- Unit tests for data processing functions

- Integration tests for pipeline components

- Model validation tests (performance thresholds)

- Data quality tests

- A/B tests for deployment

ShopSmart testing:

- pytest suite for all data processing

- Validation that retrained models beat baseline

- Data schema validation

- Shadow mode before production
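
As an illustration of data schema validation with pytest-style tests, the sketch below checks that each record has the expected columns and types. The schema itself is hypothetical; ShopSmart's real columns are not given in the text.

```python
# Hypothetical schema: column name -> required Python type.
EXPECTED_SCHEMA = {"user_id": str, "product_id": str, "price": float, "quantity": int}

def validate_row(row, schema=EXPECTED_SCHEMA):
    """Return a list of human-readable schema violations for one record."""
    errors = []
    for column, expected_type in schema.items():
        if column not in row:
            errors.append(f"missing column: {column}")
        elif not isinstance(row[column], expected_type):
            errors.append(
                f"{column}: expected {expected_type.__name__}, "
                f"got {type(row[column]).__name__}"
            )
    return errors

def test_valid_row_passes():
    row = {"user_id": "user123", "product_id": "prod456", "price": 19.99, "quantity": 2}
    assert validate_row(row) == []

def test_bad_row_is_reported():
    row = {"user_id": "user123", "price": "19.99", "quantity": 2}
    assert validate_row(row) == [
        "missing column: product_id",
        "price: expected float, got str",
    ]
```

Saved in a file whose name starts with `test_`, these functions are collected automatically by pytest, so a schema change that breaks the pipeline fails the build before deployment.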

Reproducibility

Ensure Reproducibility:

- Set random seeds

- Document dependencies and versions

- Save complete pipeline configurations

- Version datasets

ShopSmart ensures:

- Fixed random seeds throughout

- Pinned package versions (requirements.txt)

- Saved hyperparameters for each model

- Data provenance tracking
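
Two of these practices, fixed seeds and saved hyperparameters, can be sketched with the standard library. The function names and the short configuration hash are illustrative assumptions; frameworks such as NumPy or PyTorch have their own seeding calls that would need to be set as well.

```python
import hashlib
import json
import random

def run_config(seed, hyperparameters):
    """Bundle the seed and hyperparameters with a short hash so that two runs
    can be checked for identical configuration."""
    config = {"seed": seed, "hyperparameters": hyperparameters}
    serialized = json.dumps(config, sort_keys=True)
    config["config_hash"] = hashlib.sha256(serialized.encode("utf-8")).hexdigest()[:12]
    return config

def sample_training_ids(ids, k, seed):
    """Draw a reproducible sample using a dedicated, seeded generator."""
    rng = random.Random(seed)
    return rng.sample(ids, k)

config = run_config(seed=42, hyperparameters={"learning_rate": 0.01, "dropout": 0.3})
first = sample_training_ids(list(range(100)), k=5, seed=config["seed"])
second = sample_training_ids(list(range(100)), k=5, seed=config["seed"])
```

Using a dedicated `random.Random` instance rather than the global generator means other code that calls `random` cannot silently change the sequence.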

Collaboration

Enable Team Collaboration:

- Shared code repositories

- Centralized experiment tracking

- Documentation culture

- Code reviews

ShopSmart practices:

- Pull request reviews for all changes

- MLflow for experiment sharing

- Weekly team syncs on model performance

- Collaborative documentation

Conclusion: From Pipeline to Production Success

The machine learning pipeline transforms an abstract idea (“let’s use ML to solve this problem”) into a concrete, production-ready system delivering business value. While the specifics vary by problem, the fundamental structure remains consistent: define the problem, prepare data, build models, evaluate thoroughly, deploy carefully, and monitor continuously.

Understanding this pipeline helps you:

Plan Realistically: Allocate time and resources appropriately. Data preparation and deployment often take more time than modeling.

Avoid Common Pitfalls: Learn from others’ mistakes. Data leakage, improper evaluation, and inadequate monitoring cause many project failures.

Build Production-Ready Systems: Move beyond Jupyter notebooks to deployed models serving real users.

Communicate Effectively: Speak intelligently about ML projects with stakeholders, engineers, and data scientists.

Make Better Decisions: Know when to invest more in data quality versus model sophistication, when to deploy versus iterate further.

ShopSmart’s recommendation system succeeded because the team followed the pipeline systematically: clearly defined success criteria, thoroughly prepared data, engineered predictive features, rigorously evaluated models, deployed carefully with A/B testing, and continuously monitored performance. The 1.3 percentage-point conversion improvement translates to $6.5M in annual revenue, a substantial return on the ML investment.

The pipeline isn’t a rigid formula but a flexible framework. Adapt it to your context—some projects need more emphasis on data collection, others on deployment infrastructure. Small projects might combine stages; large projects might have dedicated teams for each.

As you build ML systems, remember: the goal isn’t just training accurate models, it’s deploying systems that create value. That requires mastering the entire pipeline from initial data to production deployment and beyond. The most sophisticated model is worthless if it never leaves your laptop; the simplest model can deliver tremendous value if it’s properly integrated into business processes.

Machine learning engineering is an emerging discipline focused on building production ML systems. It combines data science skills (statistics, modeling) with software engineering practices (testing, deployment, monitoring). Success requires competence throughout the pipeline, not just excellence at one stage.

Start with simpler pipelines and evolve them as you learn. Automate progressively. Document continuously. Learn from failures. Share knowledge with your team. Build incrementally, validating at each stage before proceeding.

The machine learning pipeline is your roadmap from idea to impact. Master it, and you’ll be equipped to build ML systems that actually work in the real world—not just in theory, but in production, delivering measurable business value day after day.