DeepMind CEO Warns China Is Closing AI Gap Faster Than Expected: “Maybe Only Months Behind”

In a stark assessment that challenges prevailing assumptions about America’s lead in artificial intelligence, Google DeepMind CEO Demis Hassabis said on January 15, 2026, that Chinese AI models may now be just “a matter of months” behind their Western counterparts, a far narrower gap than the years-long lag industry observers had previously estimated.

Speaking on CNBC’s newly launched podcast “The Tech Download,” Hassabis, who leads one of the world’s most advanced AI research laboratories and drives much of Google’s AI strategy including the Gemini assistant, provided a nuanced evaluation of China’s rapid progress while highlighting a critical distinction between catching up to existing capabilities and pioneering entirely new breakthrough technologies.

Rapid Convergence at the AI Frontier

“Maybe they’re only a matter of months behind at this point,” Hassabis stated, emphasizing that Chinese AI models have drawn much closer to what researchers call “the frontier”—the cutting edge of AI capabilities—than most Western observers realized. This assessment marks a significant shift from conventional wisdom just one or two years ago, when many experts estimated China lagged by several years.

The rapid progress became undeniable throughout 2025, when Chinese AI firms including DeepSeek, Alibaba, Baidu, Tencent, and newer entrants like Moonshot AI and Zhipu released a series of advanced language models that scored remarkably close to their US peers on major AI benchmarks. DeepSeek in particular made headlines with its DeepSeek-V3.2-Speciale model, which the company claimed matched Google DeepMind’s Gemini 3 Pro in reasoning capabilities despite being developed with significantly fewer computational resources and with restricted access to cutting-edge semiconductors due to US export controls.

Hassabis credited China’s progress to the country’s formidable engineering capabilities, noting that even reaching the frontier is a major feat: “That’s already very difficult, because you need world-class engineering already to be able to do that. And China definitely has that.” The country’s largest tech companies, with Alibaba, Tencent, and Baidu each employing tens of thousands of engineers, have poured billions of dollars into AI development, recognizing the technology’s strategic importance.

The Innovation Question: Catching Up vs. Breaking Through

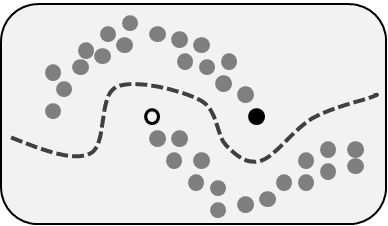

However, Hassabis drew a critical distinction between replicating existing technologies and creating fundamentally new breakthroughs that expand the boundaries of what AI can do. “The question is, can they innovate something new beyond the frontier?” he asked. “So I think they’ve shown they can catch up…and be very close to the frontier…But can they actually innovate something new, like a new transformer…that gets beyond the frontier? I don’t think that’s been shown yet.”

He was referring to the Transformer architecture, a groundbreaking innovation developed by Google researchers in 2017 that now underpins virtually all modern large language models, including those behind OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, and Meta’s Llama. A foundational scientific breakthrough of this kind poses a different challenge from engineering excellence alone.

“To invent something is about 100 times harder than it is to copy it,” Hassabis explained. “That’s the next frontier really, and I haven’t seen evidence of that yet, but it’s very difficult.”

Hassabis compared DeepMind to Bell Labs, the legendary research institution founded in 1925 whose breakthroughs included the transistor, the laser, and information theory, and whose researchers went on to win multiple Nobel Prizes. “The scientific innovation part, that’s a lot harder,” he said, suggesting that DeepMind’s focus on “exploratory innovation” rather than merely “scaling out what’s known today” is a crucial differentiator.

Semiconductor Access Remains a Limiting Factor

One significant constraint on China’s AI development remains access to the most advanced semiconductors. US export restrictions have limited Chinese companies’ ability to purchase Nvidia’s most powerful AI training chips, such as the H100 and H200 GPUs widely used in Western AI development.

Although domestic players such as Huawei are working to bridge this gap, their chips still lag significantly behind Nvidia’s offerings in raw computational power and energy efficiency. This hardware disadvantage forces Chinese AI developers to lean on software optimizations and algorithmic efficiency, skills that may ultimately prove valuable even if chip restrictions are eventually relaxed.

Hassabis’s assessment echoes remarks Nvidia CEO Jensen Huang made in late 2025, when he said that while the US maintains a lead, China is “not far behind” in both AI infrastructure and model development. The gap, Huang suggested, is narrower in practical capabilities than many realize, even if the US retains advantages in the most cutting-edge research.

DeepMind’s Intensifying Role at Google

Hassabis’s comments come as he and Google CEO Sundar Pichai are now in daily contact (“every day,” as Hassabis puts it) to coordinate Google’s AI strategy amid what he calls a “ferocious competitive environment.” This level of executive coordination underscores how central AI has become to Google’s business and how seriously the company takes competitive threats from both domestic rivals like OpenAI and international competitors.

After initially appearing to lag OpenAI following the November 2022 launch of ChatGPT, Google staged a significant comeback throughout 2025, driven primarily by DeepMind’s research breakthroughs. Alphabet’s stock posted its best annual performance since 2009 as investors rewarded Google’s renewed AI momentum.

In 2023, Google merged its Google Brain research division with DeepMind, which it had acquired in 2014 for approximately £400 million ($500 million). The combined unit now serves as “the engine room” of Google’s AI efforts, according to Hassabis.

“All the AI technologies is done by this group…and then it’s diffused across all of these incredible products right across Google,” Hassabis explained. “And the last couple of years, we’ve been building that backbone, so not just the models, but also…architecting the entire infrastructure of Google so that…these things can ship incredibly quickly.”

Google’s challenge, Hassabis admitted, was never invention—after all, Google researchers created the Transformer architecture that made modern AI possible. Rather, the company was “maybe a little bit slow to commercialize it and scale it,” he said. “That’s what OpenAI and others did very well. The last two, three years, I think we’ve had to come back to almost our startup or entrepreneurial roots and be scrappier, be faster.”

Open Source as China’s Strategic Advantage

Beyond pure technical capabilities, Chinese AI firms have made a strategic choice that may yield long-term dividends: a near-unanimous embrace of open source. Throughout 2025, companies like DeepSeek, Zhipu, and Moonshot released their models openly, allowing researchers and developers worldwide to use, modify, and build upon their work.

This approach stands in contrast to the closed-source strategies of leading US companies like OpenAI and Anthropic, though American developers have also released open models: OpenAI released gpt-oss, its first open-weight language model since GPT-2, in August 2025, and the nonprofit Allen Institute for AI released Olmo 3 in November 2025.

The open-source strategy has earned Chinese AI firms significant goodwill in the global AI research community and may give them a “long-term trust advantage,” according to some AI researchers. One MIT Technology Review analysis predicted: “In 2026, expect more Silicon Valley apps to quietly ship on top of Chinese open models, and look for the lag between Chinese releases and the Western frontier to keep shrinking—from months to weeks, and sometimes less.”

Implications for the Global AI Race

Hassabis’s assessment has significant implications for policymakers, businesses, and researchers as the AI race intensifies. If China can indeed match Western capabilities within months rather than years, the competitive landscape becomes far more dynamic than previously assumed.

For US policymakers, the rapid convergence suggests that export controls on semiconductor technology, while creating obstacles, have not prevented China from advancing its AI capabilities at a rapid pace. Chinese researchers and companies have adapted by improving algorithmic efficiency, leveraging China’s vast data resources, and mobilizing extraordinary engineering talent.

For businesses, the shrinking gap means that competitive advantages based on AI superiority may be shorter-lived than anticipated. Companies will need to continuously innovate rather than relying on a sustained technological lead over Chinese competitors.

For the broader AI research community, the question of whether China can move from fast-follower to innovation leader remains open. Hassabis’s skepticism about China’s ability to pioneer truly novel architectures like the Transformer may prove prescient, or it may underestimate the innovative potential unleashed when thousands of world-class researchers are given massive resources and strategic focus.

What seems clear is that the AI race is far closer than many Western observers previously believed, and it is getting closer every month. In Hassabis’s telling, China has already shown it can come within striking distance of existing capabilities. The next race, to define what comes after the Transformer era, is just beginning, and its outcome remains uncertain.