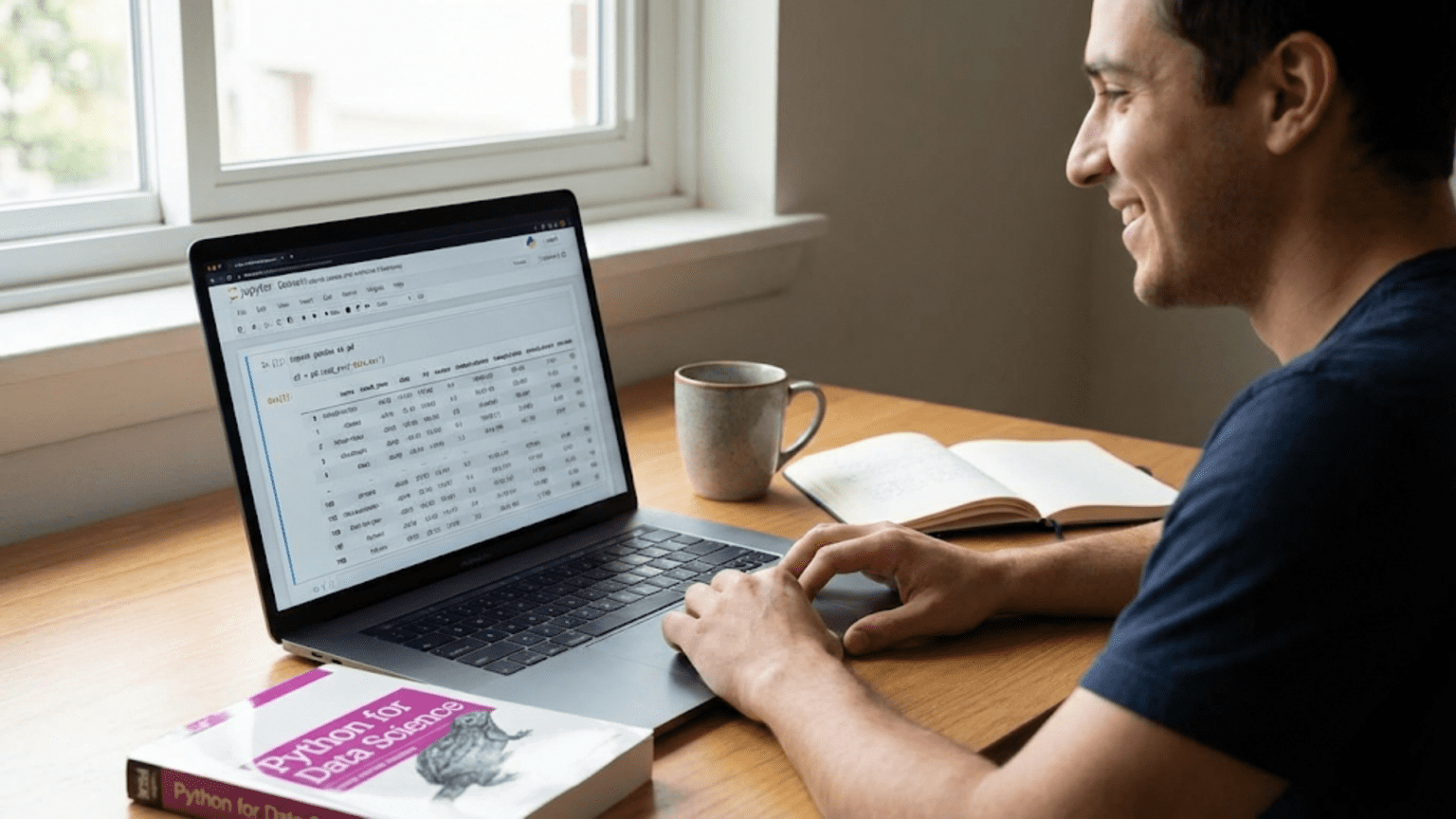

Your Gateway to Real Data Analysis

You have learned about DataFrames, practiced with small examples created in code, and understood the theoretical foundations of data science. Now comes the exciting moment when theory meets practice: loading real data from actual files. The CSV (comma-separated values) file format stores a large share of the tabular data you will encounter as a data scientist. Mastering the seemingly simple task of reading CSV files unlocks your ability to work with real datasets rather than toy examples.

This moment feels significant because it represents a threshold crossing. Before you can read files, data science feels abstract—practicing with made-up data in tutorials. After you can confidently load files, analyze them, and extract insights, you are actually doing data science with real information. The tools that seemed mysterious become practical instruments you control. The gap between tutorial examples and real projects shrinks dramatically.

Yet reading CSV files involves more complexity than you might expect. Real-world files come with character encoding issues, inconsistent delimiters, missing values coded in unusual ways, mixed data types in columns, malformed rows, and dozens of other quirks that never appear in clean tutorial examples. This guide walks you through not just the mechanics of reading CSV files, but the practical considerations and problem-solving approaches you need when files misbehave. By the end, you will confidently handle CSV files in all their messy reality.

Understanding CSV Files: What You Are Actually Reading

Before diving into pandas code, understanding what CSV files actually contain helps you anticipate problems and make sense of the options you will use when reading them.

A CSV file is fundamentally just a text file where data is organized into rows and columns using simple formatting conventions. Each line represents one row of data. Within each row, values are separated by commas (hence “comma-separated”). The first row typically contains column names, though not always. That is the entire specification at its most basic level.

Here is what a simple CSV file looks like if you open it in a text editor:

name,age,city,salary
Alice,25,New York,70000
Bob,30,Los Angeles,80000
Charlie,35,Chicago,75000
David,28,Houston,72000

The first line lists column names: name, age, city, salary. Each subsequent line contains data for one person, with values separated by commas. This human-readable format explains CSV’s popularity—you can open CSV files in any text editor, examine them, and even edit them manually if needed.

However, this simplicity creates complications. What happens when a value itself contains a comma? What if a field contains a line break? What about values with quotes? The CSV standard handles these through escaping and quoting conventions, typically surrounding problematic values with quotation marks:

name,age,city,description
Alice,25,New York,"Loves Python, data science, and hiking"
Bob,30,Los Angeles,"Said ""Hello World"" to programming"

The description for Alice contains commas, so the entire field is quoted. Bob’s description contains quotes within the text, which are escaped by doubling them. These conventions work but add complexity.
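To see that pandas follows these quoting conventions, the sketch below parses the sample above from an in-memory string. Using io.StringIO in place of a real file is an assumption made only to keep the example self-contained.

```python
import io

import pandas as pd

# The quoted sample from above, held in a string instead of a file
csv_text = '''name,age,city,description
Alice,25,New York,"Loves Python, data science, and hiking"
Bob,30,Los Angeles,"Said ""Hello World"" to programming"
'''

quoted_df = pd.read_csv(io.StringIO(csv_text))

print(quoted_df.shape)                    # (2, 4): the embedded commas did not create extra columns
print(quoted_df.loc[0, 'description'])    # Loves Python, data science, and hiking
print(quoted_df.loc[1, 'description'])    # Said "Hello World" to programming
```

The surrounding quotes are removed and the doubled quotes collapse back into single ones, so the values arrive in the DataFrame as the author intended.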

Not all CSV files use commas as separators. Despite the name, some files use semicolons, tabs, pipes, or other characters as delimiters. These are still called CSV files colloquially even though they are technically “character-separated values” rather than specifically comma-separated. Excel in many European locales exports with semicolons because the comma already serves as the decimal separator there.

Character encoding represents another source of complications. Text files can encode characters in different ways (UTF-8, Latin-1, Windows-1252, etc.), and reading a file with the wrong encoding produces garbled text or errors. Modern files typically use UTF-8, but older files or files from specific regions might use different encodings.

Missing values appear inconsistently across files. Some files leave cells empty, some write “NA” or “NULL”, some use question marks or dashes, and some put the string “missing”. Pandas needs to know what represents missing data to handle it properly.

Understanding these realities prepares you for the parameters you will use with read_csv() and the problems you might encounter. Reading CSV files is not always as simple as calling one function with the filename—you often need to specify additional information to handle real-world file quirks.

Your First CSV File: The Basic Read Operation

Let me walk you through reading your first CSV file step by step, starting with the simplest case and building up to handle complications.

First, you need a CSV file to read. You can create one yourself in Excel or a text editor, download sample data from the internet, or use a file from the pandas documentation. For this example, let me show you how to create a simple CSV file in Python that you can then read back:

import pandas as pd

# Create sample data
data = {
    'product': ['Laptop', 'Phone', 'Tablet', 'Monitor', 'Keyboard'],
    'price': [1200, 800, 500, 300, 100],
    'quantity': [5, 15, 8, 12, 25],
    'category': ['Electronics', 'Electronics', 'Electronics', 'Electronics', 'Accessories']
}
df_original = pd.DataFrame(data)

# Save to CSV file
df_original.to_csv('products.csv', index=False)
print("CSV file created successfully")

The index=False parameter prevents pandas from writing the row index as an extra column in the file, which is usually what you want. Now you have a file called products.csv in your working directory.

Reading this file is remarkably simple:

# Read the CSV file
df = pd.read_csv('products.csv')

# Display the DataFrame
print(df)

That is it. Two lines of code load the file and display its contents. The read_csv() function handles opening the file, parsing the CSV format, inferring data types for each column, creating a DataFrame, and returning it to you. This simplicity is why pandas has become the standard tool for data analysis in Python.

Examine what you loaded:

# Check the shape
print(f"Shape: {df.shape}")

# Check data types
print(f"\nData types:\n{df.dtypes}")

# View first few rows
print(f"\nFirst rows:\n{df.head()}")

# Get summary statistics
print(f"\nSummary:\n{df.describe()}")

These commands should become automatic whenever you load a new file. They tell you how much data you have, what types pandas inferred for each column, what the data looks like, and basic statistics about numerical columns. This immediate examination catches problems early.

Notice that pandas automatically used the first row as column names. It detected that ‘product’ and ‘category’ should be string columns (displayed as object in df.dtypes on pandas 2.x and earlier), while ‘price’ and ‘quantity’ are integers. This automatic type inference works well most of the time but occasionally needs correction.

Specifying File Paths: Where Is Your CSV File?

Understanding file paths is crucial because pandas needs to know where to find your CSV file. Path issues cause a large proportion of beginner frustration with reading files.

If your CSV file is in the same directory where you are running Python (your working directory), you can use just the filename:

df = pd.read_csv('products.csv')

However, files are often stored elsewhere. You can specify full paths:

# Windows path
df = pd.read_csv('C:/Users/YourName/Documents/data/products.csv')

# Mac/Linux path
df = pd.read_csv('/home/yourname/data/products.csv')

# Relative paths
df = pd.read_csv('../data/products.csv') # Go up one directory, then into data
df = pd.read_csv('data/products.csv') # Look in data subdirectory

Windows paths traditionally use backslashes, but forward slashes work in Python on all platforms including Windows, so using forward slashes avoids complications. If you must use backslashes on Windows, either escape them (C:\\Users\\...) or use raw strings (r'C:\Users\...').

Check your current working directory to understand relative paths:

import os

print(os.getcwd()) # Print current working directory

This shows where Python is looking for files when you use relative paths. If your file is not in that directory and you use just the filename, you will get a “file not found” error.
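One way to catch path mistakes before pandas raises an error is to build the path with pathlib and check that it exists. This is a minimal sketch: the data/products.csv location is hypothetical, so adjust it to wherever your file actually lives.

```python
from pathlib import Path

import pandas as pd

# Hypothetical location; change this to match your own project layout
data_path = Path('data') / 'products.csv'

print(f"Working directory: {Path.cwd()}")
print(f"Resolved path:     {data_path.resolve()}")

if data_path.exists():
    # read_csv accepts Path objects as well as plain strings
    df = pd.read_csv(data_path)
    print(df.head())
else:
    print(f"No file at {data_path.resolve()}; check the path or the working directory")
```

Printing the resolved absolute path shows exactly where Python looked, which usually makes a misplaced file or an unexpected working directory obvious.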

You can read files directly from URLs:

url = 'https://example.com/data/products.csv'
df = pd.read_csv(url)

This is incredibly useful for tutorials and examples where data is hosted online. Many educational datasets are available via direct URLs.

For reproducible analysis, organizing your project files systematically helps. A common structure is:

project/
├── data/
│   ├── raw/
│   │   └── products.csv
│   └── processed/
├── notebooks/
│   └── analysis.ipynb
└── scripts/
    └── clean_data.py

With this structure, a notebook in the notebooks directory would read data using:

df = pd.read_csv('../data/raw/products.csv')

Good file organization prevents path-related confusion and makes your work reproducible.

Handling Different Delimiters and Separators

Despite the name “comma-separated values,” many CSV files use different separators. Pandas handles these through the sep or delimiter parameter.

If your file uses semicolons:

df = pd.read_csv('data.csv', sep=';')

Tab-separated files (TSV):

df = pd.read_csv('data.tsv', sep='\t')

Other delimiters:

df = pd.read_csv('data.txt', sep='|') # Pipe-separated

If you are unsure what delimiter a file uses, open it in a text editor and look at the first few lines. The pattern should be obvious.

Pandas can also automatically detect delimiters using the Python csv module’s “sniffer”:

df = pd.read_csv('data.csv', sep=None, engine='python')

Setting sep=None with the Python engine tells pandas to detect the delimiter automatically. This works reasonably well but is slower than specifying the delimiter explicitly.
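If you would rather see which delimiter was detected before handing the file to pandas, you can call the standard library's csv.Sniffer yourself. The sketch below runs it on a hypothetical semicolon-delimited sample held in a string. With a real file you would read the first few kilobytes instead.

```python
import csv
import io

import pandas as pd

# A few lines from a hypothetical semicolon-delimited file
sample = "name;age;city\nAlice;25;New York\nBob;30;Los Angeles\n"

# Restrict the candidates to delimiters you consider plausible
dialect = csv.Sniffer().sniff(sample, delimiters=',;\t|')
print(repr(dialect.delimiter))

# Pass the detected delimiter to pandas explicitly
sniffed_df = pd.read_csv(io.StringIO(sample), sep=dialect.delimiter)
print(sniffed_df)
```

Sniffing is a heuristic, so treat the result as a suggestion and confirm it against the first few rows of the loaded DataFrame.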

Some files use multiple characters as delimiters or have inconsistent spacing. Older code often handles files with any amount of whitespace (spaces or tabs) between values using the delim_whitespace parameter, but it is deprecated since pandas 2.2 in favor of sep=r'\s+':

df = pd.read_csv('data.txt', delim_whitespace=True) # Deprecated since pandas 2.2

The regex form does the same job. Write the pattern as a raw string so Python does not treat the backslash as an escape:

df = pd.read_csv('data.txt', sep=r'\s+') # One or more spaces/tabs

Other regex patterns also work for complex delimiters, but they require engine='python'.

When creating CSV files yourself, stick with commas and save yourself future headaches. When reading files from others, be prepared to check and specify the correct delimiter.
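Semicolon-delimited exports from locales that use a decimal comma usually need more than sep alone, because the numbers would otherwise be read as text. The sketch below assumes a hypothetical export that uses semicolons between fields, commas as decimal marks, and periods as thousands separators, and it relies on the decimal and thousands parameters of read_csv.

```python
import io

import pandas as pd

# Hypothetical export: ';' between fields, ',' as decimal mark, '.' as thousands separator
csv_text = "product;price;units\nLaptop;1.299,50;5\nPhone;799,99;15\n"

eu_df = pd.read_csv(io.StringIO(csv_text), sep=';', decimal=',', thousands='.')

print(eu_df)
print(eu_df.dtypes)  # price should be a float column, not text
```

Checking dtypes afterwards is the quickest way to confirm the numbers were parsed as numbers rather than left as strings.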

Managing Column Names and Headers

The first row of CSV files typically contains column names, and pandas uses them automatically. However, files do not always follow this convention.

If your file has no header row, tell pandas:

df = pd.read_csv('data.csv', header=None)

Pandas assigns default column names: 0, 1, 2, etc. You probably want better names:

df = pd.read_csv('data.csv', header=None,
                 names=['product', 'price', 'quantity'])

If the header is not in the first row:

df = pd.read_csv('data.csv', header=2) # Third row is the header

Sometimes files have multiple header rows or metadata at the top:

df = pd.read_csv('data.csv', skiprows=3) # Skip first 3 rows

You can skip specific rows rather than a count:

df = pd.read_csv('data.csv', skiprows=[0, 2, 4]) # Skip rows 0, 2, and 4

Or skip rows based on a condition:

df = pd.read_csv('data.csv', skiprows=lambda x: 0 < x < 5) # Skip rows 1 through 4

Footers with notes or totals cause problems if included:

df = pd.read_csv('data.csv', skipfooter=2, engine='python') # Skip last 2 rows

If column names need renaming after loading:

df = pd.read_csv('data.csv')
df = df.rename(columns={'old_name': 'new_name', 'Price': 'price'})

Column names with spaces or special characters cause problems later, so cleaning them immediately helps:

# Convert to lowercase and replace spaces with underscores
df.columns = df.columns.str.lower().str.replace(' ', '_')

Handling Missing Values Properly

Real data contains missing values, and how you handle them affects analysis validity. Pandas recognizes several missing value indicators by default, but you often need to specify others.

Default missing value indicators include empty cells, “NA”, “N/A”, “nan”, “NaN”, and a few others. These automatically become NaN (Not a Number) in your DataFrame:

df = pd.read_csv('data.csv')
print(df.isnull().sum()) # Count missing values per column

If your file uses different missing value indicators:

df = pd.read_csv('data.csv', na_values=['', 'missing', '?', '-'])

Different columns might have different missing indicators:

df = pd.read_csv('data.csv', na_values={
    'age': ['', 'unknown'],
    'price': ['', '0', 'N/A']
})

Sometimes you want to preserve certain strings that pandas would normally interpret as missing:

df = pd.read_csv('data.csv', keep_default_na=False, na_values=[''])

This prevents pandas from converting “NA” (like the abbreviation for North America) into missing values while still treating empty strings as missing.
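The difference is easy to check on a small example. The sketch below uses a hypothetical region column in which “NA” is a legitimate code, and compares the default behavior with keep_default_na=False.

```python
import io

import pandas as pd

# Hypothetical data: "NA" is a real region code, and the third row has no region
csv_text = "region,sales\nNA,100\nEU,200\n,300\n"

default_df = pd.read_csv(io.StringIO(csv_text))
kept_df = pd.read_csv(io.StringIO(csv_text), keep_default_na=False, na_values=[''])

# Default settings: "NA" and the empty cell are both treated as missing
print(default_df['region'].isna().sum())  # 2

# Custom settings: only the empty cell is treated as missing
print(kept_df['region'].isna().sum())     # 1
print(kept_df['region'].tolist())
```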

The na_filter parameter can improve performance when you know your file has no missing values:

df = pd.read_csv('data.csv', na_filter=False)

This skips missing value detection entirely, which can be much faster for large files.

After loading, examine missing values:

# Missing values per column
print(df.isnull().sum())

# Rows with any missing values
print(df[df.isnull().any(axis=1)])

# Percentage missing per column
print(df.isnull().sum() / len(df) * 100)

Understanding how much data is missing guides decisions about imputation or removal.

Specifying and Correcting Data Types

Pandas infers data types automatically, but sometimes it guesses wrong or you want specific types for memory efficiency or correctness.

Check what types were inferred:

df = pd.read_csv('data.csv')
print(df.dtypes)

Specify types explicitly during reading:

df = pd.read_csv('data.csv', dtype={
    'customer_id': str, # Force to string even if numeric
    'age': 'int64', # Raises an error if the column contains missing values
    'salary': 'float64',
    'category': 'category' # Categorical type saves memory
})

Explicit types prevent mistakes such as customer IDs being read as numbers (which permits mathematical operations that make no sense) or zip codes losing their leading zeros when pandas converts them to integers.
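The leading-zero problem is easy to reproduce. The following sketch reads the same hypothetical two-row file twice, once with inferred types and once with the identifier columns forced to strings.

```python
import io

import pandas as pd

# Hypothetical data with identifiers that start with zeros
csv_text = "customer_id,zipcode\n00123,02134\n00456,90210\n"

inferred_df = pd.read_csv(io.StringIO(csv_text))
explicit_df = pd.read_csv(io.StringIO(csv_text),
                          dtype={'customer_id': str, 'zipcode': str})

print(inferred_df['zipcode'].tolist())  # [2134, 90210]: the leading zero is gone
print(explicit_df['zipcode'].tolist())  # ['02134', '90210']: preserved as text
```

Once the zeros have been dropped during loading they cannot be recovered reliably afterwards, which is why the type belongs in the read_csv call rather than in a later conversion.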

The category data type dramatically reduces memory for columns with many repeated values:

# Regular string column
df = pd.read_csv('data.csv')
print(df['category'].memory_usage(deep=True))

# Categorical column
df = pd.read_csv('data.csv', dtype={'category': 'category'})
print(df['category'].memory_usage(deep=True))

For large datasets, categorical types can reduce memory usage by 80% or more for appropriate columns, meaning those with few distinct values relative to the number of rows.

Date columns need special handling:

df = pd.read_csv('data.csv', parse_dates=['date_column'])

This automatically converts the column to datetime type. For multiple date columns:

df = pd.read_csv('data.csv', parse_dates=['order_date', 'ship_date'])

If your dates have unusual formats, pass date_format (available in pandas 2.0 and later):

df = pd.read_csv('data.csv',
                 parse_dates=['date'],
                 date_format='%d/%m/%Y') # Day/Month/Year format

Convert types after loading if needed:

df['age'] = pd.to_numeric(df['age'], errors='coerce') # Invalid values become NaN
df['date'] = pd.to_datetime(df['date'], errors='coerce') # Invalid values become NaT
df['category'] = df['category'].astype('category')

Handling Character Encoding Issues

Character encoding problems manifest as garbled text or errors when reading files. These issues arise because different systems encode text characters differently.

Most modern files use UTF-8 encoding, which pandas assumes by default:

df = pd.read_csv('data.csv') # Assumes UTF-8

If you see garbled characters (like � or strange symbols), try different encodings, one at a time:

df = pd.read_csv('data.csv', encoding='latin1')
df = pd.read_csv('data.csv', encoding='ISO-8859-1') # Same encoding as latin1
df = pd.read_csv('data.csv', encoding='cp1252') # Windows encoding

Common encodings include UTF-8 (modern standard), Latin-1 or ISO-8859-1 (Western European), cp1252 (Windows Western European), and UTF-16 (sometimes used by Excel).

If reading fails with an encoding error and you cannot identify the right encoding, the encoding_errors parameter (pandas 1.3 and later) controls how undecodable bytes are handled:

df = pd.read_csv('data.csv', encoding='utf-8', encoding_errors='ignore')
df = pd.read_csv('data.csv', encoding='utf-8', encoding_errors='replace')

The ignore option drops undecodable bytes, while replace substitutes the replacement character (�) for them. Both prevent crashes but may lose information.
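Before resorting to ignore or replace, it is often worth trying a short list of likely encodings in order. The helper below, read_csv_with_fallback, is a hypothetical function written for this illustration and is not part of pandas. The sketch first writes a small cp1252 file to a temporary directory so there is something non-UTF-8 to read.

```python
import os
import tempfile

import pandas as pd

# Write a small cp1252-encoded file so the example has a non-UTF-8 input
tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, 'cities.csv')
with open(path, 'w', encoding='cp1252', newline='') as f:
    f.write("city,country\nMünchen,Germany\nSão Paulo,Brazil\n")


def read_csv_with_fallback(path, encodings=('utf-8', 'cp1252', 'latin1')):
    """Try each encoding in order; return the DataFrame and the encoding that worked."""
    for enc in encodings:
        try:
            return pd.read_csv(path, encoding=enc), enc
        except UnicodeDecodeError:
            continue  # This encoding cannot decode the file; try the next one
    raise ValueError(f"None of {encodings} could decode {path}")


cities_df, used_encoding = read_csv_with_fallback(path)
print(used_encoding)
print(cities_df)
```

Keep in mind that latin1 can decode any byte sequence, so reaching it never fails. A successful read therefore does not prove the encoding is correct, and the text still needs a visual check.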

To detect encoding automatically, use the chardet library:

import chardet

with open('data.csv', 'rb') as file:
    result = chardet.detect(file.read(100000)) # Check first 100 KB

print(result['encoding'])
df = pd.read_csv('data.csv', encoding=result['encoding'])

This adds a dependency but can save substantial debugging time with problematic files. The detected encoding is a statistical guess, so check the loaded text before trusting it.

Reading Large Files Efficiently

Large CSV files (hundreds of megabytes or gigabytes) require special handling to avoid memory errors or excessive loading times.

Read only specific columns:

df = pd.read_csv('large_file.csv', usecols=['name', 'age', 'city'])

This dramatically reduces memory usage when you need only a subset of columns.

Read in chunks:

chunk_size = 10000
chunks = []

for chunk in pd.read_csv('large_file.csv', chunksize=chunk_size):
    # Process each chunk
    processed_chunk = chunk[chunk['age'] > 18]
    chunks.append(processed_chunk)

df = pd.concat(chunks, ignore_index=True)

Processing in chunks avoids loading the entire file into memory at once, which lets you analyze files larger than your RAM as long as the filtered or aggregated result itself fits in memory.
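When even the filtered rows would be too large to keep, each chunk can be reduced to a small summary that is merged into running totals. The sketch below assumes a hypothetical file with category and amount columns, and uses an in-memory string as a stand-in for a large file.

```python
import io

import pandas as pd

# Stand-in for a large file: 1,000 rows of hypothetical data held in memory
rows = ["category,amount"] + [f"{'A' if i % 2 else 'B'},{i}" for i in range(1000)]
big_csv = io.StringIO("\n".join(rows))

totals = {}
for chunk in pd.read_csv(big_csv, chunksize=100):
    # Reduce the chunk to one number per category, then discard the chunk
    chunk_sums = chunk.groupby('category')['amount'].sum()
    for category, amount in chunk_sums.items():
        totals[category] = totals.get(category, 0) + amount

print(totals)
```

Only the dictionary of totals survives between iterations, so memory use stays roughly proportional to the chunk size rather than the file size.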

Read only a subset of rows:

df = pd.read_csv('large_file.csv', nrows=10000) # Read first 10,000 rows

Useful for exploring file structure or testing code before processing the full dataset.

Use appropriate data types to reduce memory:

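df = pd.read_csv('large_file.csv', dtype={
    'id': 'int32', # Instead of int64
    'category': 'category', # Instead of object
    'value': 'float32' # Instead of float64
})

Smaller numeric types and categorical types can halve memory usage. Integers lose nothing as long as every value fits in the smaller type's range, but float32 keeps only about seven significant digits, so check that the reduced precision is acceptable for your data.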
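The effect of these dtype choices can be measured with memory_usage(deep=True). The sketch below builds a hypothetical 10,000-row CSV in memory, reads it with default and compact dtypes, and compares the totals. The exact byte counts depend on your pandas version, so none are shown.

```python
import io

import numpy as np
import pandas as pd

# Build a hypothetical 10,000-row CSV in memory to stand in for a large file
n = 10_000
labels = ['low', 'mid', 'high']
lines = ["id,category,value"] + [f"{i},{labels[i % 3]},{i / 7}" for i in range(n)]
csv_text = "\n".join(lines)

default_df = pd.read_csv(io.StringIO(csv_text))
compact_df = pd.read_csv(io.StringIO(csv_text), dtype={
    'id': 'int32',
    'category': 'category',
    'value': 'float32',
})

before = default_df.memory_usage(deep=True).sum()
after = compact_df.memory_usage(deep=True).sum()
print(f"Default dtypes: {before:,} bytes")
print(f"Compact dtypes: {after:,} bytes")

# float32 cannot represent every integer above 2**24, so precision can be lost
print(float(np.float32(16_777_217)))  # 16777216.0
```

The last line shows the cost of float32: the value is silently rounded to the nearest representable number, which is harmless for many measurements but not for large identifiers or amounts that must be exact.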

For extremely large files, consider alternatives like Dask (pandas for larger-than-memory data) or databases (load into SQLite or PostgreSQL, query as needed).

Common Problems and How to Fix Them

Let me address the most common issues beginners encounter when reading CSV files and their solutions.

FileNotFoundError: Python cannot find your file. Check the filename spelling exactly (including capitalization on Mac/Linux), verify the file is where you think it is, print your current working directory with os.getcwd(), and use absolute paths if relative paths confuse you.

UnicodeDecodeError: Character encoding mismatch. Try different encodings like ‘latin1’ or ‘cp1252’, use encoding_errors='ignore', or detect encoding with chardet.

ParserError: Malformed CSV file. Common causes include an inconsistent number of columns (most often rows with more values than the header), unescaped quotes or commas, or mixed delimiters. Try on_bad_lines='skip' (pandas 1.3 and later; older versions used error_bad_lines=False, which was removed in pandas 2.0) to skip problematic rows, or examine the file in a text editor to find the malformed rows.

Mixed types warning: Pandas detected different types in the same column (like numbers and text). This usually indicates data quality issues. Specify the type explicitly with dtype={'column': str}, or use low_memory=False to let pandas infer types from the entire file.

Memory errors: File too large for RAM. Read in chunks, select only needed columns with usecols, use more efficient data types, or use tools designed for larger-than-memory data.

Wrong delimiter: File appears as a single column. Check what delimiter the file actually uses and specify it with sep=';' or whatever is appropriate.

Column names become data: No header row, but pandas assumed there was. Use header=None and specify column names.

Data types are wrong: Zip codes lose leading zeros, IDs treated as numbers. Specify types explicitly with dtype={'zipcode': str}.
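As an illustration of the ParserError case above, the sketch below reads a hypothetical file in which one row has an extra field, first with default settings and then with on_bad_lines='skip' (which needs pandas 1.3 or newer).

```python
import io

import pandas as pd

# Hypothetical file in which the third data row has one field too many
csv_text = (
    "name,age,city\n"
    "Alice,25,New York\n"
    "Bob,30,Los Angeles\n"
    "Charlie,35,Chicago,extra\n"
    "David,28,Houston\n"
)

error_message = None
try:
    pd.read_csv(io.StringIO(csv_text))
except pd.errors.ParserError as exc:
    # The message names the offending line, which helps when inspecting the file
    error_message = str(exc)
    print(f"ParserError: {error_message}")

# Skip rows that have too many fields instead of failing
clean_df = pd.read_csv(io.StringIO(csv_text), on_bad_lines='skip')
print(clean_df)
```

Skipping silently discards data, so it is worth comparing the row count with the number of lines in the file and looking at the rows that were dropped.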

Best Practices for Reading CSV Files

Based on common issues and professional practices, follow these guidelines when reading CSV files.

Always examine files first. Open CSV files in a text editor to see actual structure, check for delimiters, headers, and encoding before writing code. This five-minute inspection can save hours of debugging.

Start simple, add complexity as needed:

# First attempt
df = pd.read_csv('data.csv')

# If problems arise, add parameters
df = pd.read_csv('data.csv',
                 sep=';',
                 encoding='latin1',
                 na_values=['', 'missing'])

Immediately examine what you loaded:

print(df.shape)
print(df.dtypes)
print(df.head())
print(df.isnull().sum())

These checks catch problems before they propagate through analysis.

Specify types explicitly for production code:

df = pd.read_csv('data.csv', dtype={
    'id': str,
    'age': 'int64',
    'category': 'category'
})

Explicit types make code more robust and self-documenting.

Handle missing values appropriately for your context:

df = pd.read_csv('data.csv', na_values=['', 'NA', 'missing'])

Document what you consider missing and why.

Use descriptive variable names:

customers_df = pd.read_csv('customers.csv')
sales_df = pd.read_csv('sales.csv')

Clear names prevent confusion when working with multiple DataFrames.

Save cleaned data after initial processing:

df_clean = pd.read_csv('raw_data.csv')
# ... cleaning operations ...
df_clean.to_csv('processed_data.csv', index=False)

This separates raw data from processed data and speeds up repeated analysis.

Conclusion

Reading CSV files is the gateway between data sources and data analysis. While the basic operation is simple—pd.read_csv('filename.csv')—real-world files often require additional parameters to handle delimiters, encodings, missing values, data types, and structural quirks. Understanding these parameters and when to use them transforms you from someone who can only read clean tutorial files to someone who confidently handles messy real-world data.

The key is starting with the simple approach and adding complexity only when problems arise. Examine files in text editors before reading them. Immediately check what you loaded. Specify types explicitly for important columns. Handle missing values appropriately. These practices prevent most issues and make your code more robust.

Every data science project begins with loading data from files. Mastering this seemingly simple task unlocks your ability to work with actual datasets rather than toy examples. The confidence you gain from successfully reading, examining, and understanding real data files propels you forward in your data science journey. Each file you successfully load and analyze builds experience that makes the next one easier.

In the next article, we will explore the concept of data science as detective work for the digital age, examining how the mindset and methods of investigation apply to extracting insights from data. This perspective will help you approach data analysis projects with the right mental framework for asking questions, following evidence, and drawing valid conclusions.

Key Takeaways

CSV files store tabular data as text with values separated by delimiters (usually commas), but real-world files often use different separators, unusual missing value indicators, various character encodings, and structural quirks that require additional parameters beyond the basic pd.read_csv('filename.csv') command. Understanding what CSV files actually contain and common variations helps you anticipate problems and choose appropriate parameters.

File paths matter: relative paths are resolved against your current working directory, which you can check with os.getcwd(), and absolute paths and URLs are also valid. Organizing project files systematically with separate directories for raw data, processed data, notebooks, and scripts prevents path-related confusion.

Common parameters address frequent issues: sep or delimiter for non-comma separators, encoding for character encoding problems (try ‘latin1’ or ‘cp1252’), na_values for custom missing value indicators, dtype for specifying column types explicitly, parse_dates for date columns, and usecols for reading only needed columns from large files. These parameters transform simple file reading into robust data loading that handles real-world complexity.

Immediately examining loaded DataFrames with df.shape, df.dtypes, df.head(), and df.isnull().sum() catches problems early before they propagate through analysis. This habit of immediate validation should become automatic whenever you load new data.

For large files, reading in chunks with chunksize, selecting specific columns with usecols, specifying efficient data types, or using alternative tools like Dask prevents memory errors. Common problems like FileNotFoundError, UnicodeDecodeError, and ParserError have straightforward solutions once you understand their causes and appropriate parameters to address them.