Data science is a multidisciplinary field that combines programming, statistics, and machine learning to extract insights from data. Of the many tools available to data scientists, Python and R are two of the most popular and versatile programming languages. Each has its strengths, and understanding how to use them effectively can accelerate your progress in data science.

This article is a guide to getting started with Python and R for data science. We will explore their features, benefits, and the core libraries that make them indispensable for data analysis and modeling.

Why Python and R for Data Science?

Python and R have earned their reputation as go-to tools for data science because of their rich ecosystems, simplicity, and flexibility. Here’s why they are essential for any data scientist:

1. Python: The All-Purpose Language

Python is a general-purpose programming language that excels in readability and versatility. It is widely used across industries for tasks ranging from web development to artificial intelligence. In data science, Python’s ecosystem includes powerful libraries for data manipulation, visualization, and machine learning.

Key Features of Python:

- Easy to learn and use, even for beginners.

- Extensive libraries for data analysis (Pandas, NumPy), machine learning (Scikit-learn, TensorFlow), and visualization (Matplotlib, Seaborn).

- Strong community support and a wealth of online resources.

2. R: The Statistician’s Toolbox

R was designed specifically for statistical computing and data visualization. Its capabilities make it a favorite among statisticians and researchers for exploratory data analysis and hypothesis testing.

Key Features of R:

- Built-in functions for statistical analysis and modeling.

- Advanced plotting capabilities with packages like ggplot2 and lattice.

- Widely used in academia and research for its statistical rigor.

Getting Started with Python for Data Science

Python’s popularity in data science stems from its simplicity and its ability to handle end-to-end workflows. From data preprocessing to machine learning deployment, Python provides tools for every stage.

1. Installing Python

To get started, download and install Python from the official website (python.org) or use a distribution like Anaconda, which comes preloaded with essential data science libraries.

Using Anaconda:

- Download Anaconda from anaconda.com.

- Create a new virtual environment for your projects:

conda create --name data_env python=3.9

- Activate the environment:

conda activate data_env

2. Core Libraries in Python

Python’s ecosystem includes libraries that cover the entire data science workflow:

- Pandas: For data manipulation and analysis.

import pandas as pd

data = pd.read_csv('data.csv') # Load a CSV file

print(data.head()) # View the first few rows

- NumPy: For numerical computations and array operations.

import numpy as np

arr = np.array([1, 2, 3])

print(arr * 2) # Element-wise multiplication

- Matplotlib and Seaborn: For creating visualizations.

import matplotlib.pyplot as plt

import seaborn as sns

sns.histplot(data['column_name'])

plt.show()

- Scikit-learn: For machine learning and predictive modeling.

from sklearn.linear_model import LinearRegression

model = LinearRegression()

model.fit(X_train, y_train) # Train the model

3. Popular Python IDEs

- Jupyter Notebook: Ideal for interactive data exploration and visualization.

- VS Code: A lightweight, versatile editor for Python development.

- PyCharm: A feature-rich IDE designed specifically for Python.
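
To tie the core libraries above together, here is a minimal end-to-end sketch. It is not tied to any particular dataset: the data is synthetic (generated so that `y` is roughly `3 * x + 5`), and the column names, noise level, and 80/20 split are assumptions chosen only for illustration. It also shows one common way to obtain the `X_train` and `y_train` used in the Scikit-learn snippet, via `train_test_split`.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic data (an assumption for illustration): y = 3*x + 5 plus small noise.
rng = np.random.default_rng(seed=0)
x = rng.uniform(0, 10, size=200)
df = pd.DataFrame({"x": x, "y": 3.0 * x + 5.0 + rng.normal(0, 0.5, size=200)})

# Hold out 20% of the rows so the model is scored on data it has not seen.
X_train, X_test, y_train, y_test = train_test_split(
    df[["x"]], df["y"], test_size=0.2, random_state=0
)

model = LinearRegression()
model.fit(X_train, y_train)

slope = float(model.coef_[0])
intercept = float(model.intercept_)
r2 = model.score(X_test, y_test)  # R^2 on the held-out rows
print(f"slope={slope:.2f}, intercept={intercept:.2f}, test R^2={r2:.3f}")
```

With real data you would replace the synthetic DataFrame with something like `pd.read_csv(...)` and pick the feature and target columns that fit your problem.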

Getting Started with R for Data Science

R’s strengths in statistical analysis and data visualization make it a powerful tool for exploring data and communicating findings.

1. Installing R

Download and install R from the CRAN repository. For an enhanced user experience, install RStudio, a popular IDE for R development.

Installing RStudio:

- Download RStudio Desktop from posit.co (formerly rstudio.com).

- Open RStudio and set your working directory using:

setwd("path/to/your/project")

2. Core Libraries in R

R’s package ecosystem is tailored for data manipulation, analysis, and visualization:

- dplyr: For data manipulation and transformation.

library(dplyr)

data <- read.csv("data.csv")

summary <- data %>% group_by(column_name) %>% summarize(mean_value = mean(value_column))

print(summary)

- ggplot2: For creating high-quality visualizations.

library(ggplot2)

ggplot(data, aes(x = column_name)) + geom_histogram()

- tidyr: For reshaping and tidying data.

library(tidyr)

tidy_data <- pivot_longer(data, cols = starts_with("column_prefix"), names_to = "new_column_name")

- caret: For machine learning and predictive modeling.

library(caret)

model <- train(target_column ~ ., data = data, method = "lm")

3. Popular R IDEs

- RStudio: The most widely used IDE for R programming, offering features like integrated plotting and markdown reporting.

Python vs. R: When to Use Each

Choosing between Python and R often depends on the task and your background. Here’s a quick comparison:

| Feature | Python | R |
|---|---|---|
| Ease of Learning | Beginner-friendly | Steeper learning curve |
| Statistical Analysis | Supported via libraries (e.g., SciPy, statsmodels) | Built-in functions for stats |
| Machine Learning | Extensive libraries (Scikit-learn, TensorFlow) | Smaller ecosystem (e.g., caret), with fewer deep learning options |
| Visualization | Matplotlib, Seaborn, Plotly | ggplot2, lattice |
| Integration | Better for deployment and APIs | Stronger in research and academia |

Advanced Tools and Workflows in Python

Python’s versatility allows data scientists to tackle complex workflows efficiently, from preprocessing raw data to deploying machine learning models. Below are advanced tools and techniques that elevate Python’s utility in data science:

1. Data Engineering with Python

Python is well-suited for handling large-scale data pipelines and processing.

- Apache Airflow: A platform for orchestrating complex workflows.

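from datetime import datetime

from airflow import DAG

from airflow.operators.python import PythonOperator  # Airflow 2+ import path

def extract_data():

    # Your data extraction logic here

    pass

# start_date is a placeholder; Airflow 2 needs one on the DAG or its tasks.

# On Airflow 2.4+, schedule='@daily' is preferred over schedule_interval.

dag = DAG('data_pipeline', start_date=datetime(2024, 1, 1), schedule_interval='@daily')

task = PythonOperator(task_id='extract', python_callable=extract_data, dag=dag)

- Pandas Integration with Databases: Directly query databases and manipulate large datasets using Pandas.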

import pandas as pd

from sqlalchemy import create_engine

engine = create_engine('sqlite:///my_database.db')

data = pd.read_sql_query("SELECT * FROM table_name", engine)

print(data.head())

2. Visualization Beyond Basics

Advanced Python visualization libraries offer interactive and dynamic capabilities:

- Plotly: For creating interactive dashboards and visualizations.

import plotly.express as px

fig = px.scatter(data, x='feature1', y='feature2', color='target')

fig.show()

- Dash: A framework for building web applications with live data visualizations.

import dash

from dash import html, dcc

app = dash.Dash(__name__)

app.layout = html.Div([dcc.Graph(figure=fig)])

app.run(debug=True)  # app.run replaces the older app.run_server

3. Machine Learning Pipelines

Efficient workflows ensure repeatability and scalability:

- Pipeline in Scikit-learn: Automate preprocessing and modeling steps.

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

pipeline = Pipeline([

    ('scaler', StandardScaler()),

    ('model', LogisticRegression())

])

pipeline.fit(X_train, y_train)

predictions = pipeline.predict(X_test)

Advanced Tools and Workflows in R

R’s strength in statistical computing and visualization extends to advanced workflows that cater to both research and practical data science applications.

1. Advanced Visualization

R excels in creating high-quality, publication-ready visualizations.

- Interactive Visualizations with Plotly in R:

library(plotly)

fig <- plot_ly(data, x = ~feature1, y = ~feature2, color = ~target, type = 'scatter', mode = 'markers')

fig

- Customizing ggplot2 Visualizations:

library(ggplot2)

ggplot(data, aes(x = feature1, y = feature2)) +

  geom_point(aes(color = target)) +

  theme_minimal() +

  labs(title = "Custom Plot", x = "Feature 1", y = "Feature 2")

2. Statistical Modeling

R provides robust tools for statistical modeling and hypothesis testing:

- Generalized Linear Models (GLMs):

model <- glm(target ~ feature1 + feature2, data = data, family = binomial)

summary(model)

- Time Series Analysis:

library(forecast)

ts_data <- ts(data$column, frequency = 12)

forecast_model <- auto.arima(ts_data)

forecast(forecast_model, h = 12)

3. Workflow Automation

Streamline repetitive tasks in R with automation:

- R Markdown: Combine code, visualizations, and text in dynamic reports.

---

title: "Data Analysis Report"

output: html_document

---

```{r}
library(ggplot2)
ggplot(data, aes(x = column)) + geom_histogram()
```

- Shiny Apps: Build interactive web applications directly in R.

library(shiny)

ui <- fluidPage(

  plotOutput("scatterplot")

)

server <- function(input, output) {

  output$scatterplot <- renderPlot({

    plot(data$feature1, data$feature2)

  })

}

shinyApp(ui, server)

Combining Python and R in Data Science

Data scientists often leverage both Python and R to capitalize on their unique strengths. Combining these tools can enhance efficiency and enable seamless workflows.

1. Using reticulate in R

The reticulate package allows R to integrate Python code, enabling users to access Python libraries within R.

library(reticulate)

use_python("/path/to/python")

py_run_string("import pandas as pd")

py_run_string("print(pd.DataFrame({'A': [1, 2, 3]}))")

2. Using R in Python

With the rpy2 library, Python can run R scripts and access R objects.

import rpy2.robjects as robjects

# Execute R code in Python

r_code = """

library(ggplot2)

data <- data.frame(x = c(1, 2, 3), y = c(4, 5, 6))

# print() is needed here: ggplot objects are not auto-printed when run from rpy2

print(ggplot(data, aes(x, y)) + geom_point())

"""

robjects.r(r_code)

3. Data Interchange Between Python and R

Save data as intermediate files (e.g., CSV, JSON) or use databases to pass data between Python and R.
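
As a rough sketch of the file-based approach, the Python side might look like the following. The file names, column names, and temporary directory are hypothetical and used only so the example is self-contained. The R side is assumed to read the files with `read.csv()` or a JSON reader such as the jsonlite package.

```python
import tempfile
from pathlib import Path

import pandas as pd

df = pd.DataFrame({"id": [1, 2, 3], "score": [0.5, 0.75, 1.0]})

with tempfile.TemporaryDirectory() as tmp_dir:
    csv_path = Path(tmp_dir) / "scores.csv"    # hypothetical file name
    json_path = Path(tmp_dir) / "scores.json"  # hypothetical file name

    # index=False keeps the pandas row index out of the file, so R's
    # read.csv() does not pick up an extra unnamed column.
    df.to_csv(csv_path, index=False)

    # orient="records" writes a JSON array of row objects, a layout that
    # JSON readers can turn back into a table of rows.
    df.to_json(json_path, orient="records")

    # Read both files back to confirm nothing was lost on the way out.
    csv_round_trip = pd.read_csv(csv_path)
    json_round_trip = pd.read_json(json_path, orient="records")

print(csv_round_trip.equals(df))
```

CSV is the simplest choice for flat tables, while JSON copes better with nested values. For large tables or shared access, a database is usually the more practical route.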

Practical Tips for Efficiency

1. Version Control with Git

Use Git and GitHub for version control and collaboration. Track changes, manage branches, and collaborate on projects efficiently.

2. Automate Routine Tasks

Leverage libraries like Airflow (Python) or knitr (R) to automate recurring workflows, such as data cleaning or reporting.

3. Keep Your Environment Organized

Use virtual environments in Python (e.g., venv, conda) and R (e.g., renv) to manage dependencies and ensure reproducibility.
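
Alongside an isolated environment, it can help to record which package versions an analysis actually ran with. The sketch below uses only the standard library. The helper name `snapshot_versions` and the package list are illustrative assumptions, and it is not a substitute for a full export such as `pip freeze` or `conda env export`.

```python
from importlib import metadata


def snapshot_versions(packages):
    """Return {package name: installed version, or None if not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return versions


# The package list is illustrative; adjust it to your project's dependencies.
snapshot = snapshot_versions(["pandas", "numpy", "scikit-learn"])
for name, version in snapshot.items():
    print(f"{name}=={version}" if version else f"# {name} is not installed")
```

Saving this output next to your results makes it easier to rebuild a matching environment later.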

Real-World Applications of Python and R in Data Science

Python and R are widely adopted across industries due to their versatility and efficiency. Here are some examples of how these tools are applied in real-world scenarios:

1. Python Applications

Python’s scalability and integration capabilities make it a top choice for complex systems and end-to-end workflows.

- E-Commerce: Recommendation Systems

- Python powers recommendation engines used by companies like Amazon and Netflix to suggest products and content.

- Libraries like Scikit-learn and TensorFlow are used to build collaborative filtering and content-based filtering models.

- Healthcare: Predictive Analytics

- Python is used to analyze patient data and predict disease progression.

- For example, predicting the onset of diabetes using Scikit-learn or TensorFlow models trained on historical health records.

- Finance: Fraud Detection

- Python’s Pandas and NumPy libraries are used to preprocess transaction data.

- Machine learning algorithms in Python help identify unusual patterns that indicate fraudulent activity.
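
To make the fraud-detection idea above more concrete, here is a toy sketch using Scikit-learn's `IsolationForest`, one of several anomaly-detection algorithms that could be used. Everything about the data is an assumption: the transactions are synthetic, the `amount` and `hour` columns are invented, and the `contamination` value is a guess rather than a recommended setting. Production fraud systems are considerably more involved.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(seed=42)

# Synthetic transactions (illustrative only): typical daytime purchases...
normal = pd.DataFrame({
    "amount": rng.normal(50, 10, size=500),
    "hour": rng.normal(14, 3, size=500),
})
# ...plus a handful of very large purchases made in the early morning.
unusual = pd.DataFrame({
    "amount": [4000.0, 5000.0, 6000.0, 7000.0, 8000.0],
    "hour": [3.0, 3.5, 2.5, 3.0, 4.0],
})
transactions = pd.concat([normal, unusual], ignore_index=True)

# contamination is the assumed share of anomalies; 0.02 is a guess for this toy data.
detector = IsolationForest(contamination=0.02, random_state=42)
labels = detector.fit_predict(transactions)  # -1 = anomaly, 1 = normal

transactions["flagged"] = labels == -1
# Lower scores mean "more anomalous".
transactions["score"] = detector.score_samples(transactions[["amount", "hour"]])
print(transactions[transactions["flagged"]])
```

Flagged rows are candidates for review, not confirmed fraud. In practice the threshold is tuned against labeled cases and the cost of false alarms.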

2. R Applications

R’s strength in statistical analysis and visualization makes it a go-to tool for data-driven research and exploration.

- Academia: Statistical Research

- R is widely used in social sciences and biology to conduct hypothesis testing, create regression models, and perform ANOVA.

- Researchers use R packages like lme4 for mixed-effects models and survival for survival analysis.

- Healthcare: Clinical Trials

- R’s statistical rigor makes it a standard tool for analyzing clinical trial data.

- The survival package is used to study time-to-event data, such as patient survival rates.

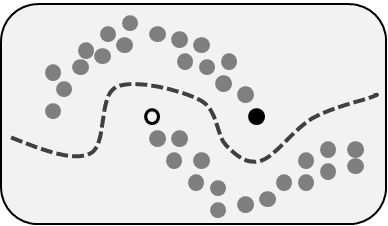

- Marketing: Customer Segmentation

- R is used for clustering analysis to segment customers based on demographics or purchasing behavior.

- Visualization tools like ggplot2 help present insights to stakeholders.

Choosing the Right Tool: Python or R?

Both Python and R have distinct advantages, and the choice often depends on the project requirements and your personal or organizational preferences. Below is a comparison to help you decide:

| Criteria | Python | R |
|---|---|---|
| Ease of Learning | Beginner-friendly, versatile | Steeper learning curve for beginners |
| Data Manipulation | Extensive support with Pandas and NumPy | Simplified syntax with dplyr and tidyr |
| Machine Learning | Excellent support with Scikit-learn, TensorFlow, PyTorch | Smaller ecosystem (e.g., caret), with fewer deep learning options |
| Statistical Analysis | Requires additional libraries (e.g., SciPy, statsmodels) | Built-in functions for statistical tests |
| Visualization | Matplotlib, Seaborn, Plotly | ggplot2, lattice, Shiny |
| Scalability and APIs | Better suited for large-scale systems | Primarily used for analysis and visualization |
| Community Support | Broad adoption across industries | Strong academic and research focus |

When to Use Python

- Projects requiring end-to-end workflows, from data collection to deployment.

- Machine learning, deep learning, or natural language processing tasks.

- Integrating data science solutions with web applications or APIs.

When to Use R

- Exploratory data analysis and statistical modeling.

- Academic research or hypothesis-driven studies.

- Creating high-quality visualizations or interactive dashboards.

Industry Use Cases Combining Python and R

Some organizations leverage the strengths of both Python and R to maximize efficiency. Here are examples of hybrid workflows:

1. Data Analysis with R, Deployment with Python

- R is used for detailed statistical analysis and visualization.

- Results are exported to Python for building machine learning models and deploying them as APIs or web applications.

2. Real-Time Analytics in Finance

- Python is used for real-time data streaming and processing using tools like Kafka.

- R is used to perform detailed statistical analysis on historical data to uncover trends and anomalies.

3. Marketing Campaign Optimization

- R is used to segment customers based on behavior and visualize campaign performance.

- Python is used to implement automated workflows for sending personalized offers via machine learning models.

Tips for Beginners

If you’re just starting with Python and R, here’s how you can build your skills effectively:

1. Learn the Basics

- Start with Python if you’re new to programming, as it’s easier to learn and has applications beyond data science.

- Learn R if you’re focused on statistical analysis or academic research.

2. Work on Small Projects

- Use Python for tasks like building a simple regression model or visualizing data with Matplotlib.

- Use R to analyze a public dataset and create visualizations with ggplot2.

3. Practice with Real Data

- Explore datasets from sources like Kaggle, UCI Machine Learning Repository, or public government data portals.

- Create projects that reflect real-world applications, such as customer segmentation or sentiment analysis.
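
As a possible starting point for a customer segmentation project, the sketch below clusters made-up customers with Scikit-learn's `KMeans`. The two customer groups, the column names, and the choice of two clusters are all assumptions for illustration. With a real dataset you would need to explore how many segments are meaningful.

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(seed=1)

# Two made-up customer groups: occasional low spenders and frequent high spenders.
customers = pd.DataFrame({
    "annual_spend": np.concatenate(
        [rng.normal(200, 20, 50), rng.normal(2000, 100, 50)]
    ),
    "visits_per_month": np.concatenate(
        [rng.normal(1, 0.2, 50), rng.normal(8, 0.5, 50)]
    ),
})

# Scale first so that spend (in the thousands) does not dominate visits.
scaled = StandardScaler().fit_transform(customers)

kmeans = KMeans(n_clusters=2, n_init=10, random_state=1)
customers["segment"] = kmeans.fit_predict(scaled)

# Average profile of each segment.
print(customers.groupby("segment").mean())
```

The segment numbers themselves are arbitrary labels. What matters is the profile of each group, which you could then visualize with Matplotlib or Seaborn.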

4. Engage with the Community

- Join online forums like Stack Overflow, Reddit’s r/datascience, or dedicated Python and R communities.

- Participate in data science competitions on Kaggle to test your skills.

5. Learn Both Tools

- While specializing in one tool is important, having a working knowledge of both Python and R can make you more versatile and valuable in the job market.

Conclusion

Python and R are essential tools in the data science toolkit, each offering unique advantages for different tasks. Python excels in scalability, machine learning, and deployment, while R shines in statistical analysis and visualization. By understanding their strengths and combining them effectively, you can tackle a wide range of data science challenges with confidence.

Whether you’re a beginner or an experienced practitioner, mastering Python and R opens up a world of opportunities in data science. With practice, continuous learning, and engagement with the community, you can build expertise and deliver impactful solutions in this dynamic and growing field.