Introduction

You find yourself drawn to data science, intrigued by stories of professionals using data to solve problems, make predictions, and drive business decisions. Perhaps you read about how companies use machine learning to recommend products, detect fraud, or optimize operations. Maybe you encountered a data visualization that revealed surprising patterns, or you heard about the strong job market and compelling salaries in the field. Whatever sparked your interest, you now face a fundamental question: Can someone with no background in data science, statistics, or programming actually break into this field?

The short answer is yes, absolutely. The longer answer, which this guide will explore in depth, is that breaking into data science with no experience requires strategic planning, dedicated learning, and realistic expectations about the journey ahead. Unlike some fields where credentials and formal education create nearly insurmountable barriers, data science values demonstrable skills and practical problem-solving ability. What you can do matters more than where you studied or what your previous job title was.

However, the path from complete beginner to employed data scientist is neither quick nor easy. You will need to invest significant time building foundational knowledge in programming, statistics, and machine learning. You will create portfolio projects that demonstrate your capabilities. You will face moments of frustration when concepts feel overwhelming or code refuses to work. You will likely apply for many jobs before receiving your first offer. This reality should not discourage you, but it should inform your expectations and preparation.

What makes the journey possible is that every skill you need can be learned through free or affordable resources available online. You do not need an expensive degree or special access to exclusive programs. What you do need is commitment to systematic learning, patience with the inevitable challenges, and willingness to demonstrate your capabilities through projects and concrete work rather than relying on credentials you do not yet possess.

In this comprehensive guide, you will learn exactly how to break into data science starting from zero. We will explore what realistic timelines look like, how to structure your learning to build skills efficiently, what portfolio projects can compensate for lack of professional experience, how to position yourself in the job market, and what strategies successful career changers have used to land their first data science roles. By understanding both the challenges you will face and the proven approaches that overcome them, you can create a realistic plan for entering this dynamic and rewarding field.

Understanding What You Are Getting Into

Before diving into learning resources and job search strategies, you need a clear picture of what data science actually involves and what entry-level roles realistically look like. Many beginners hold misconceptions that lead to misdirected effort or disappointment when reality does not match expectations.

Data science work centers on extracting insights and value from data. This might involve analyzing data to understand business trends and customer behavior, building predictive models to forecast future outcomes, creating recommendation systems that personalize user experiences, identifying anomalies or fraud in transaction data, or optimizing processes and decision-making through data-driven approaches. The common thread across these activities is using data systematically to inform decisions or automate judgments.

The day-to-day reality of data science work differs significantly from what you might imagine based on popular articles or conference presentations. Data scientists spend substantial time on tasks that sound less glamorous than “building AI systems” but are absolutely essential. You will clean messy data that has missing values, inconsistent formats, and outright errors. You will write queries to extract data from databases. You will create visualizations to understand data patterns and communicate findings. You will document your work so others can understand and build upon it. The actual model building, while important, often represents a smaller portion of the work than beginners expect.

Moreover, much data science work focuses on relatively straightforward business problems rather than cutting-edge research. Your first role likely will not involve developing novel algorithms or pushing the boundaries of artificial intelligence. Instead, you might build a churn prediction model using standard techniques, create customer segmentation to inform marketing strategies, forecast demand to optimize inventory, or analyze A/B test results to guide product decisions. These applications create real business value even though they do not involve groundbreaking innovation.

Entry-level data science roles vary considerably across companies and industries. Some organizations have mature data science teams with well-defined processes and mentorship for junior members. Others are building data capabilities for the first time and need someone who can work independently with minimal guidance. Some roles emphasize programming and production deployment of models. Others focus more on analysis and communication of insights to business stakeholders. Understanding this variation helps you target opportunities that match your developing skill set.

The skills that data science requires span several domains, each of which you will need to develop. Programming ability, particularly in Python or R, allows you to manipulate data and implement analyses. Statistical knowledge helps you understand when patterns are meaningful versus random noise. Machine learning familiarity enables you to build predictive models. Data manipulation skills let you clean and transform messy real-world data. Visualization capabilities help you explore data and communicate findings. Domain knowledge about the business or industry you work in provides context for your analyses. Communication skills allow you to explain technical work to non-technical stakeholders.

This breadth means that becoming competent in data science takes time. You cannot master all these areas in a few weeks or even a few months. Realistic timelines for going from zero to employable typically range from six months to two years of dedicated learning, depending on how much time you can invest and how quickly you grasp new concepts. This extended timeline should not discourage you, but it should help you set realistic expectations and plan for sustained effort rather than expecting quick results.

The job market for data science is competitive, particularly for entry-level positions. Many people are attracted to the field, and the number of applicants often exceeds available openings for junior roles. However, this competition primarily affects candidates who cannot demonstrate genuine capability. If you can show through portfolio projects and concrete skills that you can do data science work effectively, you significantly differentiate yourself from the crowd of applicants with only coursework on their resumes.

What this means for you as someone starting from zero is that breaking into data science is absolutely possible, but it requires strategic effort, substantial learning, and realistic expectations about both the timeline and the nature of the work. The field offers intellectual challenge, strong compensation, and the satisfaction of solving real problems with data. Reaching that point requires navigating the learning journey thoughtfully and positioning yourself effectively in the job market.

Building Your Foundation: Where to Start

When you are starting from absolute zero, the sheer breadth of data science can feel overwhelming. Statistics, programming, machine learning, deep learning, natural language processing, computer vision, and dozens of other topics all seem essential. The key to making progress is understanding that you need to build systematically, starting with core foundations before branching into specialized areas.

Your first priority should be developing basic programming literacy in Python. Python has become the dominant language for data science, with rich libraries for data manipulation, visualization, statistical analysis, and machine learning. Learning Python provides the foundation for everything else you will do in data science. At the beginning, you need to understand how to store information in variables, work with different data types like numbers and text, use basic operators to perform calculations and comparisons, write conditional logic that makes decisions based on conditions, create loops that repeat operations, and define functions that encapsulate reusable logic.

These programming fundamentals might seem elementary, but they form the building blocks of all data science code. You cannot build sophisticated machine learning models until you understand variables and functions. Take time to build solid programming foundations rather than rushing through basics to reach more exciting topics. The effort you invest here pays dividends throughout your data science learning.

For learning Python, choose resources designed specifically for absolute beginners with no programming experience. “Python for Everybody” by Charles Severance provides an excellent starting point, explaining concepts clearly without assuming prior knowledge. “Automate the Boring Stuff with Python” by Al Sweigart teaches programming through practical examples that help you see real applications. These resources use everyday language and progress gradually, making them accessible for complete newcomers to programming.

As you learn programming basics, practice constantly by writing code yourself. Do not simply read examples or watch tutorials. Type out code, experiment with modifications, and work through exercises. Programming is a skill learned through doing, not through passive observation. When you encounter errors, which you inevitably will, resist the urge to immediately seek solutions. Spend time trying to understand what went wrong and how to fix it. This debugging practice builds problem-solving skills essential for data science work.

Once you have basic Python comfort, typically after a few weeks of consistent practice, begin learning data manipulation using the pandas library. Pandas brings spreadsheet-like functionality to Python, providing tools for loading data from files, filtering and selecting subsets of data, creating calculated columns, handling missing values, grouping data to compute summaries, and merging different datasets together. These operations form the backbone of data science work, as you will use them constantly regardless of what type of analysis or modeling you pursue.

Learning pandas while your programming skills are still developing actually reinforces both. You apply your emerging Python knowledge in the context of real data manipulation tasks, which helps concepts solidify. Work through pandas tutorials using actual datasets rather than toy examples. Kaggle provides thousands of datasets across every imaginable domain. Choose topics that interest you personally, as intrinsic motivation sustains learning far better than forcing yourself through boring exercises.

Parallel to developing programming skills, start building statistical knowledge. Statistics provides the theoretical foundation for understanding data and making inferences. You need to grasp concepts like measures of central tendency such as mean and median, measures of variability like standard deviation, probability distributions and what they reveal about data, correlation and its distinction from causation, statistical significance and hypothesis testing, and confidence intervals that quantify uncertainty.

Many people fear statistics because of negative experiences with theoretical mathematics courses. However, learning statistics for practical application differs dramatically from abstract mathematical treatments. Modern statistics education emphasizes intuition and application using real examples and computational tools. Resources like “Statistics Done Wrong” by Alex Reinhart or “Naked Statistics” by Charles Wheelan build understanding through clear explanations and engaging examples without requiring advanced mathematics.

As you learn statistical concepts, apply them using Python. Calculate summary statistics for real datasets. Create visualizations to understand distributions. Run simple hypothesis tests. This hands-on application makes abstract concepts concrete and shows you why statistical thinking matters for data science work.

Data visualization deserves attention as part of your foundation. Visualization serves two crucial purposes in data science. First, it helps you explore and understand data by revealing patterns, outliers, and relationships. Second, it communicates findings to others in ways that tables of numbers cannot match. Learn to create basic plots including histograms that show distributions, scatter plots that reveal relationships between variables, line charts for time series data, and bar charts for comparing categories.

Python’s matplotlib and seaborn libraries provide visualization capabilities. Start with simple plots and gradually explore more sophisticated visualizations as your skills develop. More important than technical mastery of plotting libraries is developing judgment about which visualizations effectively communicate different types of information. This design sense develops through practice and exposure to both good and bad examples.

Your foundation-building phase should span roughly two to four months of consistent effort, assuming you can dedicate substantial time each week to learning. This timeline might feel slow, but building solid foundations pays enormous dividends. Many beginners rush through basics to reach machine learning quickly, then struggle because their programming or statistical understanding has gaps. Taking time to truly understand fundamentals accelerates your learning of more advanced topics later.

Throughout this foundation phase, seek out communities of other learners. Online forums like the Python subreddit, Stack Overflow for programming questions, or dedicated data science communities provide places to ask questions, share resources, and connect with others on similar journeys. Learning alongside others provides motivation, different perspectives, and emotional support during challenging periods.

Mastering Machine Learning Fundamentals

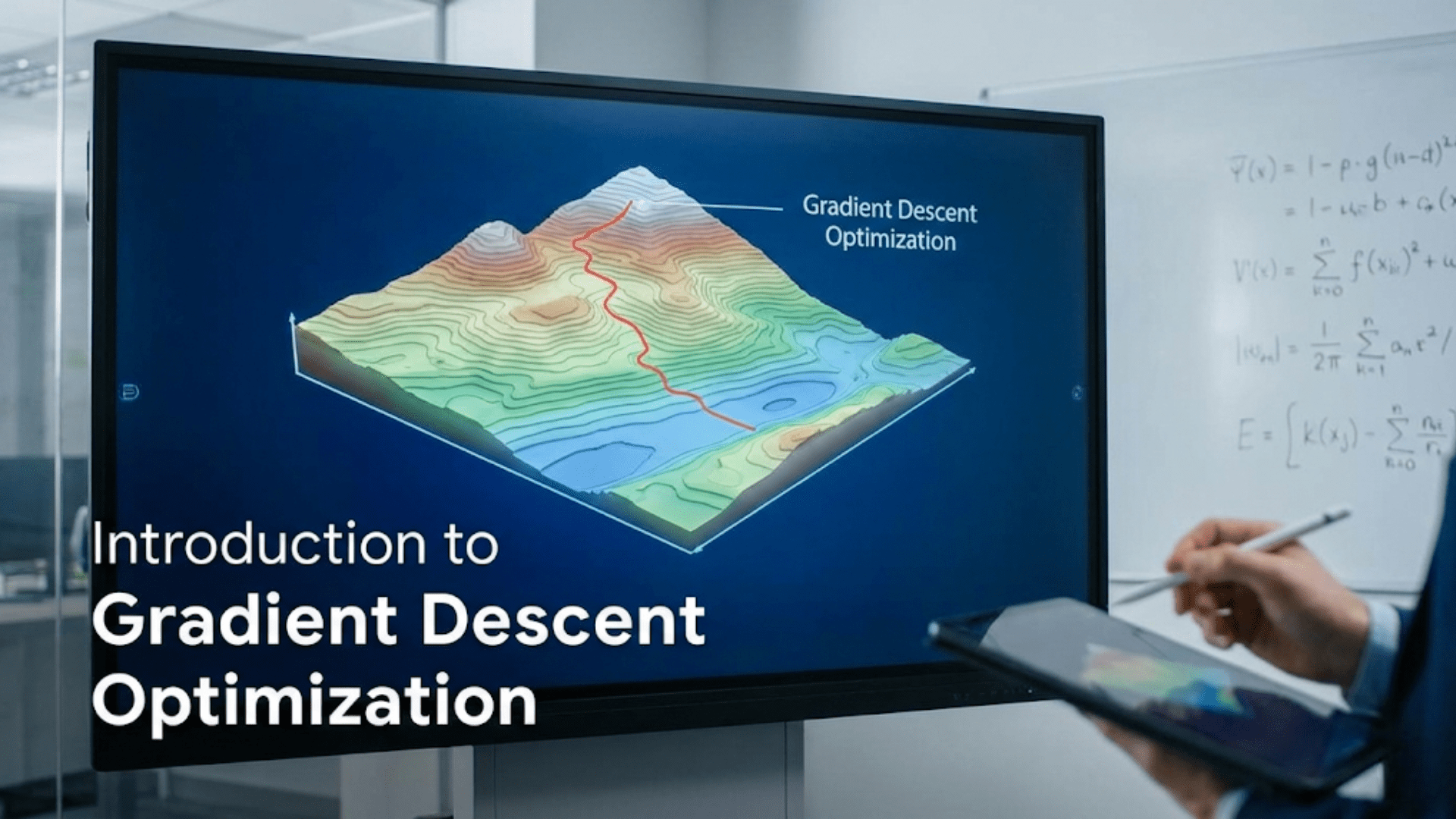

Once you have established programming and statistical foundations, you are ready to begin learning machine learning, the core technical component of data science. Machine learning involves training algorithms to learn patterns from data and make predictions or decisions. Understanding these techniques and knowing when and how to apply them is central to data science work.

Start your machine learning education by understanding the overall workflow and key concepts before diving into specific algorithms. Machine learning projects follow a general process. You begin by clearly defining the problem you want to solve. Then you collect and prepare data that can inform that problem. Next you split data into separate training and testing sets, which allows you to validate that models generalize beyond data they were trained on. You choose and train a model using the training data. You evaluate model performance on the testing data. Finally, you refine your approach based on results and deploy the model for use.

This workflow provides structure for all machine learning projects, whether simple or sophisticated. Understanding it helps you approach new problems systematically rather than randomly trying different techniques.

Machine learning divides into several major categories based on the type of problem being solved. Supervised learning involves training models on labeled examples, where you know the correct answer for each training case. This category includes regression problems where you predict continuous values like prices or temperatures, and classification problems where you predict categories like whether an email is spam or whether a customer will churn. Unsupervised learning finds patterns in data without predefined labels, such as clustering similar items together or reducing data to its most important dimensions. Reinforcement learning, though less common in typical data science work, involves training agents to make sequences of decisions through trial and error.

For beginners breaking into data science, supervised learning represents the most important category to master initially. Most business applications of data science involve prediction, making supervised learning the most immediately practical skill. Focus your learning on regression and classification before exploring other areas.

Begin learning supervised learning with linear regression, the simplest and most interpretable predictive model. Linear regression predicts a continuous outcome by finding linear relationships between input features and the target variable. Despite its simplicity, linear regression is widely used and understanding it deeply provides insights that transfer to more complex models. Work through complete examples where you load data, prepare it for modeling, split it into training and test sets, train a linear regression model, make predictions, and evaluate performance using metrics like mean absolute error or R-squared.

The key to learning linear regression effectively is understanding not just how to call a library function but what the algorithm does, what assumptions it makes about data, when those assumptions are reasonable, and how to interpret its results. This deeper understanding helps you use the technique appropriately and recognize when it may fail.

From linear regression, progress to logistic regression for binary classification problems. Logistic regression predicts probabilities that an example belongs to one of two classes, such as predicting whether a customer will churn or whether a transaction is fraudulent. Learning logistic regression introduces new concepts like probability predictions, decision thresholds, and classification evaluation metrics including accuracy, precision, recall, and F1 score. Understanding these evaluation metrics proves crucial, as they help you assess whether models are actually useful for business applications.

After mastering these foundational algorithms, explore decision trees and ensemble methods. Decision trees split data recursively based on feature values to make predictions. They are intuitive and provide useful feature importance scores showing which variables most influence predictions. Random forests and gradient boosting combine multiple decision trees to improve performance, introducing the powerful concept of ensemble learning where multiple models working together outperform individual models.

These ensemble methods like random forest and XGBoost are workhorses of practical data science, often providing excellent performance with relatively little tuning. Learning to use them effectively, including understanding how to tune their hyperparameters, makes you immediately productive on real problems.

As you learn each algorithm, work through complete projects applying it to real datasets. Do not just read about algorithms or work through toy examples. Download a dataset from Kaggle, formulate a prediction problem, apply the algorithm, evaluate results, and iterate to improve performance. This hands-on practice builds practical skills that reading alone cannot provide.

For learning machine learning, several excellent resources cater to different learning styles. “Introduction to Statistical Learning” by James, Witten, Hastie, and Tibshirani provides rigorous but accessible treatment of core machine learning concepts with exercises in R, which translates readily to Python. The fast.ai course “Practical Deep Learning for Coders” teaches through a top-down approach where you build working models quickly then progressively understand their internals. Andrew Ng’s Machine Learning course on Coursera provides structured video lectures with programming assignments that build understanding systematically.

Supplement these structured courses with hands-on practice. Participate in Kaggle competitions designed for learning, where you can see how others approach problems and learn from their solutions. Build personal projects that apply machine learning to questions that interest you. This combination of structured learning and self-directed practice accelerates your skill development.

Do not feel pressure to learn every machine learning algorithm or technique immediately. Focus on understanding core supervised learning methods deeply before branching into specialized areas like neural networks, natural language processing, or computer vision. These advanced topics become more accessible once you have mastered fundamentals and built intuition about how machine learning works.

Throughout your machine learning learning, continue strengthening your programming and statistics. Machine learning builds on these foundations, and gaps in either area will slow your progress. When you encounter concepts you do not fully understand, pause to fill those gaps rather than pushing forward with incomplete understanding.

Creating Your Portfolio from Scratch

When you lack professional data science experience, your portfolio becomes the primary evidence of your capabilities. A strong portfolio demonstrates that you can do data science work effectively, compensating for your lack of job history in the field. Building this portfolio requires strategic project selection and thorough documentation that showcases both technical skills and analytical thinking.

Your portfolio should contain three to five substantial projects that demonstrate different capabilities and approaches. Quality matters far more than quantity. A few well-executed, thoroughly documented projects make stronger impressions than many rushed, poorly explained ones. Each project should represent significant effort, typically requiring twenty to forty hours of work from initial exploration through final documentation.

Choose project topics that genuinely interest you rather than defaulting to overdone datasets that thousands of others have analyzed. The Titanic dataset and Iris classification are useful for initial learning but do not differentiate your portfolio. Instead, look for datasets related to your hobbies, interests, or previous work experience. If you enjoy sports, analyze player performance data or predict game outcomes. If you follow politics, examine election results or polling data. If you care about health, explore medical datasets or fitness tracking information. Your genuine interest in the domain shines through in your analysis and documentation.

Every portfolio project should begin with a clear problem statement that explains what question you are addressing and why it matters. Frame problems in terms of decisions or insights rather than just technical exercises. Instead of “I built a model to predict housing prices,” write “Housing markets are opaque to most buyers who struggle to determine fair values. This project develops a pricing model that helps homebuyers assess whether properties are overpriced or undervalued based on characteristics and location, potentially saving thousands of dollars on purchases.”

This problem framing immediately tells reviewers that you think about real-world applications and business value, not just technical implementation. It demonstrates that you can connect data science work to practical outcomes.

Document your exploratory data analysis extensively in your portfolio projects. Show how you initially explored the dataset to understand its characteristics, what patterns or relationships you discovered, what hypotheses you formed based on exploration, and how you validated those hypotheses. Include visualizations that reveal interesting patterns, always explaining what each visualization shows and why it matters. This documented exploration demonstrates analytical thinking and data intuition that employers value.

Explain your data cleaning and preprocessing decisions in detail. Real-world data is messy, and how you handle that messiness reveals your judgment and practical skills. Document how you handled missing values and why you chose that approach, how you dealt with outliers or unusual values, what transformations you applied to features and why, how you encoded categorical variables, and how you split data into training and test sets. These seemingly mundane details actually demonstrate important practical skills that separate competent practitioners from beginners who only know how to run models on clean data.

Feature engineering deserves particular attention in your documentation. Creating useful features from raw data requires both domain knowledge and creativity. Explain what features you engineered, what intuition or hypothesis motivated each feature, and how features impacted model performance. This demonstrates that you understand machine learning success often depends more on good features than on algorithm choice.

Compare multiple modeling approaches in each project rather than just applying a single algorithm. Build a simple baseline model, then progressively try more sophisticated methods. Explain why you chose particular algorithms, what you expected their strengths and weaknesses to be, and what you actually observed. This comparison shows you understand different techniques and can make informed choices rather than blindly applying complex models.

Present your results using both technical metrics and business interpretation. Report accuracy, precision, recall, or whatever metrics are appropriate for your problem. But also explain what these metrics mean in practical terms. If your fraud detection model achieves ninety percent recall, explain that this means catching ninety percent of fraudulent transactions while some legitimate transactions get flagged for review. Connect technical performance to real-world implications.

Discuss limitations and potential improvements honestly. No project is perfect, and acknowledging limitations demonstrates maturity and critical thinking. Explain where your model might fail, what data you wish you had, what assumptions you made that might not hold in all cases, and how the work could be extended or improved. This reflection shows you can think critically about your own work and understand its scope and boundaries.

Create polished, professional documentation for each portfolio project. Write README files that guide readers through your work, explaining the problem, data, approach, findings, and how to run your code. Include clear visualizations that illuminate key findings. Organize code logically with meaningful variable names and helpful comments. Ensure someone else could understand your project without needing to ask you questions.

Consider building at least one project as a deployed application rather than just a notebook. Use tools like Streamlit or Flask to create a simple web interface that allows users to interact with your model. This deployment demonstrates you can move beyond analysis to creating usable systems, a valuable differentiator. The application does not need to be sophisticated. Even a simple interface that takes user inputs and returns predictions shows you understand the full data science workflow.

Host your portfolio projects on GitHub with professional repository organization. Each project should have its own repository with a comprehensive README, well-organized code, and clear documentation. This GitHub presence becomes your portfolio website, allowing potential employers to easily explore your work.

Beyond individual projects, consider contributing to open-source data science projects. Contributing bug fixes, documentation improvements, or small features to libraries like pandas or scikit-learn demonstrates collaborative skills and deeper understanding of tools. These contributions carry weight because they help the broader community and show you can work with existing codebases.

Throughout your portfolio building, remember that your goal is demonstrating capability despite lack of professional experience. Each project should tell a story about a problem you solved, how you approached it, what you learned, and what value your analysis provides. This narrative focus, combined with technical competence, creates a compelling portfolio that opens doors even without traditional credentials.

Navigating the Learning Resources Landscape

The abundance of learning resources for data science can feel overwhelming. Free courses, paid bootcamps, university degrees, books, tutorials, and videos all compete for your attention. Choosing the right resources and using them effectively accelerates your learning while avoiding wasted time on low-quality or inappropriate materials.

Free online courses provide excellent starting points for learning core data science skills. Coursera, edX, and other platforms host courses from top universities and industry experts. Andrew Ng’s Machine Learning course offers rigorous introduction to foundational concepts. The University of Michigan’s Applied Data Science with Python specialization teaches practical skills through hands-on exercises. These structured courses provide curricula, exercises, and often certificates of completion that demonstrate your learning.

The advantage of structured courses is that experts have already organized material in a logical progression, created exercises that reinforce concepts, and provided support through forums where you can ask questions. The disadvantage is that courses move at predetermined paces and cover predetermined topics, which may not match your specific needs or learning speed.

Books complement courses by providing deeper treatment of topics and serving as references you return to repeatedly. For Python programming, “Python Crash Course” by Eric Matthes offers beginner-friendly introduction. For statistics, “Think Stats” by Allen Downey teaches statistical concepts through Python code. For machine learning, “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron provides practical, code-focused instruction. These books combine explanation with exercises that build skills progressively.

Choose books that match your current level. Attempting advanced texts before building foundations leads to frustration and inefficient learning. Do not feel obligated to read books cover to cover. Skip chapters covering topics you already understand or are not immediately relevant to your goals.

Interactive platforms like DataCamp, Codecademy, and Kaggle Learn provide hands-on learning through exercises you complete in your browser. These platforms excel at teaching tool usage and syntax through immediate practice. They work well for learning specific libraries like pandas or matplotlib, where you need to develop facility with particular functions and methods.

However, interactive platforms often provide less depth than books or courses, focusing on mechanics rather than conceptual understanding. Use them to build practical skills but supplement with resources that develop deeper comprehension.

YouTube tutorials and video series offer free instruction on virtually every data science topic. Channels like StatQuest explain statistical concepts with exceptional clarity through visual explanations. Corey Schafer’s Python tutorials provide thorough, accessible instruction on programming. Video learning works particularly well for visual learners or when you want to see someone work through problems in real time.

The challenge with YouTube learning is finding high-quality content among vast quantities of variable-quality videos. Look for creators with strong track records, clear explanations, and production quality that makes content easy to follow. Avoid tutorial hell where you watch videos passively without practicing concepts yourself.

Data science bootcamps provide intensive, structured learning with instructor support and career services. Bootcamps condense learning into weeks or months of focused study, often with curricula designed specifically to prepare students for job searches. They provide accountability, community, and direct access to instructors who can answer questions.

Bootcamps require significant financial and time investment. Before committing, research carefully to understand what specific bootcamp offers, what outcomes graduates achieve, and whether the cost justifies potential benefits. Some bootcamps offer income share agreements where you pay only after finding employment, reducing upfront financial risk.

University degrees including online master’s programs in data science or analytics provide comprehensive education with recognized credentials. Degrees signal commitment and provide structured, rigorous learning. They also offer access to alumni networks and university career services.

However, degrees require substantial time and money, often taking one to two years and costing tens of thousands of dollars. For breaking into data science, degrees are not necessary though they can help. Evaluate whether credential value justifies the investment for your specific situation.

Regardless of which resources you choose, active learning beats passive consumption. Work through exercises yourself rather than just watching or reading. Build projects that apply what you learn. Explain concepts to others, whether through blog posts, discussions in forums, or teaching friends. This active engagement transforms information into genuine understanding and skill.

Combine resources strategically rather than expecting any single resource to teach you everything. Use structured courses for foundation and progression, books for deep dives into topics you need to understand thoroughly, interactive platforms for building facility with specific tools, videos for visual explanations of challenging concepts, and projects to integrate everything into practical skills.

Track your learning to maintain motivation and identify gaps. Keep notes on concepts you have studied, maintain a list of skills you want to develop, and regularly assess your progress. This metacognitive awareness helps you direct your learning effectively rather than following resources aimlessly.

Join communities of learners for support, accountability, and shared resources. Online forums, local meetups, and study groups connect you with others on similar journeys. These connections provide motivation during challenging periods, expose you to different perspectives and approaches, and create networks that may lead to opportunities.

Getting Your First Data Science Job

Landing your first data science role with no professional experience requires strategic positioning, persistent effort, and realistic expectations about the job search process. Understanding what employers seek and how to demonstrate those qualities despite lacking traditional credentials increases your chances of success.

Start by recognizing that entry-level data science roles vary dramatically across companies. Some organizations have junior data scientist positions designed for recent graduates or career changers with mentorship and training built in. Others seek data scientists who can work independently from day one. Target roles that match your current skill level rather than applying indiscriminately to any position with “data scientist” in the title.

Look for job titles beyond just “data scientist” that might represent accessible entry points. Data analyst roles often require less advanced machine learning knowledge while still involving substantial data work. Business intelligence analyst positions focus on reporting and visualization, playing to communication strengths. Analytics engineer roles blend data analysis with technical implementation. Junior machine learning engineer positions may be more accessible than senior data scientist roles. Each of these can provide entry into data-focused work and pathways to pure data science roles later.

Tailor your resume to emphasize skills and projects over credentials you lack. Your resume should highlight technical skills you have developed including Python programming, statistical analysis, machine learning, data visualization, and SQL. List specific libraries and tools you can use such as pandas, scikit-learn, matplotlib, and others relevant to data science. Include your portfolio projects prominently, treating them like professional experience with descriptions of problems you solved, approaches you used, and results you achieved.

Your resume summary or objective should acknowledge your career transition while emphasizing your commitment and capability. Something like “Self-taught data scientist with strong foundation in Python, statistics, and machine learning developed through intensive self-study and portfolio projects. Seeking entry-level data science role where I can apply analytical skills and technical capabilities to solve real business problems while continuing to grow expertise” clearly communicates your situation and enthusiasm.

Write compelling cover letters that tell your story and connect your background to specific roles. Explain what drew you to data science, how you built skills systematically despite lacking formal background, and why you are interested in the particular company and role. Reference specific aspects of the job description and explain how your skills and projects prepare you to contribute. Personalized cover letters require more effort than generic applications but dramatically increase your chances of getting responses.

Leverage every possible advantage in your job search. Apply to roles through referrals whenever possible, as applications submitted by internal employees receive far more attention than general applications. Attend data science meetups and conferences to network with practitioners who might know about openings. Participate in online communities where people share job opportunities. Contact data scientists at companies you admire for informational interviews that might lead to referrals.

Consider contract work or internships as entry points. Short-term projects build experience and expand your portfolio while providing income. Some companies hire contractors as trial periods before making full-time offers. Internships designed for career changers exist at some organizations and provide structured entry points into the field.

Look for opportunities at companies building data science capabilities rather than those with mature data science teams. Organizations in early stages of data science adoption may be more flexible about credentials and more willing to invest in promising candidates. You might find opportunities at startups, small to medium enterprises, or traditional companies beginning to invest in analytics.

Target roles where your unique background provides differentiation. If you have domain expertise in healthcare, pursue healthcare analytics roles. If you understand retail operations, look at retail data science positions. This domain knowledge compensates for lack of data science experience and makes you valuable in ways purely technical candidates are not.

Prepare thoroughly for interviews by practicing common data science interview questions. Expect questions about statistics like “Explain what a p-value means” or “What is the central limit theorem?” Be ready to discuss machine learning concepts such as the bias-variance tradeoff, overfitting, or how different algorithms work. Practice explaining your portfolio projects in depth, preparing to discuss technical decisions, challenges you faced, and lessons you learned.

Many data science interviews include coding exercises or take-home assignments. Practice solving data analysis problems with Python, working through exercises on platforms like LeetCode, HackerRank, or stratascratch. Time yourself solving problems to build comfort with coding under pressure. Review your solutions to understand better approaches.

Case study interviews test your ability to approach business problems analytically. Practice breaking down vague questions into well-defined analytical problems. When asked “How would you reduce customer churn?”, walk through understanding what data is available, what features might predict churn, how you would validate a model, and how the organization would use predictions. These structured responses demonstrate analytical thinking even when you cannot provide technical implementation details.

Be honest in interviews about your experience level while confidently presenting your strengths. Acknowledge that you are early in your data science journey but emphasize your systematic learning, strong foundational skills, proven ability to complete projects, and enthusiasm for continued growth. Frame your lack of professional experience as an opportunity, explaining that you bring fresh perspective and eagerness to learn without outdated assumptions.

Prepare thoughtful questions to ask interviewers. Inquire about what success looks like in the role, what projects the team is working on, what tools and technologies they use, and what mentorship or learning opportunities exist. These questions show genuine interest and help you assess whether positions would support your continued development.

Expect rejection and use it constructively. Many applications will go unanswered. Interviews will not always convert to offers. This reflects the competitive entry-level market, not your inadequacy. Learn from each interview experience, note what questions challenged you, and use that information to strengthen your preparation.

Track your applications systematically, noting where you applied, interview dates, and outcomes. This organization helps you follow up appropriately and gives you data about what strategies work. If you are not getting interviews, revise your resume or target different types of roles. If you reach interviews but not offers, focus on interview preparation and technical skills.

Persist through the job search with realistic expectations. Finding your first data science role commonly takes three to six months of active searching, sometimes longer. This timeline is normal for career changers entering competitive fields. Maintain your learning during the job search, continuing to build skills and portfolio projects. Each week of searching makes you more qualified and better prepared.

Staying Motivated Through the Journey

Breaking into data science from zero is a marathon, not a sprint. Maintaining motivation through months of learning and job searching requires intentional strategies that sustain your commitment during inevitable challenging periods.

Set clear, achievable milestones that break your overall goal into manageable steps. Rather than focusing only on the distant objective of landing a data science job, create intermediate goals like completing an introductory Python course this month, building your first machine learning model by a specific date, finishing a portfolio project within six weeks, or applying to ten jobs per week. These concrete milestones provide regular wins that maintain momentum.

Track your progress visibly through a learning journal, checklist, or progress chart. Seeing accumulated accomplishments reminds you how far you have come during moments when progress feels slow. Looking back at concepts that once confused you but now feel clear demonstrates growth that daily effort makes difficult to perceive.

Celebrate achievements along the way, not just the ultimate goal. Completing a challenging course deserves recognition. Successfully debugging complex code warrants celebration. Finishing a portfolio project is an accomplishment. Acknowledging these victories maintains positive motivation and makes the journey more enjoyable.

Connect with a community of fellow learners who understand your challenges and can provide support. Join online forums, attend local meetups, or form study groups with others pursuing similar goals. These connections provide accountability when motivation wanes, different perspectives when you feel stuck, and camaraderie that makes learning more social and less isolating.

Maintain balance to avoid burnout. While dedication is important, trying to study every waking hour quickly leads to exhaustion and diminishing returns. Schedule regular breaks, maintain physical exercise, preserve social connections, and engage in hobbies unrelated to data science. These activities recharge your mental energy and prevent the resentment that comes from feeling data science has consumed your entire life.

When you hit frustration with difficult concepts or stubborn code, remember that struggle is productive learning, not a sign of inadequacy. Everyone who becomes competent in data science worked through similar challenges. The difference between those who succeed and those who give up is persistence through difficult periods.

Vary your learning activities to maintain engagement. If you feel bored with video courses, switch to working on projects. If coding becomes tedious, read about theoretical concepts. If statistics feels abstract, apply it to real data. This variety keeps learning fresh and engages different aspects of your intelligence.

Remind yourself regularly why you started this journey. What attracted you to data science? What do you hope to achieve? Reconnecting with your motivation helps you push through periods when learning feels like an obligation rather than an opportunity.

Seek inspiration from others who successfully transitioned into data science from non-traditional backgrounds. Many data scientists started their careers in completely different fields and navigated similar journeys to yours. Their stories demonstrate that what you are attempting is achievable and provide concrete examples of successful transitions.

Be compassionate with yourself when progress feels slow or when you make mistakes. Learning complex new skills inevitably involves confusion, errors, and setbacks. Treating yourself with kindness rather than harsh judgment maintains the positive mindset that sustains long-term effort.

Focus on the process and daily habits rather than fixating on the end goal. Commit to studying for a specific amount of time each day rather than worrying about how long until you are job-ready. This process focus keeps you grounded in what you can control, your daily effort, rather than what you cannot, the exact timeline to employment.

Conclusion: Your Path Forward

Breaking into data science with no experience is challenging but entirely achievable through strategic learning, dedicated effort, and persistent job searching. Thousands of people have successfully made this transition before you by following approaches similar to what this guide outlines. What separates those who succeed from those who give up is not innate talent or special advantages but rather sustained commitment and systematic skill-building over time.

Your journey begins by building solid foundations in programming, statistics, and data manipulation. These fundamentals support everything else you will learn and use in data science. Take time to genuinely understand core concepts rather than rushing through basics to reach more exciting topics. The investment in foundations pays enormous dividends as you progress to machine learning and more advanced techniques.

Develop your skills through hands-on practice with real data and meaningful projects. Reading about data science or watching tutorials provides knowledge, but building actual projects develops capability. Your portfolio projects become the evidence that compensates for your lack of professional experience, demonstrating to employers that you can do data science work effectively despite your unconventional path into the field.

Approach your learning strategically by choosing appropriate resources, maintaining consistent study habits, and tracking your progress. The abundance of free and affordable learning materials means that financial barriers need not stop you. What you need is commitment to systematic learning over an extended period, typically six months to two years depending on your starting point and available time.

Navigate your job search by positioning yourself thoughtfully, targeting appropriate roles, and leveraging every advantage including networking, portfolio projects, and persistence. Entry-level data science roles are competitive, but candidates who can demonstrate genuine capability through projects and skills stand out from those with only credentials or coursework.

Maintain motivation through the journey by setting achievable milestones, connecting with communities, celebrating progress, and remembering why you chose this path. The months of learning and job searching test your commitment, but they also build the resilience and problem-solving skills that will serve you throughout your data science career.

Your path forward is clear. Begin learning Python programming today. Build statistical knowledge in parallel. Start working with real datasets as soon as you have basic skills. Create portfolio projects that showcase your developing capabilities. Engage with communities of learners and practitioners. Prepare thoroughly for your job search. Apply persistently to appropriate roles. Learn from every experience, whether success or setback.

Breaking into data science from zero is not a matter of if but when, assuming you maintain consistent effort and strategic direction. The timeline varies for different people based on their starting knowledge, available time, and learning pace, but the destination is reachable for anyone willing to invest the necessary work.

Begin today with one small step. Enroll in an introductory Python course. Download a dataset and create your first visualization. Attend a local data science meetup. Join an online community of learners. Each action, however small, moves you forward on the path from complete beginner to employed data scientist. The journey of a thousand miles begins with a single step, and your journey into data science begins right now.