Reinforcement Learning (RL) is a machine learning paradigm where an agent learns to make decisions by interacting with an environment to achieve a specific goal. The core idea of reinforcement learning is that the agent takes actions in an environment, receives feedback in the form of rewards or penalties, and then adjusts its actions to maximize cumulative rewards over time. Unlike supervised learning, which relies on labeled data, reinforcement learning involves learning from the consequences of actions, making it especially well-suited for tasks where real-time feedback and adaptive strategies are essential.

In reinforcement learning, the agent’s objective is to find an optimal policy—a set of rules for selecting actions based on the current state—to maximize the long-term reward. By exploring different actions and learning from the outcomes, the agent can discover the best course of action for each situation it encounters. Reinforcement learning has gained prominence for its effectiveness in complex, dynamic environments, such as games, robotics, autonomous vehicles, and personalized recommendations.

This article explores the foundational concepts, types, advantages, and limitations of reinforcement learning. We’ll also cover how it differs from other learning paradigms like supervised and unsupervised learning, highlighting the unique features that make RL effective for sequential decision-making tasks.

Key Concepts in Reinforcement Learning

To understand reinforcement learning, it’s important to explore several foundational concepts that define how this approach works, including agents, environments, states, actions, rewards, and policies.

1. Agent and Environment

In reinforcement learning, the agent is the learner or decision-maker, while the environment represents everything that the agent interacts with to achieve its goals. The agent’s objective is to take actions within this environment to maximize its cumulative rewards.

- Example: In a game like chess, the agent is the AI playing the game, and the environment includes the chessboard and pieces. The agent’s goal is to make moves that increase its chances of winning the game.

2. States, Actions, and Rewards

The interaction between the agent and the environment occurs through states, actions, and rewards.

- States: The current situation of the environment that the agent can observe. In reinforcement learning, states can be fully or partially observable, depending on whether the agent has complete information about the environment.

- Actions: The choices available to the agent at any given state. Each action influences the environment, causing it to transition to a new state.

- Rewards: The feedback the agent receives after taking an action. Positive rewards indicate desirable outcomes, while negative rewards (penalties) discourage certain actions. The agent’s goal is to maximize cumulative rewards over time.

- Example: In a robot navigation task, the state could be the robot’s position in the room, actions might include moving forward or turning, and rewards could be assigned for reaching a target location or avoiding obstacles.
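The cycle of observing a state, choosing an action, and receiving a reward can be sketched with a toy environment. `CorridorEnv` below is a hypothetical one-dimensional world invented for illustration (it does not come from any library), and the reward values are arbitrary assumptions: a small penalty per step and a bonus at the goal.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: start at cell 0, goal at the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        """Put the agent back at the start and return the initial state."""
        self.position = 0
        return self.position

    def step(self, action):
        """Apply an action (0 = left, 1 = right); return (state, reward, done)."""
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # assumed values: step penalty, goal bonus
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total (undiscounted) reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    """Pick left or right uniformly at random, ignoring the state."""
    return random.choice([0, 1])


def always_right(state):
    """Always move toward the goal."""
    return 1
```

With the default corridor of five cells, `run_episode(CorridorEnv(), always_right)` collects three step penalties and one goal bonus, for a total of about 0.7. A random policy usually takes longer and therefore earns less.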

3. Policy and Value Functions

A policy defines the agent’s strategy for choosing actions in each state. It is often represented as a probability distribution over actions for each state, indicating the likelihood of selecting each action. The policy guides the agent’s behavior and evolves as it learns from interactions with the environment.

- Example: In a video game, an agent’s policy might include strategies for when to attack or defend based on the opponent’s position and health.

The value function estimates the expected long-term reward for each state or state-action pair, helping the agent understand which states or actions are beneficial over the long term. There are two main types of value functions:

- State Value Function (V): Represents the expected cumulative reward from a specific state onward, assuming the agent follows a particular policy.

- Action Value Function (Q): Represents the expected cumulative reward for taking a specific action in a given state and following a particular policy thereafter.

These value functions provide insights into the effectiveness of different states and actions, helping the agent optimize its policy.
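Both value functions are expectations of the same quantity: the sum of future rewards, usually discounted so that nearer rewards count more. As a minimal sketch, assuming a discount factor `gamma` between 0 and 1 and helper names invented here for illustration, the return of a recorded episode and a simple sample-based estimate of V could be computed like this:

```python
def discounted_return(rewards, gamma=0.99):
    """Return sum over t of gamma**t * rewards[t], computed in one backward pass."""
    g = 0.0
    for reward in reversed(rewards):
        g = reward + gamma * g
    return g


def monte_carlo_value(episodes, gamma=0.99):
    """Estimate V(start state) as the average discounted return.

    `episodes` is a list of reward sequences, each collected by following
    the same policy from the same start state.
    """
    returns = [discounted_return(rewards, gamma) for rewards in episodes]
    return sum(returns) / len(returns)
```

For instance, `discounted_return([1, 1, 1], gamma=0.5)` evaluates to 1.75, because the second and third rewards are weighted by 0.5 and 0.25.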

4. Exploration vs. Exploitation

Reinforcement learning involves a balance between exploration (trying new actions to discover their rewards) and exploitation (choosing actions that are known to yield high rewards). If an agent always exploits known actions, it may miss better strategies, while an agent that explores too much keeps spending time on actions it already knows to be poor and collects less reward. The agent must strike a balance to maximize rewards effectively.

- Example: In a maze-solving task, the agent might initially explore different paths to find the shortest route. Once it has identified the optimal path, it can exploit this knowledge to solve the maze quickly in subsequent trials.

Balancing exploration and exploitation is crucial for effective reinforcement learning, as it enables the agent to gather information about the environment while also achieving high rewards.
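One common way to strike this balance is an epsilon-greedy rule. The sketch below assumes the agent already holds a list of estimated action values for the current state; the function name and the `rng` parameter are illustrative choices rather than part of any library.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: any action
    # exploit: index of the highest estimated value
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon=0.0` the agent always exploits, so `epsilon_greedy([0.1, 0.9, 0.3], 0.0)` returns action 1. With `epsilon=1.0` every choice is random.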

Types of Reinforcement Learning

Reinforcement learning algorithms are typically classified into three main types based on how they approach the problem of finding optimal policies and value functions: Value-Based Methods, Policy-Based Methods, and Actor-Critic Methods.

1. Value-Based Methods

In value-based methods, the agent learns a value function to estimate the expected rewards for each state or state-action pair and uses this information to derive a policy. The agent selects actions based on the value function, aiming to maximize cumulative rewards.

- Example: Q-learning is a popular value-based algorithm where the agent learns a Q-value (action-value) for each state-action pair. It then selects actions based on these Q-values, gradually refining them over time through interaction with the environment.

2. Policy-Based Methods

Policy-based methods focus on directly optimizing the policy without relying on value functions. The agent learns a policy that maps states to actions and iteratively improves this policy to maximize rewards. Policy-based methods are particularly useful for environments with continuous action spaces.

- Example: In robotic control, policy gradient methods allow the agent to learn smooth, continuous actions, such as adjusting the angle or speed of a robotic arm, which is often difficult with value-based approaches.

3. Actor-Critic Methods

Actor-critic methods combine elements of both value-based and policy-based approaches. The actor component learns the policy, while the critic component evaluates the policy by learning a value function. The critic provides feedback to the actor, helping it improve over time. Actor-critic methods are highly versatile and can handle both discrete and continuous action spaces effectively.

- Example: In autonomous driving, actor-critic methods allow the agent to balance between actions like accelerating, braking, and steering, while learning to optimize for safe and efficient navigation.

These three types of reinforcement learning offer flexibility in tackling a wide range of problems, from simple game environments to complex real-world tasks with continuous action spaces.

Advantages of Reinforcement Learning

Reinforcement learning offers several advantages, making it well-suited for applications that involve sequential decision-making, dynamic environments, and adaptive behavior. Here are some key benefits:

1. Adaptability to Complex Environments

Reinforcement learning is highly adaptable, allowing agents to learn from interactions within complex environments where other learning methods may struggle. By observing the outcomes of actions, the agent can learn strategies that optimize performance over time.

- Example: In robotics, RL enables robots to adapt to unfamiliar environments, allowing them to perform tasks like picking up objects, navigating obstacles, and adjusting to dynamic surroundings.

2. No Need for Labeled Data

Unlike supervised learning, which requires labeled data, reinforcement learning relies on feedback from the environment rather than explicit labels. This allows RL to learn directly from experience, making it practical for tasks where labeled data is unavailable or costly to obtain.

- Example: In autonomous driving, labeled data for every possible driving scenario is impractical. RL allows the vehicle to learn safe and effective driving strategies through simulated environments.

3. Effective for Sequential Decision-Making

Reinforcement learning is uniquely suited to tasks involving sequences of decisions, where actions have long-term consequences. This characteristic makes RL effective in scenarios where the optimal strategy requires considering both immediate and future rewards.

- Example: In chess, each move affects the potential outcomes of future moves, making RL an ideal approach for developing strategies that maximize the chance of winning over multiple moves.

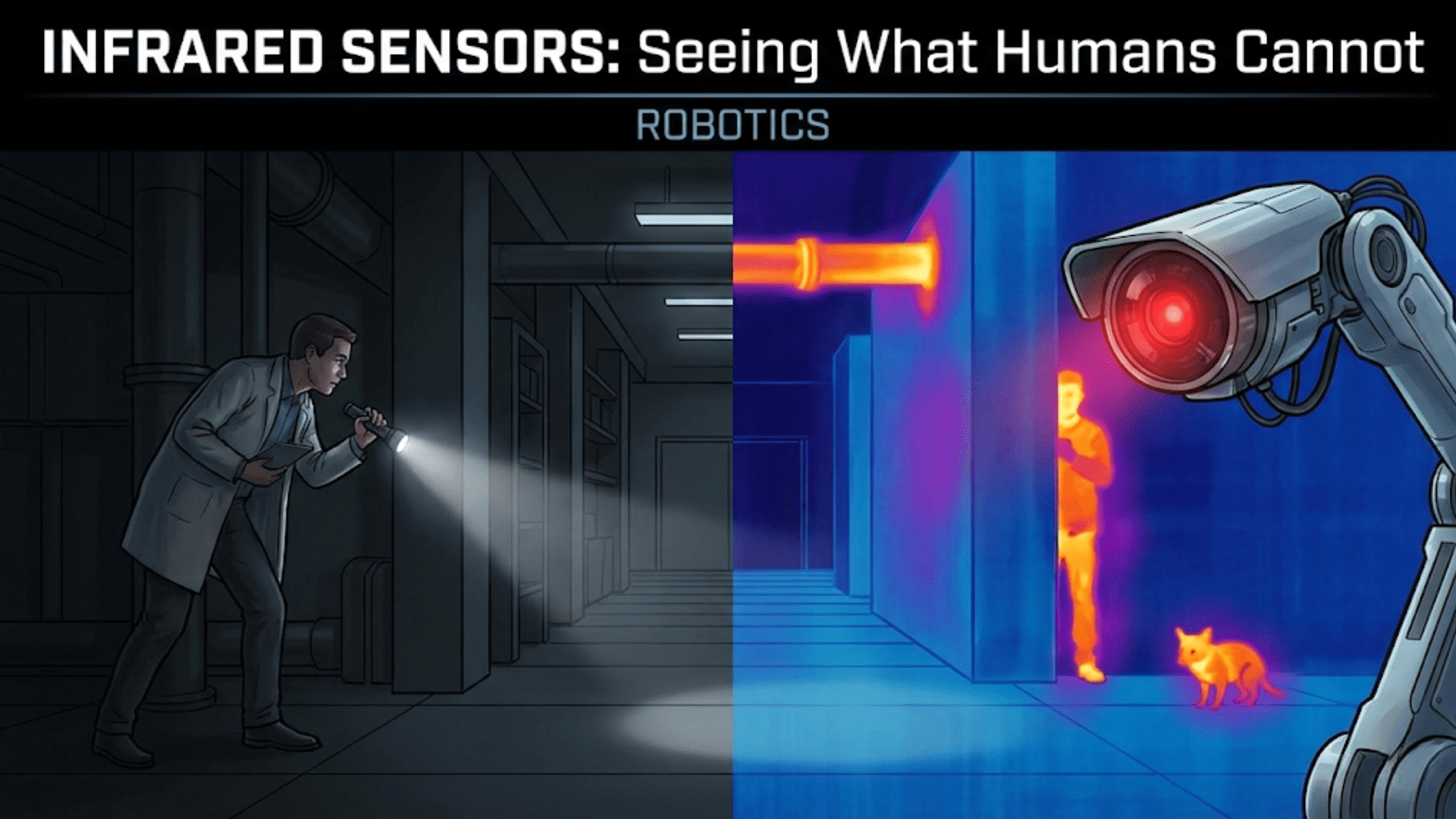

4. Ability to Handle Partial Observability

RL can work in environments where the agent has limited or partial observability, relying on its memory of past states and rewards. This adaptability enables RL agents to perform well even when they don’t have full information about the environment.

- Example: In video games, an RL agent might only see a portion of the game screen at any time, yet it can learn to win by using memory and strategies developed from previous experience.

These advantages make reinforcement learning particularly valuable for applications requiring adaptive, autonomous decision-making in complex, uncertain environments.

Limitations of Reinforcement Learning

Despite its advantages, reinforcement learning also has limitations that can make it challenging to implement effectively in certain situations. Here are some key limitations:

1. High Computational Requirements

RL often requires substantial computational power, as agents may need to explore numerous possible actions and outcomes to find optimal strategies. Training an RL model can be time-consuming, especially in complex environments with large state-action spaces.

- Example: Training a self-driving car in a realistic simulation may require hundreds of hours of computational time to explore different scenarios, increasing costs and complexity.

2. Difficulties with Sparse Rewards

In some tasks, rewards are rare or infrequent, making it challenging for the agent to learn effectively. When rewards are sparse, the agent may struggle to understand which actions contribute to success, leading to slow learning progress.

- Example: In a maze-solving task, the agent may only receive a reward upon reaching the exit. Sparse rewards make it difficult for the agent to learn which intermediate steps are beneficial, potentially hindering optimal learning.

3. Risk of Suboptimal Policies

If the agent explores inefficient or harmful actions, it may settle on suboptimal policies, especially if it overexploits short-term rewards without considering long-term outcomes. Balancing exploration and exploitation is crucial, but achieving this balance can be challenging.

- Example: In a financial trading system, an RL agent might choose short-term profits without recognizing that certain trades could lead to significant losses over time, resulting in a suboptimal trading strategy.

Despite these limitations, reinforcement learning remains a powerful approach for sequential decision-making tasks, and advancements in computational resources and algorithm development are helping to overcome these challenges.

Popular Reinforcement Learning Algorithms

Reinforcement learning encompasses a variety of algorithms, each suited to different types of environments and tasks. Here are some of the most popular RL algorithms and their typical applications.

1. Q-Learning

Q-Learning is a value-based algorithm used in environments with discrete states and actions. It aims to learn the Q-value (action-value) for each state-action pair, which represents the expected cumulative reward of taking an action in a given state and acting optimally afterward. Q-learning builds a Q-table to store these values, allowing the agent to select actions that maximize cumulative rewards.

- Example: In a grid-based game, Q-learning can help an agent navigate from a starting point to a goal by learning the Q-values for each grid cell and action, guiding the agent toward the most rewarding path.
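The core of tabular Q-learning is a single update rule applied after every transition. The sketch below assumes a learning rate `alpha` and a discount factor `gamma` (both values are placeholders) and stores the table in a dictionary keyed by state; the helper names are invented for illustration.

```python
from collections import defaultdict


def make_q_table(n_actions):
    """Q-table that lazily creates a row of zeros for each unseen state."""
    return defaultdict(lambda: [0.0] * n_actions)


def q_learning_update(q_table, state, action, reward, next_state, done,
                      alpha=0.1, gamma=0.99):
    """Apply one Q-learning update in place and return the new Q-value."""
    # Terminal transitions have no future value to bootstrap from
    best_next = 0.0 if done else max(q_table[next_state])
    td_target = reward + gamma * best_next
    q_table[state][action] += alpha * (td_target - q_table[state][action])
    return q_table[state][action]
```

In a grid world, states could be `(row, column)` tuples, so a call might look like `q_learning_update(q, (0, 0), 1, 1.0, (0, 1), False)`.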

2. Deep Q-Networks (DQN)

Deep Q-Networks (DQN) extend Q-learning by using a neural network to approximate the Q-values. This approach allows RL to be applied to environments with large or continuous state spaces, where maintaining a Q-table would be infeasible. DQNs have shown remarkable success in complex tasks, such as playing video games.

- Example: In the game of Pong, a DQN can learn to play at a high level by observing the game screen as pixels and determining actions based on estimated Q-values for each frame.
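In a DQN, the network is trained to match a target built from the observed reward and the best Q-value it predicts for the next state. The sketch below computes those targets in plain Python for a small batch; it assumes the next-state Q-values have already been produced by a network, and the function name is hypothetical. Practical DQN implementations usually take these next-state estimates from a separate, slowly updated target network.

```python
def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Compute r + gamma * max over a' of Q(s', a') for a batch of transitions.

    next_q_values[i] holds the Q-value estimates for every action in the
    i-th next state. Terminal transitions get no bootstrap term.
    """
    targets = []
    for reward, next_q, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(next_q)
        targets.append(reward + bootstrap)
    return targets
```

For example, `dqn_targets([1.0, 0.0], [[0.5, 2.0], [3.0, 1.0]], [False, True], gamma=0.5)` gives `[2.0, 0.0]`: the first target bootstraps from the best next action, while the second belongs to a terminal step.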

3. Policy Gradient Methods

Policy Gradient Methods directly optimize the policy by adjusting its parameters in the direction that increases expected cumulative reward. Because they parameterize the policy itself, typically as a probability distribution over actions, they are well suited to continuous action spaces, where value-based approaches struggle because they must find the maximum over infinitely many actions.

- Example: In a robotic control task, a policy gradient algorithm can learn smooth and continuous actions, such as adjusting a robotic arm’s angle and speed, which is challenging for value-based methods.
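To make the idea concrete, here is a minimal sketch of REINFORCE, one of the simplest policy gradient algorithms, on a hypothetical bandit problem with fixed payouts per arm. It uses a softmax policy over discrete actions to keep the code short; for continuous actions the policy would typically output the parameters of a distribution such as a Gaussian instead. All names and hyperparameters below are assumptions for illustration.

```python
import math
import random


def softmax(preferences):
    """Convert action preferences into a probability distribution."""
    top = max(preferences)  # subtract the max for numerical stability
    exps = [math.exp(p - top) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(preferences, action, reward, learning_rate=0.1):
    """One REINFORCE update for a softmax policy.

    The gradient of log pi(action) with respect to preference k is
    (1 if k == action else 0) - pi(k).
    """
    probs = softmax(preferences)
    return [
        pref + learning_rate * reward * ((1.0 if k == action else 0.0) - probs[k])
        for k, pref in enumerate(preferences)
    ]


def train_bandit(arm_rewards, steps=2000, seed=0):
    """Train on a hypothetical bandit whose arms pay fixed rewards."""
    rng = random.Random(seed)
    preferences = [0.0] * len(arm_rewards)
    for _ in range(steps):
        probs = softmax(preferences)
        action = rng.choices(range(len(probs)), weights=probs)[0]
        preferences = reinforce_step(preferences, action, arm_rewards[action])
    return softmax(preferences)
```

Calling `train_bandit([0.0, 1.0])` returns the learned action probabilities, which should shift strongly toward the second arm because only that arm ever produces a reward.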

4. Actor-Critic Methods

Actor-Critic Methods combine elements of both value-based and policy-based approaches. The actor learns the policy, while the critic estimates the value function. The critic evaluates the actions suggested by the actor and provides feedback, helping the actor refine the policy over time. Actor-critic methods are effective in handling large state spaces and can balance exploration and exploitation efficiently.

- Example: In autonomous driving, an actor-critic model can help a vehicle learn actions like accelerating, braking, and steering while also learning to value safe and efficient driving behaviors over time.
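The feedback the critic gives the actor is often a one-step temporal-difference (TD) error: how much better or worse the outcome was than the critic expected. The sketch below shows that signal driving both updates for a single transition. It assumes the gradient of the log-probability of the chosen action is supplied by the caller, and the function names and learning rates are illustrative only.

```python
def td_error(reward, value, next_value, done, gamma=0.99):
    """One-step TD error: r + gamma * V(s') - V(s), with V(s') = 0 at episode end."""
    bootstrap = 0.0 if done else gamma * next_value
    return reward + bootstrap - value


def actor_critic_step(value, log_prob_grad, reward, next_value, done,
                      actor_lr=0.01, critic_lr=0.1, gamma=0.99):
    """Return (new_value, actor_parameter_deltas) for one transition."""
    delta = td_error(reward, value, next_value, done, gamma)
    # Critic: move the value estimate toward the TD target
    new_value = value + critic_lr * delta
    # Actor: scale the log-probability gradient by the critic's feedback
    actor_deltas = [actor_lr * delta * g for g in log_prob_grad]
    return new_value, actor_deltas
```

A positive TD error makes the chosen action more likely in that state, and a negative one makes it less likely.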

5. Proximal Policy Optimization (PPO)

Proximal Policy Optimization (PPO) is a popular policy-based algorithm that improves upon traditional policy gradient methods. PPO maintains a balance between exploring new policies and staying close to the current policy by limiting the changes made during each update. This stabilization technique makes PPO suitable for high-dimensional, continuous control tasks.

- Example: In advanced video game AI, PPO allows an agent to make complex decisions without overreacting to each update, helping it develop stable and effective strategies for complex games.
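The way PPO limits each update can be seen in its clipped surrogate objective. For one sample, it compares the probability ratio between the new and old policy with a clipped version of that ratio and keeps the more pessimistic value. The sketch below is a per-sample illustration, with a clip range of 0.2 assumed as a typical default; real implementations average this over a batch and differentiate it.

```python
def ppo_clipped_objective(ratio, advantage, clip_epsilon=0.2):
    """Clipped surrogate for one sample.

    ratio is pi_new(a | s) / pi_old(a | s); the result is
    min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A).
    """
    clipped_ratio = max(1.0 - clip_epsilon, min(ratio, 1.0 + clip_epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)
```

If an action with a positive advantage has already become 50% more likely (`ratio=1.5`), the objective is capped as though the ratio were 1.2, so the update gains nothing from pushing the policy further in that direction.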

These algorithms each have unique strengths and are suited for different types of environments, from simple grid-based tasks to complex robotic control and game-playing scenarios.

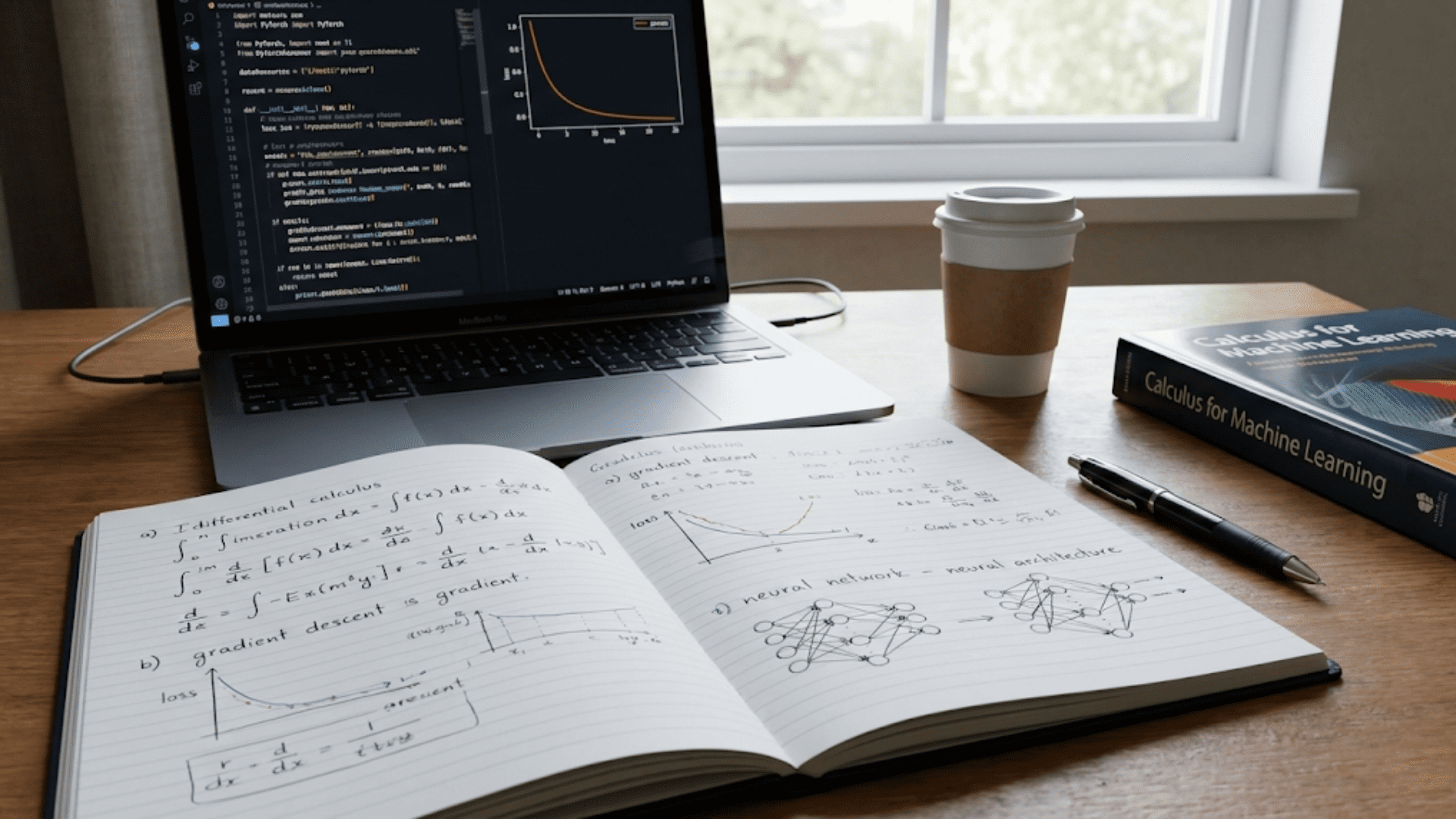

Step-by-Step Guide to Implementing a Reinforcement Learning Model

Building a reinforcement learning model involves several steps, from setting up the environment to selecting the algorithm, training the model, and evaluating its performance. Here’s a guide to help you implement an effective RL model.

Step 1: Define the Environment

The environment in RL defines the space in which the agent operates, including possible states, actions, and rewards. The environment can be custom-built or selected from pre-existing libraries, such as OpenAI Gym, which provides standard environments for testing RL algorithms.

- Example: For a cart-pole balancing task, OpenAI Gym’s `CartPole-v1` environment simulates a pole on a cart, where the agent’s actions are moving the cart left or right to keep the pole balanced.

```python
import gym

# Load CartPole environment
env = gym.make("CartPole-v1")
```

Step 2: Define the Agent and Policy

The agent interacts with the environment by following a policy that determines its actions based on the current state. Depending on the algorithm chosen, this policy can be a Q-table, a neural network, or a probabilistic function. In value-based methods, the agent will use a Q-table or neural network to approximate Q-values, while in policy-based methods, the policy may be represented by a neural network.

- Example: In a DQN model, a neural network approximates the Q-values for each state-action pair. The input to the network is the state, and the output is a set of Q-values, one for each possible action.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Input


# Define a neural network model for DQN
def build_model(state_size, action_size):
    model = Sequential()
    # Explicit input layer: one input per state feature
    model.add(Input(shape=(state_size,)))
    model.add(Dense(24, activation="relu"))
    model.add(Dense(24, activation="relu"))
    # Linear output: one unbounded Q-value estimate per action
    model.add(Dense(action_size, activation="linear"))
    model.compile(loss="mse", optimizer="adam")
    return model
```

Step 3: Implement the Reward Structure

Define the reward structure that guides the agent’s learning. Rewards should encourage desired behaviors and discourage undesirable ones. Designing an effective reward structure is crucial, as poorly designed rewards can lead to suboptimal strategies or unintended behaviors.

- Example: In a robot navigation task, rewards could include positive feedback for reaching the target location and negative feedback for colliding with obstacles. This structure encourages the robot to find the safest, shortest path.
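A reward structure like the one in this example can be written as a small function. The sketch below is hypothetical: the function name, the flags it receives, and the numeric values are all assumptions chosen for illustration, including a small per-step penalty that nudges the robot toward shorter paths.

```python
def navigation_reward(reached_target, collided, step_penalty=-0.01,
                      target_reward=1.0, collision_penalty=-1.0):
    """Reward for one step of a hypothetical robot navigation task."""
    if collided:
        return collision_penalty   # discourage hitting obstacles
    if reached_target:
        return target_reward       # encourage reaching the goal
    return step_penalty            # mild pressure to finish quickly
```

The relative sizes matter more than the exact numbers. If the step penalty were larger than the collision penalty, for instance, the agent could learn that crashing early is the cheapest way to end an episode.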

Step 4: Train the Model

Training the agent involves running multiple episodes, where the agent interacts with the environment and updates its policy based on the rewards received. For value-based methods like DQN, training includes techniques such as experience replay, where past experiences are stored in a memory buffer and sampled randomly to reduce correlation between consecutive samples and improve learning stability.

- Example: In DQN, the agent explores the environment, and each transition (state, action, reward, next state, and whether the episode ended) is stored in a memory buffer. Periodically, for example after each episode, a mini-batch of experiences is sampled from the buffer, and the Q-values are updated based on these samples.

```python
from collections import deque
import random

# Experience replay buffer (oldest experiences are dropped beyond 2000 entries)
memory = deque(maxlen=2000)


# Store experiences in the buffer
def remember(state, action, reward, next_state, done):
    memory.append((state, action, reward, next_state, done))


# Sample mini-batch from memory
def replay(batch_size):
    # random.sample raises ValueError if the buffer holds fewer items
    if len(memory) < batch_size:
        return
    minibatch = random.sample(memory, batch_size)
    for state, action, reward, next_state, done in minibatch:
        # Perform Q-learning update (omitting full code for brevity)
        pass
```

Step 5: Evaluate the Model

Evaluate the model’s performance by measuring cumulative rewards over several episodes. Evaluations help determine if the agent has learned an effective policy or if further training and optimization are required. Metrics such as cumulative reward, success rate, and episode length provide insights into the model’s behavior.

- Example: In a cart-pole balancing task, the model’s performance could be evaluated by tracking the number of steps the pole remains balanced. A higher step count indicates better policy learning.
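An evaluation loop along these lines could be sketched as follows. To stay self-contained, the code uses a hypothetical stand-in environment whose `reset()` returns a state and whose `step()` returns `(state, reward, done)`. Real Gym environments return additional values from these calls, so the unpacking would need to be adapted to the installed version.

```python
class FixedLengthEnv:
    """Hypothetical stand-in: +1 reward per step, ends after `horizon` steps."""

    def __init__(self, horizon=20):
        self.horizon = horizon
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t

    def step(self, action):
        self.t += 1
        return self.t, 1.0, self.t >= self.horizon


def evaluate(env, policy, episodes=10, max_steps=500):
    """Return (mean episode reward, mean episode length) for a fixed policy."""
    total_rewards, lengths = [], []
    for _ in range(episodes):
        state = env.reset()
        episode_reward, steps = 0.0, 0
        for _ in range(max_steps):
            state, reward, done = env.step(policy(state))
            episode_reward += reward
            steps += 1
            if done:
                break
        total_rewards.append(episode_reward)
        lengths.append(steps)
    return sum(total_rewards) / episodes, sum(lengths) / episodes
```

During evaluation the policy should act greedily (no exploration), so the numbers reflect what the agent has learned rather than random actions.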

Step 6: Fine-Tuning and Hyperparameter Optimization

Fine-tuning the RL model involves adjusting hyperparameters, such as the learning rate, exploration rate, and discount factor, to improve learning efficiency and stability. Hyperparameter tuning helps strike a balance between exploration and exploitation, and can significantly impact the model’s performance.

- Example: In DQN, fine-tuning the exploration rate (epsilon) allows the agent to explore enough at the beginning of training and gradually shift to exploitation as it gains confidence in its actions.

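```python
# Adjust exploration rate for epsilon-greedy policy
epsilon = 1.0  # assumed starting value: act fully at random at first

# Apply once per episode: decay by 0.5%, but never below a floor of 0.1
epsilon = max(0.1, epsilon * 0.995)
```

By following these steps, practitioners can build effective reinforcement learning models that learn optimal strategies for various tasks, from simple navigation challenges to complex game environments.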

Practical Considerations for Optimizing Reinforcement Learning

Reinforcement learning models often face unique challenges, and several practical considerations can help ensure they operate efficiently and accurately.

1. Reward Shaping

Reward shaping involves refining the reward structure to make learning more effective. By providing intermediate rewards that guide the agent toward the goal, reward shaping can reduce the learning time required, especially in tasks where rewards are sparse.

- Best Practice: Introduce small rewards for reaching subgoals, such as rewarding a robot for approaching an object even if it hasn’t reached the final destination, to encourage gradual progress.
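One well-studied form of reward shaping is potential-based shaping, which adds the change in a "potential" function between consecutive states. Shaping terms of this form are known to leave the optimal policy unchanged, which guards against the agent learning to farm the intermediate rewards. The sketch below assumes 2-D grid positions and uses negative Manhattan distance to the goal as the potential; both helper names are hypothetical.

```python
def shaped_reward(reward, potential, state, next_state, gamma=0.99):
    """Add the potential-based shaping term gamma * phi(s') - phi(s)."""
    return reward + gamma * potential(next_state) - potential(state)


def negative_distance(goal):
    """Build a potential that rises toward 0 as a 2-D position nears `goal`."""
    def potential(position):
        return -(abs(position[0] - goal[0]) + abs(position[1] - goal[1]))
    return potential
```

With `phi = negative_distance((3, 3))` and `gamma=1.0`, a step from `(0, 0)` to `(1, 0)` earns a shaping bonus of 1.0, and the reverse step costs 1.0, so progress toward the goal is rewarded even when the environment itself pays nothing.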

2. Addressing the Exploration-Exploitation Dilemma

Balancing exploration and exploitation is essential in RL, as too much exploration can slow down learning, while too little can prevent the model from finding the optimal policy. Techniques like epsilon-greedy policies or entropy-based exploration can help achieve this balance.

- Best Practice: Start with a high exploration rate and gradually reduce it as the model gains confidence. Use decay functions for parameters like epsilon to help the agent shift from exploration to exploitation over time.

3. Managing Computational Resources

Reinforcement learning models can be computationally intensive, especially with complex environments and large state spaces. Techniques like experience replay, parallelized training, and hardware acceleration can help manage resource usage and speed up training.

- Best Practice: Use experience replay to store and reuse past experiences, which improves sample efficiency because each interaction can contribute to many updates. Cap the buffer size to keep memory use bounded. For large-scale tasks, consider running simulations in parallel.

4. Preventing Overfitting to the Training Environment

Overfitting is a concern in RL, particularly when the agent is trained in a limited environment. Testing the agent in varied scenarios or introducing random elements in the training environment can improve robustness and help prevent overfitting.

- Best Practice: Use domain randomization or environmental variations during training to expose the agent to diverse situations, enhancing its adaptability and generalizability.
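A simple form of domain randomization is to perturb the physical parameters of the simulator at the start of every episode. The sketch below assumes the environment can be configured from a dictionary of parameters; the function name, the parameter names in the usage note, and the 20% range are all illustrative assumptions.

```python
import random


def sample_env_params(rng, base_params, relative_range=0.2):
    """Scale each base parameter by a random factor in [1 - r, 1 + r]."""
    return {
        name: value * rng.uniform(1.0 - relative_range, 1.0 + relative_range)
        for name, value in base_params.items()
    }
```

For a cart-pole simulator this might be called as `sample_env_params(random.Random(0), {"pole_length": 0.5, "cart_mass": 1.0})` before each episode, so the agent never trains on exactly the same dynamics twice.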

5. Monitoring and Debugging Model Behavior

Monitoring the agent’s behavior during training is crucial for understanding its progress and identifying issues. Visualizations, episode summaries, and reward tracking help ensure the model learns as expected and can highlight areas for improvement.

- Best Practice: Regularly log metrics like cumulative rewards, episode length, and success rate. Use visual tools to track progress and debug behaviors that deviate from desired goals.

By addressing these considerations, practitioners can optimize reinforcement learning models for effective, stable, and reliable performance across a range of applications.

Real-World Applications of Reinforcement Learning

Reinforcement learning has proven effective in solving complex, real-world problems that involve sequential decision-making, dynamic environments, and the need for adaptive strategies. Here are some prominent applications of RL across different industries:

1. Robotics and Autonomous Systems

Reinforcement learning is widely used in robotics, enabling robots to learn tasks through trial and error. RL allows robots to adapt to changing environments and develop control policies that maximize efficiency, making it particularly valuable for tasks like navigation, manipulation, and autonomous driving.

- Example: In robotic arm control, RL can help the robot learn to pick up and place objects accurately by experimenting with different grasping angles, speeds, and grips until it finds the optimal technique.

- Example: Autonomous vehicles use RL to navigate roads safely. RL models learn to drive by adjusting speed, steering, and braking based on the environment, improving safety and efficiency.

2. Game AI and Virtual Environments

Reinforcement learning has shown remarkable success in gaming, where agents can interact with virtual environments to learn winning strategies. Games offer controlled environments where RL algorithms can explore strategies, refine tactics, and develop advanced gameplay.

- Example: In March 2016, AlphaGo, developed by DeepMind, defeated Lee Sedol, one of the world’s top Go players, by four games to one. AlphaGo combined deep neural networks, trained on human games and refined through self-play reinforcement learning, with Monte Carlo tree search.

- Example: In video games, RL-based NPCs (non-player characters) can be designed to adapt to player actions, creating more challenging and engaging gameplay experiences.

3. Healthcare and Medical Research

Reinforcement learning is making inroads into healthcare, where it is used to optimize treatment strategies, personalize patient care, and improve diagnostics. RL models help identify optimal treatment paths for various medical conditions by simulating possible interventions and outcomes.

- Example: In personalized medicine, RL is used to adjust dosages of drugs based on real-time patient responses, optimizing treatment effectiveness while minimizing side effects.

- Example: RL is being explored in clinical trial design, where the model learns to recommend patient-specific treatments based on historical trial data, maximizing treatment success rates and reducing trial durations.

4. Finance and Trading

In finance, reinforcement learning is applied to algorithmic trading, portfolio management, and risk assessment. By learning from market data, RL agents can develop strategies that adapt to market fluctuations and maximize profits.

- Example: In high-frequency trading, RL algorithms analyze market data and execute trades in milliseconds, identifying short-term price changes and adjusting trades to capitalize on market conditions.

- Example: RL-based portfolio management helps optimize asset allocation by balancing risks and returns, adjusting the portfolio in response to market trends.

5. Energy Management and Smart Grids

Reinforcement learning is used in energy management to optimize energy production, distribution, and consumption. RL models help smart grids respond to changes in demand and supply, ensuring efficient energy utilization and minimizing costs.

- Example: In renewable energy management, RL can optimize the operation of wind farms and solar panels by adjusting power output based on weather forecasts, grid demand, and storage capacity.

- Example: In building management, RL models learn to adjust heating, cooling, and lighting systems based on occupancy and environmental conditions, maximizing energy efficiency.

These applications illustrate RL’s versatility and effectiveness in dynamic environments requiring adaptive strategies, highlighting its potential to drive innovation across diverse industries.

Future Trends in Reinforcement Learning

As reinforcement learning advances, several trends are shaping its future, expanding its capabilities and making it more accessible for real-world applications.

1. Transfer Learning in RL

Transfer learning, where knowledge gained in one task is applied to another, is becoming increasingly relevant in RL. By leveraging pre-trained models or prior experience, transfer learning reduces training time and enhances performance, especially in related tasks.

- Example: A robot trained to navigate one type of environment (e.g., an office) can apply its knowledge to a similar environment (e.g., a warehouse), adapting faster than learning from scratch.

2. Hierarchical Reinforcement Learning (HRL)

Hierarchical reinforcement learning involves dividing complex tasks into sub-tasks, enabling agents to solve problems in a structured manner. HRL allows agents to develop high-level strategies while handling low-level actions separately, improving learning efficiency and adaptability.

- Example: In warehouse automation, HRL can enable robots to complete multi-step tasks, such as navigating to a shelf, picking an item, and delivering it to a destination, by breaking down each step into manageable sub-tasks.

3. Safe and Explainable RL

As RL finds applications in high-stakes fields like healthcare and finance, there is a growing demand for safe and explainable models. Safe RL focuses on ensuring that agents operate within predefined constraints to prevent unsafe actions, while explainable RL aims to make agents’ decisions more understandable for humans.

- Example: In healthcare, safe RL is crucial to prevent actions that could harm patients. RL models trained with safe constraints help ensure that treatment recommendations align with medical guidelines.

- Example: In finance, explainable RL models allow analysts to understand the reasons behind trading decisions, helping build trust and ensuring compliance with regulatory standards.

4. Multi-Agent Reinforcement Learning (MARL)

Multi-agent reinforcement learning involves training multiple agents that interact within the same environment. This approach is useful for tasks that require collaboration or competition among multiple agents, such as logistics, resource allocation, and traffic management.

- Example: In autonomous vehicle fleets, MARL enables vehicles to communicate and coordinate their movements, reducing traffic congestion and enhancing safety.

5. Integration with Simulation and Digital Twins

Simulation-based RL is gaining traction, especially with the rise of digital twins—virtual replicas of physical systems. Simulations allow RL agents to experiment and learn in risk-free environments, accelerating development for tasks where real-world training would be costly or impractical.

- Example: In manufacturing, RL models trained in digital twin environments help optimize production processes, enabling real-time adjustments without risking damage to equipment.

These trends are advancing reinforcement learning’s capabilities, making it more versatile, interpretable, and applicable across a broader range of real-world challenges.

Best Practices for Deploying Reinforcement Learning Models

Deploying RL models in complex environments requires careful planning and ongoing monitoring. Here are some best practices for ensuring effective, stable RL model deployments.

1. Design a Clear Reward Structure

A well-designed reward structure is crucial for guiding the RL agent’s learning. The reward structure should align with the desired outcomes and discourage unintended behaviors. Regularly review and refine rewards to ensure that the agent learns to optimize for the correct goals.

- Best Practice: Implement intermediate rewards for achieving sub-goals in tasks with sparse rewards, helping the agent understand which actions contribute to long-term success.

2. Test in Simulation Before Real-World Deployment

Training RL agents directly in the real world can be risky, especially for tasks involving safety-critical operations. Using simulations allows agents to learn in a controlled environment before deployment, minimizing risks.

- Best Practice: Conduct thorough simulations of the task environment, using realistic scenarios to ensure the agent can handle various conditions. Once the agent performs well in simulation, carefully transition to real-world deployment with ongoing monitoring.

3. Monitor for Policy Stability and Drift

RL agents trained in dynamic environments may experience policy drift over time, where their learned policy becomes less effective due to changing conditions. Regular monitoring and retraining help maintain policy stability, ensuring the agent continues to perform well as the environment evolves.

- Best Practice: Set up automated performance monitoring with key metrics like cumulative reward, success rate, and safety violations. Trigger retraining if performance drops below acceptable thresholds.
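A monitoring rule of this kind can be as simple as a rolling average compared against a threshold. The class below is a hypothetical sketch: the window size and threshold are placeholders that would need to be chosen per task, and a production setup would track success rate and safety violations in the same way.

```python
from collections import deque


class PerformanceMonitor:
    """Track recent episode rewards and flag when retraining looks necessary."""

    def __init__(self, window=100, threshold=150.0):
        self.rewards = deque(maxlen=window)  # keeps only the latest `window` episodes
        self.threshold = threshold

    def record(self, episode_reward):
        self.rewards.append(episode_reward)

    def rolling_mean(self):
        """Mean reward over the window, or None before any episode is recorded."""
        return sum(self.rewards) / len(self.rewards) if self.rewards else None

    def needs_retraining(self):
        """True once the window is full and its mean is below the threshold."""
        full = len(self.rewards) == self.rewards.maxlen
        return full and self.rolling_mean() < self.threshold
```

Waiting for a full window before raising the flag avoids triggering retraining on one or two unlucky episodes.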

4. Prioritize Safety and Robustness

For applications where safety is critical, ensure that the RL agent is trained with safe exploration techniques and constrained actions to avoid risky behaviors. Use conservative exploration strategies, and consider safety constraints during training to prevent unintended actions.

- Best Practice: Implement constraints on actions that could cause harm or lead to failures. Penalties in the reward discourage unsafe actions but do not rule them out, so pair them with hard limits, such as action masking or constrained policies, that prevent the agent from selecting dangerous actions at all.
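Two simple ways to enforce action constraints are clipping continuous actions to a safe range and masking out disallowed discrete actions before the agent chooses. The helpers below are hypothetical sketches; how the `allowed` mask is computed depends entirely on the application and is assumed to be provided by a separate safety check.

```python
def clip_action(action, low, high):
    """Force a continuous action into the safe interval [low, high]."""
    return max(low, min(action, high))


def masked_greedy_action(q_values, allowed):
    """Pick the highest-valued action among those marked as allowed."""
    candidates = [a for a, ok in enumerate(allowed) if ok]
    if not candidates:
        raise ValueError("no safe action available")
    return max(candidates, key=lambda a: q_values[a])
```

For example, `masked_greedy_action([5.0, 1.0, 3.0], [False, True, True])` returns action 2: action 0 has the highest estimated value but is excluded by the mask.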

5. Make Use of Explainability Tools

Explainability is essential in RL, particularly in regulated fields or when human oversight is required. Explainability tools help stakeholders understand the agent’s decisions, fostering trust and compliance.

- Best Practice: Use tools like SHAP and LIME to analyze and interpret the agent’s policy decisions, providing transparency for complex actions. For high-stakes applications, ensure that explanations are accessible to both technical and non-technical stakeholders.

6. Continuously Improve the Model

RL models may require ongoing updates to adapt to new situations or refine their performance. Consider implementing continuous learning or periodic retraining to maintain the agent’s effectiveness and responsiveness.

- Best Practice: Establish a regular retraining schedule based on observed performance metrics, and update the model when significant changes in the environment or task requirements are detected.

By following these best practices, practitioners can deploy reinforcement learning models that are safe, reliable, and effective, providing value across diverse real-world applications.

The Potential of Reinforcement Learning

Reinforcement learning is a powerful approach to machine learning, uniquely suited to tasks that involve complex decision-making, adaptive strategies, and dynamic environments. Unlike other learning paradigms, RL enables agents to learn through interaction, making it effective for applications where rewards and consequences are experienced over time.

From robotics and healthcare to finance and energy management, reinforcement learning is transforming industries by enabling intelligent, autonomous systems capable of optimizing long-term outcomes. Emerging trends, including transfer learning, hierarchical RL, and explainable RL, are expanding its capabilities and applicability, ensuring that RL can address increasingly complex and high-stakes challenges.

By adhering to best practices—such as designing clear reward structures, testing in simulations, monitoring for policy drift, prioritizing safety, and ensuring explainability—data scientists and engineers can build robust RL models that deliver substantial impact. As technology and research continue to evolve, reinforcement learning will remain a central driver of innovation in AI, creating smarter, more adaptable solutions for the world’s most challenging problems.