Online learning, also known as incremental learning, is a machine learning approach in which the model learns and updates continuously as new data arrives, rather than being trained on a fixed dataset all at once. This method allows the model to adapt dynamically to changing data patterns in real time, making it particularly suitable for applications where data is generated continuously and where immediate responsiveness is crucial. Unlike batch learning, where a model is trained on a static dataset and redeployed periodically, online learning enables models to evolve with each new data point or batch, ensuring that they remain current with the latest trends or patterns.

Online learning is often applied in real-time applications such as financial market predictions, recommendation systems, autonomous vehicles, and spam detection, where adaptability and responsiveness are essential. By learning incrementally, online learning models avoid the computational cost of retraining on entire datasets, focusing only on newly available data to make predictions that reflect the current state of the environment.

This article will provide an in-depth look at the core principles, advantages, and limitations of online learning. We’ll also explore the types of applications that benefit most from this approach and how online learning differs from batch learning.

Key Concepts in Online Learning

To understand how online learning works, it’s important to explore the foundational concepts that define this approach, including how the model processes data incrementally and how it adapts to changing data distributions.

1. Incremental Data Processing

In online learning, the model is designed to process data incrementally, updating its parameters as each new data point or batch arrives. This approach allows the model to learn continuously without needing to retrain on the entire dataset, making it both computationally efficient and adaptable to changing patterns in the data.

- Example: In fraud detection for credit card transactions, an online learning model processes each transaction as it occurs, adjusting to new fraud tactics without retraining from scratch. By learning incrementally, the model remains responsive to emerging threats, providing real-time protection.
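As a minimal sketch of what an incremental update can look like, the snippet below trains a logistic regression one example at a time with stochastic gradient descent. The class name `OnlineLogisticRegression`, the learning rate of 0.1, and the toy transaction features are illustrative assumptions, not part of any particular fraud detection system.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression updated one example at a time (illustrative sketch)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        # Probability of the positive class for a single feature vector.
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y):
        # One SGD step on the log loss; the gradient with respect to z is (p - y).
        error = self.predict_proba(x) - y
        for i, xi in enumerate(x):
            self.weights[i] -= self.learning_rate * error * xi
        self.bias -= self.learning_rate * error


# Hypothetical stream of (features, label) pairs: [amount_scaled, is_foreign].
stream = [([0.1, 0.0], 0), ([0.9, 1.0], 1), ([0.2, 0.0], 0), ([0.8, 1.0], 1)] * 50

model = OnlineLogisticRegression(n_features=2)
for features, label in stream:
    model.learn_one(features, label)  # the example can be discarded afterwards
```

Each call to `learn_one` touches only the current example, so the cost of an update does not grow with the amount of data already seen.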

2. Real-Time Model Updates

Online learning models are updated in real-time, adapting to the latest data without the need for batch processing. This real-time update capability is critical in environments where data changes rapidly, allowing the model to incorporate new information immediately and refine its predictions accordingly.

- Example: In a recommendation system for a streaming service, an online learning model adjusts recommendations based on each user’s most recent interactions, such as watching a particular movie or liking a song. This real-time adaptation allows the model to reflect users’ evolving preferences.

3. Memory Efficiency

One of the defining characteristics of online learning is its memory efficiency. Since the model does not require access to the entire historical dataset, it can function with limited memory, retaining only the information necessary to update parameters based on the latest data points. This memory efficiency is valuable for applications with limited storage capacity, such as embedded systems and IoT devices.

- Example: An online learning model in an IoT-based smart thermostat can adjust heating and cooling preferences based on recent patterns without storing extensive historical temperature and usage data.
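One way to keep a summary of past data without storing it is a running statistic. The sketch below uses Welford's algorithm to maintain a mean and variance in constant memory; the `RunningStats` name and the thermostat readings are hypothetical and chosen only for illustration.

```python
class RunningStats:
    """Running mean and variance in O(1) memory (Welford's algorithm)."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # sum of squared deviations from the current mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def variance(self):
        # Sample variance; defined as 0.0 until two values have been seen.
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0


# Hypothetical thermostat readings in degrees Celsius.
stats = RunningStats()
for reading in [20.0, 21.0, 19.0, 22.0, 18.0]:
    stats.update(reading)
```

Only three numbers are stored regardless of how many readings arrive, which is the property that makes this style of update attractive on constrained devices.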

4. Handling Concept Drift

Concept drift occurs when the statistical properties of the data change over time, causing a previously trained model to lose accuracy. Online learning is particularly well-suited to handle concept drift, as it continuously updates with new data, allowing the model to adjust to changes in the data distribution.

- Example: In stock market prediction, where market dynamics can shift due to economic events, an online learning model adapts its predictions in response to each new data point. This responsiveness helps the model remain accurate in volatile environments.
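To illustrate why continuous updating helps under drift, the sketch below compares two estimators on a synthetic signal whose level shifts halfway through. The function names, the smoothing factor `alpha=0.2`, and the signal itself are assumptions made for this example.

```python
def cumulative_mean(values):
    """Average that weights every past observation equally."""
    mean, history = 0.0, []
    for n, value in enumerate(values, start=1):
        mean += (value - mean) / n
        history.append(mean)
    return history


def exponential_mean(values, alpha=0.2):
    """Average that discounts old observations by a factor (1 - alpha) per step."""
    mean, history = values[0], []
    for value in values:
        mean += alpha * (value - mean)
        history.append(mean)
    return history


# Synthetic signal whose level shifts from 10 to 20 halfway through (drift).
signal = [10.0] * 50 + [20.0] * 50

static_estimate = cumulative_mean(signal)[-1]     # stuck between the two regimes
adaptive_estimate = exponential_mean(signal)[-1]  # close to the new level
```

The estimator that gradually forgets old observations ends up near the new level, while the one that treats all history equally settles between the old and new regimes.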

Online learning’s incremental processing, real-time adaptability, memory efficiency, and capacity to handle concept drift make it a preferred approach for dynamic environments where traditional batch learning would be less effective.

Advantages of Online Learning

Online learning offers several key advantages, making it a strong choice for applications where data changes frequently and continuous adaptation is necessary.

1. Immediate Adaptability to New Data

Since online learning models update as each new data point arrives, they adapt instantly to changing data trends. This adaptability makes online learning ideal for situations where new information must be acted on promptly.

- Example: In social media monitoring, an online learning model can detect emerging trends or hashtags as they appear, enabling platforms to respond to user interests in real-time, whether through content recommendations or moderation.

2. Computational Efficiency

Unlike batch learning, which requires retraining on the entire dataset, online learning focuses on new data only, making it more computationally efficient. This efficiency reduces training time and computational load, which is beneficial for applications requiring immediate processing and prediction.

- Example: In natural language processing for live chat support, an online learning model updates with each user query, adapting responses based on the latest conversational patterns without high computational overhead.

3. Scalability for Large and Streaming Datasets

Online learning can scale effectively with large datasets, as it doesn’t require access to all data at once. By focusing only on incremental updates, online learning models handle streaming data efficiently, making them suitable for high-frequency data environments.

- Example: In autonomous vehicles, online learning processes sensor data in real-time, enabling the vehicle to make navigation adjustments based on new information, such as obstacles, road conditions, or traffic patterns.

4. Ability to Address Concept Drift

Online learning’s continuous updating helps it tackle concept drift effectively, allowing the model to stay relevant in changing environments. This ability to handle drift is crucial in applications where data distributions are likely to evolve, such as in consumer behavior or environmental monitoring.

- Example: In weather prediction, an online learning model adapts to changing seasonal patterns and new weather events as they occur, ensuring that predictions reflect the latest data and maintain accuracy.

Its adaptability, efficiency, scalability, and resilience to concept drift make online learning a valuable tool for applications where data is volatile and rapid responses are required.

Limitations of Online Learning

Despite its advantages, online learning also has limitations that make it less suitable for certain applications. Here are some primary challenges:

1. Sensitivity to Noisy Data

Online learning models are highly sensitive to noisy data, as they update continuously with each new data point. Without careful data preprocessing, noisy data points can pull the model’s parameters away from the underlying pattern, reducing its predictive accuracy.

- Example: In stock price prediction, if the model encounters sudden price spikes due to anomalies rather than real trends, it may adjust its predictions based on these outliers, affecting accuracy.

2. Potential for Model Instability

Since online learning models adjust with each new data point, they may become unstable if the incoming data contains high variability or lacks consistency. This can lead to fluctuations in model predictions, particularly if the data stream includes outliers or irregular patterns.

- Example: In fraud detection, if a sudden surge in false positives occurs due to atypical transaction patterns, the model may adapt incorrectly, leading to an increased rate of false alerts.

3. Limited Retention of Historical Data

In online learning, models don’t typically retain historical data, as they focus on current trends. While this approach enhances memory efficiency, it limits the model’s ability to revisit past data for context, which can be problematic in applications requiring long-term trends.

- Example: In customer retention analysis, an online learning model may overlook valuable long-term behavior patterns, such as cyclical purchase trends, because it focuses only on recent transactions.

4. Complexity in Model Tuning and Optimization

Online learning models often require more careful parameter tuning than batch learning models, as they must balance responsiveness to new data with stability over time. Hyperparameter tuning, such as learning rate adjustment, becomes critical to prevent the model from adapting too aggressively or too slowly to new information.

- Example: In an online advertisement recommendation system, tuning the learning rate determines how quickly the model updates based on recent user behavior. Setting it too high could cause erratic recommendations, while setting it too low might make the model too slow to adapt to changing interests.

Despite these limitations, online learning remains invaluable for applications that require real-time processing and responsiveness. By filtering noise, stabilizing updates, and tuning parameters carefully, practitioners can mitigate these challenges effectively.

Comparison with Batch Learning

Online learning is often contrasted with batch learning, where the model is trained on a fixed dataset all at once and redeployed periodically. Here’s a quick comparison:

- Online Learning: Updates incrementally as new data arrives, adapts to changing patterns in real-time, and is ideal for dynamic, high-frequency data.

- Batch Learning: Processes a fixed dataset, provides stable models optimized for specific tasks, and requires retraining periodically to stay current.

For instance, in fraud detection, online learning would enable the model to adapt to new fraudulent behaviors instantly, while batch learning would provide a more stable, thoroughly optimized model that is updated periodically. The choice between online and batch learning largely depends on the application’s need for real-time adaptation versus stability and thoroughness.

Use Cases for Online Learning Across Industries

Online learning is especially valuable for industries that require immediate responses to changing data patterns or frequent data updates. Here are some specific use cases where online learning models excel:

1. Financial Services and Trading

In the financial sector, online learning models are applied to analyze and predict stock prices, monitor market trends, and detect fraudulent activities. Given the fast pace and volatile nature of financial markets, online learning provides the adaptability required to handle rapidly changing data.

- Example: Stock Price Prediction – Online learning models analyze incoming price and trading volume data in real time, adjusting their predictions based on the latest market behavior. This enables traders to make informed, timely investment decisions.

- Example: Fraud Detection – Online learning models process credit card transactions as they occur, adapting to new fraud patterns as they emerge. By learning from each transaction, these models quickly detect anomalies and protect against financial losses.

2. E-commerce and Retail

Online learning is used extensively in e-commerce and retail to offer personalized recommendations, optimize inventory, and monitor customer behavior. These models benefit from real-time data updates, ensuring that recommendations and predictions are always relevant to current consumer preferences.

- Example: Product Recommendation – An online learning model continuously updates based on each customer’s browsing and purchasing behavior, providing personalized product suggestions that adapt to the customer’s evolving interests.

- Example: Dynamic Pricing – In response to real-time demand data, online learning models help e-commerce platforms adjust prices dynamically, optimizing revenue by responding instantly to trends, competitor pricing, and seasonal variations.

3. Cybersecurity

In cybersecurity, online learning is crucial for monitoring network activity, detecting intrusions, and identifying potential security threats. As cyber threats evolve, online learning models update continuously to detect new attack patterns, providing proactive defense.

- Example: Intrusion Detection – Online learning models analyze network traffic in real time, flagging unusual patterns that may indicate malicious activity. This adaptability allows the system to detect previously unseen threats and minimize response time.

- Example: Malware Detection – By analyzing new file behaviors and patterns, an online learning model adapts to detect emerging malware strains, even if they differ from previously known variants.

4. Healthcare and Medical Diagnosis

In healthcare, online learning supports applications such as disease outbreak detection, patient monitoring, and diagnostics. Models that can adjust based on incoming patient data or epidemiological information are invaluable for timely interventions and personalized care.

- Example: Patient Monitoring – Online learning models track real-time data from wearable devices, such as heart rate, blood pressure, or glucose levels, adapting their predictions to alert healthcare providers of potential health risks.

- Example: Disease Outbreak Detection – Online learning analyzes patterns in patient symptoms or health reports, allowing health organizations to detect outbreaks early by adjusting to new data as it emerges.

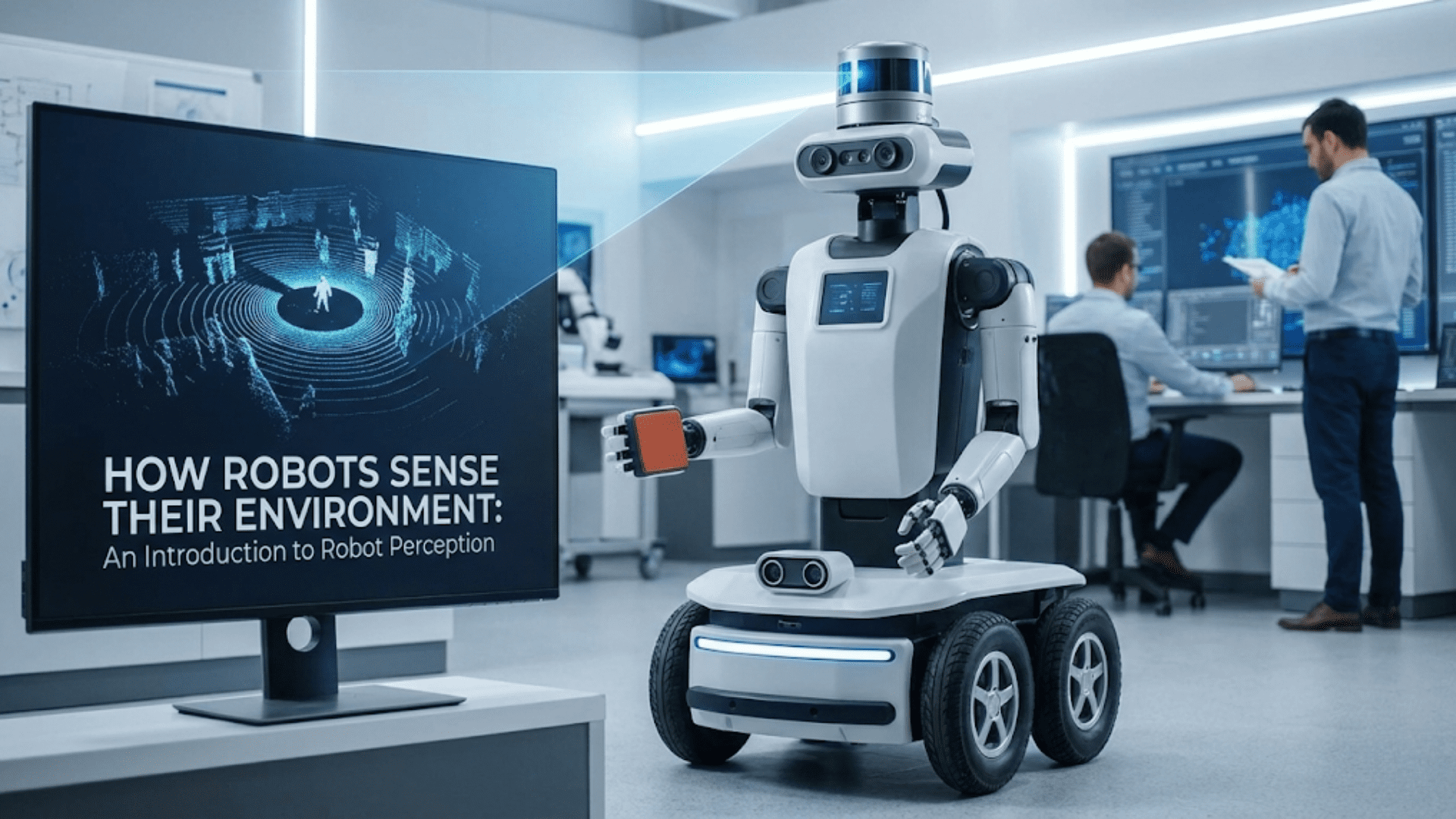

5. Autonomous Vehicles and Robotics

Autonomous vehicles and robots rely on online learning models to process sensor data in real time, enabling them to respond to dynamic environments, such as changes in traffic, road conditions, or obstacles.

- Example: Autonomous Navigation – An online learning model continuously updates as the vehicle receives new sensor inputs, adapting to unexpected obstacles or changes in road conditions to ensure safe driving.

- Example: Object Recognition – For tasks like obstacle detection or pedestrian recognition, online learning models refine their predictions with each new visual input, ensuring real-time accuracy.

These applications highlight online learning’s adaptability and efficiency, especially for industries where data changes frequently and real-time responsiveness is critical.

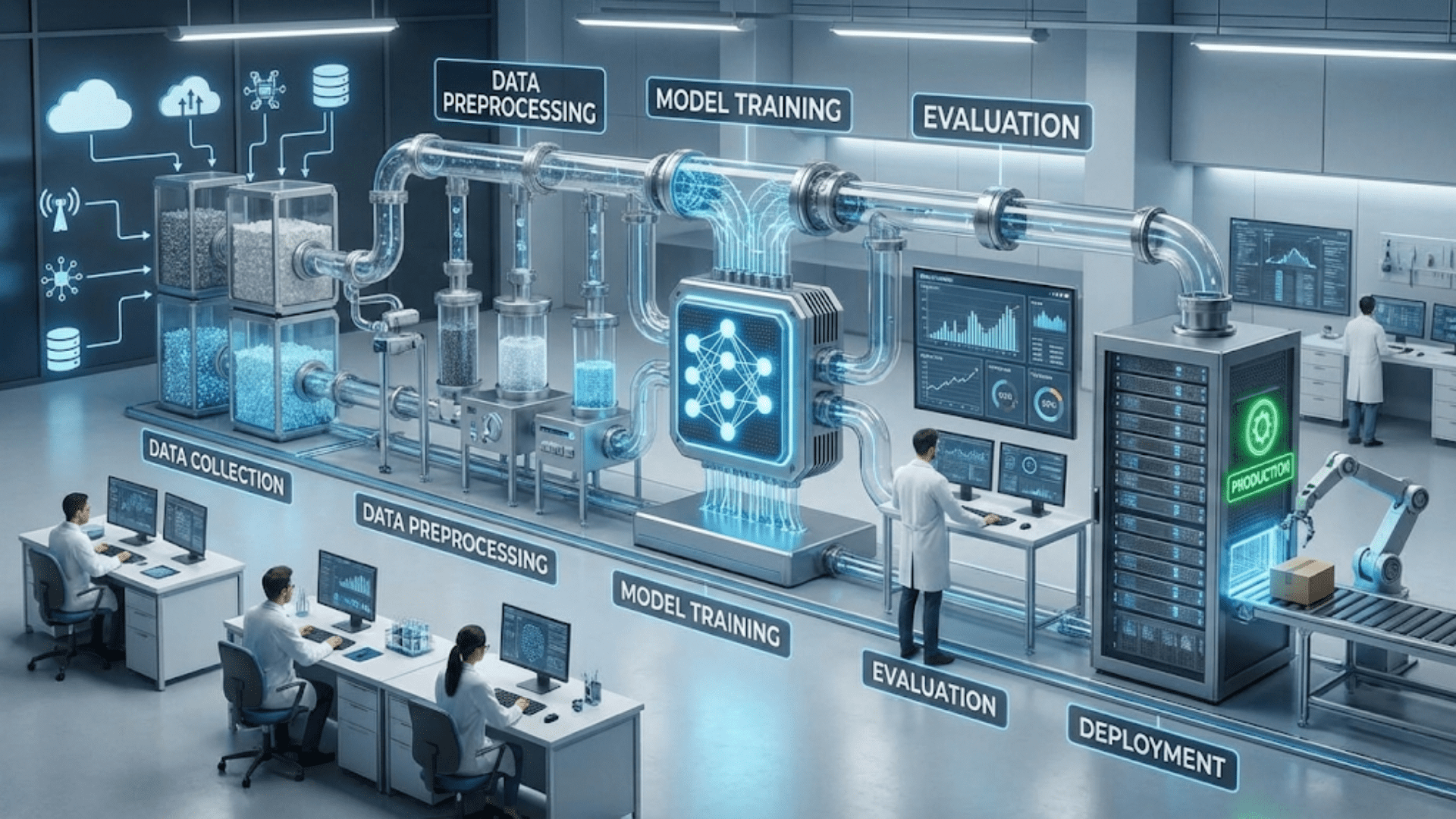

Implementing an Online Learning Model: Step-by-Step Guide

Building an online learning model involves a series of steps, from selecting the appropriate data streaming method to model selection, deployment, and performance monitoring. Here’s a guide to help you implement an effective online learning model.

Step 1: Data Stream Setup and Preprocessing

Since online learning requires a continuous data stream, the first step is to configure a system that delivers real-time data to the model. Data preprocessing should also be performed in real time to handle noise, missing values, or outliers before they are fed into the model.

- Data Stream Setup: Configure data sources to deliver new data points or batches continuously. Systems like Kafka or Amazon Kinesis are commonly used to manage data streaming for large-scale applications.

- Real-Time Preprocessing: Implement preprocessing steps to normalize, scale, or filter data as it arrives, ensuring the model receives clean and relevant inputs.

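```python
# Example of streaming data preprocessing in Python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Instantiate the scaler for real-time use
scaler = StandardScaler()

# Function to scale an incoming data point
def preprocess_data(data_point):
    # scikit-learn expects a 2-D array, so reshape the point into a single row
    row = np.asarray(data_point, dtype=float).reshape(1, -1)
    # Update the running mean and variance, then scale with the updated statistics
    scaler.partial_fit(row)
    return scaler.transform(row)
```

Step 2: Model Selection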

Select an online learning algorithm suitable for continuous updating, such as stochastic gradient descent (SGD) for linear models, or incremental versions of decision trees, like Hoeffding Trees. These algorithms are designed to handle incremental data and update their parameters on the fly.

- Example: For binary classification tasks in fraud detection, a linear classifier trained with stochastic gradient descent is a good choice because each update touches only the current example, which suits streaming data.
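Hoeffding Trees take their name from the Hoeffding bound, which they use to decide when enough examples have been seen to commit to a split. The sketch below computes that bound and applies the usual split rule; the function names, the gain values, and the choice of `confidence_delta=1e-6` are illustrative assumptions rather than the API of any library.

```python
import math


def hoeffding_bound(value_range, confidence_delta, n_samples):
    """Epsilon such that the true mean lies within epsilon of the observed
    mean with probability at least 1 - confidence_delta."""
    return math.sqrt(
        (value_range ** 2) * math.log(1.0 / confidence_delta) / (2.0 * n_samples)
    )


def should_split(best_gain, second_best_gain, value_range, confidence_delta, n_samples):
    """Split once the observed gap between the two best candidates exceeds epsilon."""
    epsilon = hoeffding_bound(value_range, confidence_delta, n_samples)
    return (best_gain - second_best_gain) > epsilon


# Hypothetical information gains for the two best split candidates at a leaf.
few_samples = should_split(0.30, 0.25, value_range=1.0, confidence_delta=1e-6, n_samples=100)
many_samples = should_split(0.30, 0.25, value_range=1.0, confidence_delta=1e-6, n_samples=5000)
```

With the same observed gap between candidates, the decision changes only because more examples shrink the bound, which is how such a tree can grow from a stream without revisiting old data.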

Step 3: Training the Model Incrementally

Begin by training the model on an initial dataset to establish a baseline, then transition to incremental updates. Each new data point or batch should refine the model’s parameters without requiring full retraining.

- Incremental Learning: Use algorithms that support incremental learning, where model parameters are updated with each new data point, allowing the model to adapt dynamically.

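```python
from sklearn.linear_model import SGDClassifier

# Initialize a linear classifier trained with SGD
model = SGDClassifier()

# Incrementally fit the model on streaming data.
# stream_data is assumed to be an iterable of (feature_vector, label) pairs.
for data_point, label in stream_data:
    # classes must list every possible label on the first call to partial_fit
    model.partial_fit([data_point], [label], classes=[0, 1])
```

Step 4: Real-Time Model Evaluation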

Real-time evaluation ensures that the model performs consistently as new data arrives. Metrics such as accuracy, precision, recall, and F1-score should be monitored continuously to track the model’s performance. Setting up alerts for drops in accuracy or precision helps detect issues early.

- Example: In a spam detection model, monitor the false positive and false negative rates as the model updates to ensure it accurately classifies emails without excessive false alerts.
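A sliding window is one simple way to keep these metrics current. The sketch below tracks precision, recall, and F1 over the most recent predictions; the `WindowedMetrics` class, the window size, and the sample predictions are hypothetical. For an honest estimate, each prediction should be recorded before the model learns from that example (often called test-then-train evaluation).

```python
from collections import deque


class WindowedMetrics:
    """Precision, recall and F1 over the most recent `window_size` predictions."""

    def __init__(self, window_size=1000):
        self.window = deque(maxlen=window_size)  # old pairs fall off automatically

    def update(self, predicted, actual):
        self.window.append((predicted, actual))

    def report(self):
        tp = sum(1 for p, a in self.window if p == 1 and a == 1)
        fp = sum(1 for p, a in self.window if p == 1 and a == 0)
        fn = sum(1 for p, a in self.window if p == 0 and a == 1)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical (predicted, actual) pairs from a spam filter; 1 = spam.
metrics = WindowedMetrics(window_size=5)
for predicted, actual in [(1, 1), (1, 0), (0, 1), (1, 1), (0, 0), (1, 1)]:
    metrics.update(predicted, actual)
summary = metrics.report()
```

Because the window is bounded, the report reflects recent behavior rather than a lifetime average that could hide a recent drop.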

Step 5: Deployment and Continuous Monitoring

Deploy the model in an environment that supports continuous updates and monitoring. Implement logging and alerting systems to notify data scientists if performance degrades due to data drift or unexpected changes in the data.

- Continuous Monitoring: Use monitoring tools like Prometheus (metrics collection and alerting) together with Grafana (dashboards) to visualize key metrics and detect performance anomalies over time.

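```python
import numpy as np

# Example: Monitoring accuracy in real time
def monitor_accuracy(prediction, actual):
    # Convert to arrays so the element-wise comparison also works for plain lists
    prediction = np.asarray(prediction)
    actual = np.asarray(actual)
    correct_predictions = (prediction == actual).sum()
    accuracy = correct_predictions / len(actual)
    print("Current Accuracy:", accuracy)
```

Step 6: Tuning and Maintenance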

Online learning models require ongoing tuning to maintain stability and prevent overfitting to recent data. Adjust hyperparameters like the learning rate periodically and retrain the model if needed to recalibrate it with a representative sample of historical data.

- Learning Rate Adjustment: Tuning the learning rate can prevent the model from reacting too quickly to outliers or noisy data. Regular adjustments help balance responsiveness and stability.

By following these steps, you can implement an online learning model that adapts to new data, remains efficient, and provides real-time insights for applications requiring continuous updates.

Key Considerations for Effective Online Learning

To ensure online learning models perform reliably over time, several key considerations must be addressed, from handling noisy data to managing resource usage and preventing overfitting.

1. Noise and Outlier Management

Online learning models are sensitive to noisy data, which can lead to unstable predictions. Applying techniques to filter noise or limit the influence of outliers is essential to maintaining model stability.

- Best Practice: Use real-time anomaly detection to filter out unusual data points that may distort the model, or apply a moving average to smooth input data over time.
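A rolling z-score is one simple form of real-time anomaly filtering. The sketch below flags values that sit far from the mean of a recent window; the class name, the threshold of 3 standard deviations, and the sample prices are assumptions chosen for illustration.

```python
from collections import deque
import statistics


class RollingOutlierFilter:
    """Flags values far from the mean of a recent window (z-score rule)."""

    def __init__(self, window_size=50, threshold=3.0, min_history=10):
        self.window = deque(maxlen=window_size)
        self.threshold = threshold
        self.min_history = min_history

    def is_outlier(self, value):
        outlier = False
        if len(self.window) >= self.min_history:
            mean = statistics.fmean(self.window)
            stdev = statistics.pstdev(self.window)
            outlier = stdev > 0 and abs(value - mean) / stdev > self.threshold
        if not outlier:
            # Only accepted values update the window, so spikes do not
            # inflate the statistics used to judge later points.
            self.window.append(value)
        return outlier


# Hypothetical price stream with one anomalous spike (500.0).
prices = [100.0, 101.0, 99.0, 100.5, 99.5, 100.2, 99.8, 100.1, 99.9, 100.3, 500.0, 100.4]
outlier_filter = RollingOutlierFilter(min_history=10)
clean = [p for p in prices if not outlier_filter.is_outlier(p)]
```

One trade-off of this design is that a genuine, lasting shift in level would keep being rejected, so a filter like this is usually paired with drift monitoring rather than used alone.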

2. Balancing Learning Rate and Model Stability

The learning rate, which determines how quickly the model adapts to new data, is a critical parameter in online learning. A high learning rate may cause the model to overfit recent data, while a low learning rate could make it slow to adapt to trends.

- Best Practice: Tune the learning rate based on data volatility. For applications with frequent changes, a higher learning rate is suitable, while stable environments may benefit from a lower learning rate to reduce overfitting.

3. Memory Management

Since online learning processes data incrementally, it requires efficient memory management, especially when handling large datasets or high-frequency data streams. Implementing memory-efficient algorithms and data structures is crucial.

- Best Practice: Use memory-efficient algorithms like SGD, which process data points independently, or store only the latest data points instead of the entire dataset, optimizing memory usage.

4. Handling Concept Drift

Concept drift, or shifts in the underlying data distribution, can degrade model performance over time. Monitoring for concept drift and updating the model accordingly helps maintain accuracy.

- Best Practice: Use drift detection methods, such as tracking performance metrics over time, and set up alerts for retraining if performance drops below a threshold.

5. Model Interpretability

In applications requiring transparency, such as finance or healthcare, it’s important to ensure the online learning model’s decisions are interpretable. Tools like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) can clarify how individual features contribute to a prediction; because the model keeps changing, explanations should be regenerated against the current model state.

- Best Practice: Regularly apply interpretability tools to understand feature influences and detect if the model is over-relying on any particular feature.

By addressing these considerations, practitioners can deploy robust and reliable online learning models capable of adapting to dynamic environments while maintaining high levels of accuracy and stability.

Emerging Trends and Future Applications in Online Learning

Online learning has gained traction as more applications require real-time processing and responsiveness. As data generation continues to grow, several trends are shaping the future of online learning and expanding its potential across industries.

1. Integration with Edge Computing

Edge computing brings data processing closer to data sources, reducing latency and improving the speed of online learning applications. This trend is particularly relevant for IoT (Internet of Things) devices, autonomous vehicles, and smart cities, where real-time decision-making is critical.

- Example: In smart manufacturing, online learning models embedded at the edge analyze sensor data in real time to detect equipment anomalies, enabling immediate responses to prevent potential failures.

2. Online Learning with Federated Data

Federated learning allows models to be trained across multiple decentralized devices while keeping data local, maintaining privacy. Combined with online learning, federated data enables real-time model updates across distributed systems without sharing sensitive data, making it ideal for healthcare, finance, and mobile applications.

- Example: A mobile keyboard app with an online federated learning model can update personalized language predictions for each user based on local typing data while preserving privacy.
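The aggregation step at the heart of this idea can be sketched as a weighted average of client parameters, in the style of federated averaging. The function name, the client weights, and the example counts below are hypothetical; a real deployment would add secure communication and client selection, which are out of scope here.

```python
def federated_average(client_updates):
    """Weighted average of client weight vectors (FedAvg-style aggregation).

    client_updates: list of (weights, n_examples) tuples, one per device.
    """
    total_examples = sum(n for _, n in client_updates)
    n_params = len(client_updates[0][0])
    averaged = [0.0] * n_params
    for weights, n_examples in client_updates:
        share = n_examples / total_examples
        for i, w in enumerate(weights):
            averaged[i] += share * w
    return averaged


# Hypothetical updates from three devices; only weights leave the device, not raw data.
updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
    ([5.0, 6.0], 600),
]
global_weights = federated_average(updates)
```

Devices with more local examples contribute proportionally more to the shared model, while the raw typing data never has to be transmitted.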

3. Self-Supervised Online Learning

Self-supervised learning, where models generate labels from data patterns themselves, is being combined with online learning to enable continuous learning from raw, unlabeled data. This hybrid approach reduces the dependency on labeled data, which is costly and time-consuming to obtain.

- Example: In natural language processing, a self-supervised online learning model might refine language predictions by learning from user input patterns, adapting to new expressions and phrases as they appear in real-time conversations.

4. Hybrid Online and Batch Learning Models

Hybrid models that blend online and batch learning provide both stability and adaptability. In this approach, models trained on historical data in batch mode are continuously updated with new data in online mode, allowing them to retain long-term patterns while adapting to new trends.

- Example: In personalized marketing, a hybrid model uses batch learning for seasonal trends and online learning for immediate changes in customer behavior, ensuring recommendations are both consistent and up-to-date.
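A minimal sketch of the hybrid pattern, under simplifying assumptions: a one-variable linear model is first fitted in batch mode with closed-form least squares, then refined with per-example SGD steps as the relationship changes. The function names, learning rate, and synthetic data are illustrative only.

```python
def fit_batch(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept on historical data."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def update_online(slope, intercept, x, y, learning_rate=0.05):
    """One SGD step on squared error, starting from the batch-fitted parameters."""
    error = (slope * x + intercept) - y
    return slope - learning_rate * error * x, intercept - learning_rate * error


# Historical data follows y = 2x; the live stream has shifted to y = 3x.
history_x = [0.0, 0.5, 1.0, 1.5, 2.0]
slope, intercept = fit_batch(history_x, [2.0 * x for x in history_x])

for _ in range(200):
    for x in history_x:
        slope, intercept = update_online(slope, intercept, x, 3.0 * x)
```

The batch fit supplies a sensible starting point, and the online steps move the parameters toward the new relationship without refitting on the full history.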

5. Automated Drift Detection and Model Retraining

As concept drift becomes a challenge for online learning models, automated drift detection and retraining solutions are emerging to ensure continuous model accuracy. By automating drift detection, models can detect shifts in data patterns and initiate retraining, preventing performance degradation.

- Example: In fraud detection, an automated drift detection system monitors online learning models, alerting the team or triggering retraining if a significant change in transaction patterns is detected.

These trends highlight how online learning is evolving to support real-time processing needs across more complex, distributed environments while addressing data privacy and scalability.

Best Practices for Optimizing Online Learning Models

To build effective online learning models that adapt to dynamic data environments, it’s essential to implement best practices that ensure model accuracy, stability, and scalability.

1. Regularize the Model to Prevent Overfitting

Online learning models that update continuously are prone to overfitting recent data points, especially in volatile environments. Regularization techniques help control this by limiting the model’s complexity, allowing it to generalize better.

- Best Practice: Use techniques like L2 regularization or dropout (in neural networks) to prevent the model from becoming overly responsive to new data. This improves stability and reduces the model’s sensitivity to noise.

2. Optimize Hyperparameters Incrementally

Hyperparameters such as the learning rate and regularization strength need to be optimized over time to keep the model’s predictions balanced between current relevance and historical knowledge. Incremental tuning ensures the model adapts effectively without drastic changes.

- Best Practice: Employ adaptive learning rate schedules, like decreasing the learning rate gradually, to prevent the model from becoming overly sensitive to recent data. This fine-tuning maintains stability as the model evolves.
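One common shape for such a schedule is inverse-time decay with a lower bound. The sketch below is an assumption-laden example: the function name and the values of `initial_rate`, `decay`, and `floor` are placeholders to be tuned per application.

```python
def decayed_learning_rate(step, initial_rate=0.5, decay=0.01, floor=0.01):
    """Inverse-time decay with a lower bound so the model never stops adapting."""
    return max(floor, initial_rate / (1.0 + decay * step))


# Learning rates at a few points in the stream.
rates = [decayed_learning_rate(step) for step in (0, 100, 1000, 100000)]
```

The floor matters in an online setting: a rate that decays all the way to zero would eventually freeze the model, which defeats the purpose when the data can still drift.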

3. Use Memory Management Techniques for Efficient Data Handling

Efficient memory management is crucial for online learning, especially when processing large datasets in real time. Memory-efficient algorithms and data structures allow the model to handle streaming data without excessive memory consumption.

- Best Practice: Use streaming libraries like Apache Flink or Spark Streaming to manage incoming data in a memory-efficient way. Alternatively, store only the most recent data points or summaries of past data to maintain a manageable memory footprint.
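When a summary of past data is needed, reservoir sampling keeps a fixed-size uniform sample of everything seen so far. The sketch below is a plain-Python illustration; the `ReservoirSample` name, the capacity of 100, and the integer record IDs are hypothetical.

```python
import random


class ReservoirSample:
    """Uniform random sample of fixed size from a stream of unknown length."""

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self._rng = random.Random(seed)

    def add(self, item):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            # Replace a stored item with probability capacity / seen.
            j = self._rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item


reservoir = ReservoirSample(capacity=100, seed=0)
for record_id in range(10_000):  # stand-in for a long data stream
    reservoir.add(record_id)
```

A sample maintained this way can later serve as the historical portion of a recalibration set, since every past record had the same chance of being retained.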

4. Implement Continuous Monitoring and Logging

Real-time monitoring and logging are essential to ensure online learning models remain effective and stable. By tracking performance metrics over time, you can identify issues early, such as data drift or accuracy drops, and take corrective action before these issues impact the model’s predictions.

- Best Practice: Set up dashboards to track model performance metrics like accuracy, precision, and recall. Use alerts to notify data scientists if performance deviates from acceptable thresholds, allowing for timely intervention.

5. Apply Drift Detection and Model Refresh Techniques

Concept drift can compromise the accuracy of online learning models if not managed properly. Applying drift detection techniques to monitor the stability of data distributions and refreshing the model periodically ensure that it continues to perform well in changing environments.

- Best Practice: Use statistical tests or algorithms like the Page-Hinkley Test to detect significant changes in data patterns. When drift is detected, retrain the model using a representative sample of both new and historical data to recalibrate predictions.
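The following is a minimal sketch of the Page-Hinkley test in its form that watches for an increase in the mean, applied here to a stream of per-example errors. The class layout, `delta=0.005`, `threshold=5.0`, and the synthetic error stream are assumptions for illustration; suitable values depend on the scale and noise of the monitored signal.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=5.0):
        self.delta = delta          # tolerated magnitude of change
        self.threshold = threshold  # alarm level (lambda)
        self.count = 0
        self.mean = 0.0
        self.cumulative = 0.0       # running sum of deviations, m_t
        self.minimum = 0.0          # smallest m_t seen so far, M_t

    def update(self, value):
        """Feed one observation; return True when drift is signalled."""
        self.count += 1
        self.mean += (value - self.mean) / self.count
        self.cumulative += value - self.mean - self.delta
        self.minimum = min(self.minimum, self.cumulative)
        return self.cumulative - self.minimum > self.threshold


# Synthetic per-example error stream: error rate jumps from 0.1 to 0.6 at step 200.
errors = [1.0 if i % 10 == 0 else 0.0 for i in range(200)]
errors += [1.0 if i % 10 < 6 else 0.0 for i in range(200)]

detector = PageHinkley()
drift_at = next((i for i, e in enumerate(errors) if detector.update(e)), None)
```

In practice the returned signal would trigger an alert or a retraining job, and the detector would be reset once the model has been refreshed.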

6. Ensure Explainability for High-Stakes Applications

In applications where transparency is crucial, such as healthcare and finance, model interpretability is essential. Online learning models must be explainable to ensure stakeholders understand the decision-making process, building trust and ensuring compliance with regulatory standards.

- Best Practice: Apply model interpretability tools like SHAP or LIME to explain predictions on a case-by-case basis. Regularly review feature importance scores to ensure the model’s decisions align with domain knowledge and ethical standards.

By following these best practices, practitioners can build robust online learning models that maintain accuracy and relevance in dynamic environments, providing value even in data-intensive applications.

Future Applications of Online Learning

As data sources continue to expand and real-time processing becomes essential across more fields, online learning is poised to support a range of future applications that demand adaptability, responsiveness, and scalability.

1. Smart Cities and IoT Systems

With the proliferation of IoT devices in smart cities, online learning will be central to analyzing real-time data from traffic sensors, public utilities, and environmental monitoring systems. These applications will require models that adjust continuously based on new data to optimize resource use, reduce traffic congestion, and improve urban living conditions.

- Example: An online learning model could process traffic sensor data to predict congestion and reroute vehicles in real time, improving traffic flow in busy city centers.

2. Personalized Healthcare and Wearable Technology

As wearable devices and health apps continue to grow, online learning will enable personalized healthcare solutions that adapt to individual users’ health data in real time. Models trained with online learning can detect changes in vital signs or activity patterns, providing tailored health advice or alerts for potential risks.

- Example: An online learning model monitoring glucose levels in diabetic patients can adjust its predictions based on recent measurements, helping patients manage blood sugar in real time.

3. Financial Market Analysis and Algorithmic Trading

Online learning will become increasingly important in financial markets, where real-time responsiveness is critical for trading and risk management. Models that analyze live market data can provide accurate predictions, enabling algorithmic trading strategies that capitalize on the latest market trends.

- Example: An algorithmic trading model using online learning could adjust its predictions based on sudden shifts in stock prices, helping traders make rapid buy or sell decisions to maximize returns.

4. Autonomous Systems and Real-Time Robotics

In autonomous systems, such as drones, robots, and self-driving vehicles, online learning supports real-time decision-making in response to environmental changes. As these systems encounter new objects, obstacles, or weather conditions, online learning enables continuous learning for safe and efficient operation.

- Example: An online learning model on a delivery drone could adapt its flight path based on weather data, avoiding hazards and ensuring safe delivery routes in real time.

5. Real-Time Sentiment Analysis in Social Media

With the constant influx of social media data, online learning models will play a crucial role in sentiment analysis for brands, political campaigns, and crisis management. By tracking sentiment in real time, these models provide immediate insights into public opinion and enable timely responses to changing trends.

- Example: An online learning model for sentiment analysis could monitor tweets in real time, adjusting sentiment predictions as new content appears, helping brands gauge public reaction to campaigns or announcements.

These applications highlight the growing potential of online learning to drive responsive, real-time solutions across diverse sectors, from urban infrastructure to personalized medicine and financial markets.

Conclusion: The Significance of Online Learning

Online learning has become an essential machine learning approach for applications requiring continuous adaptation, scalability, and real-time responsiveness. Unlike batch learning, where models are trained on fixed datasets, online learning updates with each new data point, making it ideal for dynamic environments where data changes frequently.

From financial trading and autonomous vehicles to smart cities and healthcare, online learning provides the flexibility needed to handle streaming data effectively. As online learning evolves with trends like edge computing, federated data, and self-supervised learning, it will play a central role in supporting applications that require immediate decision-making and responsiveness to shifting data patterns.

By following best practices, such as tuning hyperparameters incrementally, managing concept drift, and ensuring explainability, data scientists can build robust online learning models that maintain accuracy and relevance in dynamic settings. The future of online learning holds exciting possibilities, enabling smarter, faster, and more adaptive systems that meet the demands of a data-driven world.