Introduction

The interviewer leans forward slightly, making eye contact as they ask the question that appears in virtually every data science interview: “Tell me about a data science project you have worked on.” This seemingly simple request can determine whether you advance to the next round or receive a polite rejection email. In this moment, you have the opportunity to showcase not just what you built but how you think, how you solve problems, and why you would be valuable to their team.

Many candidates stumble through project discussions despite having done impressive technical work. They either dive immediately into implementation details that lose the interviewer’s attention, or they stay so high-level that the interviewer questions whether they actually did the work themselves. They fail to connect their project to business value, making it seem like an academic exercise rather than a demonstration of practical skills. They neglect to highlight the interesting challenges they overcame, missing opportunities to showcase problem-solving abilities.

The question about your project is not actually about the project itself. Interviewers have already seen your portfolio or resume before inviting you to interview, so they know what projects you worked on. What they want to understand through this conversation is how you approach problems, how you make decisions when faced with alternatives, how you handle challenges and setbacks, how deeply you understand the techniques you used, and how effectively you can communicate complex technical work to different audiences.

This means that preparing to discuss your projects requires more than just remembering what you did. You need to develop compelling narratives that highlight your thought process and decision-making. You need to practice explaining technical concepts at appropriate levels of detail for different audiences. You need to anticipate questions interviewers might ask and prepare thoughtful answers. Most importantly, you need to frame your work in terms of problems solved and value created rather than just techniques applied.

In this comprehensive guide, you will learn how to transform your project work into compelling interview responses. We will explore frameworks for structuring your project discussions, techniques for adjusting technical depth to your audience, strategies for highlighting your problem-solving process, and methods for handling challenging questions about your work. By the end, you will have a systematic approach to discussing any project in a way that demonstrates your capabilities and makes interviewers eager to work with you.

Understanding What Interviewers Really Want to Know

Before crafting your project narrative, you need to understand the underlying questions interviewers are trying to answer through this seemingly open-ended request. Recognizing their true objectives helps you shape your response to address their concerns directly.

When interviewers ask about your project, they are assessing your technical depth and breadth. They want to know whether you genuinely understand the methods you used or just followed tutorials blindly. They probe to find the limits of your knowledge and determine at what level you can contribute to their team. A candidate who can explain not just what they did but why they made specific choices demonstrates deeper understanding than someone who simply recounts steps they followed.

Interviewers also evaluate your problem-solving approach through project discussions. They want to see evidence that you can break complex problems into manageable pieces, that you consider multiple approaches before selecting one, that you iterate and refine your solutions based on results, and that you learn from mistakes rather than giving up when initial attempts fail. Your project narrative should reveal these problem-solving qualities through concrete examples rather than abstract claims.

Communication skills emerge as a central evaluation criterion during project discussions. Data scientists must regularly explain technical work to non-technical stakeholders, write clear documentation, and collaborate with colleagues across different specializations. Your ability to explain your project clearly, adjust technical detail appropriately for the audience, and respond thoughtfully to questions demonstrates the communication capabilities that distinguish effective data scientists from purely technical ones.

Through project discussions, interviewers assess whether you think about business impact or just technical implementation. They want to know whether you connected your work to real decisions or outcomes, whether you considered practical constraints like time and resources, whether you validated that your solution actually provided value, and whether you can articulate why the problem mattered in the first place. Candidates who frame projects in business terms rather than purely technical achievements signal mature thinking about data science’s purpose.

Intellectual honesty and self-awareness show in how you discuss project limitations and mistakes. Interviewers appreciate candidates who acknowledge what did not work, who discuss what they would do differently with more time or different circumstances, who recognize the boundaries of their solutions, and who can critique their own work constructively. This honesty demonstrates a confidence and maturity that overconfident candidates, who present everything as perfect, tend to lack.

Your passion and genuine interest come through during project discussions. When you worked on a project you truly cared about, your enthusiasm shows in how you discuss it. You remember details vividly, you engage energetically with questions, and you convey excitement about what you learned or discovered. This genuine interest signals that you will bring similar engagement to work projects rather than just going through the motions for a paycheck.

Interviewers also use project discussions to assess cultural fit and working style. How you describe collaboration on team projects reveals your approach to working with others. How you discuss handling disagreements or challenges shows your response to difficulty. How you talk about learning new skills indicates your growth mindset. These behavioral signals help interviewers envision whether you would thrive in their specific team environment.

Understanding these underlying objectives transforms how you prepare to discuss projects. Rather than simply rehearsing what you did, you craft narratives that demonstrate problem-solving ability, technical depth, business thinking, and communication skills. You prepare examples that show how you handled challenges, made decisions, and iterated based on results. You practice explaining your work at different levels of technical detail. This strategic preparation ensures your project discussion addresses what interviewers actually care about rather than just recounting technical steps.

Structuring Your Project Narrative

An effective project discussion follows a clear structure that makes it easy for interviewers to understand your work while highlighting your capabilities. Without structure, project descriptions become rambling chronologies that lose the interviewer’s attention. With thoughtful structure, you guide the conversation strategically to showcase your strengths.

Begin your project narrative with a concise problem statement that establishes context and motivation. In two or three sentences, explain what problem you were solving, why it mattered, and what the stakes were. This opening immediately orients the interviewer and demonstrates that you think about data science as a tool for solving meaningful problems rather than just an intellectual exercise.

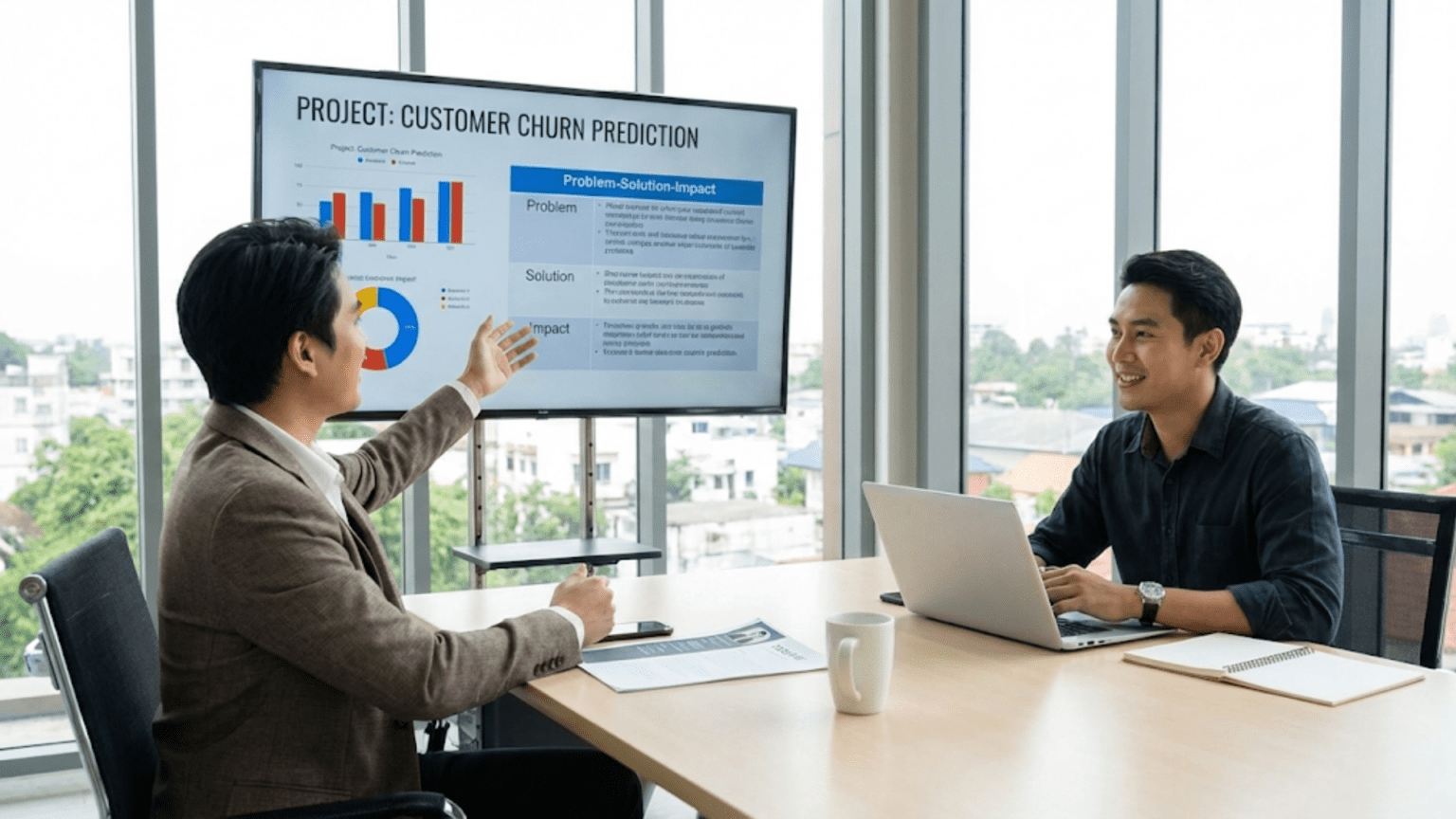

For example, rather than opening with “I built a machine learning model to predict customer churn,” start with context: “E-commerce companies lose significant revenue when customers stop purchasing, but most organizations do not realize customers are at risk until they have already churned. I wanted to build an early warning system that could identify customers likely to churn in the next sixty days, giving the retention team time to intervene with targeted offers before losing them.”

This contextualized opening accomplishes several things. It shows you understand the business problem behind the technical work. It explains why the problem matters in terms of real impact. It frames your technical solution as addressing a practical need. It sets up the rest of your narrative with clear objectives against which to evaluate success.

After establishing the problem, provide a high-level overview of your approach before diving into details. Summarize your solution in one or two sentences so the interviewer knows where the story is going. You might say “I developed a gradient boosting model using customer behavioral data, transaction history, and engagement metrics that achieved eighty-five percent recall in identifying churning customers, allowing the retention team to focus their efforts efficiently.”

This overview gives the interviewer a mental framework for the details that follow. They now know you built a predictive model using specific data sources and achieved measurable results. The subsequent technical discussion fits into this framework rather than leaving the interviewer wondering where your description is headed.

Next, describe your data and how you obtained it. Explain what data sources you worked with, what key features or variables were available, what the data volume and quality looked like, and what initial challenges or limitations existed. This section demonstrates your understanding that data science starts with data, and that data characteristics fundamentally shape what approaches are feasible.

When discussing data, highlight interesting aspects that influenced your approach. Perhaps the data had severe class imbalance that required special handling. Maybe you had to merge multiple data sources with inconsistent formats. Perhaps critical features had substantial missing values that needed imputation. These data challenges showcase problem-solving as you explain how you addressed them.

Walk through your methodology by describing the key steps in your analytical process. Cover data preprocessing and cleaning decisions, feature engineering and what motivated specific features, exploratory analysis and what patterns you discovered, model selection and why you chose your approach, evaluation strategy and metrics, and iteration and refinement based on initial results. This walkthrough demonstrates systematic thinking and technical competence.

During the methodology discussion, emphasize decision points where you chose between alternatives. Explain why you selected gradient boosting over random forest or why you chose particular features over others. These explanations reveal your reasoning process and show you make intentional choices rather than random technical decisions. You might say “I experimented with both logistic regression and gradient boosting. Logistic regression provided good interpretability but missed nonlinear patterns in the data. Gradient boosting achieved better performance while still offering feature importance scores, so I selected it as the primary model.”

Present your results by quantifying what you achieved using appropriate metrics. Connect these metrics to business impact whenever possible. Instead of just stating “the model achieved eighty-five percent recall,” explain “the model correctly identified eighty-five percent of customers who would churn, meaning the retention team could reach most at-risk customers before losing them. At an average customer value of five hundred dollars, catching even half of these customers through targeted retention campaigns could save tens of thousands of dollars monthly.”
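If an interviewer asks where a figure like "tens of thousands" comes from, it helps to have the arithmetic ready. The Python sketch below is a back-of-the-envelope calculation: the eighty-five percent recall, the five-hundred-dollar customer value, and the "half" save rate come from the example above, while the count of two hundred churners per month is a hypothetical assumption you would replace with your own numbers.

```python
# Back-of-the-envelope impact estimate.
# From the example: 85% recall, $500 average customer value, half of flagged churners saved.
# Hypothetical assumption: 200 customers would churn in a typical month.
MONTHLY_CHURNERS = 200
RECALL = 0.85
SAVE_RATE = 0.5
AVG_CUSTOMER_VALUE = 500  # dollars


def estimated_monthly_savings(churners, recall, save_rate, value):
    """Revenue kept per month if the retention team saves `save_rate` of flagged churners."""
    flagged_churners = churners * recall
    saved_customers = flagged_churners * save_rate
    return saved_customers * value


savings = estimated_monthly_savings(MONTHLY_CHURNERS, RECALL, SAVE_RATE, AVG_CUSTOMER_VALUE)
print(f"Estimated monthly savings: ${savings:,.0f}")  # Estimated monthly savings: $42,500
```

Under these assumptions about 170 churners are flagged and 85 are saved. Stating the assumptions out loud matters more than the exact total.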

Discuss what insights emerged from your analysis beyond just model performance. What did you learn about customer behavior? What factors most influenced churn? Were there surprising patterns in the data? These insights demonstrate that you extract value from analysis beyond just building models, showing analytical thinking that creates actionable knowledge.

Conclude your initial narrative with reflections on limitations and what you would do differently. Acknowledge aspects of the project that could be improved, data you wish you had access to, or approaches you would try with more time. You might say “The model performs well overall but struggles with newly acquired customers who have limited history. With more time, I would explore incorporating external data about customer demographics to improve predictions for this segment.”

This self-critical reflection demonstrates maturity and shows you can evaluate your own work honestly. It also provides natural transition points for the interviewer to ask follow-up questions about topics that interest them.

The entire structured narrative should take three to five minutes to deliver, covering problem, approach, and results at a level that establishes context without overwhelming with details. This initial overview sets the stage for the deeper technical discussion that typically follows.

Adjusting Technical Depth for Your Audience

One of the most challenging aspects of discussing projects in interviews is calibrating technical depth appropriately for different audiences. Too much detail overwhelms non-technical interviewers and wastes time. Too little detail makes technical interviewers question whether you genuinely understand your work. Developing the ability to read your audience and adjust your explanation accordingly demonstrates sophisticated communication skills.

When interviewed by non-technical hiring managers or recruiters, focus on business context, problem-solving approach, and impact rather than technical implementation details. These interviewers care about whether you can solve real problems, communicate clearly, and deliver value. They do not need to understand gradient boosting mathematics or neural network architectures.

For non-technical audiences, use analogies and plain language to explain technical concepts. Instead of discussing “feature engineering to capture interaction effects between variables,” you might say “I created new variables that capture how different customer behaviors combine, like how someone who views many products but adds few to cart represents a different pattern than someone who adds many items but rarely completes purchases.” This explanation conveys the concept without requiring technical background.
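If a technical interviewer later asks what those variables actually were, a short code sketch helps. The one below is a minimal Python example built on hypothetical per-customer counts of product views, add-to-cart events, and purchases. The two ratios separate the browser who rarely adds to cart from the shopper who fills a cart but rarely checks out.

```python
from dataclasses import dataclass


@dataclass
class ActivityCounts:
    """Hypothetical per-customer activity totals over some observation window."""
    product_views: int
    add_to_cart: int
    purchases: int


def behavior_features(a: ActivityCounts) -> dict:
    """Ratios that describe how behaviors combine, not just how often each occurs."""
    # Guard against division by zero for customers with no views or no cart adds.
    cart_rate = a.add_to_cart / a.product_views if a.product_views else 0.0
    checkout_rate = a.purchases / a.add_to_cart if a.add_to_cart else 0.0
    return {"cart_rate": cart_rate, "checkout_rate": checkout_rate}


browser = ActivityCounts(product_views=120, add_to_cart=3, purchases=1)
cart_abandoner = ActivityCounts(product_views=30, add_to_cart=25, purchases=2)
print(behavior_features(browser))         # low cart_rate
print(behavior_features(cart_abandoner))  # high cart_rate, low checkout_rate
```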

Emphasize outcomes and decisions over process when speaking with non-technical interviewers. They want to know what you discovered and how it could inform action. You might explain “The analysis revealed that customers who do not purchase within their first two weeks rarely convert later, suggesting we should focus retention efforts on the critical early window rather than spreading resources evenly over time.”

When interviewed by data scientists or technical leads, you can and should dive deeper into technical details. These interviewers want to understand your methodology, evaluate your technical choices, and assess your depth of knowledge. They appreciate discussions of specific algorithms, evaluation metrics, and implementation details.

For technical audiences, explain your reasoning behind technical decisions. Discuss why you chose particular algorithms, how you handled specific challenges, what hyperparameter tuning approaches you used, and how you validated that your solution generalized beyond training data. A technical interviewer might ask why you used XGBoost rather than a neural network, and a thoughtful answer discussing the tabular nature of your data, the need for interpretability, and XGBoost’s strong performance on structured data demonstrates deep understanding.

Be prepared to go even deeper when technical interviewers probe specific aspects. If they ask about how you handled class imbalance, be ready to discuss whether you tried oversampling, undersampling, or class weights, what metrics you optimized for given the imbalance, and how you validated that your approach worked. This detailed technical discussion shows mastery rather than surface-level knowledge.
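To make the class-weights option concrete, the Python sketch below computes "balanced" weights using the formula n_samples / (n_classes * class_count). This is the same heuristic scikit-learn documents for `class_weight="balanced"`. The labels are hypothetical, with a five percent churn rate.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical labels: 50 churners (1) and 950 non-churners (0).
labels = [1] * 50 + [0] * 950
weights = balanced_class_weights(labels)
print(weights)
```

With these weights, the 50 churners and the 950 non-churners each contribute the same total weight (500) to the training loss. That equal contribution is the point of the adjustment.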

For mixed audiences containing both technical and non-technical interviewers, adopt a layered approach. Start with high-level context and business framing that everyone can follow. Then offer to go into technical details, phrasing it as “I can discuss the specific algorithms and implementation if that would be helpful.” This gives technical interviewers the opportunity to dig deeper while not forcing non-technical listeners through details they cannot evaluate.

During your explanation, watch for cues about whether you are at the right level of detail. If the interviewer seems confused or disengaged, you may be too technical and should return to higher-level explanation. If they are nodding along and asking for more specifics, you can dive deeper. If they interrupt with questions, use their questions to gauge what level they want. A question like “How did you validate your model?” signals they want technical detail about cross-validation or test set evaluation. A question like “How would this help the business?” signals they want higher-level impact discussion.

Practice explaining your project at multiple levels of abstraction. Develop a thirty-second elevator pitch, a three-minute overview, and a ten-minute detailed walkthrough. Being comfortable at each level allows you to adapt to interview dynamics and time constraints. You might start with the three-minute version, then dive deeper into areas the interviewer asks about while keeping other sections concise.

Use technical terminology appropriately but always be ready to explain it in simpler terms. When you mention concepts like “regularization” or “cross-validation,” gauge whether your audience needs clarification. With technical audiences, you can use specialized vocabulary freely. With mixed or non-technical audiences, briefly define terms when you introduce them or use simpler language from the start.

The key is developing sensitivity to your audience and flexibility in your presentation. The same project might be discussed primarily in business terms with one interviewer and primarily in technical terms with another. Your ability to make these adjustments shows communication skill that proves valuable in data science roles where you interact with diverse stakeholders.

Highlighting Your Problem-Solving Process

Beyond describing what you did, effective project discussions reveal how you think and solve problems. Interviewers want to see evidence of systematic problem-solving, learning from failures, and iterative refinement. Highlighting these process elements distinguishes you from candidates who simply recite project steps.

Emphasize how you approached uncertainty and ambiguity at the project’s outset. Most real-world projects start with vague problem statements and incomplete information. Discussing how you clarified requirements, identified what data you needed, and scoped the problem demonstrates valuable skills. You might explain “Initially, the problem statement was just ‘predict customer churn,’ which is too vague to be actionable. I worked to define churn specifically as no purchase in sixty days, established that predictions needed to occur monthly to align with marketing campaign cycles, and determined that we needed at least seventy percent recall to make the targeting efficient enough to justify costs.”

This clarification process shows you do not just accept vague requirements but actively work to define problems precisely. It demonstrates critical thinking about what success means and what constraints shape the solution. These problem-framing skills prove essential in real data science work.
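A good concrete detail to have ready is how a definition like "no purchase in sixty days" becomes a label. The Python sketch below assumes you have each customer's purchase dates and that you score customers as of a monthly reference date. The dates are hypothetical, and treating the window as starting just after the reference date and including its final day is one possible choice.

```python
from datetime import date, timedelta

CHURN_WINDOW = timedelta(days=60)  # "no purchase in sixty days"


def churn_label(purchase_dates, as_of):
    """1 if the customer makes no purchase in the 60 days after `as_of`, else 0."""
    window_end = as_of + CHURN_WINDOW
    purchased = any(as_of < d <= window_end for d in purchase_dates)
    return 0 if purchased else 1


as_of = date(2024, 1, 1)  # hypothetical monthly scoring date
print(churn_label([date(2023, 12, 20), date(2024, 2, 10)], as_of))  # 0: bought within the window
print(churn_label([date(2023, 12, 20), date(2024, 3, 15)], as_of))  # 1: next purchase came too late
```

In a real pipeline, features should use only data from on or before the reference date. That way the label window never leaks into the model's inputs.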

Discuss the exploratory phase where you investigated the data and formed hypotheses. Explain patterns you looked for, visualizations you created to understand the data, and initial insights that shaped your approach. You might say “I started by exploring purchase patterns over time, which revealed that most customers who churn show declining engagement weeks before their final purchase. This observation led me to engineer features capturing engagement trends rather than just point-in-time snapshots.”

This exploration narrative demonstrates curiosity and analytical thinking. It shows you do not jump immediately to modeling but first invest time understanding the problem domain and data. It reveals how domain insights inform technical decisions, connecting business understanding to technical implementation.
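An engagement trend feature can be as simple as the slope of a least-squares line through weekly activity counts. The Python sketch below uses hypothetical weekly session counts per customer. A negative slope captures the kind of decline described above, which a single point-in-time count would miss.

```python
def engagement_slope(weekly_counts):
    """Least-squares slope of activity per week; negative means engagement is falling."""
    n = len(weekly_counts)
    if n < 2:
        return 0.0  # not enough history to estimate a trend
    mean_week = (n - 1) / 2  # weeks are numbered 0..n-1
    mean_count = sum(weekly_counts) / n
    covariance = sum((w - mean_week) * (c - mean_count) for w, c in enumerate(weekly_counts))
    variance = sum((w - mean_week) ** 2 for w in range(n))
    return covariance / variance


declining = [12, 10, 9, 6, 4, 2]  # hypothetical weekly sessions for a fading customer
steady = [5, 6, 5, 5, 6, 5]
print(round(engagement_slope(declining), 2))  # about -2 sessions per week
print(round(engagement_slope(steady), 2))
```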

Be transparent about challenges you encountered and how you overcame them. Every project includes obstacles, and discussing them honestly showcases problem-solving resilience. Perhaps your initial model performed poorly due to severe class imbalance. Explain what you tried to address this, whether techniques worked or failed, and how you ultimately solved the problem. You might describe “My first model had ninety-five percent accuracy but caught almost no churning customers because it simply predicted everyone would stay. I addressed this by switching to recall and precision metrics more appropriate for imbalanced classes, trying SMOTE oversampling to balance the training set, and adjusting the decision threshold to prioritize recall over precision given the business need to catch potential churners even if that meant some false alarms.”

This transparent discussion of failure and recovery demonstrates several valuable qualities. It shows you do not give up when initial approaches fail. It reveals methodical debugging where you diagnosed the problem and tested solutions systematically. It demonstrates learning from results and adjusting your approach based on what the data tells you.
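You can show the accuracy trap and the threshold fix concretely with a short Python sketch. It uses hypothetical scores for 100 customers, 5 of whom churn. A model that predicts "stay" for everyone scores 95 percent accuracy with zero recall. Lowering the decision threshold until recall reaches a target then trades some false alarms for caught churners. In practice you would choose the threshold on a validation set, not on the final test set.

```python
def evaluate(y_true, y_pred):
    """Accuracy, recall, and precision for binary labels where 1 means churn."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return {"accuracy": accuracy, "recall": recall, "precision": precision}


def threshold_for_recall(y_true, scores, target_recall):
    """Highest score threshold whose recall meets the target.

    Recall never decreases as the threshold is lowered, so scanning from the top
    returns the qualifying threshold that flags the fewest customers.
    """
    for threshold in sorted(set(scores), reverse=True):
        preds = [1 if s >= threshold else 0 for s in scores]
        if evaluate(y_true, preds)["recall"] >= target_recall:
            return threshold
    return None  # reached only if the target cannot be met, e.g. target_recall > 1


# Hypothetical data: 5 churners followed by 95 customers who stay.
y_true = [1] * 5 + [0] * 95
scores = [0.9, 0.7, 0.45, 0.35, 0.2] + [0.6, 0.4, 0.3] + [0.1] * 92

always_stay = evaluate(y_true, [0] * len(y_true))
print(always_stay)  # high accuracy, zero recall

threshold = threshold_for_recall(y_true, scores, target_recall=0.75)
tuned = evaluate(y_true, [1 if s >= threshold else 0 for s in scores])
print(threshold, tuned)
```

Here the threshold that meets the target flags six customers, four of whom actually churn.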

Highlight decision points where you chose between alternatives and explain your reasoning. Projects involve countless choices, and explaining why you made specific decisions reveals your thought process. When you chose one algorithm over another, one evaluation metric over another, or one feature engineering approach over another, you had reasons. Articulating those reasons shows intentional decision-making.

You might explain “I considered both random forest and gradient boosting. Random forest trains faster and is less prone to overfitting, but gradient boosting typically achieves better performance on structured data like we had. Since prediction quality was more important than training speed for this monthly batch process, I chose gradient boosting. I also knew it provides feature importance scores that would help explain to the marketing team which factors most influenced churn predictions.”

Discuss how you validated your approach and iterated based on results. Effective problem-solving involves testing assumptions, measuring outcomes, and refining based on what you learn. Explain how you split data for validation, what metrics you tracked, how you identified weaknesses in early versions, and what changes you made to improve performance. This iterative refinement demonstrates that you treat model building as a scientific process of hypothesis and testing rather than hoping the first approach works.

Mention times when you simplified your approach after finding complex methods were not necessary. Sometimes the best problem-solving involves recognizing when simpler solutions suffice. If you tried deep learning but found that gradient boosting performed just as well with much faster training and easier interpretability, discussing that choice demonstrates pragmatic judgment about cost-benefit tradeoffs in technical decisions.

Connect your problem-solving process to outcomes by showing how your methodical approach led to results. Frame your work as a journey from initial uncertainty through investigation and experimentation to eventual solution. This narrative arc makes your project story more engaging while demonstrating the problem-solving capabilities that interviewers value.

Demonstrating Business Impact and Value

Data science exists to create value for organizations, yet many candidates discuss projects purely in technical terms without connecting to business impact. Demonstrating that you think about value creation and real-world application distinguishes you from technically skilled candidates who lack business perspective.

Frame your project’s purpose in terms of business problems or opportunities rather than just technical challenges. Instead of “I wanted to practice using neural networks,” explain “I built a recommendation system to help the e-commerce platform increase average order value by suggesting relevant products that customers might not discover on their own.” This business framing immediately establishes that you understand data science as a tool serving organizational objectives.

Quantify business impact whenever possible, even if approximate. Numbers make impact concrete and memorable. Rather than saying “the model improved customer retention,” specify “the retention team using model predictions retained approximately fifteen percent more at-risk customers than their previous approach of random outreach, translating to an estimated fifty thousand dollars in monthly retained revenue based on average customer lifetime value.”

These quantified impacts demonstrate that you think about return on investment, not just technical performance. Even for academic or portfolio projects without real business deployment, you can estimate potential impact based on reasonable assumptions about how organizations might use your work.

Discuss how your technical choices were influenced by business constraints. Real-world data science operates under time, resource, and complexity constraints that academic exercises ignore. Mentioning these considerations shows practical thinking. You might explain “While deep learning might have provided marginally better accuracy, the marketing team needed to understand which factors influenced predictions to design targeted interventions. This requirement led me to choose gradient boosting, whose feature importance scores the team could act on, over a black-box neural network.”

Explain how stakeholders would actually use your work in practice. Walk through the workflow from prediction to action. For a churn prediction model, you might describe “Each month, the model scores all active customers for churn risk. Customers above the threshold are flagged for the retention team, who can then prioritize their outreach efforts, personalize their messaging based on the risk factors the model identified, and track conversion rates to measure retention campaign effectiveness.”

This implementation perspective demonstrates that you think beyond building models to how they integrate into organizational processes. It shows awareness that models only create value when people can use them effectively.

Address potential business objections or concerns about your approach. Perhaps stakeholders might worry about the cost of intervening with customers who would not actually churn. Acknowledging this concern and explaining how you balanced false positives against missed churners shows sophisticated thinking about tradeoffs. You demonstrate understanding that perfect technical performance may conflict with business constraints or priorities.
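One way to make the false-positive versus missed-churner tradeoff explicit is to assign each kind of error a cost and choose the threshold with the lowest total. The Python sketch below is deliberately simplified. It assumes a hypothetical $20 cost for an unnecessary retention offer and a $500 loss per missed churner, and it ignores the offer cost for customers who are correctly flagged.

```python
def total_error_cost(y_true, scores, threshold, false_alarm_cost, missed_churn_cost):
    """Cost of false alarms plus missed churners when flagging scores >= threshold."""
    false_alarms = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= threshold)
    missed = sum(1 for y, s in zip(y_true, scores) if y == 1 and s < threshold)
    return false_alarms * false_alarm_cost + missed * missed_churn_cost


def cheapest_threshold(y_true, scores, false_alarm_cost, missed_churn_cost):
    """Try every observed score as a threshold (plus 'flag nobody') and keep the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]  # inf flags nobody
    cost, threshold = min(
        (total_error_cost(y_true, scores, t, false_alarm_cost, missed_churn_cost), t)
        for t in candidates
    )
    return threshold, cost


# Hypothetical validation data: 3 churners among 10 customers.
y_true = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.6, 0.3, 0.8, 0.5, 0.4, 0.2, 0.2, 0.1, 0.1]
threshold, cost = cheapest_threshold(y_true, scores, false_alarm_cost=20, missed_churn_cost=500)
print(threshold, cost)  # 0.3 60
```

Because a missed churner costs far more than a wasted offer in this setup, the cheapest threshold is low. It accepts three false alarms in order to catch every churner.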

Discuss how you validated that your solution actually provided business value, not just technical performance. Technical metrics like accuracy or AUC matter, but they are only proxies for real value. If you had the opportunity to test your solution in practice, discuss what business metrics you tracked. If not, explain how you would validate value in production. This validation mindset shows maturity in understanding that models must deliver real-world impact to justify their cost.
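If you did not run a production test, you can still describe how you would. You would hold out a random control group of flagged customers who receive no outreach, then compare retention between the two groups. The Python sketch below is a standard two-proportion z-test that relies on the normal approximation. The group sizes and retention counts are hypothetical.

```python
import math


def two_proportion_ztest(retained_a, n_a, retained_b, n_b):
    """Two-sided z-test for a difference in retention rates (pooled standard error)."""
    rate_a, rate_b = retained_a / n_a, retained_b / n_b
    pooled = (retained_a + retained_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return rate_a - rate_b, z, p_value


# Hypothetical results: flagged customers who got outreach vs. a random holdout who did not.
lift, z, p = two_proportion_ztest(retained_a=620, n_a=1000, retained_b=560, n_b=1000)
print(f"retention lift: {lift:.1%}, z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference in retention is unlikely to be due to chance alone. Multiplying that lift by the number of customers and their value turns it into a business impact figure.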

Connect your work to strategic objectives when relevant. Perhaps your analysis revealed insights that could inform broader strategy beyond the immediate use case. Your customer churn analysis might have discovered that churn concentrates in specific product categories, suggesting product quality issues worth investigating. Mentioning these strategic insights demonstrates that you see opportunities to create value beyond the specific project scope.

Frame limitations or next steps in business terms as well. Rather than just noting technical improvements you would make, discuss their business implications. You might say “The current model provides strong overall performance but struggles with newly acquired customers. Improving predictions for this segment would be particularly valuable because acquiring customers is expensive, so retaining them from the start provides higher ROI than retention efforts for established customers.”

Even when discussing technical projects or learning exercises without real business application, you can frame them in business contexts. If you built an image classifier for a personal project, discuss how similar techniques solve real business problems in quality control, medical diagnosis, or content moderation. This contextualization shows you connect technical skills to practical applications.

Preparing for Deep-Dive Follow-Up Questions

After your initial project narrative, interviewers typically ask follow-up questions probing specific aspects in greater depth. Anticipating common follow-up questions and preparing thoughtful answers ensures you can discuss your work confidently at whatever level the interviewer requires.

Technical interviewers often ask you to explain your algorithm choices in detail. They want to understand not just what you used but why. Prepare to discuss the algorithms you considered, what strengths and weaknesses each had for your problem, what experiments you ran to compare them, and what factors led to your final choice. For instance, if asked why you chose XGBoost, be ready to explain that it handles tabular data effectively, provides feature importance scores, allows custom loss functions, and often performs at or near the top on structured data like the data you had.

Expect questions about your evaluation strategy and metrics. Be prepared to explain how you split your data for training and testing, what metrics you used to assess performance and why those metrics were appropriate, how you handled class imbalance in evaluation, and whether you used cross-validation or other validation strategies. A question like “How did you validate your model?” requires detailed answers about your methodology, not just “I used test data.”
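When asked how you validated your model, it helps to show that you preserved the churn rate across folds. The Python sketch below builds stratified k-fold indices from scratch on hypothetical labels. Libraries such as scikit-learn provide the same idea as `StratifiedKFold`. For churn data with a strong time component, a time-based split is often more realistic, where you train on earlier months and evaluate on later ones. This sketch does not cover that case.

```python
import random
from collections import defaultdict


def stratified_kfold_indices(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds keep the overall class mix."""
    rng = random.Random(seed)  # local RNG so results are reproducible
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for j, i in enumerate(indices):
            folds[j % k].append(i)  # deal each class round-robin across the folds

    for f in range(k):
        test_idx = sorted(folds[f])
        held_out = set(test_idx)
        train_idx = [i for i in range(len(labels)) if i not in held_out]
        yield train_idx, test_idx


# Hypothetical labels: 50 churners (1) and 950 non-churners (0).
labels = [1] * 50 + [0] * 950
for fold, (train_idx, test_idx) in enumerate(stratified_kfold_indices(labels, k=5, seed=42)):
    churn_rate = sum(labels[i] for i in test_idx) / len(test_idx)
    print(f"fold {fold}: {len(train_idx)} train, {len(test_idx)} test, churn rate {churn_rate:.1%}")
```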

Interviewers frequently probe your handling of data quality issues. Prepare to discuss how you detected missing values and decided how to handle them, how you identified and treated outliers, how you handled categorical variables with many categories, what data transformations or scaling you applied, and how you dealt with any data inconsistencies. These questions test your practical data wrangling skills and judgment about preprocessing decisions.
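The Python sketch below illustrates three common preprocessing choices. It fills missing values with the median and keeps a missing-value flag, caps outliers at Tukey's fences (1.5 * IQR beyond the quartiles), and groups rare categories into an "OTHER" bucket. These are illustrative defaults rather than the right answer for every dataset, and the column values are hypothetical. In a real project you would compute the median, fences, and category counts on the training split only, then reuse them on validation and test data.

```python
from collections import Counter
from statistics import median, quantiles


def impute_median(values):
    """Fill None with the median of observed values and return a 0/1 missing flag."""
    fill = median(v for v in values if v is not None)
    filled = [fill if v is None else v for v in values]
    was_missing = [int(v is None) for v in values]
    return filled, was_missing


def cap_outliers(values, k=1.5):
    """Clip values to Tukey's fences: [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def group_rare(categories, min_count=2, other="OTHER"):
    """Replace categories seen fewer than min_count times with a shared bucket."""
    counts = Counter(categories)
    return [c if counts[c] >= min_count else other for c in categories]


# Hypothetical columns.
order_values = [20, 25, None, 30, 22, None, 400]
filled, was_missing = impute_median(order_values)
capped = cap_outliers(filled)
segments = group_rare(["books", "books", "toys", "garden", "toys", "music"])
print(filled, was_missing)
print(capped)
print(segments)
```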

Feature engineering questions explore your creativity and domain understanding. Be ready to explain what features you created beyond what existed in raw data, what intuition or hypothesis motivated each engineered feature, whether you tried different feature engineering approaches, and how you validated that features improved performance. Strong answers show that feature engineering involved thoughtful hypothesis formation rather than random feature creation.

Expect questions about what did not work during your project. Interviewers want to see that you tried multiple approaches and learned from failures. Prepare examples of techniques you experimented with that did not improve results, be ready to explain why you think they failed, and discuss what you learned from unsuccessful experiments. These discussions demonstrate scientific thinking and resilience in the face of setbacks.

Be prepared for questions about how your solution would scale or work in production. Even for academic projects, interviewers might ask how you would deploy your model, how you would handle much larger datasets, what monitoring you would implement, or how you would update models over time. These questions test whether you think about production concerns beyond just building models in notebooks.

Anticipate questions about model interpretability and explainability. Especially for business applications, stakeholders need to understand what drives predictions. Prepare to discuss what features most influenced your model’s predictions, how you would explain a specific prediction to a non-technical user, what tools or techniques you used for model interpretation, and why interpretability mattered or did not matter for your use case.
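If you want a model-agnostic way to discuss which features drove predictions, permutation importance is one option: shuffle one feature's column, re-score the model, and treat the increase in error as that feature's importance. The sketch below assumes `predict` is any function mapping a row (a list of feature values) to a prediction and `metric` is an error function where lower is better; both are placeholders, and the toy model is hypothetical. It is ideally run on held-out data, and correlated features can share or mask each other's importance, which is worth mentioning if asked.

```python
import random


def permutation_importance(predict, rows, y, metric, n_repeats=5, seed=0):
    """Average increase in `metric` when each feature column is shuffled,
    which breaks that feature's relationship with the target."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(r) for r in rows])
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled_rows = []
            for r, value in zip(rows, column):
                new_row = list(r)
                new_row[j] = value  # replace only feature j
                shuffled_rows.append(new_row)
            score = metric(y, [predict(r) for r in shuffled_rows])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def model(row):
    # Toy model that uses feature 0 and ignores feature 1 entirely.
    return 2 * row[0]


rows = [[float(i), float(i % 3)] for i in range(20)]
y = [2 * r[0] for r in rows]
print(permutation_importance(model, rows, y, mse))
```

The ignored feature scores exactly zero, while shuffling the feature the model actually uses raises the error. That contrast is also the intuition you would convey to a non-technical stakeholder.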

Expect hypothetical extensions or variations on your project. Interviewers might ask how your approach would change with different constraints, more data, or different objectives. For instance, “What if you needed real-time predictions instead of batch?” or “How would you approach this if you had ten times more data?” These questions test your ability to think flexibly about problems and understand how different requirements shape solutions.

Be ready for questions that probe the limits of your knowledge. Many interviewers deliberately ask increasingly difficult questions until they find topics you cannot answer confidently. This is normal and not a sign of failure. When you reach the edge of your knowledge, admit it honestly while showing how you would approach learning what you do not know. You might say “I have not worked with that specific technique, but based on my understanding of similar approaches, I would expect it to help with this problem because…” This response shows intellectual honesty combined with analytical thinking.

Prepare for behavioral questions connected to your project. You might be asked about challenges you faced, how you handled disagreements if working on a team, what you learned from the project, or what you would do differently knowing what you know now. These questions assess soft skills and self-awareness alongside technical capabilities.

Practice explaining technical concepts at different levels of depth. An interviewer might ask you to explain gradient boosting, and your answer should adapt to their follow-up questions. If they ask for more detail, you should be able to discuss how boosting builds models sequentially, with each new model correcting the errors of the ensemble so far. If they ask for even more depth, you should understand how gradients guide the fitting of each new tree: each tree is fit to the negative gradient of the loss with respect to the current predictions, which for squared-error loss is simply the residuals. This multi-level understanding demonstrates mastery.
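For the deepest level of that explanation, a from-scratch sketch can help you check your own understanding. The version below is a simplification under stated assumptions: one input feature, squared-error loss, and depth-one trees (stumps), with hypothetical function names. It illustrates that, for squared error, the negative gradient of the loss with respect to the current predictions is just the residual, so each new stump is fit to the residuals and added with a shrinkage factor (the learning rate). Production libraries such as XGBoost build on this core loop with deeper trees, regularization, and second-order gradient information.

```python
def fit_stump(xs, targets):
    """Best single-threshold split on x under squared error; each side
    predicts the mean target on that side. Needs two distinct x values."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal x values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    """Gradient boosting for L(y, F) = (y - F)**2 / 2, whose negative
    gradient with respect to F is the residual y - F."""
    base = sum(ys) / len(ys)  # F_0: the constant that minimizes squared error
    preds = [base] * len(xs)
    trees = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]  # negative gradients
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        # Move each prediction a small step in the direction the new tree suggests.
        preds = [p + learning_rate * tree(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(tree(x) for tree in trees)


# A step-shaped target: 0 below x = 1.5, 5 from 1.5 upward.
xs = [i / 10 for i in range(30)]
ys = [0.0 if x < 1.5 else 5.0 for x in xs]
model = gradient_boost(xs, ys)
train_mse = sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(round(model(0.2), 3), round(model(2.5), 3), train_mse)
```

Because each step moves only a fraction of the way toward the residuals, the fit approaches the step gradually; being able to explain why that shrinkage tends to help generalization is a natural next layer of depth.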

The key to handling deep-dive questions is genuine understanding of your work. You cannot fake your way through detailed technical probing. But if you truly understand what you did and why, you can confidently discuss your project at whatever depth the interviewer requires. While working on your project, you made countless decisions and overcame numerous challenges. Reviewing those experiences and articulating your reasoning prepares you for the deep-dive questions that reveal the depth of your expertise.

Practicing Your Project Presentations

Knowing what to say is only half the challenge. Delivering your project discussion smoothly and confidently requires practice. Rehearsing your presentation helps you internalize your narrative, identify weak points, and build the confidence that comes from repetition.

Start by writing out your project narrative following the structure outlined earlier: problem statement, approach overview, data description, methodology walkthrough, results, and limitations. This written version helps you organize your thoughts and ensures you cover all important points. Aim for a written narrative that would take three to five minutes to deliver when spoken aloud.

Once you have written your narrative, practice delivering it aloud. Speaking reveals awkward phrasing, unclear explanations, and pacing issues that you might miss when reading silently. Time yourself to ensure you can cover your core narrative in a reasonable timeframe. Most first drafts run too long, so edit them to keep the overview concise while preserving key points.

Record yourself presenting your project and watch or listen to the recording. This self-review, while perhaps uncomfortable, provides invaluable feedback about your delivery. Notice verbal tics like “um” or “like” that you may not realize you use. Observe whether your explanations are clear or whether you assume knowledge the listener lacks. Evaluate whether your pacing is too fast, too slow, or uneven. These observations guide improvements that make your delivery more polished.

Practice with different audiences to develop flexibility in your presentation. Present your project to technical friends who can assess whether your explanations are accurate and appropriately detailed. Present to non-technical friends or family to verify that you can make your work accessible to listeners without a technical background. Each presentation helps you develop sensitivity to what different audiences need from your explanation.

Conduct mock interviews where someone plays the interviewer role, asking follow-up questions about your project. This practice helps you think on your feet and respond to probing questions. Ask your mock interviewer to challenge your decisions, question your approach, and probe technical details. These simulated deep dives prepare you for real interviews where you cannot predict exactly what will be asked.

Prepare concise answers to common follow-up questions you expect. While you cannot script an entire interview, having well-rehearsed responses to predictable questions ensures you answer smoothly rather than fumbling for words. Practice explaining why you chose specific algorithms, how you validated your model, what challenges you faced, and what you learned from the project.

Create visual aids if appropriate for your presentation format. For some interviews, you might share your screen showing a few key slides or notebook excerpts. Select visualizations that illustrate important findings or demonstrate your analytical thinking. Practice incorporating these visuals into your narrative smoothly. Ensure any code or charts you show are clean, well-labeled, and easy to understand at a glance.

Anticipate difficult questions about your project and practice handling them honestly. Every project has weaknesses or areas that could be improved. Practice acknowledging these limitations while framing them constructively. If asked “What was the biggest mistake you made in this project?” have an honest example prepared along with what you learned from it. This preparation prevents you from appearing defensive or unprepared when facing critical questions.

Practice your project discussion until the narrative feels natural rather than memorized. You want to internalize the key points and flow while maintaining flexibility to adapt to interview dynamics. Overly rehearsed presentations sound robotic and cannot adjust to interviewer questions or interests. Conversely, unrehearsed discussions tend to ramble. The right balance comes from practicing enough to be comfortable but not so much that spontaneity disappears.

Vary the starting point of your practice presentations. Interviewers might ask “Tell me about your customer churn project” or “What is your favorite project you have worked on?” or “Walk me through something challenging you have built.” Each phrasing slightly changes your entry point into the discussion. Practicing from different angles ensures you can adapt your narrative to however the question is framed.

Time yourself under simulated pressure conditions. Set a timer, take a few deep breaths as if starting an interview, then deliver your presentation. This practice builds comfort performing under the mild stress of interviews. Notice whether pressure causes you to rush through your narrative, forget key points, or become less articulate. Working through these pressure responses in practice makes them less likely to derail actual interviews.

Get feedback from others on your practice presentations and incorporate their suggestions. Perhaps your description of the business problem is unclear. Maybe you use too much jargon. Possibly your results discussion needs more quantitative detail. This external feedback identifies blind spots you miss when self-assessing.

Review and refine your project narrative periodically, especially as you learn more or gain new perspective. Early in your learning, you might focus on implementing algorithms. Later, you might recognize opportunities to better discuss business impact or alternative approaches you could have tried. Updating your narrative to reflect evolving understanding keeps it fresh and sophisticated.

Conclusion: Turning Projects into Compelling Narratives

The question “Tell me about a data science project” appears in virtually every interview because it provides a window into how you think, what you value, and how you approach complex challenges. Your project discussion can distinguish you from other technically qualified candidates by revealing the problem-solving ability, communication skills, and business awareness that make great data scientists, not just competent ones.

Transforming your project work into compelling interview narratives requires strategic preparation beyond just remembering what you did. You must craft structured narratives that quickly establish context and then guide interviewers through your approach while highlighting your decision-making process. You need to develop the ability to adjust technical depth dynamically based on your audience, explaining your work at the level most appropriate for who you are speaking with.

Effective project discussions emphasize your problem-solving process at least as much as your technical implementation. Show how you approached uncertainty, explored data to form hypotheses, overcame challenges through systematic debugging, made intentional choices between alternatives, and iterated based on results. These process elements demonstrate thinking skills that transfer across projects, while pure technical descriptions show only what you did on one specific problem.

Connecting your technical work to business value and real-world impact elevates your project discussion from academic exercise to demonstration of practical capability. Frame problems in terms of decisions or outcomes, quantify impact whenever possible, discuss how stakeholders would actually use your work, and show awareness of constraints that shape real-world data science. This business perspective shows that you think beyond building models to creating value.

Anticipating and preparing for deep-dive follow-up questions ensures you can discuss your work confidently at whatever level interviewers probe. Review your algorithm choices, evaluation strategies, data handling decisions, and feature engineering approaches until you can explain your reasoning clearly. Practice articulating what did not work and what you learned from failures, as these discussions reveal resilience and scientific thinking.

Regular practice transforms your project knowledge into polished interview performance. Rehearse your narratives aloud, record yourself to identify areas for improvement, practice with different audiences to develop flexibility, and conduct mock interviews that simulate the pressure of real conversations. This practice builds confidence that allows you to focus on connecting with the interviewer rather than struggling to remember what to say.

Remember that interviewers already know what project you worked on from your resume or portfolio. What they learn from your discussion is how you think, how you communicate, and whether you would be effective on their team. Your project narrative is not just reporting facts about work you did but demonstrating capabilities you bring to future challenges.

Begin preparing today by selecting one project and developing a structured narrative following the frameworks in this guide. Practice delivering it aloud, record yourself, and refine based on what you observe. Prepare answers to anticipated follow-up questions. The investment you make in crafting compelling project narratives pays dividends not just in landing jobs but in developing communication skills that serve you throughout your data science career. Your projects tell your professional story; make sure you tell that story effectively.