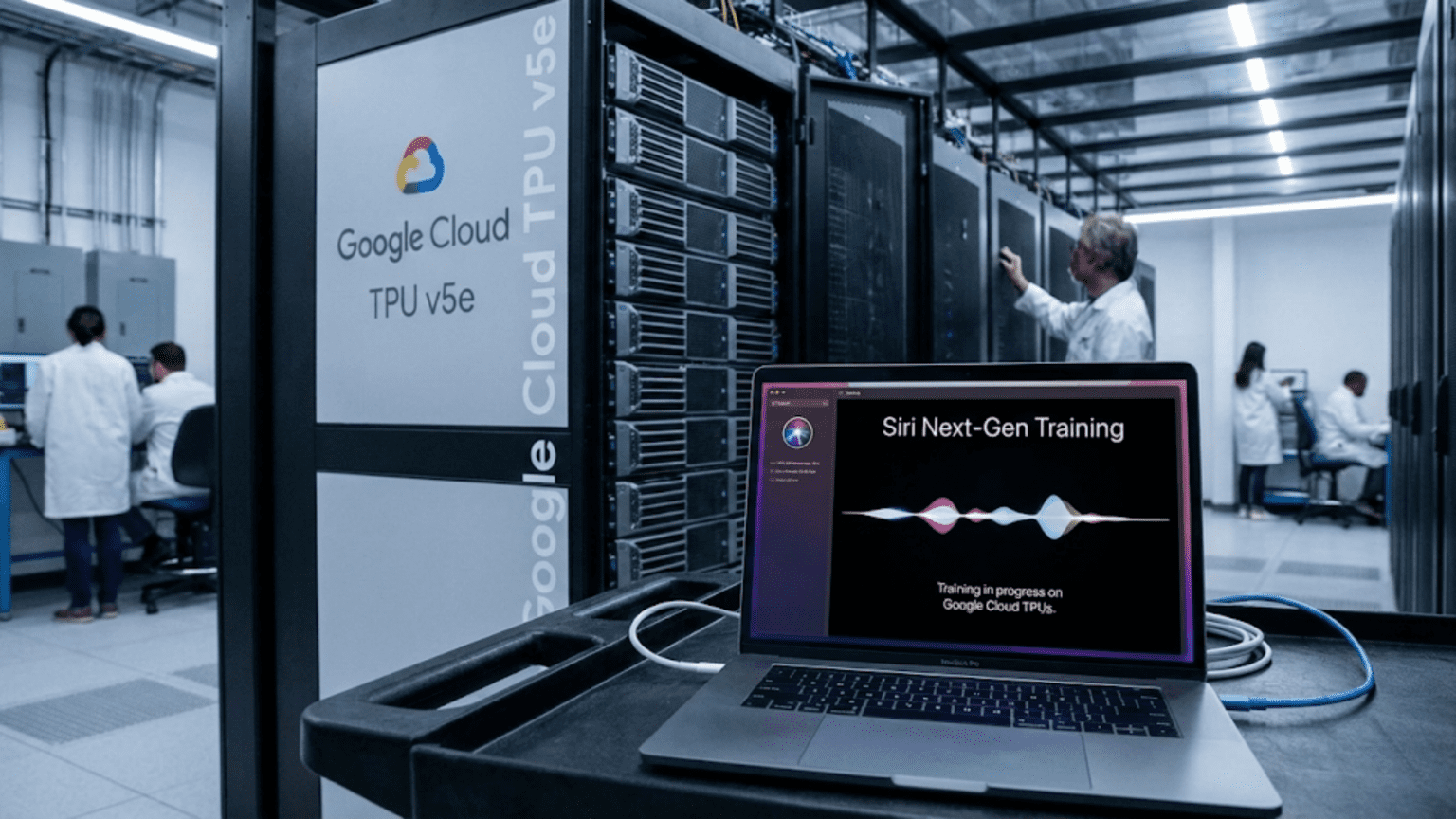

Apple, a company legendary for its commitment to proprietary hardware and tightly controlled ecosystems, is reportedly using Google Cloud’s Tensor Processing Units (TPUs) to train the next generation of its Siri virtual assistant, according to reports emerging on January 22, 2026. The development, if confirmed, would represent a significant strategic shift for Apple in its approach to artificial intelligence development and a pragmatic acknowledgment that sometimes partnering with competitors makes more sense than building everything in-house.

The “LLM Siri” system, as it’s been referred to internally, aims to transform Apple’s voice assistant from its current rule-based and limited natural language system into a truly conversational AI capable of understanding context, maintaining multi-turn dialogues, and performing complex reasoning tasks. This evolution would bring Siri more in line with advanced AI assistants like ChatGPT, Claude, and Google’s Gemini—systems that have demonstrated dramatically superior conversational capabilities since their mainstream emergence in 2022-2023.

Apple’s decision to use Google Cloud infrastructure for such a strategically important project is noteworthy given the company’s historical preference for developing proprietary silicon and controlling its entire technology stack. Apple designs its own processors for iPhones, iPads, and Macs (the A-series, M-series, and related chips), operates its own data centers, and has invested heavily in custom neural engine accelerators specifically for on-device AI workloads. The choice to train a flagship AI system on a competitor’s cloud platform suggests that practical considerations around time-to-market and computational scale outweighed strategic preferences for independence.

Google’s TPUs are among the most advanced hardware available for training large AI models. Google developed these custom chips specifically to accelerate neural network computations, with successive generations (including TPU v5 and v6) offering progressively better performance and energy efficiency. Google Cloud makes these chips available to external customers, though the newest capabilities are often used internally at Google first before becoming broadly available.

The computational requirements for training modern large language models are staggering. Frontier models like GPT-4, Claude Opus, and Gemini Ultra require clusters of thousands or tens of thousands of specialized AI accelerators running for weeks or months. The associated costs can run into the hundreds of millions of dollars for a single training run. Even companies with substantial resources face difficult decisions about whether to build dedicated infrastructure or rent capacity from cloud providers who can amortize costs across multiple customers.
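To make the scale concrete, here is a back-of-envelope sketch. It uses the widely cited rule of thumb that training a dense transformer takes roughly 6 FLOPs per parameter per token. Every number in it (model size, token count, per-chip throughput, utilization, cluster size) is a hypothetical placeholder, not a figure from Apple, Google, or any report.

```python
# Back-of-envelope training compute using the common ~6 * N * D FLOPs
# approximation for dense transformers. All inputs are hypothetical.

SECONDS_PER_DAY = 86_400


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training FLOPs: ~6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def accelerator_days(total_flops: float, peak_flops_per_chip: float,
                     utilization: float) -> float:
    """Chip-days needed when each chip sustains a fraction of its peak."""
    sustained = peak_flops_per_chip * utilization
    return total_flops / sustained / SECONDS_PER_DAY


def wall_clock_days(chip_days: float, num_chips: int) -> float:
    """Elapsed days if the work is spread evenly across num_chips."""
    return chip_days / num_chips


if __name__ == "__main__":
    # Hypothetical run: 1 trillion parameters trained on 10 trillion tokens.
    flops = training_flops(params=1e12, tokens=1e13)
    # Hypothetical chip: 1e15 FLOP/s peak, 40% sustained utilization.
    chip_days = accelerator_days(flops, peak_flops_per_chip=1e15, utilization=0.4)
    days = wall_clock_days(chip_days, num_chips=20_000)
    print(f"total FLOPs: {flops:.2e}")
    print(f"chip-days:   {chip_days:,.0f}")
    print(f"wall clock:  {days:.1f} days on 20,000 chips")
```

Under these assumed inputs the run occupies a 20,000-chip cluster for roughly three months, which is in line with the "weeks or months" range described above. Changing any one input shifts the answer proportionally.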

For Apple, timing may be a critical factor driving the Google Cloud decision. The company reportedly lags behind competitors in conversational AI capabilities, with Siri frequently the subject of user complaints and unfavorable comparisons to alternatives. Internal development of sufficient training infrastructure could take years, during which competitors continue advancing their AI assistants. Renting Google Cloud capacity allows Apple to accelerate development while keeping its options open on future infrastructure investments.

The partnership dynamics are complex given the competitive relationship between Apple and Google across multiple domains. Google pays Apple billions of dollars annually to be the default search engine in Safari, making Apple one of Google’s largest partners even as they compete in smartphones, tablets, browsers, cloud services, and AI. This pragmatic mix of cooperation and competition, sometimes called “coopetition,” has characterized their relationship for years.

Technical experts note that training on Google Cloud doesn’t necessarily mean Apple is becoming dependent on Google technology. The training process produces a set of neural network weights (the model) that can be deployed anywhere, including on Apple’s own infrastructure or on-device using Apple’s Neural Engine. Once trained, the model is essentially a data file that can be moved to any compatible hardware. Apple would retain full ownership and control of the resulting AI system.
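The "model as a data file" point can be illustrated with a toy sketch. The byte format, the function names, and the tiny linear model below are all invented for illustration and do not reflect any real checkpoint format. The point is only that weights written out in one environment can be read back elsewhere and give identical predictions.

```python
import struct

# Toy illustration: trained weights are just numbers that can be written to
# bytes in one place and read back in another. The format is hypothetical.


def save_weights(weights: dict[str, list[float]]) -> bytes:
    """Serialize named weight vectors into a flat little-endian byte string."""
    out = bytearray(struct.pack("<I", len(weights)))
    for name, values in weights.items():
        encoded = name.encode("utf-8")
        out += struct.pack("<I", len(encoded)) + encoded
        out += struct.pack("<I", len(values))
        out += struct.pack(f"<{len(values)}d", *values)
    return bytes(out)


def load_weights(blob: bytes) -> dict[str, list[float]]:
    """Inverse of save_weights: rebuild the name -> values mapping."""
    offset = 0
    (count,) = struct.unpack_from("<I", blob, offset)
    offset += 4
    weights: dict[str, list[float]] = {}
    for _ in range(count):
        (name_len,) = struct.unpack_from("<I", blob, offset)
        offset += 4
        name = blob[offset:offset + name_len].decode("utf-8")
        offset += name_len
        (n,) = struct.unpack_from("<I", blob, offset)
        offset += 4
        weights[name] = list(struct.unpack_from(f"<{n}d", blob, offset))
        offset += 8 * n
    return weights


def predict(weights: dict[str, list[float]], x: list[float]) -> float:
    """Tiny linear model: dot(w, x) + b."""
    return sum(w * xi for w, xi in zip(weights["w"], x)) + weights["b"][0]


if __name__ == "__main__":
    trained = {"w": [0.5, -1.25, 2.0], "b": [0.1]}  # stands in for "trained in the cloud"
    blob = save_weights(trained)                     # the portable artifact
    restored = load_weights(blob)                    # loaded on different hardware
    sample = [1.0, 2.0, 3.0]
    print(predict(trained, sample) == predict(restored, sample))
```

Real models are far larger and use dedicated checkpoint formats, but the portability argument is the same: nothing in the file ties it to the hardware that produced it.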

However, training represents only part of the AI assistant lifecycle. Inference—actually running the model to respond to user queries—generates ongoing computational requirements. If Apple trains a very large Siri model on Google Cloud, the company faces a choice: run inference on Google infrastructure (creating ongoing dependency and costs), run it on Apple’s own infrastructure (requiring significant investment in deployment hardware), or split processing between on-device and cloud (the likely approach given Apple’s privacy emphasis).
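The split between on-device and cloud inference can be sketched as a simple routing decision. This is a hypothetical illustration, not a description of how Apple routes Siri requests. The `Request` fields, the token threshold, and the rules are all assumptions chosen to show the shape of the trade-off.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on_device"
    CLOUD = "cloud"


@dataclass
class Request:
    prompt_tokens: int           # size of the user's request
    needs_world_knowledge: bool  # e.g. open-ended questions vs. "set a timer"
    device_online: bool          # whether a cloud round trip is possible


# Hypothetical cutoff for what a small on-device model handles well.
ON_DEVICE_MAX_TOKENS = 512


def route(req: Request) -> Target:
    """Prefer on-device; fall back to the cloud only for heavy requests."""
    if not req.device_online:
        # No network: the on-device model is the only option.
        return Target.ON_DEVICE
    if req.needs_world_knowledge or req.prompt_tokens > ON_DEVICE_MAX_TOKENS:
        return Target.CLOUD
    return Target.ON_DEVICE


if __name__ == "__main__":
    print(route(Request(prompt_tokens=12, needs_world_knowledge=False, device_online=True)))
    print(route(Request(prompt_tokens=2000, needs_world_knowledge=True, device_online=True)))
```

Defaulting to on-device keeps simple requests private and fast, while the cloud path absorbs requests that a small local model cannot serve. Where the cloud path runs (Google's infrastructure or Apple's own) is the dependency question the paragraph above raises.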

Apple’s privacy-focused brand positioning adds another dimension to the partnership. The company has differentiated itself from Google and other cloud-dependent competitors by emphasizing on-device processing and minimal data collection. Training Siri on Google Cloud doesn’t necessarily compromise this positioning if Apple maintains appropriate data handling practices, but it creates perceptions the company must manage carefully.

The broader AI industry increasingly operates on a model where training and inference occur in different environments optimized for each workload. Training demands massive parallel computation and tolerates higher latency, making it suitable for specialized cloud infrastructure with thousands of accelerators. Inference prioritizes response speed and often benefits from proximity to users, making on-device or edge processing attractive when feasible. Apple’s strategy likely involves training centrally while deploying inference across billions of devices.

Apple’s investment in AI infrastructure is substantial but has focused primarily on inference capabilities embedded in devices. The company’s Neural Engine, custom silicon integrated into A-series and M-series processors, accelerates on-device AI workloads for features like Face ID, photo processing, voice recognition, and other machine learning tasks. These chips excel at inference but aren’t designed for the kind of massive-scale training required for large language models.

Reports suggest Apple has begun building out more substantial AI training infrastructure, but such data centers take years to design, construct, and become operational. Multi-billion-dollar investments in facilities, power infrastructure, cooling systems, and specialized computing equipment cannot be deployed overnight. In the interim, cloud providers offer the fastest path to accessing the computational resources needed for competitive AI development.

The competitive implications extend beyond Apple and Google. If Apple successfully develops a dramatically improved Siri using Google’s infrastructure, it validates the business model for cloud AI training services while potentially accelerating competition in AI assistants. Other device manufacturers and software companies might follow similar approaches, driving demand for AI cloud services.

For Google Cloud specifically, landing Apple as a customer for AI workloads represents a major validation and could influence other enterprise customers evaluating where to run their AI development. Despite strong technical capabilities, Google Cloud has historically run third behind Amazon Web Services and Microsoft Azure in cloud market share. High-profile AI customers could help close this gap as AI workloads become an increasingly important driver of cloud spending.

Amazon Web Services and Microsoft Azure also offer AI training infrastructure, including custom chips (Amazon’s Trainium, Microsoft’s Azure Maia) alongside Nvidia GPUs. Apple’s choice of Google Cloud over these alternatives may reflect technical assessments of TPU performance, pricing considerations, existing relationships, or geographic availability of capacity. The decision is unlikely to be permanent or exclusive, as large AI labs typically maintain relationships with multiple cloud providers for redundancy and negotiating leverage.

Looking ahead, the next-generation Siri could launch as early as late 2026, or in 2027, though Apple has not officially confirmed timelines or capabilities. The system will likely face intense scrutiny and comparisons to established conversational AI systems. Success could reinvigorate enthusiasm for Apple’s AI strategy and demonstrate that the company remains competitive in AI despite its more cautious public approach. Failure could reinforce perceptions that Apple has fallen behind in the AI race and struggles to compete with AI-native companies.

The Google Cloud training arrangement represents Apple’s pragmatic adaptation to the realities of modern AI development. Building competitive AI systems requires massive computational resources and specialized expertise. Rather than delay progress while constructing proprietary infrastructure, Apple appears to be leveraging available resources to maintain competitive positioning. Whether this approach proves successful will become clear as new AI-powered Siri capabilities roll out to hundreds of millions of devices in the coming years.