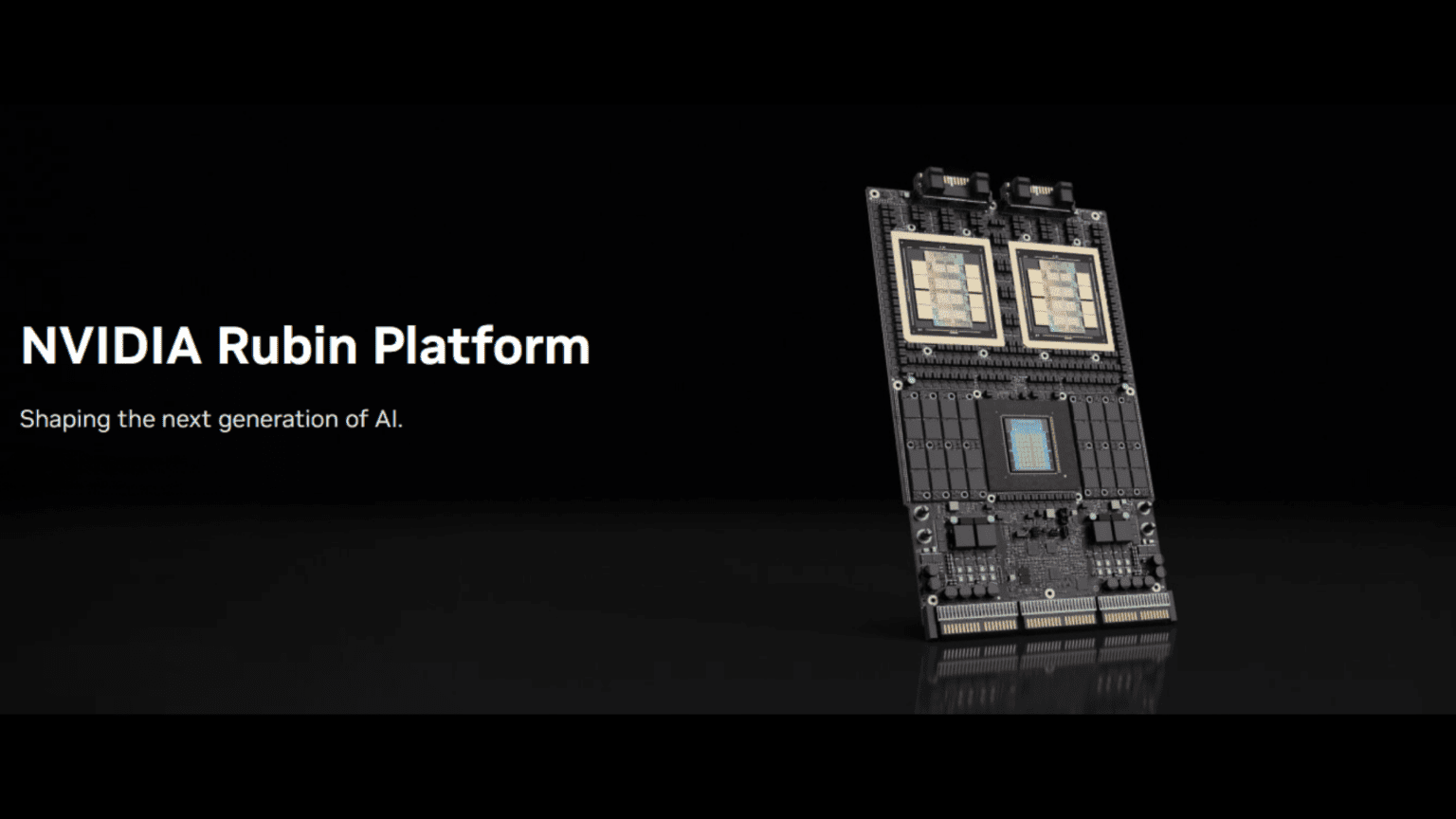

Nvidia’s CES 2026 keynote delivered the spectacle that has become synonymous with CEO Jensen Huang’s presentations, but beneath the showmanship lay substantial announcements about the company’s next-generation AI computing platform. The Vera Rubin architecture, succeeding the current Blackwell platform, incorporates six distinct chip designs manufactured by TSMC and represents Nvidia’s blueprint for sustaining its dominance in AI infrastructure through the second half of 2026 and beyond.

The announcement that Vera Rubin is already in full production carries significant implications. Bringing six concurrent chip designs from development through manufacturing in a coordinated fashion demonstrates both Nvidia’s engineering prowess and TSMC’s execution capability. For an AI platform expected to begin customer deployments in the second half of 2026, production must be ramping now to build sufficient inventory.

The six chips comprising Vera Rubin span the complete technology stack required for modern AI data centers. The Vera CPU provides general-purpose processing to complement specialized AI acceleration. The Rubin GPU represents the next generation of Nvidia’s flagship AI processor, incorporating architecture improvements and manufacturing node advances that will determine whether the company can maintain its performance leadership.

Supporting these primary computing elements are the NVLink 6 switch, which provides high-speed interconnection between GPUs; the ConnectX-9 SuperNIC, which handles high-bandwidth network traffic; the BlueField-4 data processing unit, which offloads infrastructure tasks from the main processors; and the Spectrum-6 Ethernet switch, which manages data center networking. This platform approach lets Nvidia optimize the entire computing stack as a single system rather than designing and selling individual components in isolation.

The shift from the Blackwell to the Rubin architecture reflects Nvidia’s relentless pace of innovation in AI computing. Each generation brings measurable improvements in performance, efficiency, and capability, though the company faces the fundamental challenge that AI training and inference demands continue to grow faster than hardware capabilities advance. Narrowing the gap between customer needs and available compute requires both architectural innovation and manufacturing process improvements.

Huang’s presentation emphasized what Nvidia calls physical AI: AI systems, such as robots and autonomous vehicles, that perceive and act in the real world. Nvidia’s approach is to train these models in virtual environments using synthetic data before deploying them in real-world applications. This addresses the fundamental challenge that training AI systems for physical tasks requires massive amounts of real-world data that may be expensive, dangerous, or impractical to collect. Simulation allows AI models to experience millions of scenarios safely and inexpensively before facing actual operating conditions.

The presentation also showcased autonomous vehicle applications and robotics, with Huang joined on stage by small robots that generated considerable audience enthusiasm. These demonstrations serve strategic purposes beyond entertainment, signaling Nvidia’s push to expand its AI platform beyond data centers into edge devices, vehicles, and robotic systems. The company sees these emerging markets as crucial growth opportunities as its core data center business matures.

Nvidia’s $500 billion order backlog, disclosed during the presentation, puts the scale of demand in perspective. With annual revenues approaching $200 billion based on recent quarterly results, the backlog represents roughly two and a half years of sales at current run rates. This extraordinary demand creates both opportunity and risk, as any execution missteps or competitive threats could significantly affect the company’s trajectory.

The competitive landscape continues evolving as established semiconductor companies and new entrants attempt to challenge Nvidia’s position. AMD has invested heavily in its Instinct accelerator platform, announcing new high-end chips at CES that target the same data center applications as Nvidia’s offerings. Meanwhile, cloud service providers including Amazon, Google, and Microsoft have developed custom AI chips to reduce dependence on external suppliers.

Intel’s ongoing struggles in the AI accelerator market have created space for Nvidia to dominate, though the U.S. government’s equity stake in Intel, taken under the Trump administration, could provide resources and strategic support for a revitalization effort. Chinese companies, including Huawei and various startups, have also invested in AI chip development, though U.S. export restrictions limit their access to cutting-edge manufacturing technology.

The regulatory environment surrounding AI chip exports, particularly to China, adds complexity to Nvidia’s business. Recent reports indicate the Trump administration has authorized sales of more advanced chips to Chinese customers, potentially reopening markets that had been restricted. However, the geopolitical situation remains fluid, and export policies could change based on broader U.S.-China relations.

Nvidia’s stock valuation reflects investor confidence that the company can sustain its growth trajectory despite emerging competition and concerns about market saturation. The company recently became the first to reach a $5 trillion market capitalization, making it the most valuable public company in the world. A valuation of that size implies expectations of continued revenue and earnings growth well into the future.

For the technology industry broadly, Nvidia’s continued success validates massive investments in AI infrastructure. Putting Vera Rubin into full production now signals that Nvidia expects demand for AI computing capacity to hold up through at least late 2026. Data center operators, cloud providers, and enterprise customers continue placing orders for capacity that won’t be delivered for months, indicating confidence that AI applications will justify the expense.

Because the platform is not scheduled to deploy until the second half of 2026, customers ordering systems today face extended wait times. Whether this reflects component shortages or ordinary product-cycle timing remains unclear. If shortages are the cause, the lag between order and delivery would indicate that AI infrastructure remains supply-constrained despite massive capacity additions across the industry.