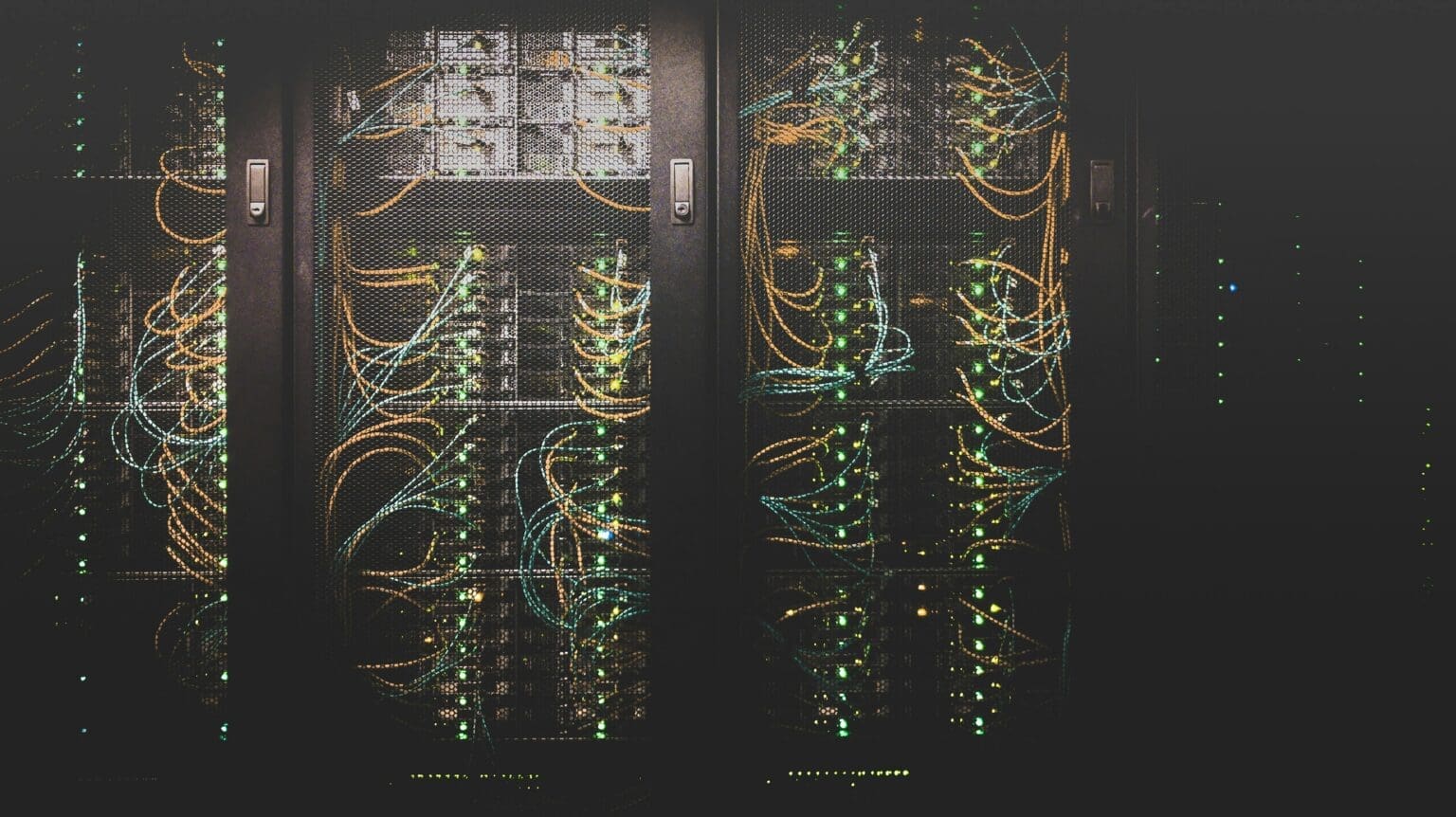

New reporting highlights how companies across the AI stack are channeling enormous capital into infrastructure: chips, server fleets, networking, and the physical realities of compute (power delivery, cooling, and real estate).

This capex wave is increasingly driven by demand for always-available inference (not just periodic training), which changes capacity planning: operators need predictable utilization, high uptime, and geographically distributed compute to cut latency.

The “AI infrastructure boom” is also reshaping vendor strategies: hardware vendors, data-center developers, and platform companies are converging into a single supply chain in which bottlenecks (power transformers, grid interconnects, liquid cooling) can be as limiting as GPU supply.