The world of robotics has become an integral part of modern technology, influencing industries ranging from manufacturing to healthcare, agriculture, and even entertainment. At its core, robotics programming is the bridge that allows machines to perform tasks with precision and intelligence, making them valuable assets in automating repetitive or complex functions. For anyone looking to enter this exciting field, understanding the basics of programming robotic systems is an essential first step.

Robotics programming involves writing code that controls robots. These robots may vary in their complexity, from simple robotic arms in assembly lines to sophisticated humanoid robots capable of walking, talking, and recognizing objects in their surroundings. While the hardware is important, the software that drives these machines is what enables them to perform tasks efficiently, interact with their environment, and adapt to new situations. Robotics programming offers a blend of computer science, engineering, and mathematics, making it a highly interdisciplinary field.

Key Components of a Robotics System

Before diving into robotics programming, it is essential to understand the fundamental components of a robotic system. A typical robot consists of several elements, each playing a critical role in the machine’s functionality:

- Sensors: Sensors are the eyes and ears of a robot. They allow the machine to perceive its environment by collecting data, such as distance, light, temperature, or pressure. Common sensors include ultrasonic sensors for measuring distance, cameras for visual input, and gyroscopes for balance and orientation.

- Actuators: Actuators are the muscles of a robot. These devices convert electrical signals into physical movement. Examples of actuators include motors, servos, and hydraulic systems. Without actuators, a robot cannot move or manipulate objects in its environment.

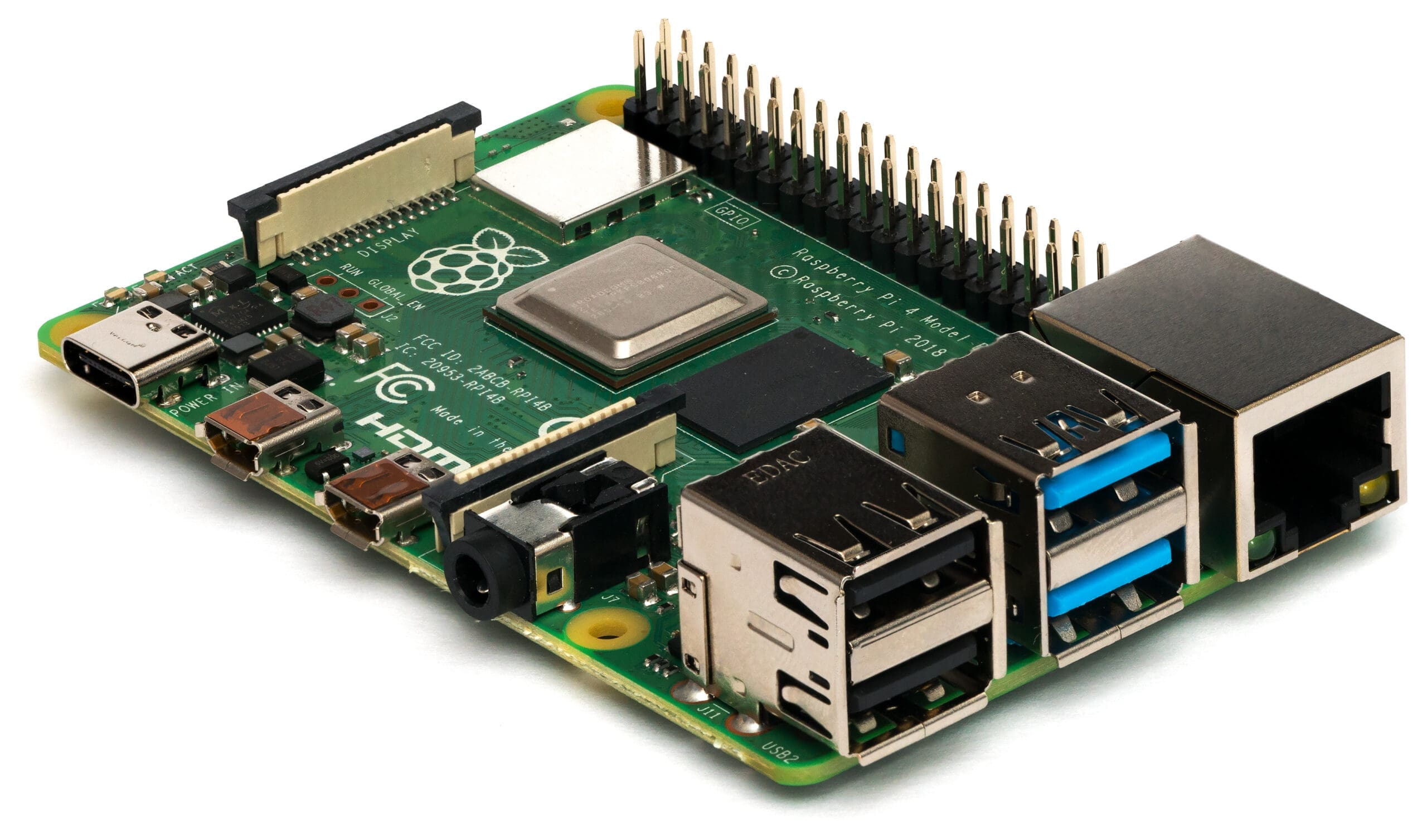

- Control Systems: The control system serves as the brain of the robot, processing the data from sensors and sending instructions to actuators based on that data. It is usually composed of microcontrollers or computers running a specific operating system or firmware designed for robotics.

- Power Supply: Robots require energy to function, typically supplied by batteries or direct electrical connections. The choice of power supply depends on the robot’s size, purpose, and mobility requirements.

- End Effectors: These are the tools or devices attached to the end of a robotic arm, such as grippers, suction cups, or welding torches. The type of end effector determines what task the robot can perform.

- Communication Interface: Modern robots often interact with external systems or humans through various communication protocols like Wi-Fi, Bluetooth, or even cellular networks. The communication interface allows for remote control and data exchange.

Programming Languages for Robotics

One of the first decisions you need to make when getting started with robotics programming is choosing a programming language. Not all languages are equally suitable for every robotics application, so it’s important to select one that aligns with your goals and the platform you’re using.

Python

Python is one of the most popular programming languages for robotics, particularly among beginners. Its simplicity, readability, and extensive libraries make it an ideal choice for those just getting started. Python bindings for the Robot Operating System (ROS), such as rospy in ROS 1 and rclpy in ROS 2, and the OpenCV computer vision library provide pre-built functionality, allowing programmers to focus on higher-level logic rather than low-level details. Python is also convenient for quickly prototyping and testing code on real robots.

C++

C++ is another major player in the world of robotics programming. While it is more complex than Python, C++ offers greater control over hardware resources and is known for its speed and efficiency. It is the go-to language for many industrial robotics applications where performance is critical. Additionally, ROS, one of the most widely used platforms for building robot software, supports both Python and C++, but its core components are written in C++.

Java

Java is another versatile language used in robotics, particularly in applications where object-oriented programming and portability are crucial. Java’s platform independence allows the code to run on various systems without modification, making it useful for cross-platform robotics development. Java is commonly used in mobile robotics and simulations, and it also powers a number of robotic frameworks such as leJOS, used for programming LEGO Mindstorms robots.

MATLAB

While not a traditional programming language in the same sense as Python or C++, MATLAB is heavily used in the robotics field, especially for simulation, control system design, and algorithm development. MATLAB’s integration with hardware allows for easy data analysis, simulation, and prototyping, making it a powerful tool for academic research and complex robotic applications.

Other Languages

There are several other languages and platforms worth considering, depending on your project. For example, Arduino boards, programmed in a C/C++-based language, are widely used for simple robots and embedded systems, while LabVIEW, a graphical programming environment, is popular in industrial automation and testing.

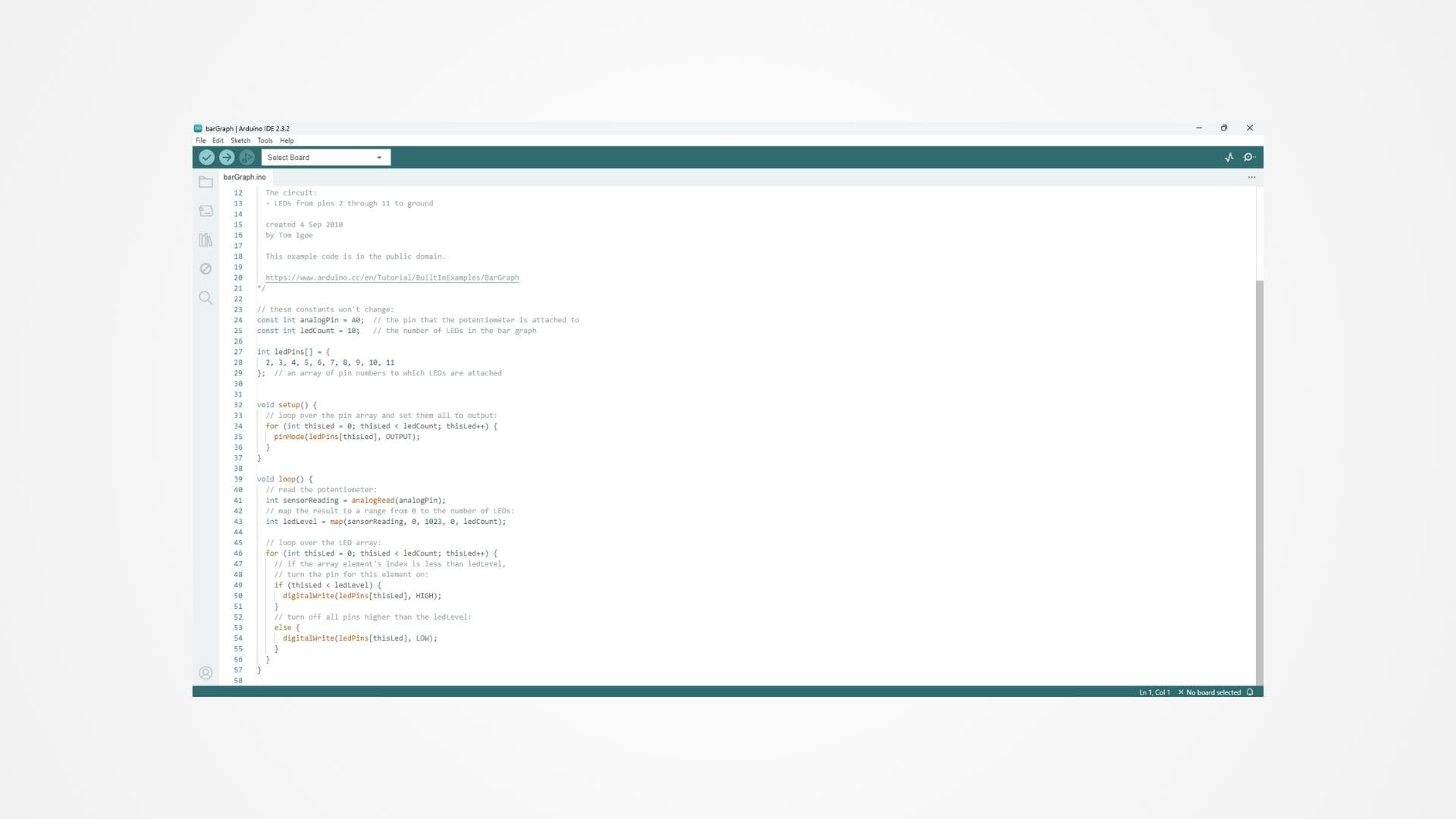

Setting Up Your Development Environment

Once you’ve selected a programming language, the next step is setting up a development environment for robotics programming. The environment will vary based on your language of choice, but generally, you’ll need a few key components:

- Integrated Development Environment (IDE): An IDE helps you write, debug, and run your code. For Python, IDEs like PyCharm or Visual Studio Code are popular. For C++, you might use Eclipse or Microsoft Visual Studio. Most IDEs offer features such as code completion, syntax highlighting, and debugging tools.

- Simulator: If you don’t have a physical robot to test your code on, simulators are incredibly useful. Simulators like Gazebo or V-REP (now CoppeliaSim) allow you to create virtual robots and environments where you can test your code before deploying it on real hardware.

- Robot Operating System (ROS): ROS is a middleware that provides essential services for robotics development, such as hardware abstraction, device control, and communication between different components. It’s supported by both Python and C++, and it’s considered an industry standard for building scalable robotic systems.

Understanding Robotics Architecture and Design Principles

In robotics programming, understanding the architectural and design principles that govern the development of robotic systems is vital. Whether you’re working on simple projects, such as creating a robot that navigates through a room, or more advanced tasks like designing an industrial robot for assembly line operations, having a structured approach to system design can significantly improve the efficiency and effectiveness of your project.

A robotic system’s architecture consists of both hardware and software, carefully integrated to meet the functional requirements of the robot. This integration ensures the robot’s ability to perceive its environment, process data, make decisions, and execute actions. In this section, we’ll explore the different layers and design patterns that form the backbone of robotics programming.

Layers of a Robotic System

A typical robotic system can be broken down into several layers, each responsible for different tasks within the system. Understanding these layers helps programmers design efficient, modular, and scalable systems that can be adapted for various applications.

1. Perception Layer

The perception layer is responsible for gathering data from the robot’s sensors and converting that raw information into a format that the robot can use for decision-making. This layer typically handles tasks such as object detection, obstacle avoidance, and environmental mapping. Programming the perception layer often involves working with data from a variety of sensors, including cameras, infrared sensors, LIDAR (Light Detection and Ranging), and accelerometers.

Key programming challenges in the perception layer include managing noisy data, ensuring real-time performance, and implementing algorithms for sensor fusion — the process of combining data from multiple sensors to create a cohesive understanding of the environment.
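To make sensor fusion concrete, here is a minimal sketch of a complementary filter, one of the simplest fusion techniques (Kalman filters are a common, more sophisticated alternative). It assumes a stationary robot tilted by 10 degrees, a gyroscope with a made-up constant bias of 0.5 degrees per second, and an accelerometer that sees gravity on its x and z axes; all names and numbers are illustrative.

```python
import math


def accel_tilt_deg(ax: float, az: float) -> float:
    """Tilt angle (degrees) implied by the gravity vector the accelerometer sees.

    Assumes tilt about the y axis, so gravity appears on the x and z axes.
    """
    return math.degrees(math.atan2(ax, az))


def complementary_filter(angle_deg: float, gyro_rate_dps: float,
                         accel_angle_deg: float, dt: float,
                         alpha: float = 0.98) -> float:
    """Blend an integrated gyro angle (smooth, but drifts) with an
    accelerometer angle (noisy, but drift-free)."""
    gyro_estimate = angle_deg + gyro_rate_dps * dt  # integrate angular rate
    return alpha * gyro_estimate + (1.0 - alpha) * accel_angle_deg


# Simulated stationary robot: true tilt 10 degrees. Because it is not
# rotating, the gyro's 0.5 deg/s reading is pure bias.
true_tilt = 10.0
gyro_reading = 0.5
dt = 0.01
accel_angle = accel_tilt_deg(math.sin(math.radians(true_tilt)),
                             math.cos(math.radians(true_tilt)))

fused = true_tilt       # complementary-filter estimate
gyro_only = true_tilt   # naive gyro integration, for comparison
for _ in range(2000):   # 20 seconds of samples
    fused = complementary_filter(fused, gyro_reading, accel_angle, dt)
    gyro_only += gyro_reading * dt

print(round(fused, 2), round(gyro_only, 2))
```

After 20 simulated seconds the fused estimate stays within a fraction of a degree of the true tilt, while pure gyro integration has drifted by about 10 degrees. The `alpha` value trades responsiveness against drift rejection and would need tuning on real hardware.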

Common tools used in the perception layer include:

- OpenCV for computer vision tasks

- PCL (Point Cloud Library) for 3D spatial data

- SLAM (Simultaneous Localization and Mapping) algorithms for mapping and navigation

2. Decision-Making Layer

The decision-making layer, often referred to as the “brain” of the robot, processes the information gathered by the perception layer to make decisions about how the robot should interact with its environment. This layer is responsible for path planning, task scheduling, and behavior control.

One of the most critical aspects of programming the decision-making layer is designing algorithms that allow the robot to achieve its objectives while accounting for constraints such as obstacles, energy consumption, and time. There are several approaches to decision-making in robotics, including:

- Finite State Machines (FSM): A popular control architecture for managing the robot’s behavior based on different states (e.g., idle, moving, picking up an object).

- Rule-based Systems: Involves programming the robot with a set of predefined rules that guide its actions based on sensor input.

- Artificial Intelligence and Machine Learning: For more complex robots, AI and machine learning techniques are increasingly used to allow robots to learn from their experiences and improve their decision-making over time.
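As an illustration of the finite-state-machine approach, the sketch below models the three example states mentioned above (idle, moving, picking up an object). The boolean inputs `target_visible`, `at_target`, and `holding_object` are hypothetical stand-ins for whatever the perception layer actually reports.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    MOVING = auto()
    PICKING_UP = auto()


def next_state(state: State, target_visible: bool, at_target: bool,
               holding_object: bool) -> State:
    """Return the next state given the current state and sensor-derived flags."""
    if state is State.IDLE:
        # Start moving as soon as a target is seen.
        return State.MOVING if target_visible else State.IDLE
    if state is State.MOVING:
        if not target_visible:
            return State.IDLE  # lost the target: stop and wait
        return State.PICKING_UP if at_target else State.MOVING
    if state is State.PICKING_UP:
        # Stay in the pick-up state until the gripper reports success.
        return State.IDLE if holding_object else State.PICKING_UP
    raise ValueError(f"unhandled state: {state}")


# Feed a short, made-up sequence of sensor readings through the machine.
readings = [
    (False, False, False),  # nothing in view
    (True, False, False),   # target spotted
    (True, True, False),    # arrived at target
    (True, True, True),     # object grasped
]
state = State.IDLE
trace = []
for target_visible, at_target, holding_object in readings:
    state = next_state(state, target_visible, at_target, holding_object)
    trace.append(state.name)
print(trace)  # ['IDLE', 'MOVING', 'PICKING_UP', 'IDLE']
```

Keeping every transition in one function makes it easy to see, and to test, exactly which conditions move the robot between behaviors.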

The decision-making layer is typically where advanced programming concepts, such as algorithms for search and optimization, machine learning, and real-time computing, come into play.

3. Control Layer

The control layer interfaces directly with the robot’s actuators and motors, converting high-level decisions from the decision-making layer into low-level commands that execute the robot’s movements. In robotics programming, this layer is responsible for trajectory generation, movement control, and maintaining balance or stability in dynamic environments.

A key challenge in the control layer is achieving real-time performance. Robots, especially those operating in dynamic environments, need to react to changes quickly and accurately. Real-time control algorithms ensure that the robot’s movements are smooth, coordinated, and safe. Popular control algorithms include:

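- PID Control (Proportional-Integral-Derivative): A classic feedback controller that computes a correction from the error between a desired setpoint and the measured value, combining terms proportional to the error, to its accumulated sum (integral), and to its rate of change (derivative).

- Model Predictive Control (MPC): A more advanced technique that uses a dynamic model of the robot to predict its behavior over a short time horizon, chooses control inputs by solving an optimization problem, and repeats this at every control step. It is used where accurate dynamic modeling matters, such as in humanoid robots or drones.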
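A minimal PID controller can be sketched in a few lines. The plant below is a made-up first-order model of a motor's speed (time constant `tau`), and the gains are illustrative values chosen for this toy model, not tuning recommendations. The sketch omits features real controllers usually need, such as integral anti-windup, output limits, and derivative filtering.

```python
class PID:
    """Textbook PID controller; no anti-windup, output limits, or filtering."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first update

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt                      # I: accumulated error
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt  # D: rate of change
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Toy plant: motor speed follows the command with a first-order lag.
tau = 0.5   # seconds (hypothetical)
dt = 0.01   # control period in seconds
speed = 0.0
pid = PID(kp=2.0, ki=4.0, kd=0.01)
for _ in range(1000):  # 10 simulated seconds
    command = pid.update(setpoint=1.0, measurement=speed, dt=dt)
    speed += (command - speed) * dt / tau  # Euler step of tau * dv/dt = u - v
print(round(speed, 3))
```

The integral term is what removes the steady-state error here: with proportional action alone, this plant would settle below the setpoint.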

The control layer often relies heavily on mathematics, especially in fields such as kinematics, dynamics, and control theory. As such, programming in this layer often requires a solid understanding of mathematical modeling and optimization.

Common Robotics Frameworks and Middleware

As robots become more complex, robotics frameworks and middleware have emerged to simplify the development process. These frameworks provide pre-built components and libraries that handle common tasks such as hardware abstraction, communication between different modules, and the integration of various sensors and actuators.

Robot Operating System (ROS)

The Robot Operating System (ROS) is one of the most widely used frameworks in robotics programming. Contrary to its name, ROS is not an actual operating system but a middleware that provides essential services for the development of robotic applications. These services include hardware abstraction, communication management, and the ability to reuse code across different projects.

ROS is designed to be modular, allowing developers to write nodes (individual programs) that handle specific tasks such as navigation, perception, or manipulation. These nodes communicate with each other using a publish/subscribe mechanism, making it easy to develop complex robotic systems in a distributed and scalable manner.
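The publish/subscribe idea can be illustrated without ROS itself. The sketch below is a hypothetical, single-process message bus, not the ROS API: real ROS nodes typically run as separate processes and exchange typed messages asynchronously, whereas this toy delivers plain Python objects synchronously. The topic names `scan` and `stop` and the 0.3 m threshold are made up for the example.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class MessageBus:
    """Hypothetical single-process stand-in for ROS-style topics (not the ROS API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        """Register a callback to run for every message on `topic`."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        """Deliver `message` synchronously to every subscriber of `topic`."""
        for callback in self._subscribers[topic]:
            callback(message)


bus = MessageBus()
stop_requests = []  # stands in for a motor-driver node listening on "stop"


def obstacle_detector(ranges):
    """Perception 'node': request a stop if anything is closer than 0.3 m."""
    if min(ranges) < 0.3:
        bus.publish("stop", True)


bus.subscribe("scan", obstacle_detector)
bus.subscribe("stop", stop_requests.append)

bus.publish("scan", [1.2, 0.9, 2.5])   # clear path: no stop message
bus.publish("scan", [1.2, 0.25, 2.5])  # obstacle at 0.25 m: stop published
print(stop_requests)  # [True]
```

The publisher never needs to know who is listening, which is what allows nodes to be added or swapped without changing the ones already in place.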

One of the strengths of ROS is its extensive library of tools and packages, such as:

- MoveIt! for motion planning and manipulation

- Navigation Stack for autonomous navigation and mapping

- Gazebo for robot simulation and testing

ROS is also compatible with both Python and C++, making it flexible for a wide range of robotics applications.

Microsoft Robotics Developer Studio

Microsoft Robotics Developer Studio (MRDS) is a Windows-based platform that simplified robotics development by offering a visual programming environment alongside a traditional coding interface. It supported a wide variety of sensors and actuators and could be used to control both physical robots and simulated robots in a virtual environment.

MRDS was aimed largely at education, hobbyists, and researchers working on experimental projects. Microsoft has since discontinued it, however, so it is mainly of historical interest today; for new projects, actively maintained frameworks such as ROS are generally a better fit.

VEX Robotics Platform

For those interested in educational or competition-based robotics, the VEX Robotics Platform offers a powerful, beginner-friendly environment. The platform includes a proprietary development environment, VEXcode, that supports both block-based (for beginners) and text-based (for advanced users) programming.

VEX is commonly used in high school and university robotics competitions, where teams design, build, and program robots to perform various tasks. The VEX platform provides an excellent introduction to key robotics concepts while maintaining simplicity for students and educators.

Simulation in Robotics Programming

Building and testing a robot in the real world can be expensive and time-consuming. This is where simulation comes in, allowing developers to prototype, test, and debug their robotic systems in virtual environments before deploying them on actual hardware.

Gazebo

As mentioned earlier, Gazebo is a powerful robot simulation tool that integrates seamlessly with ROS. It provides a robust physics engine, allowing for realistic simulation of robot movement, sensor input, and interaction with the environment. Gazebo supports a wide range of robot models and can simulate everything from simple wheeled robots to complex humanoids.

Webots

Webots is another popular open-source robotics simulator that supports a wide range of robot platforms and sensors. It provides a user-friendly interface and includes features for simulating dynamic environments, making it a great tool for both beginners and advanced users.

Using simulators like Gazebo and Webots, developers can test algorithms, debug code, and refine their designs before committing to physical prototypes, saving time and resources in the process.

Challenges in Robotics Programming

Despite the availability of advanced tools and frameworks, robotics programming presents several challenges that developers must overcome. Some of these challenges include:

- Real-time performance: Robots must process sensor data and execute control commands in real-time to interact effectively with their environment. Ensuring that your system meets real-time requirements is crucial for success.

- Multi-threading and concurrency: Robotics systems often require parallel processing to handle multiple tasks simultaneously, such as perception, control, and decision-making. Programming for concurrency adds complexity but is essential for responsive robotic systems.

- Error handling: In real-world environments, sensors can fail, actuators can jam, or external conditions can change unexpectedly. Robust error handling and recovery mechanisms are necessary to ensure the reliability of the system.
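One common error-handling pattern is to validate every sensor reading, retry a bounded number of times, and fall back to a safe action when no valid data arrives. The sketch below assumes a hypothetical distance-sensor driver that raises `OSError` on bus failures and may occasionally return NaN; the valid range of 0.02 to 4.0 m and the speed values are illustrative.

```python
import math
from typing import Callable, Iterator, Optional, Union


def read_distance(read_sensor: Callable[[], float], retries: int = 3,
                  min_m: float = 0.02, max_m: float = 4.0) -> Optional[float]:
    """Return a validated distance in meters, or None if every attempt fails."""
    for _ in range(retries):
        try:
            value = read_sensor()
        except OSError:
            continue  # transient I/O error: try again
        if math.isfinite(value) and min_m <= value <= max_m:
            return value
        # Otherwise the reading is NaN or out of range: discard it and retry.
    return None


def safe_speed(distance: Optional[float]) -> float:
    """Fail safe: stop when the distance is unknown or an obstacle is close."""
    if distance is None or distance < 0.5:
        return 0.0
    return 0.3  # cruise speed in m/s (illustrative)


def make_fake_sensor(outcomes: list) -> Callable[[], float]:
    """Hypothetical test double that replays exceptions and values in order."""
    it: Iterator[Union[float, Exception]] = iter(outcomes)

    def read() -> float:
        item = next(it)
        if isinstance(item, Exception):
            raise item
        return item

    return read


flaky = make_fake_sensor([OSError("bus timeout"), float("nan"), 1.5])
dead = make_fake_sensor([OSError("no response")] * 3)
print(safe_speed(read_distance(flaky)))  # 0.3: third attempt succeeds
print(safe_speed(read_distance(dead)))   # 0.0: no valid data, so stop
```

Returning `None` instead of a guessed value forces the caller to decide explicitly what a missing reading means, and here that decision is to stop.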

Integrating Artificial Intelligence in Robotics Programming

As robotics technology has advanced, so too has the demand for robots to perform tasks that require more than just pre-programmed actions. To navigate, interact with, and adapt to dynamic environments, robots increasingly rely on Artificial Intelligence (AI). By integrating AI into robotics programming, we empower machines to learn from their experiences, make decisions based on complex data, and respond intelligently to unforeseen challenges.

AI in robotics typically manifests in areas such as computer vision, natural language processing, machine learning, and autonomous decision-making. These technologies allow robots to perform tasks that would otherwise require human intelligence. In this section, we’ll dive into the role of AI in robotics, focusing on how programming enables machines to learn, plan, and interact autonomously.

Machine Learning in Robotics

Machine learning (ML) is a subset of AI that has significantly impacted robotics. ML allows robots to learn from data, identify patterns, and improve their performance over time. Instead of programming every possible action, developers can build systems that adapt based on their environment and experience.

Supervised Learning

In supervised learning, a robot is trained on labeled data, meaning that for each input, the correct output is known. For example, a robot can be trained to recognize different objects by being shown thousands of images of objects labeled with their correct identities. The robot uses this data to learn the features that distinguish one object from another.

Supervised learning is widely used in robotics for tasks such as:

- Object recognition: Training a robot to identify objects, such as tools or parts, based on visual or sensor data.

- Speech recognition: Enabling robots to understand spoken commands by recognizing patterns in audio signals.

- Gesture recognition: Allowing robots to interpret human body language or gestures for more intuitive interaction.

To implement supervised learning in robotics, Python libraries such as TensorFlow and PyTorch are commonly used. These frameworks allow developers to build, train, and deploy neural networks, which are at the heart of most machine learning models.
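Training a neural network with TensorFlow or PyTorch needs more setup than fits here, so the sketch below illustrates the core supervised-learning idea (learn from labeled examples, then predict labels for new inputs) with a much simpler nearest-centroid classifier in plain Python. The feature values (length in cm, mass in g) and the labels `bolt` and `washer` are invented.

```python
from math import dist
from statistics import fmean


def train_centroids(samples):
    """Compute the mean feature vector (centroid) for each label.

    samples: iterable of (features, label) pairs, where features is a tuple.
    """
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {label: tuple(fmean(column) for column in zip(*rows))
            for label, rows in by_label.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Labeled training data: (length_cm, mass_g) -> part type (made-up numbers).
training = [
    ((5.0, 12.0), "bolt"), ((5.5, 13.0), "bolt"), ((4.8, 11.5), "bolt"),
    ((1.0, 2.0), "washer"), ((1.2, 2.5), "washer"), ((0.9, 1.8), "washer"),
]
model = train_centroids(training)
print(predict(model, (5.2, 12.4)))  # bolt
print(predict(model, (1.1, 2.2)))   # washer
```

Real object recognition works on high-dimensional image data, where learned features such as those from a neural network matter far more than in this two-feature toy.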

Reinforcement Learning

Unlike supervised learning, where a robot learns from labeled data, reinforcement learning (RL) involves the robot learning through trial and error. The robot is placed in an environment and must take actions to achieve a specific goal. After each action, it receives feedback in the form of rewards or penalties, which it uses to adjust future behavior.

Reinforcement learning is particularly effective in robotics for tasks where the robot must learn from its own experiences, such as:

- Navigation: Teaching a robot to navigate through a maze or avoid obstacles in real-time.

- Manipulation: Learning to handle and manipulate objects, such as picking up fragile items without breaking them.

- Autonomous driving: Enabling self-driving cars to make decisions in dynamic environments, such as reacting to pedestrians, traffic lights, and other vehicles.

Popular frameworks for implementing RL in robotics include OpenAI Gym (now maintained by the Farama Foundation as Gymnasium), which provides simulated environments for training agents, and RLlib, a scalable reinforcement learning library built on Ray.
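To show the reward-driven trial-and-error loop without Gym or RLlib, here is a minimal sketch of tabular Q-learning, one classic RL algorithm, on a made-up one-dimensional corridor: the robot starts in cell 0, can step left or right, earns +1 for reaching the last cell, and pays a small penalty for every other step. The environment, rewards, and hyperparameters are all illustrative.

```python
import random

N_CELLS = 6            # cells 0..5; cell 5 is the goal
ACTIONS = (-1, +1)     # index 0 = step left, index 1 = step right


def step(cell, move):
    """Toy environment: returns (next_cell, reward, done)."""
    next_cell = min(max(cell + move, 0), N_CELLS - 1)  # walls at both ends
    done = next_cell == N_CELLS - 1
    reward = 1.0 if done else -0.01  # small cost per step favors short paths
    return next_cell, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]  # Q-table: q[cell][action_index]
    for _ in range(episodes):
        cell, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit, occasionally explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[cell][i])
            next_cell, reward, done = step(cell, ACTIONS[a])
            # Q-learning update toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_cell])
            q[cell][a] += alpha * (target - q[cell][a])
            cell = next_cell
    return q


q_table = train()
policy = ["right" if q_table[c][1] > q_table[c][0] else "left"
          for c in range(N_CELLS - 1)]
print(policy)  # expected: "right" in every non-goal cell
```

Nothing in the code says "go right"; that behavior emerges purely from the rewards the robot experiences, which is the essential difference from supervised learning.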

Unsupervised Learning

While less common in robotics, unsupervised learning allows robots to learn from unlabeled data. In this approach, the robot is not given any explicit instructions about the correct output. Instead, it must find patterns or relationships in the data on its own. Unsupervised learning can be used in robotics for clustering similar objects, anomaly detection, or discovering new strategies for accomplishing tasks.
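Clustering is the easiest of these to sketch. Below is a minimal k-means implementation in plain Python; it receives unlabeled 2-D points (imagine, hypothetically, the estimated positions of objects on a table) and groups them without being told what the groups are. The data and the naive initialization (the first k points) are illustrative assumptions; practical implementations use better initialization such as k-means++.

```python
from math import dist
from statistics import fmean


def kmeans(points, k, iterations=20):
    """Return (centroids, clusters) after a fixed number of k-means iterations."""
    centroids = list(points[:k])  # naive initialization: first k points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points
        # (an empty cluster keeps its previous centroid).
        centroids = [tuple(fmean(axis) for axis in zip(*cluster)) if cluster
                     else centroids[i]
                     for i, cluster in enumerate(clusters)]
    return centroids, clusters


# Unlabeled 2-D positions in meters; no labels are given to the algorithm.
points = [(0.1, 0.2), (5.0, 5.1), (0.0, 0.0), (0.2, 0.1), (5.2, 4.9), (4.9, 5.0)]
centroids, clusters = kmeans(points, k=2)
print([len(c) for c in clusters])  # [3, 3]
```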

Autonomous Navigation and Path Planning

One of the most prominent applications of AI in robotics is autonomous navigation. Whether it’s a robot vacuum cleaning your home or an autonomous vehicle driving on the road, these systems need to navigate complex, dynamic environments without human intervention.

Simultaneous Localization and Mapping (SLAM)

SLAM is a family of techniques central to autonomous navigation. It allows a robot to build a map of an unknown environment while simultaneously tracking its own location within that map. SLAM combines sensor data (e.g., from LIDAR, cameras, or sonar) with probabilistic models to build and update the map in real time, which the robot then uses for navigation and obstacle avoidance.

Programming SLAM algorithms can be complex, but ROS offers ready-made SLAM packages such as gmapping and cartographer, which can be easily integrated into your project.

Path Planning

Path planning is the process of determining the best route for a robot to take from one point to another while avoiding obstacles. Path planning algorithms consider factors like distance, speed, and safety to optimize the robot’s movement. Some popular algorithms used in robotics path planning include:

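- A*: A graph-based search algorithm that finds the shortest path from the robot’s starting point to its destination, provided its heuristic (an estimate of the remaining distance) never overestimates the true cost.

- RRT (Rapidly-exploring Random Tree): A sampling-based algorithm suited to path planning in high-dimensional configuration spaces, such as those of multi-joint robot arms, humanoid robots, or drones. It tends to find a feasible path quickly, but not necessarily the shortest one.

- Dijkstra’s Algorithm: A graph-based algorithm that guarantees the shortest path on graphs with non-negative edge costs. Because it uses no heuristic, it typically explores more of the graph than A* and can therefore be slower.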
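A compact A* search on a small occupancy grid shows how the heuristic guides the search. This sketch assumes 4-connected moves with unit cost and uses Manhattan distance as the heuristic, which never overestimates in that setting; the grid itself is made up.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (blocked) cells.

    Returns the path as a list of (row, col) cells, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-way moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:  # walk parent links back to start
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if g > g_cost[current]:
            continue  # stale heap entry; a cheaper route was found later
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = current
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],   # wall with a gap on the right
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
print(path)
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 3), (2, 3), (2, 2), (2, 1), (2, 0)]
```

Replacing `h` with a function that always returns 0 turns the same code into Dijkstra's algorithm, which makes the relationship between the two easy to see.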

Many of these algorithms are available as ROS packages, making them accessible to developers without having to code them from scratch.

Human-Robot Interaction (HRI)

The field of Human-Robot Interaction (HRI) explores how robots and humans can collaborate effectively. As robots become more common in workplaces, homes, and public spaces, designing systems that allow intuitive and seamless interactions with humans is becoming a priority.

Natural Language Processing (NLP)

One of the most important technologies in HRI is Natural Language Processing (NLP), which enables robots to understand and respond to spoken or written language. NLP allows users to issue commands, ask questions, or even have casual conversations with robots. Popular NLP frameworks include NLTK (Natural Language Toolkit) and spaCy, both of which are compatible with Python and can be integrated into robotic systems.

Gesture and Emotion Recognition

Beyond verbal communication, robots can be programmed to recognize and respond to human gestures or facial expressions. By using AI-driven computer vision algorithms, robots can interpret human emotions or signals such as waving, pointing, or smiling, allowing for more intuitive interaction.

Collaborative Robots (Cobots)

In industrial settings, collaborative robots (cobots) are designed to work alongside human workers safely. Cobots use sensors, AI, and advanced control algorithms to ensure they can operate in close proximity to humans without causing harm. For example, a cobot working on an assembly line might slow down or stop if it detects a human worker too close to its workspace.
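The slow-down-or-stop behavior can be sketched as a simple speed-scaling rule based on the measured distance between the robot and the nearest person. The thresholds here (stop within 0.5 m, full speed beyond 1.5 m, linear in between) are purely illustrative; real systems derive them from a risk assessment and applicable safety standards such as ISO/TS 15066, and typically enforce them in safety-rated controllers rather than ordinary application code.

```python
def speed_scale(distance_m: float, stop_m: float = 0.5, slow_m: float = 1.5) -> float:
    """Fraction of normal speed allowed given the distance to the nearest person."""
    if distance_m <= stop_m:
        return 0.0  # too close: protective stop
    if distance_m >= slow_m:
        return 1.0  # far enough: full speed
    # In between: scale speed linearly with distance.
    return (distance_m - stop_m) / (slow_m - stop_m)


for d in (2.0, 1.0, 0.4):
    print(d, speed_scale(d))  # 1.0, then 0.5, then 0.0
```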

The Future of Robotics Programming

Robotics programming is an evolving field, with rapid advancements in AI, machine learning, and hardware technologies pushing the boundaries of what robots can achieve. As autonomous robots become more prevalent, the next frontier in robotics will likely involve greater integration with AI, allowing robots to not only perform predefined tasks but also learn, adapt, and collaborate with humans in more meaningful ways.

Emerging trends in robotics programming include:

- Edge Computing: As robots become more intelligent, there’s a growing need to process data locally (on the robot) rather than relying on cloud-based systems. Edge computing allows robots to operate in real-time with minimal latency, even in areas with limited connectivity.

- Swarm Robotics: Inspired by biological systems, swarm robotics involves programming multiple robots to work together in a coordinated manner, often using simple rules. Swarm robotics has applications in fields like agriculture, disaster response, and space exploration.

- AI-driven Robotics: The convergence of robotics and AI will continue to drive innovation, enabling robots to perform tasks that require cognitive skills, such as problem-solving, planning, and reasoning.

Conclusion

Robotics programming is a dynamic and exciting field that offers a wide range of opportunities for both beginners and experienced developers. As we’ve explored, getting started with robotics involves understanding the basic components of a robot, selecting the right programming language, and using development environments and tools like ROS to streamline the process. From there, you can dive into more advanced topics, such as machine learning, autonomous navigation, and human-robot interaction, to create intelligent systems capable of adapting to their environment and working alongside humans.

The integration of AI has opened new possibilities in robotics, allowing machines to learn from data, interact with humans, and navigate complex environments autonomously. Whether you’re interested in building robots for industrial automation, healthcare, or education, the skills you develop in robotics programming will be invaluable as this technology continues to shape the future.