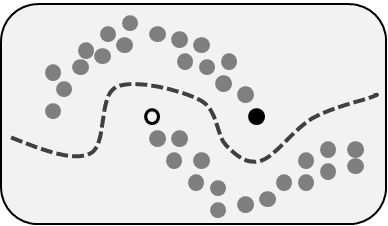

Semi-supervised learning is a machine learning approach that combines labeled and unlabeled data to build predictive models. It’s particularly valuable in situations where labeled data is scarce or expensive to obtain, but large amounts of unlabeled data are readily available. By leveraging a small amount of labeled data along with a larger pool of unlabeled data, semi-supervised learning can improve model accuracy without requiring extensive labeled datasets, making it an efficient and practical choice in fields like healthcare, natural language processing, and image recognition.

Semi-supervised learning sits between supervised and unsupervised learning. In supervised learning every training example has a label; in unsupervised learning none do. Semi-supervised learning draws on the “best of both worlds”: the labeled examples define the task, while the unlabeled examples reveal how the data is distributed, for example where natural clusters lie. This combination can enable accurate predictions with fewer labeled examples.

This article explores the fundamentals of semi-supervised learning, including how it works, the main types of semi-supervised learning techniques, and the benefits it offers in real-world applications.

Key Concepts in Semi-Supervised Learning

To understand semi-supervised learning, it’s essential to grasp the basic concepts and unique aspects that make this learning approach effective.

1. Labeled and Unlabeled Data

In semi-supervised learning, the dataset consists of two parts: a smaller portion of labeled data and a larger pool of unlabeled data. The labeled data provides guidance to the model, while the unlabeled data helps it generalize by identifying broader patterns and relationships within the dataset.

- Example: In medical imaging, a small set of images might be labeled by radiologists to indicate the presence or absence of a specific disease, while the remaining images are unlabeled. The labeled images teach the model what to look for, while the unlabeled images help it learn variations in data patterns.

2. The Role of Unlabeled Data in Learning

Many semi-supervised methods follow a similar pattern: the model first learns from the labeled data, forming a preliminary understanding of the task, and then applies this understanding to the unlabeled data to refine and expand its knowledge. Other methods, such as graph-based and generative approaches, use labeled and unlabeled data together from the start. In either case, integrating both kinds of data lets the model reach a more comprehensive understanding with fewer labeled examples.

- Example: In text classification, the model first learns from a labeled subset of documents (e.g., positive and negative reviews) and then applies what it has learned to categorize additional, unlabeled documents based on patterns it recognizes from the labeled examples.

3. Inductive vs. Transductive Learning

In semi-supervised learning, there are two main approaches: inductive learning and transductive learning.

- Inductive Learning: The model learns a general rule from the labeled and unlabeled data and applies this rule to new, unseen data. Inductive learning aims to create a model that generalizes beyond the training set.

- Example: A speech recognition system trained on a combination of labeled and unlabeled audio files would learn to recognize words and phrases and then apply this learning to new audio samples.

- Transductive Learning: The model focuses on labeling the specific unlabeled data within the dataset rather than generalizing to new data. This approach is useful when the goal is to label the current dataset accurately without the need for future generalization.

- Example: In document classification, a transductive model uses the labeled and unlabeled documents in a specific collection to assign labels to exactly those unlabeled documents; it does not produce a rule for classifying documents outside that collection.
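
The distinction shows up directly in scikit-learn's graph-based `LabelSpreading` estimator, which exposes both kinds of output: the `transduction_` attribute holds the labels assigned to the points seen during fitting (transductive), while `predict()` labels new points (inductive). The sketch below assumes toy two-moons data with three labels per class; the dataset and the parameter values (`n_neighbors=7`, `alpha=0.8`) are illustrative choices, not recommendations.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.semi_supervised import LabelSpreading

# Toy data: two interleaving half-moons, with only 3 labeled points per class
X, y = make_moons(n_samples=200, noise=0.05, random_state=0)
y_partial = np.full_like(y, -1)  # -1 marks unlabeled points (scikit-learn convention)
for cls in (0, 1):
    y_partial[np.flatnonzero(y == cls)[:3]] = cls

model = LabelSpreading(kernel="knn", n_neighbors=7, alpha=0.8, max_iter=100)
model.fit(X, y_partial)

# Transductive output: labels assigned to the points that were present during fit
transductive_labels = model.transduction_
print("transductive accuracy:", (transductive_labels == y).mean())

# Inductive use: the same fitted model labels points it has never seen
X_new, y_new = make_moons(n_samples=50, noise=0.05, random_state=1)
print("inductive accuracy:", (model.predict(X_new) == y_new).mean())
```

Graph methods like this are often used transductively, but as the last two lines show, the fitted graph can also be queried for new points.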

Types of Semi-Supervised Learning Techniques

Several techniques are used in semi-supervised learning, each leveraging labeled and unlabeled data in different ways. The main approaches include self-training, co-training, graph-based methods, and generative models, all of which allow the model to make use of unlabeled data to improve performance.

1. Self-Training

Self-training is one of the simplest and most commonly used semi-supervised learning methods. In self-training, the model is first trained on labeled data, creating an initial model. The model then uses this trained version to make predictions on the unlabeled data. Predictions that meet a confidence threshold are treated as pseudo-labels and are added to the labeled dataset, and the model is retrained with this expanded set. This process is repeated until no remaining unlabeled example meets the threshold or a maximum number of iterations is reached.

- Example: In a text classification task, a self-training model might begin with a small set of labeled reviews. It then assigns pseudo-labels to additional unlabeled reviews, treating high-confidence predictions as new labeled data to further train the model.
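
The self-training loop can be sketched from scratch in a few lines. This is a minimal illustration, assuming scikit-learn's `LogisticRegression` as the base model, a 0.9 confidence threshold, and synthetic data in place of reviews; `self_train` is a hypothetical helper, not a library function.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression


def self_train(X_lab, y_lab, X_unlab, threshold=0.9, max_iter=10):
    """Hypothetical helper: fit, pseudo-label confident points, refit."""
    model = LogisticRegression(max_iter=1000).fit(X_lab, y_lab)
    for _ in range(max_iter):
        if len(X_unlab) == 0:
            break  # every unlabeled point has been pseudo-labeled
        proba = model.predict_proba(X_unlab)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break  # nothing clears the threshold, so the loop has stabilized
        # Confident predictions become pseudo-labels in the training set
        pseudo = model.classes_[proba[confident].argmax(axis=1)]
        X_lab = np.vstack([X_lab, X_unlab[confident]])
        y_lab = np.concatenate([y_lab, pseudo])
        X_unlab = X_unlab[~confident]
        # Retrain on original labels plus pseudo-labels
        model = LogisticRegression(max_iter=1000).fit(X_lab, y_lab)
    return model, len(y_lab)


# Synthetic data: treat the first 20 rows as labeled, the rest as unlabeled
X, y = make_classification(n_samples=500, n_features=5, random_state=0)
model, n_used = self_train(X[:20], y[:20], X[20:])
print("training examples after self-training:", n_used)
```

Pseudo-labeled rows are never revisited here, so an early mistake stays in the training set; that is the error-accumulation risk discussed later under challenges.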

2. Co-Training

Co-training is an approach where two different models are trained on separate feature sets (views) of the same labeled data. Each model then labels the unlabeled data independently, and the high-confidence predictions from each model are used to train the other, enabling mutual learning. Co-training is effective when the data has two views that are each sufficient on their own to predict the target and that provide largely independent information about it, so that one model’s confident predictions give the other model genuinely new training examples.

- Example: In web page classification, one model could use the page’s text as features, while another model uses links on the page. Each model generates pseudo-labels for unlabeled pages, which are then used to further train both models, improving accuracy.
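
A minimal co-training sketch follows, assuming two Gaussian naive Bayes models and a synthetic dataset whose feature columns are simply split in half to stand in for two views (real views, such as page text and link text, would come from the data itself). The helpers `co_train` and `co_predict` are hypothetical. Each round, each model pseudo-labels its most confident unlabeled rows and hands them to the other view's training set; the final prediction multiplies the two models' class probabilities.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.naive_bayes import GaussianNB


def co_train(X1, X2, y, labeled, rounds=10, per_round=5):
    """Hypothetical co-training helper for two aligned views X1 and X2.

    `labeled` is a boolean mask of initially labeled rows. Each round, each
    model pseudo-labels its `per_round` most confident unlabeled rows and
    adds them to the *other* view's training set.
    """
    y_work = np.where(labeled, y, -1)  # -1 = unlabeled
    train1, train2 = labeled.copy(), labeled.copy()
    lab1, lab2 = y_work.copy(), y_work.copy()
    m1, m2 = GaussianNB(), GaussianNB()
    for _ in range(rounds):
        m1.fit(X1[train1], lab1[train1])
        m2.fit(X2[train2], lab2[train2])
        pool = np.flatnonzero(~(train1 | train2))  # rows neither view has used
        if len(pool) == 0:
            break
        # m1 teaches view 2, and m2 teaches view 1
        for model, X_view, train_other, lab_other in (
            (m1, X1, train2, lab2),
            (m2, X2, train1, lab1),
        ):
            proba = model.predict_proba(X_view[pool])
            order = np.argsort(proba.max(axis=1))[-per_round:]  # most confident
            top = pool[order]
            lab_other[top] = model.classes_[proba[order].argmax(axis=1)]
            train_other[top] = True
    # Final refit so both models include the last round's pseudo-labels
    m1.fit(X1[train1], lab1[train1])
    m2.fit(X2[train2], lab2[train2])
    return m1, m2


def co_predict(m1, m2, X1, X2):
    """Combine the views by multiplying their class probabilities."""
    return m1.classes_[(m1.predict_proba(X1) * m2.predict_proba(X2)).argmax(axis=1)]


X, y = make_classification(n_samples=400, n_features=8, n_informative=6,
                           n_redundant=0, random_state=0)
X1, X2 = X[:, :4], X[:, 4:]  # illustrative split of columns into two "views"
labeled = np.zeros(len(y), dtype=bool)
labeled[:30] = True
m1, m2 = co_train(X1, X2, y, labeled)
acc = (co_predict(m1, m2, X1[~labeled], X2[~labeled]) == y[~labeled]).mean()
print("accuracy on initially unlabeled rows:", acc)
```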

3. Graph-Based Methods

Graph-based methods are useful when the relationships between data points can be represented as a graph. In this approach, nodes represent the data points, and edges connect similar points, weighted by how similar they are. Labels are propagated from labeled nodes to their neighbors along these edges, so the model predicts labels for unlabeled nodes based on their similarity to labeled ones. This approach works well for tasks with complex, interconnected data, such as social networks or molecular structures.

- Example: In social media analysis, a graph-based model might use labeled posts from certain users to classify similar posts from connected users, spreading labels across the network based on shared features.
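
Label propagation, one common graph-based method, can be sketched with NumPy alone. This version assumes a fully connected graph with Gaussian (RBF) edge weights and an illustrative bandwidth `sigma`; each iteration replaces every node's label distribution with the weighted average of its neighbors' distributions and then resets the labeled nodes to their known labels.

```python
import numpy as np


def label_propagation(X, y, sigma=1.0, n_iter=200):
    """Iterative label propagation over a fully connected RBF graph.

    y uses -1 for unlabeled nodes; labeled nodes are clamped every iteration.
    """
    classes = np.unique(y[y >= 0])
    # Edge weights: Gaussian similarity between every pair of points
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-sq_dists / (2 * sigma**2))
    np.fill_diagonal(W, 0.0)
    T = W / W.sum(axis=1, keepdims=True)  # row-normalized transition matrix

    labeled = y >= 0
    Y0 = (y[labeled][:, None] == classes[None, :]).astype(float)  # one-hot labels
    F = np.full((len(y), len(classes)), 1.0 / len(classes))
    F[labeled] = Y0
    for _ in range(n_iter):
        F = T @ F          # each node takes a weighted average of its neighbors
        F[labeled] = Y0    # clamp the known labels
    return classes[F.argmax(axis=1)]


# Toy graph: two well-separated clusters with one labeled point each
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0, 0.3, (20, 2)), rng.normal(3, 0.3, (20, 2))])
y = np.full(40, -1)
y[0], y[20] = 0, 1
pred = label_propagation(X, y)
print(pred)
```

The dense pairwise matrix makes this O(n²) in memory, which is one source of the computational cost mentioned under challenges; practical implementations usually use sparse k-nearest-neighbor graphs instead.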

4. Generative Models

Generative models in semi-supervised learning attempt to model the distribution of both labeled and unlabeled data. They create a probabilistic model that explains how the data is generated, allowing the model to make inferences about the labels of the unlabeled data. Techniques like variational autoencoders (VAEs) and generative adversarial networks (GANs) are commonly used for this purpose, especially when dealing with high-dimensional data such as images.

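- Example: In image classification, a semi-supervised GAN trains its discriminator to assign each real image to one of the known classes and each generated image to an extra “fake” class. Learning to separate real from generated images on the unlabeled data also shapes the features the discriminator uses to classify real images, which helps it label the unlabeled ones.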
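
Deep generative models such as VAEs and GANs are too large for a short example, so the sketch below swaps in a classic and much simpler generative approach: a two-class Gaussian mixture fitted with expectation-maximization (EM). Labeled points keep their known class, while unlabeled points contribute through soft class probabilities, so both shape the estimated distributions. The one-dimensional data, the one-Gaussian-per-class assumption, and the helper names are illustrative.

```python
import numpy as np


def gaussian_pdf(x, mean, std):
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2 * np.pi))


def semi_supervised_em(x_lab, y_lab, x_unlab, n_iter=50):
    """EM for a 1-D, two-class Gaussian mixture (one Gaussian per class).

    Labeled points keep fixed one-hot responsibilities; unlabeled points
    receive soft responsibilities from the current model (E-step).
    """
    x_all = np.concatenate([x_lab, x_unlab])
    R_lab = np.eye(2)[y_lab]  # one-hot responsibilities for labeled points
    # Initialize parameters from the labeled data only
    prior = R_lab.mean(axis=0)
    mean = np.array([x_lab[y_lab == k].mean() for k in (0, 1)])
    std = np.array([x_lab.std()] * 2)  # shared starting width (assumption)
    for _ in range(n_iter):
        # E-step: class posteriors for the unlabeled points
        joint = prior * gaussian_pdf(x_unlab[:, None], mean, std)
        R_unlab = joint / joint.sum(axis=1, keepdims=True)
        R = np.vstack([R_lab, R_unlab])
        # M-step: re-estimate priors, means and stds from all responsibilities
        Nk = R.sum(axis=0)
        prior = Nk / Nk.sum()
        mean = (R * x_all[:, None]).sum(axis=0) / Nk
        std = np.sqrt((R * (x_all[:, None] - mean) ** 2).sum(axis=0) / Nk)
    return prior, mean, std, R_unlab.argmax(axis=1)


# Two overlapping classes; only two labeled points per class
rng = np.random.default_rng(0)
x0, x1 = rng.normal(-2, 1, 200), rng.normal(2, 1, 200)
x_lab = np.array([x0[0], x0[1], x1[0], x1[1]])
y_lab = np.array([0, 0, 1, 1])
x_unlab = np.concatenate([x0[2:], x1[2:]])
prior, mean, std, pred = semi_supervised_em(x_lab, y_lab, x_unlab)
print("estimated means:", mean)
```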

Each of these techniques has its advantages and limitations, and the choice of method often depends on the nature of the data, the complexity of the task, and the availability of labeled and unlabeled data.

Advantages of Semi-Supervised Learning

Semi-supervised learning offers several key advantages that make it a popular choice for tasks where fully labeled datasets are difficult or costly to obtain. Here are some of the main benefits:

1. Cost-Efficiency

Labeling data is often time-consuming and costly, especially in domains like healthcare, where labeling requires expert knowledge. Semi-supervised learning reduces the need for large labeled datasets by making effective use of unlabeled data, lowering the overall cost of model development.

- Example: In medical image analysis, semi-supervised models can combine a small set of labeled scans with a much larger pool of unlabeled scans, reducing the need for extensive expert labeling.

2. Improved Model Accuracy

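By combining labeled and unlabeled data, semi-supervised learning can achieve higher accuracy than models trained only on labeled data, provided the unlabeled data comes from the same distribution and the method’s assumptions (for example, that points in the same cluster share a label) hold for the task. The unlabeled data helps the model learn more about the underlying data distribution, resulting in more robust and generalizable predictions.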

- Example: In fraud detection, a semi-supervised model trained on labeled fraudulent transactions and a larger set of unlabeled transactions can capture more nuanced patterns, improving detection rates.

3. Scalability

Semi-supervised learning scales well because it can use unlabeled data, which is typically easier to obtain. As datasets grow, semi-supervised models can incorporate new data without requiring extensive labeling, making them adaptable to large-scale applications.

- Example: In speech recognition, semi-supervised learning allows models to scale to new languages and dialects by using readily available audio data with minimal manual transcription, facilitating adaptation to various linguistic contexts.

4. Better Generalization

Semi-supervised models benefit from broader generalization because they learn from a mixture of labeled and unlabeled data, making them less likely to overfit to the labeled examples. This generalization ability is particularly useful for tasks with high data variability, where labeled data alone may not represent all potential cases.

- Example: In environmental monitoring, a semi-supervised model trained on a small set of labeled pollution data combined with vast amounts of unlabeled data from various locations can generalize better to diverse environmental conditions.

The cost-efficiency, accuracy, scalability, and generalization benefits make semi-supervised learning a practical solution for a range of data-intensive applications, especially in fields where labeled data is a scarce resource.

Implementing Semi-Supervised Learning Models

Implementing a semi-supervised learning model involves carefully planning data collection, selecting the appropriate method, and iterating to achieve the best performance. Here’s a step-by-step guide to building a semi-supervised model.

1. Data Collection and Preparation

Data collection for semi-supervised learning involves gathering both labeled and unlabeled data. The labeled data should be a representative sample, as it will guide the model in understanding the target task. Since semi-supervised models rely heavily on unlabeled data, it’s essential to ensure that the unlabeled data is from the same or a similar distribution as the labeled data to avoid introducing bias.

- Example: In a language classification model, a subset of documents is manually labeled by language, while a larger set of unlabeled documents is collected from the same or similar sources to maintain consistency.

Once collected, the data must be cleaned and preprocessed. Preprocessing steps often include handling missing values, normalizing or scaling features, and encoding categorical variables for better model performance.
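
As one concrete convention, scikit-learn's semi-supervised estimators take a single feature matrix and label vector, with unlabeled rows marked by the label `-1`. The sketch below uses hypothetical random arrays in place of real collected data. Because feature scaling does not look at labels, the scaler can be fitted on labeled and unlabeled rows together; any test set should still be split off before this step.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical arrays standing in for collected data
rng = np.random.default_rng(0)
X_labeled = rng.normal(size=(50, 4))
y_labeled = rng.integers(0, 2, size=50)
X_unlabeled = rng.normal(size=(950, 4))

# One X and one y, with unlabeled samples marked by the label -1
X = np.vstack([X_labeled, X_unlabeled])
y = np.concatenate([y_labeled, np.full(len(X_unlabeled), -1)])

# Scaling uses no labels, so it can be fitted on labeled + unlabeled features
X_scaled = StandardScaler().fit_transform(X)
print(X_scaled.shape, (y == -1).sum())
```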

2. Selecting the Semi-Supervised Learning Technique

The choice of technique depends on the nature of the data and the problem being solved. Here’s a quick guide on when to use different techniques:

- Self-Training: Best suited for tasks where the initial model’s high-confidence predictions on unlabeled data are usually correct, such as document classification.

- Co-Training: Ideal for datasets with multiple independent features or views, such as web page classification (where features may include both content and links).

- Graph-Based Methods: Effective for interconnected data, such as social networks or recommendation systems.

- Generative Models: Suitable for high-dimensional data, like images and audio, where learning data distribution aids in label prediction.

3. Training and Iterating the Model

Semi-supervised models are typically trained in stages, allowing the model to refine its predictions gradually. Here’s how this iterative training process works:

- Initial Training on Labeled Data: Train the model using only the labeled data to establish a baseline understanding of the task.

- Prediction on Unlabeled Data: Use the model to predict labels for the unlabeled data. High-confidence predictions are treated as pseudo-labels, providing additional labeled examples for the model.

- Retraining: Retrain the model with the expanded labeled dataset, which now includes both original labels and pseudo-labels. This process can be repeated until the model’s accuracy stabilizes.

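```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.semi_supervised import SelfTrainingClassifier

# Illustrative synthetic data; ~90% of training labels are hidden (-1 = unlabeled)
X, y = make_classification(n_samples=1000, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
rng = np.random.default_rng(0)
y_partial = np.where(rng.random(len(y_train)) < 0.1, y_train, -1)

# Example setup for self-training with a random forest classifier
base_model = RandomForestClassifier(random_state=0)
model = SelfTrainingClassifier(base_model, threshold=0.75)

# fit() runs the train / pseudo-label / retrain loop internally,
# using the labeled rows together with the rows marked -1
model.fit(X_train, y_partial)
print("test accuracy:", model.score(X_test, y_test))
```

4. Evaluating Model Performance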

Evaluating a semi-supervised model requires testing its performance on a labeled validation or test set that was not used for training or pseudo-labeling. The metrics depend on the task type: accuracy, F1-score, precision, and recall for classification, or error measures such as mean absolute error for regression. It’s also important to assess the model’s ability to generalize to new, unseen data, ensuring it has not overfit to the initial labeled set or to the pseudo-labeled data.
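
One way to check that the unlabeled data actually helps is to compare against a baseline trained on the labeled subset alone, scoring both on the same genuinely labeled test set. The sketch assumes synthetic data, a 5% labeled fraction, and `LogisticRegression` as the base model; depending on the data, the self-trained model may or may not beat the baseline, which is exactly what the comparison is meant to reveal.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.semi_supervised import SelfTrainingClassifier

X, y = make_classification(n_samples=2000, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Keep about 5% of the training labels; mark the rest unlabeled (-1)
rng = np.random.default_rng(0)
labeled = rng.random(len(y_train)) < 0.05
y_partial = np.where(labeled, y_train, -1)

# Baseline: the same base model trained on the labeled subset only
baseline = LogisticRegression(max_iter=1000).fit(X_train[labeled], y_train[labeled])
# Semi-supervised model: labeled subset plus the unlabeled pool
ssl = SelfTrainingClassifier(LogisticRegression(max_iter=1000), threshold=0.9)
ssl.fit(X_train, y_partial)

# Score both on the same held-out test set with genuine labels
results = {}
for name, m in (("labeled-only", baseline), ("self-training", ssl)):
    pred = m.predict(X_test)
    results[name] = (accuracy_score(y_test, pred), f1_score(y_test, pred))
    print(f"{name}: accuracy={results[name][0]:.3f}, F1={results[name][1]:.3f}")
```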

5. Fine-Tuning and Hyperparameter Optimization

Hyperparameter tuning can significantly impact a model’s performance, especially in semi-supervised learning. Techniques such as grid search and random search are commonly used to optimize parameters like confidence thresholds for pseudo-labeling, the number of clusters (in clustering-based methods), and learning rates in neural network-based models.

- Example: In self-training, adjusting the confidence threshold can help the model avoid incorporating low-confidence pseudo-labels, reducing noise and improving accuracy.
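
A threshold search can be sketched as a plain loop scored on a labeled validation split. A stock `GridSearchCV` over the semi-supervised estimator would score each fold against labels that include the `-1` placeholders, so this sketch keeps the validation labels separate instead. The synthetic data, the 5% labeled fraction, and the candidate thresholds are assumptions; `labeled_iter_` is the fitted `SelfTrainingClassifier` attribute recording the iteration in which each sample was labeled (0 for originally labeled samples, -1 for samples never labeled).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.semi_supervised import SelfTrainingClassifier

X, y = make_classification(n_samples=2000, random_state=1)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.25, random_state=1)
rng = np.random.default_rng(1)
y_partial = np.where(rng.random(len(y_train)) < 0.05, y_train, -1)

# Score each candidate threshold on labeled validation data, never on pseudo-labels
scores = {}
for threshold in (0.6, 0.7, 0.8, 0.9, 0.95, 0.99):
    model = SelfTrainingClassifier(LogisticRegression(max_iter=1000), threshold=threshold)
    model.fit(X_train, y_partial)
    n_pseudo = int((model.labeled_iter_ > 0).sum())  # samples pseudo-labeled during fit
    scores[threshold] = model.score(X_val, y_val)
    print(f"threshold={threshold}: val accuracy={scores[threshold]:.3f}, pseudo-labels={n_pseudo}")

best = max(scores, key=scores.get)
print("best threshold:", best)
```

Printing the number of pseudo-labels alongside the score makes the trade-off visible: low thresholds admit many (noisier) pseudo-labels, high thresholds admit few.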

By following these steps, practitioners can effectively implement semi-supervised learning models that leverage both labeled and unlabeled data, enhancing model performance with minimal labeling effort.

Challenges in Semi-Supervised Learning

While semi-supervised learning offers numerous advantages, it also comes with challenges that can complicate its implementation. Understanding these challenges is essential for building robust models.

1. Quality of Unlabeled Data

The effectiveness of semi-supervised learning depends on the quality and relevance of the unlabeled data. If the unlabeled data has a different distribution from the labeled data, it can introduce noise and lead the model to incorrect conclusions. Ensuring that the unlabeled data is representative of the problem domain is crucial.

- Example: In speech recognition, using unlabeled data from a different accent or dialect than the labeled examples could confuse the model, reducing accuracy on the target task.

2. Pseudo-Labeling Errors

In self-training and similar methods, pseudo-labeling errors can accumulate over iterations, because the model treats its incorrect pseudo-labels as true labels and is then retrained on them. This issue is especially prevalent when the initial model is weak or poorly calibrated: confident but wrong predictions pass the threshold and reinforce the model’s own mistakes.

- Solution: Setting a high confidence threshold for pseudo-labeling can reduce error propagation. Additionally, monitoring performance and manually reviewing high-confidence pseudo-labels can improve accuracy.

3. Lack of Interpretability

Semi-supervised models, particularly those based on complex algorithms like generative models, can be challenging to interpret. The decision-making process can be opaque, making it difficult to understand how the model arrives at specific predictions, which is a concern in high-stakes applications like healthcare and finance.

- Solution: Use interpretable models when possible, and apply model interpretation tools, such as LIME or SHAP, to understand the influence of features on predictions.

4. Computational Complexity

Semi-supervised learning models, especially graph-based and generative models, can be computationally intensive. Processing large datasets or high-dimensional data may require significant computational resources, which can limit scalability for organizations with limited infrastructure.

- Solution: Optimize models using dimensionality reduction or consider cloud-based machine learning services to manage the computational load efficiently.

5. Evaluation Challenges

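Evaluating semi-supervised models is difficult because labeled data, the only reliable ground truth, is scarce, so held-out validation sets tend to be small and their estimates noisy. Performance measured against pseudo-labels is also misleading, since the pseudo-labels may themselves be wrong; standard metrics must therefore be computed on genuinely labeled data and interpreted with care.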

- Solution: Use a validation set with labeled data to assess performance, and consider using multiple metrics to ensure a comprehensive evaluation of the model’s strengths and weaknesses.

Despite these challenges, semi-supervised learning remains highly valuable for organizations that lack extensive labeled data but have access to a wealth of unlabeled information. By addressing these challenges through careful data selection, parameter tuning, and evaluation, practitioners can maximize the effectiveness of semi-supervised models.

Real-World Applications of Semi-Supervised Learning

Semi-supervised learning is used across various fields where labeled data is limited, costly, or difficult to obtain. Here are some key applications across industries:

1. Healthcare and Medical Imaging

In healthcare, labeled data often requires expert annotation, which can be costly and time-consuming. Semi-supervised learning enables models to learn from a limited number of labeled medical images, like MRIs or CT scans, along with a larger set of unlabeled images, improving diagnostic accuracy and efficiency.

- Example: In disease detection, semi-supervised learning models can identify anomalies in chest X-rays by using a few annotated images, learning to generalize findings across thousands of unlabeled scans.

2. Natural Language Processing (NLP)

Semi-supervised learning is widely used in NLP tasks like sentiment analysis, translation, and entity recognition, where manually labeled text data is often limited but large volumes of unlabeled text are available. This approach helps NLP models learn linguistic patterns, grammar, and context effectively.

- Example: In sentiment analysis, a semi-supervised model could be trained on a small set of labeled reviews (positive or negative) and then learn from unlabeled reviews to identify sentiment in new, unseen reviews.

3. Autonomous Driving

In autonomous driving, labeled data for every possible driving scenario is difficult to obtain, given the complexity and variability of driving environments. Semi-supervised learning allows autonomous vehicle models to use a limited set of labeled images and videos combined with extensive unlabeled driving data to improve accuracy in detecting road signs, obstacles, and pedestrians.

- Example: A semi-supervised model for object detection might use labeled images of traffic signs alongside numerous unlabeled road images, enabling the car to recognize signs with fewer labeled samples.

4. Customer Behavior Analysis

In marketing and customer relationship management, semi-supervised learning can segment customers based on purchase history, preferences, and behavior, even when labeled customer data is sparse. This approach allows companies to understand different customer segments and personalize offers.

- Example: A retailer might label a small subset of customers as “high-value” or “low-value” and use semi-supervised learning to predict the value of unlabeled customers, optimizing targeting strategies.

5. Speech Recognition

Speech recognition systems use semi-supervised learning to identify words and phrases from audio data. Since transcribing audio manually is costly, semi-supervised learning allows models to learn from labeled and unlabeled audio samples, improving accuracy for different languages, dialects, and accents.

- Example: A semi-supervised model for speech recognition might start with transcribed audio from native speakers and leverage unlabeled audio to extend recognition capabilities to regional dialects and different languages.

6. Fraud Detection in Finance

In finance, detecting fraudulent transactions requires labeled examples of fraudulent and non-fraudulent transactions. Since labeled fraudulent data is limited, semi-supervised learning enables the model to generalize from a small set of labeled transactions and detect suspicious patterns in larger volumes of unlabeled transaction data.

- Example: A semi-supervised model could use a few known cases of fraud along with thousands of unlabeled transactions, identifying unusual patterns that may indicate new fraud cases.

These applications highlight the versatility of semi-supervised learning across sectors, enabling industries to derive insights and make accurate predictions from large, unlabeled datasets with minimal labeling requirements.

Future Trends in Semi-Supervised Learning

Semi-supervised learning continues to evolve as new technologies and techniques make it easier to leverage vast amounts of unlabeled data. Here are some emerging trends shaping the future of semi-supervised learning:

1. Advances in Self-Supervised Learning

Self-supervised learning, a closely related approach that is often used alongside semi-supervised learning, is gaining momentum, especially in fields like natural language processing and computer vision. In self-supervised learning, models generate labels from the data itself by creating tasks, such as predicting missing words in sentences or reconstructing occluded image sections. This approach reduces dependency on manually labeled data and is highly scalable.

- Example: In image processing, self-supervised learning techniques can pretrain models on unlabeled images by predicting missing parts of images. Once pretrained, these models can be fine-tuned for specific tasks like object detection or segmentation, improving performance without extensive labeled datasets.
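
The “predict the missing word” pretext task can be illustrated by how its training pairs are built from unlabeled text. This simplified sketch hides one word at a time and uses the hidden word as the target; real masked-language-model pretraining typically masks a random subset of tokens and then trains a neural network on the resulting pairs, which is not shown here. The function name and the `[MASK]` token are illustrative.

```python
def masked_word_pairs(sentences, mask_token="[MASK]"):
    """Build (input, target) training pairs from unlabeled sentences.

    Each word is hidden in turn and becomes the target label, so the
    supervision comes from the text itself rather than from annotators.
    """
    pairs = []
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            masked = words[:i] + [mask_token] + words[i + 1:]
            pairs.append((" ".join(masked), word))
    return pairs


unlabeled = ["the cat sat", "dogs bark loudly"]
for inp, target in masked_word_pairs(unlabeled):
    print(f"{inp!r} -> {target!r}")
# '[MASK] cat sat' -> 'the'  (first line of output)
```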

2. Hybrid Learning Approaches

Combining supervised, unsupervised, and semi-supervised methods is becoming more common as researchers and practitioners seek to maximize data utility. These hybrid learning models are particularly useful for complex tasks requiring the strengths of multiple learning approaches. For example, a model could first use unsupervised learning to pre-cluster data and then apply semi-supervised learning to label certain clusters.

- Example: In personalized recommendation systems, hybrid approaches combine unsupervised clustering of user preferences with supervised models to refine recommendations, resulting in a more accurate and personalized user experience.
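
The cluster-first idea described above can be sketched as “cluster-then-label”: cluster all points without using labels, then give every point in a cluster the majority label among that cluster’s few labeled members. This sketch assumes k-means with a known number of clusters and well-separated synthetic blobs; the helper name is hypothetical, and a real pipeline would typically train a classifier on the resulting labels rather than stop here.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs


def cluster_then_label(X, y, n_clusters, random_state=0):
    """Cluster all points, then give each cluster the majority label of its
    labeled members. Clusters with no labeled members stay unlabeled (-1)."""
    clusters = KMeans(n_clusters=n_clusters, n_init=10,
                      random_state=random_state).fit_predict(X)
    y_out = np.full(len(y), -1)
    for c in range(n_clusters):
        in_c = clusters == c
        known = y[in_c & (y >= 0)]  # labels of the labeled points in this cluster
        if len(known):
            y_out[in_c] = np.bincount(known).argmax()
    return y_out


X, y_true = make_blobs(n_samples=300, centers=3, cluster_std=0.5, random_state=0)
y = np.full(len(y_true), -1)
for k in range(3):
    y[np.flatnonzero(y_true == k)[:2]] = k  # two labeled points per blob
y_pred = cluster_then_label(X, y, n_clusters=3)
print("accuracy:", (y_pred == y_true).mean())
```

This only works when clusters line up with classes; if a cluster mixes classes, every point in it inherits one label, so the clustering step deserves the same validation as the final model.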

3. Federated Semi-Supervised Learning

Federated learning, which enables models to be trained on data distributed across multiple devices while maintaining data privacy, is merging with semi-supervised learning. Federated semi-supervised learning allows models to learn from decentralized and partially labeled data sources, such as mobile devices, without centralizing the data.

- Example: In healthcare, federated semi-supervised learning can use data from multiple hospitals without sharing sensitive patient information, improving models for diagnostics and disease prediction across institutions while protecting privacy.

4. Use of Generative Models

Generative models, like GANs (Generative Adversarial Networks) and VAEs (Variational Autoencoders), are increasingly popular in semi-supervised learning. These models learn to generate data that resembles the original dataset, enabling the creation of synthetic labeled data, which is useful when labeled data is limited.

- Example: In speech applications, GANs can generate realistic synthetic audio samples from limited labeled data, allowing speech recognition models to expand their training datasets and potentially improving recognition accuracy across diverse accents and languages.

5. Domain Adaptation Techniques

Domain adaptation techniques are being integrated into semi-supervised learning to enhance the model’s ability to generalize across different domains. This trend is especially useful in cases where labeled data from the target domain is limited, but related labeled data is available from a different domain.

- Example: In autonomous driving, domain adaptation helps a semi-supervised model trained on sunny driving conditions generalize to other conditions like rain or fog, expanding its utility across diverse environments.

These trends reflect the growing sophistication of semi-supervised learning, allowing it to tackle increasingly complex tasks with minimal labeling effort, greater privacy, and improved scalability.

Best Practices for Semi-Supervised Learning

To maximize the effectiveness of semi-supervised learning, it’s essential to follow best practices that help ensure accuracy, reliability, and scalability. Here are some recommended strategies:

1. Start with High-Quality Labeled Data

The labeled data serves as the foundation for the model’s learning, so ensuring its quality is critical. Even though only a small amount of labeled data is used in semi-supervised learning, it must be representative of the overall data distribution to effectively guide the model.

- Best Practice: Carefully select and curate the labeled dataset, using domain experts when necessary, to ensure that labels are accurate and representative of the larger dataset.

2. Choose an Appropriate Confidence Threshold for Pseudo-Labels

When using self-training, selecting an appropriate confidence threshold for pseudo-labels is essential. Setting the threshold too low can introduce noise, as low-confidence predictions are more likely to be inaccurate, while setting it too high may limit the amount of unlabeled data incorporated.

- Best Practice: Experiment with different confidence thresholds and evaluate their impact on model performance. Fine-tuning this parameter helps balance model accuracy and the utility of pseudo-labels.

3. Use Validation Sets for Early Stopping

To prevent overfitting on pseudo-labeled data, employ early stopping by monitoring performance on a labeled validation set. This approach helps ensure that the model doesn’t overfit to noisy pseudo-labels while maintaining generalization.

- Best Practice: Periodically validate the model on a held-out labeled dataset to gauge its performance and stop training if overfitting is detected.
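
Early stopping fits naturally into a hand-written self-training loop: score the model on a labeled validation set after each pseudo-labeling round, keep the best round, and stop once the score fails to improve for a set number of rounds. The sketch assumes a hypothetical helper `self_train_early_stop`, a `patience` of 2 rounds, a 0.9 threshold, and synthetic data; the validation split is larger than is typical in semi-supervised settings, purely to keep the example stable.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def self_train_early_stop(base, X_lab, y_lab, X_unlab, X_val, y_val,
                          threshold=0.9, max_rounds=20, patience=2):
    """Self-training that returns the round with the best validation accuracy."""
    model = clone(base).fit(X_lab, y_lab)
    best_model, best_score, stale = model, model.score(X_val, y_val), 0
    for _ in range(max_rounds):
        if len(X_unlab) == 0:
            break
        proba = model.predict_proba(X_unlab)
        confident = proba.max(axis=1) >= threshold
        if not confident.any():
            break
        # Add confident pseudo-labels and retrain a fresh copy of the base model
        X_lab = np.vstack([X_lab, X_unlab[confident]])
        y_lab = np.concatenate([y_lab, model.classes_[proba[confident].argmax(axis=1)]])
        X_unlab = X_unlab[~confident]
        model = clone(base).fit(X_lab, y_lab)
        score = model.score(X_val, y_val)
        if score > best_score:
            best_model, best_score, stale = model, score, 0
        else:
            stale += 1
            if stale >= patience:
                break  # validation accuracy stopped improving: stop early
    return best_model, best_score


X, y = make_classification(n_samples=1000, random_state=2)
X_rest, X_val, y_rest, y_val = train_test_split(X, y, test_size=0.2, random_state=2)
model, val_acc = self_train_early_stop(LogisticRegression(max_iter=1000),
                                       X_rest[:40], y_rest[:40], X_rest[40:],
                                       X_val, y_val)
print("best validation accuracy:", val_acc)
```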

4. Balance Labeled and Unlabeled Data

An imbalance between labeled and unlabeled data can lead to underperformance, especially if the labeled data is too sparse: the initial model’s mistakes then dominate the pseudo-labels it produces. Increasing the proportion of labeled data, even modestly, can improve model learning and reduce over-reliance on pseudo-labels derived from unlabeled data.

- Best Practice: Monitor the ratio of labeled to unlabeled data and add additional labeled examples if the model struggles to learn effectively. Consider data augmentation techniques to increase labeled data diversity.

5. Use Domain Knowledge to Guide Model Interpretation

Since semi-supervised learning can be challenging to interpret, incorporating domain knowledge can help validate and explain the model’s predictions. Engaging with domain experts ensures that the identified patterns and insights align with real-world phenomena.

- Best Practice: Collaborate with domain experts throughout the modeling process to interpret results and validate pseudo-labels. This practice enhances the model’s trustworthiness, especially in high-stakes fields like healthcare or finance.

6. Leverage Model Interpretability Tools

In applications requiring transparency, such as healthcare diagnostics or financial risk assessment, it’s important to ensure that semi-supervised models are interpretable. Tools like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (Shapley Additive exPlanations) provide insights into the factors influencing the model’s decisions, increasing trust.

- Best Practice: Use interpretability tools to clarify model predictions, making it easier to identify areas where the model may rely on noisy pseudo-labels or biased patterns.

By following these best practices, practitioners can build more robust and reliable semi-supervised learning models that effectively leverage both labeled and unlabeled data while minimizing the risks associated with pseudo-labeling and model interpretability.

The Future of Semi-Supervised Learning

As data volume continues to grow exponentially, the importance of semi-supervised learning will increase, especially in fields where labeling is costly or impractical. Here are some ways semi-supervised learning is expected to impact the future of machine learning:

- Scalability in AI-Driven Applications: Semi-supervised learning will enable AI applications to scale more rapidly across industries by reducing reliance on labeled data. This is particularly impactful for emerging applications, such as voice assistants, predictive maintenance, and precision agriculture, where data diversity is high but labeled examples are limited.

- Integration with Reinforcement Learning: Semi-supervised learning and reinforcement learning may increasingly be combined, particularly in environments where both labeled data and reward signals are sparse. By combining these approaches, models could learn from a mix of labels, reward feedback, and environmental cues, creating more adaptive AI systems.

- Enhanced Personalization: Semi-supervised learning is poised to drive advancements in personalization across digital platforms, from social media to e-commerce. By using a limited set of labeled user preferences alongside broader behavioral data, these systems can tailor experiences and recommendations with increased accuracy.

- Advances in Data Privacy: With federated semi-supervised learning, organizations can train models on decentralized data without moving it to a central server. This approach will enhance data privacy, particularly in sensitive industries like healthcare and finance, where data sharing is restricted.

- Improved Accessibility for Small and Medium Enterprises (SMEs): As semi-supervised learning techniques become more accessible through open-source frameworks and cloud platforms, SMEs will be able to leverage AI without needing extensive labeled datasets, leveling the playing field for businesses of all sizes.

Semi-supervised learning will continue to play a critical role in machine learning, helping organizations unlock the potential of vast unlabeled datasets and making AI more accessible, adaptable, and privacy-conscious.

Conclusion: The Significance of Semi-Supervised Learning

Semi-supervised learning bridges the gap between supervised and unsupervised learning, offering a practical approach for tasks where labeled data is limited but unlabeled data is abundant. By combining the strengths of both labeled and unlabeled data, semi-supervised learning enables models to achieve high accuracy without the extensive labeling efforts required in traditional supervised learning.

With applications spanning healthcare, finance, natural language processing, and autonomous vehicles, semi-supervised learning is driving innovation across sectors where data diversity is high but labeling is costly. This approach offers scalability, cost-efficiency, and the ability to generalize effectively, making it a strong choice for modern machine learning problems in which labels, rather than data, are the bottleneck.

As advancements in self-supervised learning, federated learning, and hybrid methods continue to unfold, the power and versatility of semi-supervised learning will only grow, supporting the development of smarter, more adaptive, and privacy-conscious AI solutions. Practitioners who embrace semi-supervised learning and apply best practices can harness the full potential of their data, transforming vast amounts of unlabeled information into actionable insights and driving progress across industries.